This article is an English translation of the following blog post.

https://blog.hayashikun.com/entry/2020/12/21/183000

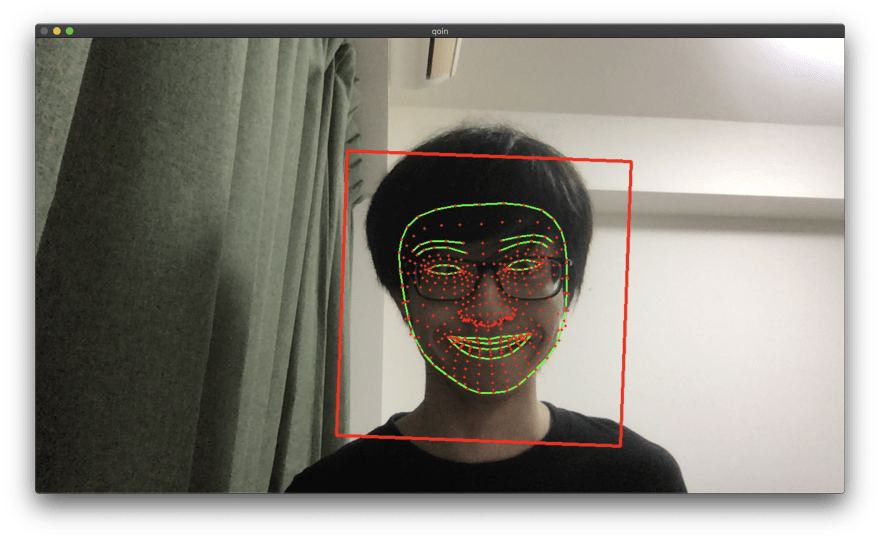

I will introduce qoin, a project that makes it easy to use MediaPipe detection data.

I'll also show some applications that use qoin.

What is MediaPipe?

MediaPipe is an open-source, cross-platform framework that detects hands, faces, and objects in video in real time using machine learning.

It is developed by Google and written mainly in C++.

Inside MediaPipe, TensorFlow performs the detection.

MediaPipe is indeed interesting; however, it is difficult to extract, save, and use the detected data in other applications.

As far as I could confirm, the only way was to rewrite the code and build it myself.

So I developed qoin to make the detection data easy to use.

qoin

qoin is a gRPC server and client application written in C++.

It includes the detection part of MediaPipe's C++ code.

Because MediaPipe uses Protocol Buffers internally, I chose gRPC rather than REST or GraphQL.

You can run qoin as a gRPC server or client.

When qoin runs as a gRPC server, it returns messages containing the detection data as the response; this service is named PullStream.

Conversely, when qoin runs as a gRPC client, it sends the messages as the request; this service is named PushStream.

You can build an application that uses the detection data just by implementing a gRPC client or server that follows the proto definitions.
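The two directions can be modelled in plain Python without any gRPC machinery. This is only a sketch of the data flow, assuming that PullStream behaves like a server-streaming call and PushStream like a client-streaming call, which the names suggest but the text does not state. `Detection`, `detect_frames`, `pull_stream`, `push_stream`, and `count_handler` are hypothetical names, not part of qoin.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List


@dataclass
class Detection:
    """Hypothetical stand-in for one qoin detection message."""
    frame: int
    landmarks: List[float]


def detect_frames(n: int) -> Iterator[Detection]:
    """Fake detector that yields n dummy detections (stands in for MediaPipe)."""
    for i in range(n):
        yield Detection(frame=i, landmarks=[0.1 * i, 0.2 * i])


def pull_stream(n_frames: int) -> Iterator[Detection]:
    """PullStream direction: qoin is the server.

    The application calls in and iterates over the detections that come back.
    """
    return detect_frames(n_frames)


def push_stream(handler: Callable[[Iterable[Detection]], int], n_frames: int) -> int:
    """PushStream direction: qoin is the client.

    The application is the server; its handler consumes the incoming stream.
    """
    return handler(detect_frames(n_frames))


def count_handler(stream: Iterable[Detection]) -> int:
    """Application-side handler; here it only counts the received messages."""
    return sum(1 for _ in stream)


pulled = [d.frame for d in pull_stream(3)]
pushed = push_stream(count_handler, 3)
print(pulled, pushed)
```

In a real application the generator and the handler would be replaced by the stubs generated from the proto definitions; the point here is only who initiates the call and who produces the stream.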

For now, Face Mesh and Hand Tracking are implemented.

The proto definitions of qoin are here.

https://github.com/hayashikun/qoin/tree/master/qoin/proto

The proto definitions from MediaPipe are also needed, so you have to include them too, as the applications described in the following sections do.

Fun applications

poin

This is a desktop application in which a pointer moves across the display, following the movement of your hand.

It is written in Rust using conrod as the GUI framework.

There is no official gRPC implementation for Rust, but you can use a library such as tonic.

qoin runs as a server and provides the HandTrackingPullStream service.

At build time, poin looks up the proto definitions of qoin and MediaPipe.

https://github.com/hayashikun/poin/blob/master/build.rs
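The pointer-following idea can be sketched as a mapping from normalized landmark coordinates to screen pixels with a little smoothing. This is a minimal illustration in Python rather than Rust, and it is not poin's actual code: tracking the index fingertip, the exponential smoothing, and the `PointerMapper` class are all assumptions made for this example. The only detail taken from MediaPipe is that its hand model has 21 landmarks with the index fingertip at index 8.

```python
from typing import Optional, Tuple

INDEX_FINGER_TIP = 8  # index fingertip in MediaPipe's 21-point hand model


class PointerMapper:
    """Maps a normalized (0..1) landmark to smoothed screen pixels."""

    def __init__(self, width: int, height: int, alpha: float = 0.5) -> None:
        self.width = width
        self.height = height
        self.alpha = alpha  # smoothing factor; 1.0 means no smoothing
        self._pos: Optional[Tuple[float, float]] = None

    def update(self, x: float, y: float) -> Tuple[int, int]:
        # Clamp first, because landmarks can fall slightly outside the frame.
        x = min(max(x, 0.0), 1.0)
        y = min(max(y, 0.0), 1.0)
        target = (x * self.width, y * self.height)
        if self._pos is None:
            self._pos = target
        else:
            # Exponential smoothing: move a fraction alpha toward the target.
            px, py = self._pos
            self._pos = (px + self.alpha * (target[0] - px),
                         py + self.alpha * (target[1] - py))
        # Keep the result inside the valid pixel range.
        return (min(int(self._pos[0]), self.width - 1),
                min(int(self._pos[1]), self.height - 1))


mapper = PointerMapper(width=1920, height=1080, alpha=0.5)

# Two dummy frames of 21 (x, y) landmarks each, in place of real detections.
frame1 = [(0.0, 0.0)] * 21
frame2 = [(1.0, 1.0)] * 21

first = mapper.update(*frame1[INDEX_FINGER_TIP])
second = mapper.update(*frame2[INDEX_FINGER_TIP])
print(first, second)
```

A smaller `alpha` gives a steadier but laggier pointer, which is the usual trade-off when landmark positions jitter from frame to frame.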

pyoin

This is a Python application that estimates the direction of the head, the shape of the hand, and the number of fingers.

qoin runs as a server and provides the FaceMeshPullStream service for head direction and the HandTrackingPullStream service for rock-paper-scissors.

The proto definitions of qoin and MediaPipe are included here.

https://github.com/hayashikun/pyoin/blob/master/codegen.py

The shape of the hand and the number of fingers are classified using simple neural networks.

https://github.com/hayashikun/pyoin/blob/master/data/janken.ipynb

You can easily save detection data for training.

https://github.com/hayashikun/pyoin/blob/master/app/sampler/hand_tracking.py
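As a rough idea of what saving training data can look like, the sketch below flattens the 21 hand landmarks of each frame into one labelled CSV row. It is not the sampler linked above: the CSV layout, the label strings, and the `flatten` and `write_samples` helpers are assumptions for illustration, and dummy landmarks stand in for a real detection stream.

```python
import csv
import io
from typing import Iterable, List, Sequence, Tuple

Landmark = Tuple[float, float, float]  # (x, y, z), as in MediaPipe landmarks
NUM_HAND_LANDMARKS = 21


def flatten(hand: Sequence[Landmark]) -> List[float]:
    """Turn 21 (x, y, z) landmarks into one flat row of 63 floats."""
    if len(hand) != NUM_HAND_LANDMARKS:
        raise ValueError(
            f"expected {NUM_HAND_LANDMARKS} landmarks, got {len(hand)}")
    return [coord for point in hand for coord in point]


def write_samples(out, samples: Iterable[Tuple[str, Sequence[Landmark]]]) -> int:
    """Write (label, hand) pairs as CSV rows: label first, then 63 coordinates."""
    writer = csv.writer(out)
    count = 0
    for label, hand in samples:
        writer.writerow([label, *flatten(hand)])
        count += 1
    return count


# Dummy data in place of a real detection stream.
rock = [(0.5, 0.5, 0.0)] * NUM_HAND_LANDMARKS
paper = [(0.25, 0.75, 0.0)] * NUM_HAND_LANDMARKS

# An in-memory buffer keeps the example self-contained; to write a file,
# pass open("samples.csv", "w", newline="") instead.
buffer = io.StringIO()
written = write_samples(buffer, [("rock", rock), ("paper", paper)])
rows = list(csv.reader(io.StringIO(buffer.getvalue())))
print(written, len(rows[0]))
```

One row per frame with the label in the first column is easy to load later for training a small classifier.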

qover

poin and pyoin run on the same PC as qoin.

qover is an application that exchanges data across the network.

With qover, you can run poin and pyoin on a different machine from the one running qoin.

As explained above, qoin can run as either a server or a client.

qover receives data from qoin running as a client (PushStream) and, acting as a server, sends it to other applications (PullStream).
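The relay role can be illustrated with a small fan-out built on standard-library queues. This is a sketch of the pattern only, not qover's implementation: the `Relay` class, the `pull` helper, and the use of `None` as an end-of-stream marker are assumptions, and raw bytes stand in for the serialized detection messages.

```python
import queue
import threading
from typing import Iterator, List, Optional


class Relay:
    """Fans out every pushed message to all current subscribers."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._subscribers: List[queue.Queue] = []

    def subscribe(self) -> queue.Queue:
        """Called on the pull side: register a queue for one consumer."""
        q: queue.Queue = queue.Queue()
        with self._lock:
            self._subscribers.append(q)
        return q

    def push(self, message: Optional[bytes]) -> None:
        """Called on the push side: copy the message to every subscriber."""
        with self._lock:
            targets = list(self._subscribers)
        for q in targets:
            q.put(message)

    def close(self) -> None:
        """Signal end of stream to every subscriber."""
        self.push(None)


def pull(q: queue.Queue) -> Iterator[bytes]:
    """Yield messages from a subscriber queue until the end marker arrives."""
    while True:
        message = q.get()
        if message is None:
            return
        yield message


relay = Relay()
phone = relay.subscribe()
desktop = relay.subscribe()

for payload in (b"frame-0", b"frame-1"):
    relay.push(payload)
relay.close()

phone_msgs = list(pull(phone))
desktop_msgs = list(pull(desktop))
print(phone_msgs, desktop_msgs)
```

Each subscriber gets its own queue, so a slow consumer does not block the others; a production relay would also need to drop subscribers that disconnect.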

Here is an example of qover in use.

qoin runs on the PC as a client and sends the data to qover deployed on ECS.

qover delivers the data to a web page on the smartphone.

The web page is built with Vue, and the detection and display logic is written like this.

https://github.com/hayashikun/geekten20/blob/master/src/components/HandTransfer.vue

Because of CORS, you need to use a proxy such as Envoy.

https://github.com/hayashikun/qover/blob/master/envoy/envoy.yaml

Bazel

MediaPipe, like many of Google's projects, uses Bazel as its build tool, and so does qoin.

The dependencies are written in the WORKSPACE file.

Most of them are MediaPipe's dependencies.

Some rules, such as cc_proto_library, are defined in both the @com_google_protobuf and @rules_cc repositories, and the build will fail if you load the wrong one.
