“Liquid Software” release practices are rapidly becoming the standard in many companies. However, as software shapes digital transformation, DevOps teams are challenged to manage their growing influence on a corporation’s success or failure. In a talk I gave last week, we looked into the growing pains that most enterprises (many of them JFrog customers) face when adopting and consolidating DevOps at scale, and how these challenges are being mitigated with end-to-end platform solutions. We also wrapped up with some DevOps best practices that will help you address emerging trends that your bosses’ bosses care about.


Pipelines

I got many good questions about JFrog’s new product, “Pipelines”. Most of them had one intent: “Why should I invest the effort and use Pipelines?”

Here are some of the most popular ones:

1) What is the scale that is supported?

JFrog Pipelines scales horizontally and gives you a centrally managed solution that supports thousands of users and thousands of concurrent builds. Steps in a single pipeline can run on multi-OS, multi-architecture nodes, which reduces the need for multiple CI/CD tools.

Here are a few images to give you a feel for the product.


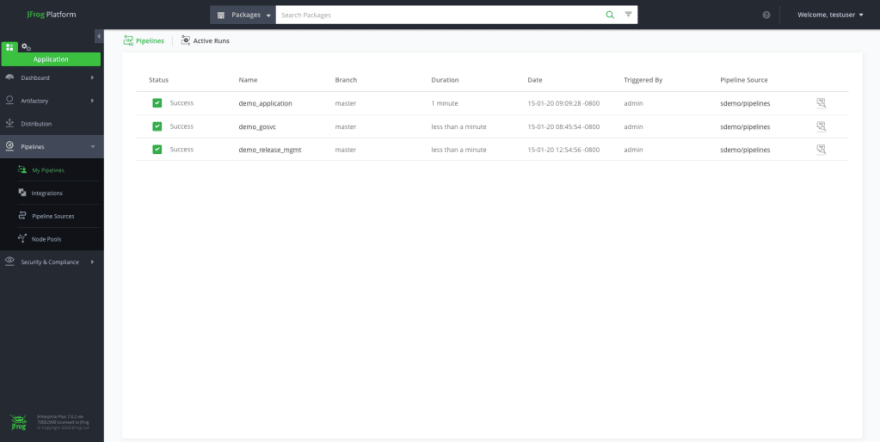

Pipelines Dashboard

You can see each pipeline and the status of its runs:

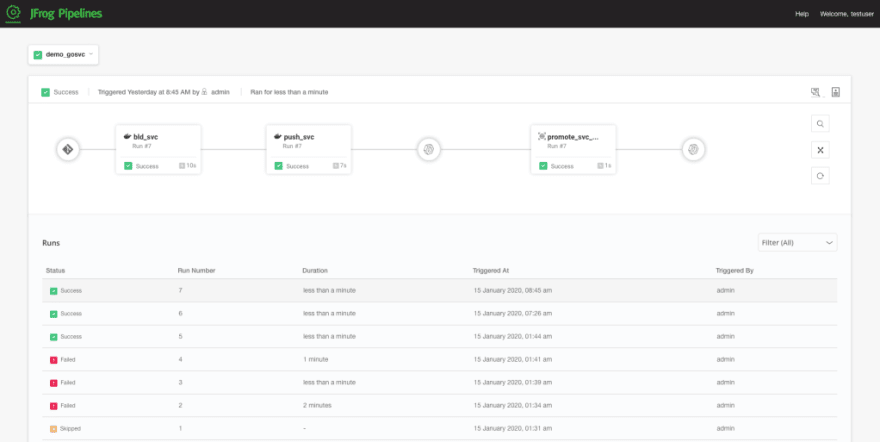

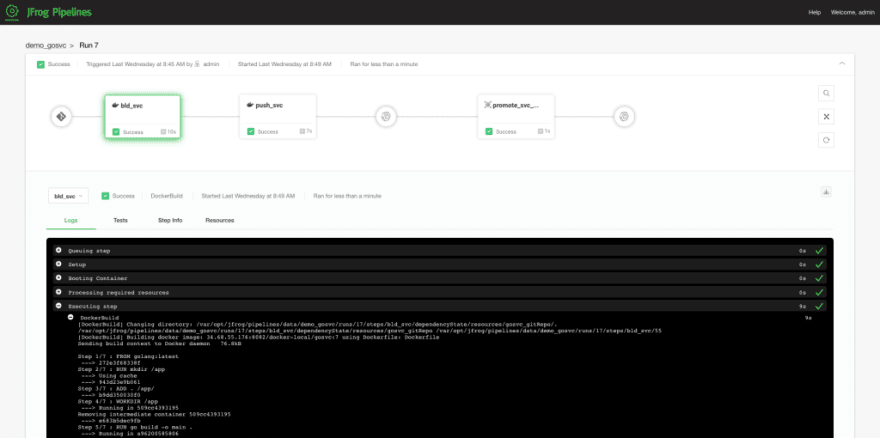

And for each task:

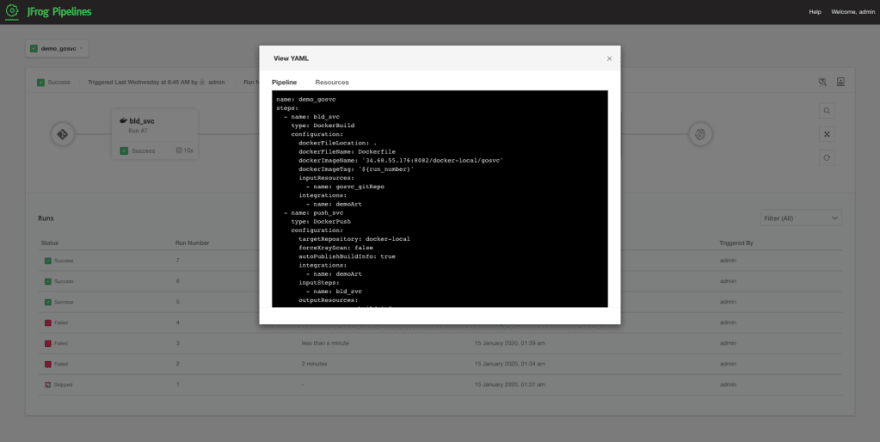

And even the YAML file that is used:

2) How can I trigger Pipelines? What are the most popular options?

JFrog Pipelines can be triggered from your source control repository and also from any other CI tool that you have in your DevOps platform (e.g. via webhooks, time-based triggers, etc.).

This makes it easy to create complex pipelines and workflows that span multiple projects (e.g. multiple repositories). Think about a case where you have one repository for your back-end and one for your front-end, and you wish to build, test and deploy both of them from one workflow. This is an easy one for Pipelines.
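To make the two-repository case concrete, here is a minimal Python sketch of the idea: one workflow that starts a run whenever any of its watched sources reports an event. It is a toy model only, not JFrog Pipelines' API or YAML syntax; the `Workflow` class, the `notify` method, and the source and step names are all hypothetical and made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Workflow:
    """Toy model of one workflow fed by several trigger sources (hypothetical)."""

    name: str
    sources: Set[str]   # repositories, webhooks or timers this workflow watches
    steps: List[str]    # steps executed on every run, in order
    runs: List[Dict[str, object]] = field(default_factory=list)

    def notify(self, source: str, detail: str) -> bool:
        """Record a new run if the event comes from a watched source."""
        if source not in self.sources:
            return False
        self.runs.append(
            {"trigger": source, "detail": detail, "steps": list(self.steps)}
        )
        return True


workflow = Workflow(
    name="build_test_deploy",
    sources={"backend-repo", "frontend-repo", "nightly-timer"},
    steps=["build_backend", "build_frontend", "test", "deploy"],
)

workflow.notify("backend-repo", "commit abc123")   # a back-end change starts a run
workflow.notify("frontend-repo", "commit def456")  # so does a front-end change
workflow.notify("docs-repo", "commit 000000")      # an unwatched source is ignored
```

The point of the sketch is that both repositories feed the same list of steps, so a change on either side goes through the same build, test and deploy sequence.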

3) What is the added value of using Pipelines as part of the JFrog Platform?

Pipelines is natively integrated with the JFrog Platform.

What does that mean?

It lets you use the platform's capabilities as best practices. Resources like BuildInfo and ReleaseBundle are first-class citizens, so pipelines can handle their information in native steps.

4) How can I manage data that is generated during the pipeline process?

Information that is generated during steps can be stored as immutable versions in resources. For example: Docker image tags, the BuildInfo build name and number, the Git repository commitSHA, etc.

This makes it possible to create a cross-team “Pipeline of Pipelines” with a systematic exchange of information between pipelines, and it reduces manual handoffs.

All the information about your pipeline is stored in Artifactory (in its own special repository), so you can manage it.
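The idea of immutable resource versions can be illustrated with a small Python sketch. This is a conceptual model under my own assumptions, not how JFrog Pipelines stores data: the `Resource` and `ResourceVersion` classes, the `publish` and `latest` methods, and the property names are hypothetical. Each publish appends a new read-only version, and a downstream pipeline reads the latest one instead of relying on a manual handoff.

```python
from dataclasses import dataclass
from types import MappingProxyType
from typing import List, Mapping, Tuple


@dataclass(frozen=True)
class ResourceVersion:
    """One immutable snapshot of a resource's properties."""

    number: int
    properties: Mapping[str, str]


class Resource:
    """Append-only list of versions shared between pipelines (hypothetical)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._versions: List[ResourceVersion] = []

    def publish(self, **properties: str) -> ResourceVersion:
        """Store a new version; earlier versions are never modified."""
        snapshot = MappingProxyType(dict(properties))  # read-only view of a copy
        version = ResourceVersion(len(self._versions) + 1, snapshot)
        self._versions.append(version)
        return version

    def latest(self) -> ResourceVersion:
        """Return the newest version (raises IndexError if none exist)."""
        return self._versions[-1]

    def history(self) -> Tuple[ResourceVersion, ...]:
        return tuple(self._versions)


# The build pipeline publishes what it produced...
image = Resource("app_image")
image.publish(image_tag="1.0.41", commit_sha="abc123")
image.publish(image_tag="1.0.42", commit_sha="def456")

# ...and the deploy pipeline picks up the latest version on its own.
deploy_tag = image.latest().properties["image_tag"]
```

Because old versions stay untouched, a later pipeline can still look up exactly which tag and commit an earlier run produced.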

5) What about security? How do I maintain all the secrets?

Pipelines offers centralized secrets management. Each secret (e.g. token, password, etc.) needs to be defined just once and scoped to the relevant pipelines. Rotating these secrets becomes very simple and requires no changes to individual pipelines.
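As a rough illustration of "define once, scope to pipelines, rotate in one place", here is a toy Python model. It is not JFrog Pipelines' implementation or API; the `SecretStore` class, its method names, and the pipeline and secret names are assumptions made for this example, and the values are obvious placeholders.

```python
from typing import Dict, FrozenSet, Iterable


class SecretStore:
    """Central store: each secret is defined once and scoped to pipelines."""

    def __init__(self) -> None:
        self._values: Dict[str, str] = {}
        self._scopes: Dict[str, FrozenSet[str]] = {}

    def define(self, name: str, value: str, pipelines: Iterable[str]) -> None:
        """Register a secret and the pipelines allowed to read it."""
        self._values[name] = value
        self._scopes[name] = frozenset(pipelines)

    def rotate(self, name: str, new_value: str) -> None:
        """Replace the value in one place; pipeline definitions stay untouched."""
        if name not in self._values:
            raise KeyError(name)
        self._values[name] = new_value

    def resolve(self, name: str, pipeline: str) -> str:
        """Return the current value, but only to a pipeline inside the scope."""
        if pipeline not in self._scopes.get(name, frozenset()):
            raise PermissionError(f"{pipeline} may not read {name}")
        return self._values[name]


store = SecretStore()
store.define("registry_token", "placeholder-old", pipelines=["build", "deploy"])

before = store.resolve("registry_token", "deploy")
store.rotate("registry_token", "placeholder-new")
after = store.resolve("registry_token", "deploy")  # same lookup, new value
```

The pipelines only ever refer to the secret by name, which is why rotating it requires no change on their side.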

Be strong.
