A stream is an abstract interface for working with streaming data. Streams let you process data piece by piece (in chunks) rather than loading all of it into memory at once. You can think of a stream as a conveyor belt: items are handled one at a time as they arrive instead of in one large batch. All streams in Node.js are instances of EventEmitter and can be readable, writable, or both. Streams are well suited to large volumes of data, such as videos, and they use both time and memory more efficiently than reading everything up front.
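A small sketch of both ideas, assuming a recent Node.js version (it uses the node: prefix on module names): every stream class inherits from EventEmitter, and a readable stream hands its data to listeners one chunk at a time. The three strings are placeholder data standing in for pieces of a large file.

```javascript
const { EventEmitter } = require('node:events');
const { Readable, Writable, Duplex, Transform } = require('node:stream');

// Every stream class inherits from EventEmitter, so streams support on(), once() and emit().
const streamClasses = { Readable, Writable, Duplex, Transform };
for (const [name, StreamClass] of Object.entries(streamClasses)) {
  console.log(name, StreamClass.prototype instanceof EventEmitter); // true for each class
}

// Data is delivered piece by piece: each array element arrives as its own 'data' event.
const source = Readable.from(['first chunk', 'second chunk', 'third chunk']);
const received = [];
source.on('data', (chunk) => {
  received.push(chunk);
  console.log('got:', chunk);
});
source.on('end', () => console.log(`done after ${received.length} chunks`));
```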

There are four fundamental types of streams in Node.js:

- Readable Streams - Streams from which we read data, for example HTTP requests. The most important events are the data event (emitted when there is new data to consume) and the end event (emitted when there is no more data to consume). Important functions include pipe() and read().

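- Writable Streams - Streams to which we write data, for example fs write streams and HTTP responses sent to the client. The most important events are the drain event (emitted when it is safe to write again after write() has returned false) and the finish event (emitted after end() has been called and all data has been flushed). The most important functions are write() and end().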

- Duplex Streams - Streams that are both readable and writable, for example a socket from the net module (net.Socket): a communication channel between client and server that stays open once the connection has been established and carries data in both directions.

- Transform Streams - Duplex streams that modify or transform data as it is written and read, for example the gzip stream created by zlib.createGzip() for compressing data.
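To tie the readable and writable events and functions from this list together, here is a minimal sketch that copies a readable stream into a writable one by hand: data and end on the readable side, write(), end(), drain and finish on the writable side. copyStream and the slow in-memory writable are hypothetical stand-ins (for example for an HTTP request and an fs write stream). This manual pause/resume handling is essentially what pipe() automates.

```javascript
const { Readable, Writable } = require('node:stream');

// Hypothetical helper: copy a readable into a writable using data/end, write()/end() and drain/finish.
function copyStream(readable, writable) {
  return new Promise((resolve, reject) => {
    readable.on('data', (chunk) => {
      // write() returns false when the writable's internal buffer is full.
      if (!writable.write(chunk)) {
        readable.pause(); // stop 'data' events for now
        writable.once('drain', () => readable.resume()); // buffer emptied: continue reading
      }
    });
    readable.on('end', () => writable.end()); // no more data to consume
    writable.on('finish', resolve); // everything has been flushed
    readable.on('error', reject);
    writable.on('error', reject);
  });
}

// Demo: a slow writable that collects chunks (stand-in for a file or an HTTP response).
const collected = [];
const slowSink = new Writable({
  highWaterMark: 4, // tiny buffer so that write() returns false quickly
  write(chunk, encoding, callback) {
    collected.push(chunk.toString());
    setTimeout(callback, 5); // pretend each write takes a while
  },
});

copyStream(Readable.from(['alpha', 'beta', 'gamma', 'delta']), slowSink).then(() => {
  console.log(collected); // [ 'alpha', 'beta', 'gamma', 'delta' ]
});
```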

These events and functions are already implemented for the built-in Node.js streams, but we can also implement our own streams and use them through the same events and functions.
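A minimal sketch of two home-made streams, assuming the usual approach of subclassing the classes from the stream module: a readable only needs _read() (calling push() to hand over data and push(null) to finish), and a transform only needs _transform(). Counter and UpperCase are hypothetical names. Once defined, they are consumed with the same pipe() function and data/end events as the built-in streams.

```javascript
const { Readable, Transform } = require('node:stream');

// Hypothetical custom Readable: emits "item 1" .. "item <limit>", one per line.
class Counter extends Readable {
  constructor(limit, options) {
    super(options);
    this.current = 1;
    this.limit = limit;
  }

  // Called by Node.js whenever the consumer wants more data.
  _read() {
    if (this.current > this.limit) {
      this.push(null); // signals the end of the data ('end' event downstream)
    } else {
      this.push(`item ${this.current}\n`);
      this.current += 1;
    }
  }
}

// Hypothetical custom Transform: upper-cases text as it passes through.
// chunk.toString() assumes chunks do not split multi-byte characters (fine for ASCII).
class UpperCase extends Transform {
  _transform(chunk, encoding, callback) {
    callback(null, chunk.toString().toUpperCase()); // pass the result downstream
  }
}

// The custom streams work with the built-in pipe() like any other stream.
new Counter(3).pipe(new UpperCase()).pipe(process.stdout); // ITEM 1, ITEM 2, ITEM 3
```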

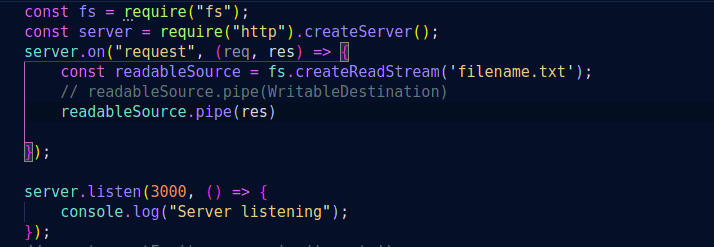

An example of the implementation of streams is shown below:
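A minimal sketch, assuming the task is to send a large file to HTTP clients without loading it into memory first. createFileServer is a hypothetical helper, and the file name and port in the usage comment are placeholders. The file read stream is the readable side and the HTTP response is the writable side.

```javascript
const fs = require('node:fs');
const http = require('node:http');

// Hypothetical helper: build a server that streams filePath to every client.
function createFileServer(filePath) {
  return http.createServer((req, res) => {
    // The file is read in chunks instead of being loaded into memory all at once.
    const readable = fs.createReadStream(filePath);

    readable.on('error', () => {
      // e.g. the file does not exist or cannot be read
      res.statusCode = 500;
      res.end('Could not read file');
    });

    // pipe() writes each chunk to the response, pauses reading while the
    // response buffer is full, and calls res.end() once the file has been read.
    readable.pipe(res);
  });
}

// Usage (file name and port are placeholders):
// createFileServer('test-file.txt').listen(8000);
```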

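Back pressure occurs when a source produces data faster than the destination stream can handle it. The pipe() method is the most appropriate way to connect an input stream to an output stream because it handles back pressure automatically: it pauses the source when the destination's buffer is full and resumes it once the destination emits drain. The destination can be any writable stream (writable, duplex or transform); to chain further pipe() calls onto it, it must be a duplex or transform stream, since only those are also readable.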
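Because pipe() returns its destination, calls can be chained as long as every stream in the middle is a duplex or transform stream. The sketch below compresses a file through zlib.createGzip(), assuming hypothetical input and output paths; gzipFile is an illustrative helper name. Back pressure between the three streams is handled by pipe() itself.

```javascript
const fs = require('node:fs');
const zlib = require('node:zlib');

// Hypothetical helper: gzip inputPath into outputPath using chained pipe() calls.
function gzipFile(inputPath, outputPath) {
  return new Promise((resolve, reject) => {
    const source = fs.createReadStream(inputPath); // readable
    const gzip = zlib.createGzip(); // transform (readable and writable)
    const destination = fs.createWriteStream(outputPath); // writable

    // pipe() does not forward errors, so each stream gets its own handler.
    source.on('error', reject);
    gzip.on('error', reject);
    destination.on('error', reject);
    destination.on('finish', resolve); // all compressed data has been written

    // Each pipe() returns its destination, so the calls can be chained.
    source.pipe(gzip).pipe(destination);
  });
}

// Usage (paths are placeholders):
// gzipFile('input.txt', 'input.txt.gz').then(() => console.log('compressed'));
```

stream.pipeline() chains the same streams and additionally forwards errors and destroys every stream on failure, so it is often preferred over bare pipe() chains.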

Streams have two major advantages: memory efficiency, since you don't have to load all of the data into memory at once, and time efficiency, since you can start processing data as soon as the first chunk is available instead of waiting for the entire data to be loaded.
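To make the two advantages concrete, here is a sketch that counts the lines of a file while it is being read, assuming a newline-delimited text file (countLines is a hypothetical helper). Memory use stays roughly bounded by the stream's buffer (64 KiB chunks by default for fs read streams), and counting starts with the first chunk rather than after the whole file is in memory, as it would with fs.readFileSync().

```javascript
const fs = require('node:fs');

// Hypothetical helper: count '\n' characters chunk by chunk while the file is read.
function countLines(filePath) {
  return new Promise((resolve, reject) => {
    let lines = 0;
    fs.createReadStream(filePath)
      .on('data', (chunk) => {
        // chunk is a Buffer; iterating it yields byte values.
        for (const byte of chunk) {
          if (byte === 0x0a) lines += 1; // 0x0a is '\n'
        }
      })
      .on('end', () => resolve(lines))
      .on('error', reject);
  });
}

// Usage (path is a placeholder):
// countLines('big-log.txt').then((n) => console.log(`${n} lines`));
```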
