Colab is a free cloud service based on Jupyter Notebooks for machine learning education and research. It provides a runtime fully configured for deep learning and free-of-charge access to a robust GPU.

These 8 tips are the result of two weeks playing with Colab to train a YOLO model using Darknet. I was about to give up because of some inconveniences of the tool. But on a second attempt, after some struggling and a few tweaks, I discovered a wonderful tool, free and available to everyone.

The first thing you'll notice using Colab notebooks is the handicap of dealing with a runtime that blows up into space every 12 hours! This is why it is so important to cut the time you need to get your runtime going again. These tips allowed me to resume training the model in less time and with as little manual interaction as possible. I also had my newly trained weights saved automatically to my local computer during training.

These tips are based on training YOLO using Darknet, but I'll try to generalize.

Let's take a look!

I'm in get-to-the-point mode here, but you can also find a step-by-step tutorial, the runnable Colab notebook, and the GitHub repo.

1. Map your Google Drive

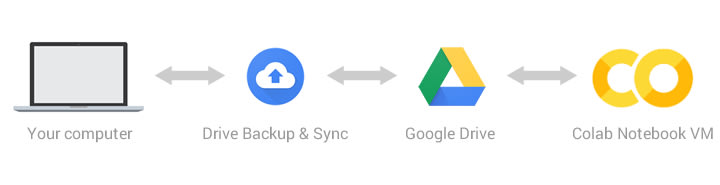

On Colab notebooks you can access your Google Drive as a network mapped drive in the Colab VM runtime.

# This cell imports the drive library and mounts your Google Drive as a VM local drive.

# You can access your Drive files using the path "/content/gdrive/My Drive/"

from google.colab import drive

drive.mount('/content/gdrive')
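Because the mounted path contains a space, a symbolic link can give it a friendlier name. The following is a minimal sketch, not part of the original notebook: the helper name `link_drive` and the link location `/mydrive` are assumptions chosen for illustration.

```python
import os


def link_drive(target, link):
    """Create a symlink `link` pointing at `target`, unless it already exists."""
    if not os.path.isdir(target):
        # The Drive folder only exists after drive.mount() has succeeded.
        raise FileNotFoundError(f"Drive folder not found, is it mounted? {target}")
    if not os.path.islink(link):
        os.symlink(target, link)
    return link
```

In a Colab cell, after mounting, this could be called as `link_drive("/content/gdrive/My Drive", "/mydrive")`, so later commands can use `/mydrive/darknet/...` without escaping the space.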

2. Work with your files transparently on your computer

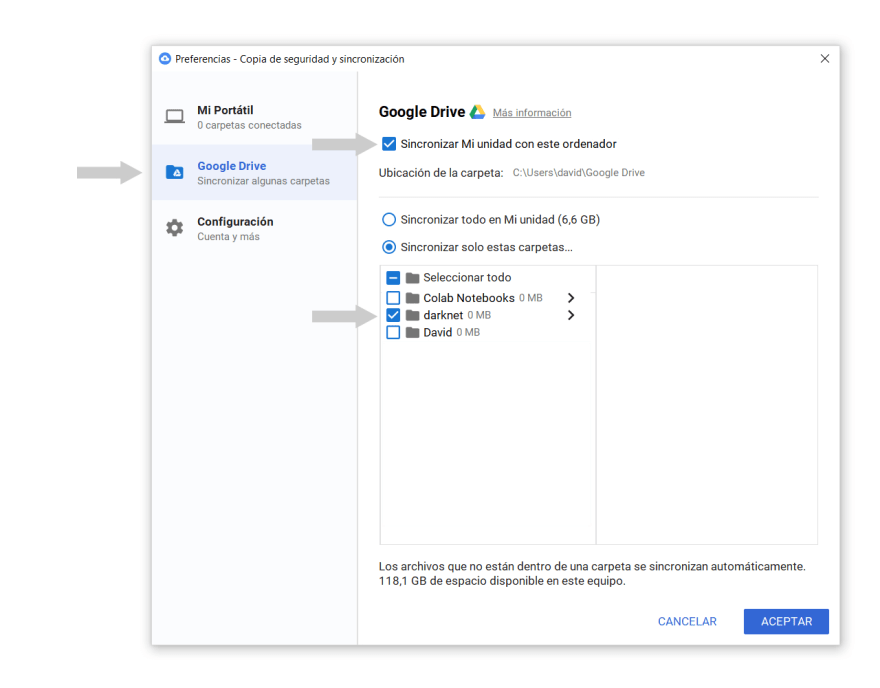

You can sync a Google Drive folder with your computer. Combined with the previous tip, the files you edit locally will be available directly in your Colab notebook.

I'll use a Google Drive folder named darknet. This is the folder I keep synced with my local computer.

3. Reduce manual interactions on every run

In my case I need cuDNN from Nvidia on every run, and downloading this library requires a login. My approach is to download the tar file once and save it in my synced Drive folder. On every run I extract the files directly to where the library files need to be. No manual upload needed.

You can apply this to any lib you need to install.

# Extracts the cuDNN files from Drive folder directly to the VM CUDA folders

!tar -xzvf gdrive/My\ Drive/darknet/cuDNN/cudnn-10.0-linux-x64-v7.5.0.56.tgz -C /usr/local/

!chmod a+r /usr/local/cuda/include/cudnn.h

# Check the version we just installed. You can comment this line out on future runs

!cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MAJOR -A 2
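If you would rather check the version from Python than read the `grep` output, the header can be parsed directly. This is a rough sketch, assuming the header defines `CUDNN_MAJOR`, `CUDNN_MINOR` and `CUDNN_PATCHLEVEL` as plain integer macros (which is what the `grep` above prints for cuDNN 7.x); `parse_cudnn_version` is a hypothetical helper name.

```python
import re


def parse_cudnn_version(header_text):
    """Return (major, minor, patch) from the text of cudnn.h, or None if absent."""
    parts = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        # Match lines such as "#define CUDNN_MAJOR 7".
        match = re.search(rf"^#define\s+{name}\s+(\d+)", header_text, re.MULTILINE)
        if match is None:
            return None
        parts.append(int(match.group(1)))
    return tuple(parts)
```

You could feed it the contents of `/usr/local/cuda/include/cudnn.h` and stop the notebook early if the result is `None` or not the version you expect.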

4. Don't compile libraries on every run, just once.

Because I'm using Darknet, I would normally need to clone the repository and compile the framework on every run, which takes some time. My solution was to compile the sources only the first time I run the notebook and keep a copy of the executable file in my Drive folder. On future runs, I only need to copy the binary to the right folder.

Code for the first run

# Leave this code uncommented on the very first run of your notebook

# or whenever you need to recompile Darknet.

# Comment this code out on future runs.

!git clone https://github.com/kriyeng/darknet/

%cd darknet

# Check the folder

!ls

# I have a branch where I have done the changes commented below

!git checkout feature/google-colab

# Compile Darknet

!make

# Copy the compiled Darknet binary to Google Drive

!cp ./darknet /content/gdrive/My\ Drive/darknet/bin/darknet

If you are interested in using Darknet, note that I use a modified version. I needed to make some changes to the original source:

- My approach here is to use the configuration files directly from Drive. The original Darknet does not allow spaces in the file paths in the config file obj.data, so I added support for escaped spaces: (...)/gdrive/My\ Drive/darknet

- I reduced the number of log lines printed on screen. The original source prints more lines than the Colab notebook can synchronize with your browser.

Code to execute after the first run

# Uncomment after the first run, once you have a copy of the compiled Darknet in your Google Drive

# Make a directory for Darknet and move into it

!mkdir darknet

%cd darknet

# Copy the compiled Darknet binary to the VM local drive

!cp /content/gdrive/My\ Drive/darknet/bin/darknet ./darknet

# Set execution permissions to Darknet

!chmod +x ./darknet
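The two variants above (first run and later runs) could be folded into one cell, so that nothing has to be commented or uncommented between runs. This is a sketch under assumptions rather than the notebook's actual code: `restore_or_build` and its parameters are hypothetical names, and `build` stands for whatever compiles the binary (for example a function that runs `make` in the cloned repository).

```python
import os
import shutil
import stat


def restore_or_build(cached_bin, local_bin, build):
    """Copy a cached executable if one exists; otherwise build it and cache it.

    `build` is any callable that produces `local_bin`.
    Returns "restored" or "built" so the caller can log what happened.
    """
    if os.path.isfile(cached_bin):
        shutil.copy2(cached_bin, local_bin)
        action = "restored"
    else:
        build()
        cache_dir = os.path.dirname(cached_bin)
        if cache_dir:
            os.makedirs(cache_dir, exist_ok=True)
        shutil.copy2(local_bin, cached_bin)
        action = "built"
    # Equivalent of `chmod +x`: add execute bits to the current mode.
    mode = os.stat(local_bin).st_mode
    os.chmod(local_bin, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return action
```

With the paths used in this post, `cached_bin` would be `/content/gdrive/My Drive/darknet/bin/darknet` and `local_bin` would be `./darknet`. To force a recompile, delete the cached copy from Drive.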

5. Clean your root folder on Google Drive

There are some resources from Google explaining that having a lot of files in your root folder can slow down the process of mounting the drive. If you have a lot of files in your root folder on Drive, create a new folder and move all of them there. I suppose that having a lot of folders in the root folder will have a similar impact.

6. Copy your data sets to VM local filesystem to improve training speeds.

Colab notebooks sometimes lag when working with Drive files. I found that copying the dataset to the VM filesystem improves training speed. The cost is that on every run you need to spend some time copying your data set files locally, but in my experience the impact on training times is considerable.

You can try two approaches here depending on your data set size and your results.

First approach. Copy your data from Drive to the VM drive

# Copy files from Google Drive to the VM local filesystem

!cp -r "/content/gdrive/My Drive/darknet/img" ./img

Another option might be to keep your data set compressed in your Drive, then copy the tar file and extract it locally in the notebook. I haven't tested this one.
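The compressed-archive idea could look roughly like this. It is an untested-in-Colab sketch, just like the idea itself: `fetch_and_extract` is a hypothetical helper, and it assumes the data set was packed as a `.tar.gz` file.

```python
import os
import shutil
import tarfile


def fetch_and_extract(archive_on_drive, local_dir):
    """Copy a .tar.gz from Drive to local disk, extract it, return the file count."""
    os.makedirs(local_dir, exist_ok=True)
    local_archive = os.path.join(local_dir, os.path.basename(archive_on_drive))
    shutil.copy2(archive_on_drive, local_archive)
    with tarfile.open(local_archive, "r:gz") as tar:
        # Use the safer "data" extraction filter where this Python supports it.
        kwargs = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
        tar.extractall(local_dir, **kwargs)
    os.remove(local_archive)  # the archive is no longer needed on the VM
    return sum(len(files) for _, _, files in os.walk(local_dir))
```

Whether this beats `cp -r` will depend on the number and size of your files, so it is worth timing both on your own data set.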

Second approach. Upload your data set to a git repo and download it from there

# Git clone directly to ./img folder

!git clone https://[your-repository] ./img

# Check the result - Comment this out once you have checked, to speed up further runs

!ls -la ./img

7. Work with the config files directly from your computer.

Keeping all your config files on your computer makes things a lot easier. You modify any config locally with your favorite editor, and it is synced automatically and accessible from your notebook.

This is how I execute darknet to start training.

!./darknet detector train "/content/gdrive/My Drive/darknet/obj.data" "/content/gdrive/My Drive/darknet/yolov3.cfg" "/content/gdrive/My Drive/darknet/darknet53.conv.74" -dont_show

and this is my obj.data file:

classes=1

train = /content/gdrive/My\ Drive/darknet/train.txt

test = /content/gdrive/My\ Drive/darknet/test.txt

names = /content/gdrive/My\ Drive/darknet/obj.names

backup = /content/gdrive/My\ Drive/darknet/backup
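If you move your Drive folder or add classes, a file like this can be generated instead of edited by hand. Here is a small sketch: `render_obj_data` is a hypothetical helper, and the backslash-escaped spaces it emits are only understood by the modified Darknet described in tip 4.

```python
def render_obj_data(base_dir, classes):
    """Build the text of obj.data, writing each space in a path as backslash-space."""
    escaped = base_dir.rstrip("/").replace(" ", "\\ ")
    lines = [
        f"classes={classes}",
        f"train = {escaped}/train.txt",
        f"test = {escaped}/test.txt",
        f"names = {escaped}/obj.names",
        f"backup = {escaped}/backup",
    ]
    return "\n".join(lines) + "\n"
```

Calling `render_obj_data("/content/gdrive/My Drive/darknet", 1)` returns the same five settings shown above, ready to be written to `obj.data` in the synced folder.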

8. Get your trained weights synced to your computer in real time during training

As you can see in the lines above, I'm setting the backup folder to my Google Drive. Because my Drive is synced with my computer, I receive the trained weights in real time while the notebook is training. This has some advantages:

- You can check how training is going locally on your computer, with your test data set, while the model is still training.

- When the runtime is closed after 12 hours, you have your last trained weights saved on your computer.

- You can run the notebook again and resume training where the last session stopped with this code:

# Start training at the point where the last runtime finished

!./darknet detector train "/content/gdrive/My Drive/darknet/obj.data" "/content/gdrive/My Drive/darknet/yolov3.cfg" "/content/gdrive/My Drive/darknet/backup/yolov3_last.weights" -dont_show
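To avoid switching between the two training commands by hand, the choice of weights can be made automatically. This is a hedged sketch, not the notebook's code: `training_command` is a hypothetical helper, and it assumes the backup file is named after the config file (`yolov3.cfg` gives `yolov3_last.weights`), as in the command above.

```python
import os


def training_command(drive_dir, cfg="yolov3.cfg", pretrained="darknet53.conv.74"):
    """Return the darknet argument list, resuming from the last backup if present."""
    name = os.path.splitext(cfg)[0]
    last = os.path.join(drive_dir, "backup", f"{name}_last.weights")
    # Fall back to the pretrained convolutional weights on the very first run.
    weights = last if os.path.isfile(last) else os.path.join(drive_dir, pretrained)
    return [
        "./darknet", "detector", "train",
        os.path.join(drive_dir, "obj.data"),
        os.path.join(drive_dir, cfg),
        weights,
        "-dont_show",
    ]
```

The list can be passed to `subprocess.run(..., check=True)`. Passing arguments as a list also means the space in `My Drive` needs no quoting or escaping on the command line.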

You can find a step-by-step tutorial or the runnable Colab notebook here 👇

- A more in-depth explanation in the step-by-step tutorial

- Run it for yourself at Colab: Colab notebook for training YOLO using Darknet with tips & tricks to turn Colab notebook into a useful tool

- You can find the notebook at Github

Thanks for reading! I hope you enjoyed it! I encourage you to send me feedback and suggestions. Open a discussion here!

Happy object detection programming!

Sources

- YOLO original web site Joseph Redmon Page

- AlexeyAB darknet repo github

- The Ivan Goncharov notebook

- Performance Analysis of Google Colaboratory as a Tool for Accelerating Deep Learning Applications

Oldest comments (8)

Tip 8 was the most useful for me, I lost my results twice after training my model for 10 hours... Thanks

That's painful!

Very happy it helped you!👏 Thanks for let me know.

Good luck on your trainings!

Hi David, thanks for the interesting article, I'm particularly excited to see if copying my training / test files speeds up my training.

Have you ever been temporarily stopped from accessing backends? I've been training models on and off for the last 48hours or so and I've now been cut off. Do you know how long the cool off period is? I can't find much info online.

Cheers,

Charlie

Hi Charlie!

Sorry, I don't know. When I had some time off I just not tried before the day after. Then, I always had access again.

First of all, thanks for the interesting article.

Is it necessary to install cuDNN in the colab if we're using other framework? For my case, I'm using PyTorch.

Thanks for your attention.

8 tips not work for me

where is the problem? thanks

Hi Clemente,

I think a space is missing in your path.

My\ Drive

Anyway, I recommend using a symbolic link to make your path friendlier.

It is explained in the beginning of the notebook shared in the following post 👇

Train Yolo on Colab

Good luck!

Thank you for so kind!

Wish you happiness every day~