Since its arrival in November 2022, ChatGPT has changed the way many of us work by using generative artificial intelligence (AI) to streamline tasks, produce content, and provide fast recommendations. With this technology, companies and individuals can improve efficiency while reducing reliance on manual work.

At the core of ChatGPT and other AI algorithms lie Large Language Models (LLMs), renowned for their remarkable capacity to generate human-like written content. One prominent application of LLMs is in the realm of website chatbots utilized by companies.

By feeding customer and product data into LLMs and continually refining the training, these chatbots can deliver instantaneous responses, personalized recommendations, and unfettered access to information. Furthermore, their round-the-clock availability empowers websites to provide continuous customer support and engagement, unencumbered by constraints of staff availability.

While LLMs are undeniably beneficial for organizations, enabling them to operate more efficiently, there is also a significant concern about relying on cloud-based LLM services such as OpenAI's ChatGPT. When sensitive data is entrusted to these cloud-based platforms, companies can lose control over their data security.

Simply put, they relinquish ownership of their data. In these privacy-conscious times, companies in regulated industries are expected to adhere to the highest standards when it comes to handling customer data and other sensitive information.

In heavily regulated industries like healthcare and finance, companies need the ability to self-host open-source LLMs to regain control of their data privacy. Here is what you need to know about self-hosting LLMs and how you can easily do so with Plural.

Before you decide to self-host

In the past year, the discussion surrounding LLMs has evolved, transitioning from "Should we utilize LLMs?" to "Should we opt for a self-hosted solution or rely on a proprietary off-the-shelf alternative?"

Like many engineering questions, the answer to this one is not straightforward. While we are strong proponents of self-hosting infrastructure – we even self-host our AI chatbot for compliance reasons – we also rely on our Plural platform, leveraging the expertise of our team, to ensure our solution is top-notch.

We often urge our customers to answer the questions below before self-hosting LLMs.

- Where would you want to host LLMs?

- Do you have a client-server architecture in mind? Or, something with edge devices, such as on your phone?

It also depends on your use case:

- What will the LLMs be used for in your organization?

- Do you work in a regulated industry and need to own your proprietary data?

- Does it need to be in your product in a short period?

- Do you have engineering resources and expertise available to build a solution from scratch?

If you require compliance as a crucial feature for your LLM and have the necessary engineering expertise to self-host, you'll find an abundance of tools and frameworks available. By combining these various components, you can build your solution from the ground up, tailored to your specific needs.

If your aim is to quickly implement an off-the-shelf model for a RAG-LLM application, which only requires proprietary context, consider using a solution at a higher abstraction level such as OpenLLM, TGI, or vLLM.

Why Self-Host LLMs?

Although there are various advantages to self-hosting LLMs, three key benefits stand out prominently.

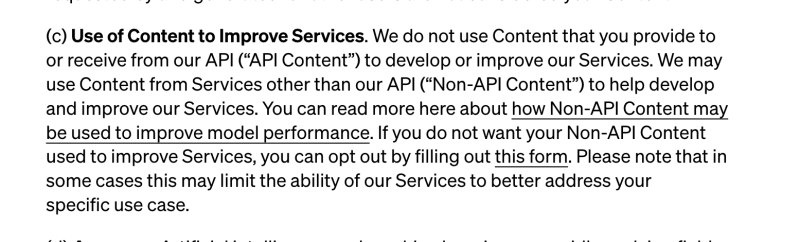

- Greater security, privacy, and compliance: This is ultimately the main reason why companies opt to self-host LLMs. OpenAI’s Terms of Use state: “We may use Content from Services other than our API (“Non-API Content”) to help develop and improve our Services.”

OpenAI's Terms of Use neglect a user's privacy.

Under these terms, anything you or your employees upload into ChatGPT may be included in future training data. And, despite attempts to anonymize the data, it ultimately contributes to the model's knowledge. Unsurprisingly, there is an ongoing conversation in the space as to whether ChatGPT's use of data is even legal, but that’s a topic for a different day. What we do know is that many privacy-conscious companies have already begun to prohibit employees from using ChatGPT.

- Customization: By self-hosting LLMs, you can scale alongside your use case. Organizations that rely heavily on LLMs might reach a point where it becomes economical to self-host. A telltale sign is when you begin to hit rate limits on public API endpoints and the performance of your application suffers as a result. Ideally, you can build it all yourself, train a model, and create a model server for your chosen ML framework or model runtime (e.g., TensorFlow, PyTorch, or JAX), but most likely you would leverage a distributed ML framework like Ray.

- Avoid vendor lock-in: When choosing between open-source and proprietary solutions, a crucial question to address is your comfort with cloud vendor lock-in. Major cloud providers offer their own managed ML services, allowing you to host an LLM model server. However, migrating between them can be challenging, and depending on your specific use case, it may result in higher long-term expenses compared to open-source alternatives.
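To make the cost argument above more concrete, here is a minimal break-even sketch in Python. Every number and name in it is a hypothetical placeholder (the per-token API price, the GPU hourly rate, the operations overhead); plug in your own quotes before drawing any conclusions.

```python
def monthly_api_cost(tokens_per_month: float, usd_per_1k_tokens: float) -> float:
    """Cost of sending all traffic to a pay-per-token API (hypothetical pricing)."""
    return tokens_per_month / 1000 * usd_per_1k_tokens


def monthly_self_host_cost(
    gpu_count: int,
    usd_per_gpu_hour: float,
    hours_per_month: float = 730.0,
    ops_overhead_usd: float = 0.0,
) -> float:
    """Roughly fixed cost of keeping your own GPU nodes running all month."""
    return gpu_count * usd_per_gpu_hour * hours_per_month + ops_overhead_usd


def break_even_tokens(self_host_cost_usd: float, usd_per_1k_tokens: float) -> float:
    """Monthly token volume above which self-hosting is cheaper than the API."""
    return self_host_cost_usd / usd_per_1k_tokens * 1000


# Hypothetical inputs: 2 GPUs at $1.50/hour plus $1,000/month of engineering time,
# compared against an API priced at $0.002 per 1k tokens.
self_host = monthly_self_host_cost(2, 1.50, ops_overhead_usd=1000.0)
threshold = break_even_tokens(self_host, 0.002)
print(f"Self-hosting: ${self_host:,.0f}/month, break-even at {threshold:,.0f} tokens/month")
```

The model is deliberately simple: it treats self-hosting as a fixed monthly cost and the API as purely usage-based, and it ignores throughput limits, so treat the threshold as a rough first estimate only.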

Popular Solutions to host LLMs

OpenLLM via Yatai

[GitHub - bentoml/OpenLLM: Operating LLMs in production](https://github.com/bentoml/OpenLLM?ref=plural.sh)


OpenLLM is tailored for AI application developers who are building production-ready applications using LLMs. It provides an extensive set of tools to fine-tune, serve, deploy, and monitor these models, streamlining the end-to-end deployment workflow for LLMs.

Features that stand out

- Serve LLMs over a RESTful API or gRPC with a single command. You can interact with the model using a Web UI, CLI, Python/JavaScript client, or any HTTP client of your choice.

- First-class support for LangChain, BentoML, and Hugging Face Agents. For example, you can tie a remote self-hosted OpenLLM server into your LangChain app.

- Token streaming support

- Embedding endpoint support

- Quantization support

- Fine-tuning support (still experimental): you can fuse existing pre-trained QLoRA/LoRA adapters that are compatible with the chosen LLM by adding a flag to the serve command. See https://github.com/bentoml/OpenLLM#️-fine-tuning-support-experimental

Why run Yatai on Plural

[GitHub - bentoml/Yatai: Model Deployment at Scale on Kubernetes 🦄️](https://github.com/bentoml/Yatai?ref=plural.sh)

Yatai on Plural.sh

If you check out the official GitHub repo of OpenLLM, you'll see that the integration with BentoML makes it easy to run multiple LLMs in parallel across multiple GPUs/nodes, or chain LLMs with other types of AI/ML models, and deploy the entire pipeline on BentoCloud (https://l.bentoml.com/bento-cloud). However, you can achieve the same on a Plural-deployed Kubernetes cluster via Yatai, which is essentially an open-source BentoCloud and should come at a much lower price point.

Ray Serve Via Ray Cluster

[Ray Serve: Scalable and Programmable Serving - Ray 2.7.0](https://docs.ray.io/en/latest/serve/index.html?ref=plural.sh)


Ray Serve is a scalable model-serving library for building online inference APIs. Serve is framework-agnostic, so you can use a single toolkit to serve everything from deep learning models built with frameworks like PyTorch, TensorFlow, and Keras, to Scikit-Learn models, to arbitrary Python business logic. It has several features and performance optimizations for serving Large Language Models such as response streaming, dynamic request batching, multi-node/multi-GPU serving, etc.
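To illustrate what "dynamic request batching" means, here is a small, framework-free sketch using only Python's `asyncio`. It is not Ray Serve's implementation or API; `DynamicBatcher`, `fake_model`, and the parameter names are hypothetical and exist only to show the idea: hold incoming requests for a short window, then run them through the model as one batch.

```python
import asyncio
from typing import Callable, List


class DynamicBatcher:
    """Groups requests until the batch is full or a short wait window expires."""

    def __init__(
        self,
        batch_fn: Callable[[List[str]], List[str]],
        max_batch_size: int = 4,
        max_wait_s: float = 0.05,
    ) -> None:
        self.batch_fn = batch_fn
        self.max_batch_size = max_batch_size
        self.max_wait_s = max_wait_s
        self.queue: asyncio.Queue = asyncio.Queue()
        self.batch_sizes: List[int] = []  # recorded for inspection only

    async def submit(self, prompt: str) -> str:
        """Called once per request; resolves when the request's batch has run."""
        future = asyncio.get_running_loop().create_future()
        await self.queue.put((prompt, future))
        return await future

    async def run(self) -> None:
        """Background worker: collect a batch, run it, hand results back."""
        loop = asyncio.get_running_loop()
        while True:
            batch = [await self.queue.get()]  # block until the first request arrives
            deadline = loop.time() + self.max_wait_s
            while len(batch) < self.max_batch_size:
                remaining = deadline - loop.time()
                if remaining <= 0:
                    break
                try:
                    batch.append(await asyncio.wait_for(self.queue.get(), remaining))
                except asyncio.TimeoutError:
                    break
            outputs = self.batch_fn([prompt for prompt, _ in batch])
            self.batch_sizes.append(len(batch))
            for (_, future), output in zip(batch, outputs):
                future.set_result(output)


def fake_model(prompts: List[str]) -> List[str]:
    """Stand-in for a batched model forward pass."""
    return [p.upper() for p in prompts]


async def demo(prompts: List[str]):
    batcher = DynamicBatcher(fake_model, max_batch_size=4)
    worker = asyncio.create_task(batcher.run())
    try:
        results = await asyncio.gather(*(batcher.submit(p) for p in prompts))
    finally:
        worker.cancel()
    return list(results), batcher.batch_sizes
```

Calling `asyncio.run(demo(["a", "b", "c", "d", "e"]))` returns the per-request results in order, along with the batch sizes that were actually formed. The trade-off is the usual one: a longer wait window gives larger batches and better accelerator utilization, at the cost of added latency per request.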

Features that stand out

- It's a huge, well-documented ML platform. In our opinion, it is the best-documented platform, with loads of examples to work from. However, you need to know what you're doing when working with it, and it takes some time to get up to speed.

- Not focused on LLMs, but there are many examples of how to serve open-source LLMs from Hugging Face.

- Integrates nicely with Prometheus for cluster metrics and comes with a useful dashboard that lets you monitor serving as well as anything else you run on your Ray cluster, such as data processing or model training.

- OpenAI has reportedly used Ray to train its models, so it's fair to say it is one of the more robust solutions for production use cases.

Why run Ray on Plural

Plural offers a fully functional Ray cluster on a Plural-deployed Kubernetes cluster, where you can do anything you can do with Ray, from data-parallel data crunching and distributed model training to serving off-the-shelf open-source LLMs.

Hugging Face's TGI

[GitHub - huggingface/text-generation-inference: Large Language Model Text Generation Inference](https://github.com/huggingface/text-generation-inference?ref=plural.sh)


TGI is a Rust, Python, and gRPC server for text generation inference. It is used in production at Hugging Face to power Hugging Chat, the Inference API, and Inference Endpoints.

Features that stand out

- Everything you need is containerized, so if you just want to run off-the-shelf HF models, this is probably one of the quickest ways to do it.

- At the time of this writing, they have no intent to provide official Kubernetes support, citing

Why run Hugging Face LLM on Plural

When you run a Hugging Face LLM inference server via Text Generation Inference (TGI) on a Plural-deployed Kubernetes cluster, you benefit from our built-in telemetry, monitoring, and integration with other marketplace apps to orchestrate it and host your data and vector stores. Here is an example we recommend following for deploying TGI on Kubernetes.

[GitHub - louis030195/text-generation-inference-helm](https://github.com/louis030195/text-generation-inference-helm/tree/main?ref=plural.sh)

Example of deploying TGI on Kubernetes
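Once a TGI server is running, whether in a local container or behind a Kubernetes Service, any HTTP client can query it. The sketch below uses only the Python standard library and targets TGI's `/generate` route, which accepts an `inputs` string plus a `parameters` object and returns a `generated_text` field. The base URL `http://localhost:8080` and the helper names are assumptions for illustration; adjust them to match your deployment.

```python
import json
import urllib.request


def build_generate_request(
    base_url: str, prompt: str, max_new_tokens: int = 64
) -> urllib.request.Request:
    """Build (but do not send) a POST request for TGI's /generate route."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(
    base_url: str, prompt: str, max_new_tokens: int = 64, timeout_s: float = 30.0
) -> str:
    """Send the request to a running TGI server and return the generated text."""
    request = build_generate_request(base_url, prompt, max_new_tokens)
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        body = json.load(response)
    return body["generated_text"]


# Example call, assuming a server is reachable at this hypothetical address:
# print(generate("http://localhost:8080", "What is Kubernetes?", max_new_tokens=50))
```

Keeping request construction separate from the network call makes the payload easy to inspect and unit-test without a live model server.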

Building an LLM stack to self-host

When building an LLM stack, the first hurdle you'll encounter is finding the ideal stack that caters to your specific requirements. Given the multitude of available options, the decision-making process can be overwhelming. Once you've narrowed down your choices, creating and deploying a small application on a local host becomes a relatively straightforward task.

However, scaling that application presents an entirely separate challenge, which requires a certain level of expertise and time. For that, you’ll want to leverage some of the open-source cloud-native platforms and tools we outlined above. It might make sense to use Ray in some cases, as it gives you an end-to-end platform to process data, train, tune, and serve your ML applications beyond LLMs.

OpenLLM is geared more towards simplicity and operates at a higher abstraction level than Ray. If your end goal is to host a RAG LLM app using LangChain and/or LlamaIndex, OpenLLM in conjunction with Yatai can probably get you there quickest. Keep in mind that if you do go that route, you’ll likely give up some of the flexibility that Ray offers.

For a typical RAG LLM app, you want to set up a data stack alongside the model-serving component, where you orchestrate periodic or event-driven updates to your data along with the related data wrangling, embedding creation, model fine-tuning, and so on.
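As a rough picture of the retrieval step in such a RAG app, here is a self-contained Python sketch. The bag-of-words `embed` function is a toy stand-in for a real embedding model, and the in-memory list stands in for a vector database; all function names are hypothetical and only meant to show how retrieved context ends up in the prompt.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real app would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context_docs: List[str]) -> str:
    """Assemble the prompt that would be sent to the self-hosted LLM."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Shipping to Europe takes 7 to 10 days.",
]
question = "How long do refunds take?"
prompt = build_prompt(question, retrieve(question, docs, k=1))
```

In a production setup, the periodic or event-driven jobs mentioned above would recompute embeddings as the source data changes, and `retrieve` would become a query against the vector store.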

The Plural marketplace offers various data stack apps that can perfectly suit your needs. Additionally, our marketplace provides document-store/retrieval optimized databases, such as Elastic or Weaviate, which can be used as vector databases. Furthermore, during operations, monitoring and telemetry play a crucial role. For instance, a Grafana dashboard for your self-hosted LLM app could prove to be immensely valuable.

Operating and maintaining these self-hosted platforms on Kubernetes is the main overhead you’ll have. If you choose to go a different route, you can elect to use a proprietary managed service or SaaS solution, although that doesn’t come without overhead either, as it requires additional domain-specific knowledge.

Plural to self-host LLMs

If you choose a solution like Plural, you can focus on building your applications and not worry about the day-2 operations that come with maintaining them. If you are still debating between ML tooling, it could be beneficial to spin up an example architecture using Plural.

Our platform can bridge the gap between the “localhost” and “hello-world” examples in these frameworks and scalable, production-ready apps, because you don’t lose time figuring out how to self-host model-hosting platforms like Ray and Yatai.

Plural is a solution that aims to provide a balance between self-hosting infrastructure applications within your own cloud account, seamless upgrades, and scaling.

To learn more about how Plural works and how we are helping organizations deploy secure and scalable machine learning infrastructure on Kubernetes, reach out to our team to schedule a demo.

If you would like to test out Plural, sign up for a free open-source account and get started today.
