Tools and Setup

Before we dive into the fun part of sharing keys among cloud providers, you will need a few tools to follow this tutorial. First, download and install Vault, then get it up and running. You will also need cURL and OpenSSL; these usually come pre-installed on most Linux distributions and are available through most package managers (apt, yum, brew, choco/scoop, etc.). Our examples also use head and diff, which are part of the coreutils and diffutils packages on Ubuntu; either find a similar package for your OS or work around those steps manually. Next, install the AWS Command Line Interface (CLI) and configure it to connect to your account. Finally, install and configure the Heroku CLI.
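Before going further, it can help to confirm that everything is on your `PATH`. The following is a minimal sketch; the `check_tools` function name is our own, and the list of binaries you pass it should simply mirror the tools named above.

```shell
#!/usr/bin/env bash
# check_tools: hypothetical helper that reports any command not found on PATH.
# Returns 0 when every named tool is available, 1 otherwise.
check_tools() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For this tutorial, `check_tools vault curl openssl head diff aws heroku` should print nothing and return 0; each missing tool is reported on its own line.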

One last note: Heroku’s support for using keys from AWS requires a Private or Shield database plan, so please make sure your account is configured accordingly.

Intro

In today’s hyperconnected world, the traditional approach of locking services behind Virtual Private Networks (VPNs) or within a demilitarized zone (DMZ) is no longer sufficient. Instead, we must operate on a zero-trust network model, where every actor is assumed to be potentially malicious. As a result, encryption, both at rest and in transit, along with identity and access management, is critical to ensuring that systems can interact with each other securely.

Among the most important parts of the encryption process are the keys used to encrypt and decrypt information or to validate identity. One recent approach to managing them is Bring Your Own Key (BYOK), where you, as the customer or end user, own and manage your keys and provide them to third parties (notably cloud providers) for use. However, before we dig into what BYOK is and how best to leverage it, let’s quickly recap key management.

Key Management

At a high level, key management is the mechanism by which keys are generated, validated, and revoked, both manually and as part of automated workflows. Another function of key management is keeping the root certificate, the source of trust for everything beneath it, more tightly protected than the certificates it issues, since revoking a root certificate invalidates the entire tree of certificates issued under it.

One of the more popular tools for key management is HashiCorp’s Vault, which is specifically designed for a world of low trust and dynamic infrastructure, where key lifetimes may be measured in minutes or hours rather than years. It includes functionality to manage secrets, encryption, and identity-based access, offers several interfaces (CLI, API, web-based UI), and can connect to many different providers through plugins. This article does not cover how to deploy Vault securely; instead, it focuses on the BYOK use cases Vault supports and how to consume the keys in multiple cloud environments.

A key feature of Vault is that it is infrastructure- and provider-agnostic: it can be used to provision and manage keys across different systems and clouds. Vault can also encrypt and decrypt data without exposing the keys to users, which strengthens security.

BYOK Across Multiple Clouds

Let’s now walk through a specific use case: creating your own keys and ingesting them into multiple clouds, focusing on Amazon Web Services (AWS) and Heroku. We’ll start by uploading our keys to AWS Key Management Service (KMS) with Amazon’s CLI and showing how the keys can be used within AWS. Key rotation is handled manually in this walkthrough; in a production implementation, it should ideally be automated. Finally, we will use the generated keys in Heroku to encrypt a Postgres database.

📝 Note

Before we get started, make sure that Vault is installed and running and that you have followed the Vault transit secrets engine setup guide, since we’ll use the transit engine to generate the keys we upload to AWS. Once you have confirmed that Vault is running and the AWS CLI is installed, it’s time to begin.
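If you are following along locally, a Vault dev server is the quickest way to satisfy this note. The sketch below assumes the vault binary is installed; the dev-server commands in the comments are for local experimentation only, since a dev server keeps everything in memory and serves plain HTTP. The `require_env` helper is hypothetical and simply confirms the two variables the Vault CLI and API calls rely on are set.

```shell
#!/usr/bin/env bash
# Local setup (dev mode only: in-memory storage, no TLS). In a separate terminal:
#   vault server -dev -dev-root-token-id=root
# Then, in the terminal used for this tutorial:
#   export VAULT_ADDR=http://127.0.0.1:8200
#   export VAULT_TOKEN=root
#   vault secrets enable transit

# require_env: hypothetical helper that fails if any named variable is unset or empty.
require_env() {
  local name status=0
  for name in "$@"; do
    if [[ -z "${!name:-}" ]]; then
      echo "environment variable $name is not set" >&2
      status=1
    fi
  done
  return "$status"
}
```

Running `require_env VAULT_ADDR VAULT_TOKEN` before the steps below catches a missing token early instead of surfacing later as a permission error.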

Create Key in Vault

First, we need to create our encryption key within Vault:

```shell
vault write -f transit/keys/byok-demo-key exportable=true allow_plaintext_backup=true
```

To export the key, use either the Vault UI (http://localhost:8200/ui/vault/secrets/transit/actions/byok-demo-key?action=export) or cURL, since the Vault CLI doesn’t support exporting it directly. The 127.0.0.1 address in the command below points to our local Vault server; a production setup would neither run on localhost nor be unencrypted.

```shell
curl --header "X-Vault-Token: <token>" http://127.0.0.1:8200/v1/transit/export/encryption-key/byok-demo-key/1
```

This command returns a JSON response containing a base64-encoded plaintext copy of the key, which we will upload to AWS KMS. Save the base64 key value to a file; we used vault_key.b64.
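Because the export endpoint returns JSON rather than the bare key, the base64 value has to be pulled out before saving it. The sketch below assumes compact JSON shaped like `{"data":{"keys":{"1":"<base64>"},...}}` and uses sed so no extra dependency is needed; both function names are hypothetical. The second helper confirms the key decodes to 32 bytes, because the transit engine’s default key type (aes256-gcm96) is a 256-bit AES key and KMS expects 256-bit symmetric key material for this kind of import.

```shell
#!/usr/bin/env bash
# extract_export_key: hypothetical helper that reads a Vault transit export
# response on stdin and prints the base64 key for the requested version.
# Assumes compact JSON, for example {"data":{"keys":{"1":"<base64>"},"name":"byok-demo-key"}};
# a real JSON parser is more robust if you have one available.
extract_export_key() {
  local version="$1"
  sed -n "s/.*\"keys\":{[^}]*\"${version}\":\"\([^\"]*\)\".*/\1/p"
}

# check_aes256_b64: hypothetical helper that succeeds only if the base64 file
# decodes to exactly 32 bytes (a 256-bit key). Line breaks are stripped first
# because "openssl enc -A" expects the base64 input on a single line.
check_aes256_b64() {
  local bytes
  bytes=$(tr -d '\r\n' < "$1" | openssl enc -d -base64 -A | wc -c)
  [[ "$bytes" -eq 32 ]]
}
```

For example, piping the cURL command above into `extract_export_key 1 > vault_key.b64` saves the key, and `check_aes256_b64 vault_key.b64 && echo ok` confirms its length before it is wrapped.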

Upload Key to AWS KMS

Now, we need to generate a Customer Master Key (CMK) with no key material:

```shell
# create the key
aws kms create-key --origin EXTERNAL --description "BYOK Demo" --key-usage ENCRYPT_DECRYPT
# give it a nice name
aws kms create-alias --alias-name alias/byok-demo-key --target-key-id <from above>
```

Copy down the key ID from the output, and download the public key and import token:

```shell
aws kms get-parameters-for-import --key-id <from above> --wrapping-algorithm RSAES_OAEP_SHA_1 --wrapping-key-spec RSA_2048
```

Copy the public key and import token from the output of the above step into separate files (imaginatively, we used import_token.b64 and public_key.b64 as the filenames), then base64 decode them:

```shell
openssl enc -d -base64 -A -in public_key.b64 -out public_key.bin
openssl enc -d -base64 -A -in import_token.b64 -out import_token.bin
```

With the import token and public key downloaded, we can now use them to wrap the key from Vault: first decode the base64 key into raw bytes, then encrypt it with the public key from KMS.

```shell
# convert the base64 vault key to raw bytes
openssl enc -d -base64 -A -in vault_key.b64 -out vault_key.bin
# wrap the vault key with the KMS public key using RSA-OAEP with SHA-1
# (equivalent to the older "openssl rsautl -encrypt -oaep", deprecated in OpenSSL 3.0)
openssl pkeyutl -encrypt -pubin -keyform DER -inkey public_key.bin -pkeyopt rsa_padding_mode:oaep -pkeyopt rsa_oaep_md:sha1 -in vault_key.bin -out encrypted_vault_key.bin
# import the wrapped key into KMS; the material expires in 364 days (GNU date syntax)
aws kms import-key-material --key-id <from above> --encrypted-key-material fileb://encrypted_vault_key.bin --import-token fileb://import_token.bin --expiration-model KEY_MATERIAL_EXPIRES --valid-to $(date --iso-8601=ns --date='364 days')
```
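To see why this wrapping works without involving AWS, the same round trip can be reproduced locally. In this sketch a locally generated RSA-2048 key pair stands in for the KMS wrapping key (in the real flow, only KMS holds the private half), a random 32-byte value stands in for the Vault key, and the `demo_wrap` function name is hypothetical. It uses openssl pkeyutl with explicit OAEP/SHA-1 options to mirror the RSAES_OAEP_SHA_1 wrapping algorithm requested from KMS, rather than the deprecated rsautl.

```shell
#!/usr/bin/env bash
# demo_wrap: hypothetical local demonstration of RSAES-OAEP (SHA-1) key wrapping.
# Writes its files into the given directory; returns non-zero if the round trip fails.
demo_wrap() {
  local dir="$1"
  local oaep=(-pkeyopt rsa_padding_mode:oaep -pkeyopt rsa_oaep_md:sha1)

  # Stand-in for the KMS wrapping key pair; KMS hands out only the public half, as DER.
  openssl genpkey -algorithm RSA -pkeyopt rsa_keygen_bits:2048 \
    -out "$dir/wrap_private.pem" 2>/dev/null || return 1
  openssl pkey -in "$dir/wrap_private.pem" -pubout -outform DER \
    -out "$dir/public_key.bin" || return 1

  # Stand-in for the 256-bit key exported from Vault.
  head -c 32 /dev/urandom > "$dir/vault_key.bin"

  # Wrap with the public key, as in the import step above.
  openssl pkeyutl -encrypt -pubin -keyform DER -inkey "$dir/public_key.bin" \
    "${oaep[@]}" -in "$dir/vault_key.bin" -out "$dir/encrypted_vault_key.bin" || return 1

  # Unwrap with the private key; this is the step KMS performs during import.
  openssl pkeyutl -decrypt -inkey "$dir/wrap_private.pem" \
    "${oaep[@]}" -in "$dir/encrypted_vault_key.bin" -out "$dir/unwrapped.bin" || return 1

  # Succeeds only if the unwrapped bytes match the original key exactly.
  cmp -s "$dir/vault_key.bin" "$dir/unwrapped.bin"
}
```

Running `demo_wrap "$(mktemp -d)"` should return 0, and the wrapped file is always 256 bytes, the size of an RSA-2048 ciphertext.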

Now that the key has been uploaded, we can quickly encrypt and decrypt via the CLI to validate that the key is functioning properly:

```shell
# generate 1024 random alphanumeric characters into a file
tr -dc 'A-Za-z0-9' < /dev/urandom | head -c 1024 > encrypt_me.txt
# encrypt the file
aws kms encrypt --key-id <from above> --plaintext fileb://encrypt_me.txt --output text --query CiphertextBlob | base64 --decode > encrypted_file.bin
# decrypt the file
aws kms decrypt --key-id <from above> --ciphertext-blob fileb://encrypted_file.bin --output text --query Plaintext | base64 --decode > decrypted.txt
# validate they match; diff prints nothing when the files are identical
diff encrypt_me.txt decrypted.txt
```

At this point, the key is in place and can be used to encrypt data at rest or in transit in different parts of AWS. One of the easiest and most important places to encrypt data is Amazon S3, since files sitting in storage should always be encrypted. When creating a bucket, make sure you enable server-side encryption, select KMS as the key type, then choose the key you created from the KMS master key dropdown.

At this point, any objects put in the bucket will be encrypted automatically when they are written and decrypted when they are read. The same approach can be used to encrypt databases; for a complete list of the services that integrate with KMS, see the AWS KMS documentation.
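The bucket setting described above can also be applied from the CLI. This sketch assumes the bucket already exists and that you pass the key ID (or ARN) from earlier; the two function names are hypothetical, while put-bucket-encryption and its ApplyServerSideEncryptionByDefault rule with SSEAlgorithm set to aws:kms come from the S3 API.

```shell
#!/usr/bin/env bash
# sse_kms_config: hypothetical helper that prints a default-encryption rule
# telling S3 to use SSE-KMS with the given KMS key ID or ARN.
sse_kms_config() {
  printf '{"Rules":[{"ApplyServerSideEncryptionByDefault":{"SSEAlgorithm":"aws:kms","KMSMasterKeyID":"%s"}}]}\n' "$1"
}

# enable_bucket_kms: hypothetical wrapper that applies the rule to an existing bucket.
enable_bucket_kms() {
  local bucket="$1" key_id="$2"
  aws s3api put-bucket-encryption --bucket "$bucket" \
    --server-side-encryption-configuration "$(sse_kms_config "$key_id")"
}
```

Usage would look like `enable_bucket_kms my-byok-demo-bucket <key ID from above>`, where the bucket name is a placeholder. Splitting the JSON into its own function keeps the rule easy to inspect before anything is changed in AWS.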

Use KMS Key in Heroku

Next, we’ll use the key we generated and uploaded to AWS in Heroku, encrypting a Postgres database when it is created. To do this, we need to grant Heroku’s AWS account access to the key. This can be done in the AWS console while creating the key, or afterwards by updating the key policy through the CLI or the console.

If you create the key in the AWS console, step four of the key creation wizard asks you to define key usage permissions; it includes a separate section where you can add other AWS account IDs to the key.

Type in the Heroku AWS account ID (021876802972) and finish the wizard. If you want to use the CLI to achieve this, you need to update the default policy for the key:

```shell
# get the existing key policy
aws kms get-key-policy --policy-name default --key-id <from above> --output text
```

Save the output from the above into a text file called heroku-kms-policy.json and add the following two statements to its Statement array:

```json
{
  "Sid": "Allow use of the key",
  "Effect": "Allow",
  "Principal": {
    "AWS": "arn:aws:iam::021876802972:root"
  },
  "Action": [
    "kms:Encrypt",
    "kms:Decrypt",
    "kms:ReEncrypt*",
    "kms:GenerateDataKey*",
    "kms:DescribeKey"
  ],
  "Resource": "*"
},
{
  "Sid": "Allow attachment of persistent resources",
  "Effect": "Allow",
  "Principal": {
    "AWS": "arn:aws:iam::021876802972:root"
  },
  "Action": [
    "kms:CreateGrant",
    "kms:ListGrants",
    "kms:RevokeGrant"
  ],
  "Resource": "*",
  "Condition": {
    "Bool": {
      "kms:GrantIsForAWSResource": "true"
    }
  }
}
```
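Because put-key-policy replaces the entire policy, the file you upload has to keep the existing statements as well as the new ones. As a sketch, assuming the key still carries the default policy that create-key attaches (a single statement granting your own account kms:*), the merged document can be generated with a heredoc. The `write_heroku_kms_policy` name is hypothetical, and its argument is your own 12-digit AWS account ID, not Heroku’s.

```shell
#!/usr/bin/env bash
# write_heroku_kms_policy: hypothetical helper that prints a complete key policy:
# the default "Enable IAM User Permissions" statement for your account, plus the
# two Heroku statements shown above (Heroku's AWS account: 021876802972).
write_heroku_kms_policy() {
  local account_id="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Enable IAM User Permissions",
      "Effect": "Allow",
      "Principal": {"AWS": "arn:aws:iam::${account_id}:root"},
      "Action": "kms:*",
      "Resource": "*"
    },
    {
      "Sid": "Allow use of the key",
      "Effect": "Allow",
      "Principal": {"AWS": "arn:aws:iam::021876802972:root"},
      "Action": ["kms:Encrypt", "kms:Decrypt", "kms:ReEncrypt*", "kms:GenerateDataKey*", "kms:DescribeKey"],
      "Resource": "*"
    },
    {
      "Sid": "Allow attachment of persistent resources",
      "Effect": "Allow",
      "Principal": {"AWS": "arn:aws:iam::021876802972:root"},
      "Action": ["kms:CreateGrant", "kms:ListGrants", "kms:RevokeGrant"],
      "Resource": "*",
      "Condition": {"Bool": {"kms:GrantIsForAWSResource": "true"}}
    }
  ]
}
EOF
}
```

One way to call it is `write_heroku_kms_policy "$(aws sts get-caller-identity --query Account --output text)" > heroku-kms-policy.json`. Keeping your own account’s statement matters: without it, the new policy could lock your account out of managing the key.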

Now, update the existing policy with the new statements:

```shell
aws kms put-key-policy --key-id <from above> --policy-name default --policy file://heroku-kms-policy.json
```

There is no output from the above, so re-run the get-key-policy command to validate that it worked.
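Reading the output by eye works, but the check can also be scripted. A minimal sketch, with `policy_trusts_heroku` as a hypothetical name: it reads a policy document on stdin and succeeds only if Heroku’s account ARN and both added statement IDs are present.

```shell
#!/usr/bin/env bash
# policy_trusts_heroku: hypothetical check that a key policy (read from stdin)
# names Heroku's AWS account and contains both statements added above.
policy_trusts_heroku() {
  local policy
  policy=$(cat)
  grep -q 'arn:aws:iam::021876802972:root' <<<"$policy" &&
    grep -q 'Allow use of the key' <<<"$policy" &&
    grep -q 'Allow attachment of persistent resources' <<<"$policy"
}
```

Against the live key, that would be `aws kms get-key-policy --policy-name default --key-id <from above> --output text | policy_trusts_heroku && echo "Heroku has access"`.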

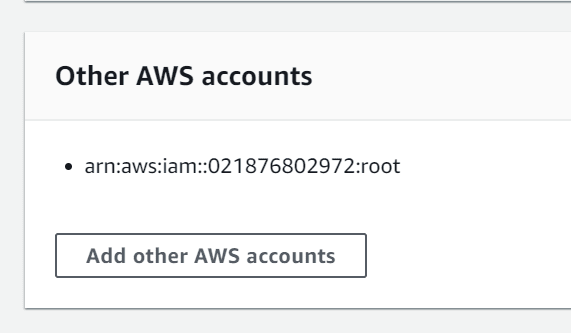

To update the policy in the console instead, browse to the key under the “Customer managed keys” section of the AWS KMS console and edit its key policy to add the two statements from above. When you switch from the policy view back to the default view, the Heroku account should appear in the “Other AWS accounts” section.

With Heroku granted access to the key, we now can use the Heroku CLI to attach a Postgres database to an app, specifying the key ARN (which should look something like arn:aws:kms:region:account ID:key/key ID):

```shell
heroku addons:create heroku-postgresql:private-7 --encryption-key CMK_ARN --app your-app-name
```
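Heroku needs the full key ARN rather than the key ID or alias. One way to get it is aws kms describe-key, whose KeyMetadata.Arn field holds the ARN; the sketch below wraps that lookup and adds a rough format check before the ARN is handed to Heroku. Both function names are hypothetical, and the pattern is an approximation covering single- and multi-Region key IDs, not an official grammar.

```shell
#!/usr/bin/env bash
# kms_key_arn: hypothetical wrapper that resolves a key ID or alias to the key's ARN
# using the KeyMetadata.Arn field returned by "aws kms describe-key".
kms_key_arn() {
  aws kms describe-key --key-id "$1" --query KeyMetadata.Arn --output text
}

# looks_like_kms_key_arn: rough format check for arn:aws:kms:REGION:ACCOUNT:key/KEY_ID.
# Alias ARNs (arn:aws:kms:REGION:ACCOUNT:alias/NAME) are rejected on purpose.
looks_like_kms_key_arn() {
  [[ "$1" =~ ^arn:aws[a-z-]*:kms:[a-z0-9-]+:[0-9]{12}:key/(mrk-)?[0-9a-f-]+$ ]]
}

# Usage sketch:
#   arn=$(kms_key_arn alias/byok-demo-key)
#   looks_like_kms_key_arn "$arn" &&
#     heroku addons:create heroku-postgresql:private-7 --encryption-key "$arn" --app your-app-name
```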

Heroku’s documentation on encrypting Postgres databases covers the process in full, including how to encrypt an existing database.

Cleaning Up

Given the cost of running a Private or Shield database on Heroku, you will probably want to delete the resources you created. We also suggest scheduling the keys in AWS for deletion promptly, since KMS waits at least seven days before a key is fully deleted. Stopping the Vault dev server removes all of its data, since the dev instance keeps everything in memory.
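A sketch of that cleanup from the command line follows. It assumes the add-on name, app name, and key ID are passed in; `cleanup_byok_demo` and the DRY_RUN switch are hypothetical conveniences, and with DRY_RUN=1 the commands are only printed. The Heroku add-on is destroyed first, while the key it depends on still works. delete-imported-key-material is included because it makes the key unusable right away, and schedule-key-deletion is given the minimum seven-day pending window.

```shell
#!/usr/bin/env bash
# maybe_run: runs a command, or only prints it when DRY_RUN=1.
maybe_run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# cleanup_byok_demo: hypothetical teardown for this tutorial's resources.
# Arguments: Heroku add-on name, Heroku app name, KMS key ID.
cleanup_byok_demo() {
  local addon="$1" app="$2" key_id="$3"
  # Destroy the Postgres add-on first, while the key it depends on still works.
  maybe_run heroku addons:destroy "$addon" --app "$app" --confirm "$app"
  # Make the KMS key unusable immediately by deleting its imported material,
  maybe_run aws kms delete-imported-key-material --key-id "$key_id"
  # then schedule the key for deletion after the minimum seven-day waiting period.
  maybe_run aws kms schedule-key-deletion --key-id "$key_id" --pending-window-in-days 7
}
```

Running it once with DRY_RUN=1 prints the three commands for review; the Vault dev server is then stopped with Ctrl+C in its terminal.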

Considerations

While there is a lot to like about the BYOK approach to AWS and Heroku, there are a few considerations that need to be highlighted:

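- While Vault supports key rotation (recent versions of the transit engine can also rotate keys on a schedule via auto_rotate_period), getting rotated keys into other systems requires external automation: cron, a CI pipeline, Nomad/Kubernetes jobs, etc.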

- Likewise, customer managed keys with imported key material must be rotated manually in AWS.

- If there is a catastrophic failure in AWS, it is possible that the key will need to be re-imported; automating, testing, and validating this should be part of any production use case.

- If keys are deleted in AWS while being used in Heroku, all services and servers that depend on that key will be shut down.
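For the first two points, a scheduled rotation job might look roughly like the sketch below. It is hypothetical throughout: it rotates the transit key with `vault write -f transit/keys/byok-demo-key/rotate`, exports the current version through the same export endpoint used earlier (with latest in place of a version number), and leaves the AWS side as comments. For imported key material, the manual rotation approach is to import the new material into a new KMS key and repoint the alias with aws kms update-alias.

```shell
#!/usr/bin/env bash
# rotate_byok_key: hypothetical rotation job for cron, a CI pipeline, or a scheduled
# Nomad/Kubernetes task. Rotates the Vault transit key, then saves the newest key
# version (base64) to a file for re-import into AWS.
# Assumes VAULT_ADDR and VAULT_TOKEN are set and the key was created exportable.
rotate_byok_key() {
  local key="${1:-byok-demo-key}" out="${2:-vault_key.b64}"
  vault write -f "transit/keys/${key}/rotate" >/dev/null || return 1
  # "latest" asks the export endpoint for the current key version only.
  curl --fail --silent --header "X-Vault-Token: ${VAULT_TOKEN}" \
    "${VAULT_ADDR}/v1/transit/export/encryption-key/${key}/latest" |
    sed -n 's/.*"keys":{"[0-9]*":"\([^"]*\)".*/\1/p' > "$out"
  # Fail if nothing was extracted (the request or the parsing failed).
  [[ -s "$out" ]] || return 1
  # AWS side (manual): create a new key with --origin EXTERNAL, repeat
  # get-parameters-for-import, the OpenSSL wrap, and import-key-material for it, then:
  #   aws kms update-alias --alias-name alias/byok-demo-key --target-key-id <new key ID>
}
```

How often to run it, and where, is a policy decision; the sketch only covers the mechanics on the Vault side.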

Conclusion

With all of this in place, we have demonstrated that maintaining local ownership of your encryption keys is both possible and desirable, and that this ownership can be extended into various cloud providers. This article only scratched the surface of the functionality offered by Vault, KMS, and Heroku. Vault itself can also manage asymmetric encryption, helping to protect data in transit and to validate identity. KMS is a cornerstone of encryption in AWS and hooks into most services, making it straightforward to keep data secure. Finally, Heroku’s ability to consume KMS keys directly adds a further layer of security without adding management overhead.
