Set up a stacked-topology high-availability cluster using kubeadm

Steps to build the high-availability cluster

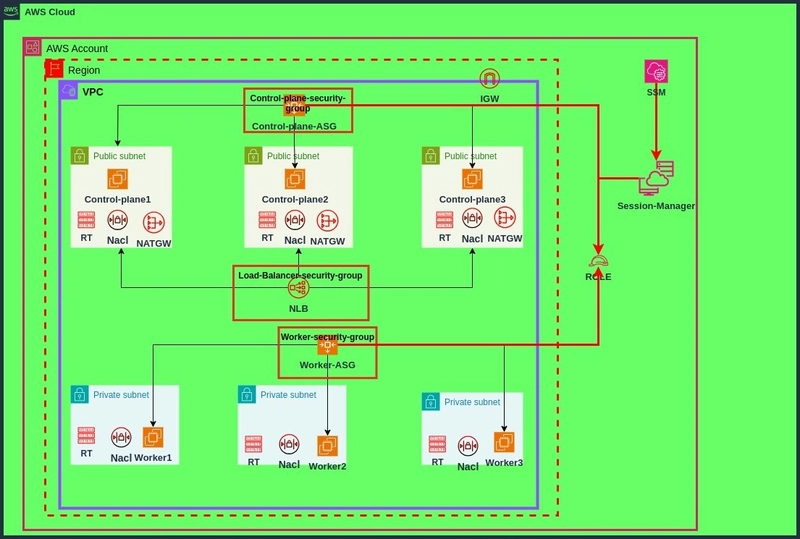

- Deploy the infrastructure on AWS for a self-hosted Kubernetes cluster.

- Create the VPC vpc1 (77.132.0.0/16) and attach an internet gateway to it.

- Create 3 public subnets, one per availability zone (AZ): 77.132.100.0/24, 77.132.101.0/24, 77.132.102.0/24.

- Create 3 private subnets, one per AZ: 77.132.103.0/24, 77.132.104.0/24, 77.132.105.0/24.

- Associate a route table with the public subnets.

- Associate each private subnet with its own route table, which routes outbound traffic through a NAT gateway.

- Deploy a network load balancer whose targets are registered in the public subnets.

- Deploy 3 security groups: one each for the load balancer, the control plane nodes, and the worker nodes.

- Use Session Manager to access all nodes by attaching an IAM role that has the required permissions.

- Deploy EC2 Auto Scaling groups for the control plane and worker nodes.
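Before deploying, it can help to confirm that the six subnet ranges listed above sit inside the VPC range and do not overlap. The following is a minimal sketch using Python's standard `ipaddress` module; the helper name `check_subnets` and the constant names are made up for this illustration and are not part of the deployment code.

```python
import ipaddress

# CIDR ranges taken from the list above.
VPC_CIDR = "77.132.0.0/16"
PUBLIC_SUBNETS = ["77.132.100.0/24", "77.132.101.0/24", "77.132.102.0/24"]
PRIVATE_SUBNETS = ["77.132.103.0/24", "77.132.104.0/24", "77.132.105.0/24"]


def check_subnets(vpc_cidr, subnet_cidrs):
    """Return a list of problems: subnets outside the VPC or overlapping each other."""
    vpc = ipaddress.ip_network(vpc_cidr)
    subnets = [ipaddress.ip_network(cidr) for cidr in subnet_cidrs]
    problems = []
    # Every subnet must be fully contained in the VPC range.
    for subnet in subnets:
        if not subnet.subnet_of(vpc):
            problems.append(f"{subnet} is outside {vpc}")
    # No two subnets may share addresses.
    for i, first in enumerate(subnets):
        for second in subnets[i + 1:]:
            if first.overlaps(second):
                problems.append(f"{first} overlaps {second}")
    return problems


if __name__ == "__main__":
    print(check_subnets(VPC_CIDR, PUBLIC_SUBNETS + PRIVATE_SUBNETS))
```

An empty list means the plan is consistent; any string in the list names the subnet that needs to change.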

To deploy a high-availability cluster:

1. Run 3 control plane nodes, so that the control plane can still elect a leader and keep working when one node fails.

2. Follow the quorum formula: quorum = floor(N/2) + 1, where N is the number of control plane nodes.
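As a rough sketch of the quorum formula, the two small functions below compute the majority size and the number of node failures a cluster of a given size can absorb. The function names are chosen for this illustration only.

```python
def quorum(members: int) -> int:
    """Smallest majority of `members` voting nodes: floor(N/2) + 1."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """How many members can fail while a majority is still available."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5):
        print(f"N={n}: quorum={quorum(n)}, tolerated failures={fault_tolerance(n)}")
```

Note that 4 nodes tolerate the same single failure as 3 nodes, which is why odd control plane sizes are the usual choice.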

Example: floor(3/2) + 1 = 2, so the control plane is considered healthy as long as at least 2 of the 3 control plane nodes are ready to take part in leader election.

To set up the high-availability control plane:

1. Disable swap.

2. Update the kernel parameters.

3. Install the container runtime, containerd.

4. Install runc.

5. Install the CNI plugins.

6. Install kubeadm, kubelet, and kubectl.

7. Optionally, check the versions of kubeadm, kubelet, and kubectl.

8. Initialize the control plane with kubeadm (the --node-name flag is optional):

sudo kubeadm init --control-plane-endpoint "Load-Balancer-DNS:6443" --pod-network-cidr 192.168.0.0/16 --upload-certs [--node-name master1]

9. Install the Calico CNI plugin 3.29.3.

When you join the other control plane nodes, use:

sudo kubeadm join mylb-b12f4818ac02ac54.elb.us-east-1.amazonaws.com:6443 --token wme2w0.ft4sn3x0tc2035bu \
--discovery-token-ca-cert-hash sha256:a4e516c840d20093ef2262c57b3afb171be07cf0ec0514de8198b91f46418b36 \
--control-plane --certificate-key 403d1068384eebe16c1a50fc25e81b24ac7ccef26423244af9e1966e80531f9b --node-name NODE_NAME

Note: give each node a name with --node-name (replace NODE_NAME with the name you want).

To set up the worker nodes:

- Disable swap.

- Update the kernel parameters.

- Install the container runtime, containerd.

- Install runc.

- Install the CNI plugins.

- Install kubeadm, kubelet, and kubectl.

- Optionally, check the versions of kubeadm, kubelet, and kubectl.

- Copy the kubeconfig from a control plane node to the worker node's kubeconfig.

- Join the worker node to the cluster (replace NODE_NAME with the name you want):

sudo kubeadm join mylb-b12f4818ac02ac54.elb.us-east-1.amazonaws.com:6443 --token wme2w0.ft4sn3x0tc2035bu \
--discovery-token-ca-cert-hash sha256:a4e516c840d20093ef2262c57b3afb171be07cf0ec0514de8198b91f46418b36 --node-name NODE_NAME

If you forgot or lost the join command that kubeadm prints when bootstrapping finishes, generate a new one on a control plane node:

sudo kubeadm token create --print-join-command
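A join command that was copied by hand is easy to truncate. The sketch below pulls the endpoint, token, and CA certificate hash out of a `kubeadm join` command line and checks their shape: a bootstrap token is 6 lowercase alphanumeric characters, a dot, then 16 more, and the hash is `sha256:` followed by 64 hex digits. The function name `parse_join_command` and the `worker1` node name in the example are assumptions made for this illustration; it only checks the format, not whether the token is still valid on the cluster.

```python
import re

# Expected shapes of a kubeadm bootstrap token and a CA certificate hash.
TOKEN_RE = re.compile(r"^[a-z0-9]{6}\.[a-z0-9]{16}$")
CA_HASH_RE = re.compile(r"^sha256:[0-9a-f]{64}$")


def parse_join_command(command: str) -> dict:
    """Extract endpoint, token and CA hash from a `kubeadm join` command line."""
    # Treat shell line continuations (backslash + newline) as plain spaces.
    words = command.replace("\\\n", " ").split()
    if "join" not in words:
        raise ValueError("not a kubeadm join command")
    endpoint = words[words.index("join") + 1]
    token = words[words.index("--token") + 1]
    ca_hash = words[words.index("--discovery-token-ca-cert-hash") + 1]
    if not TOKEN_RE.match(token):
        raise ValueError(f"malformed token: {token}")
    if not CA_HASH_RE.match(ca_hash):
        raise ValueError(f"malformed CA cert hash: {ca_hash}")
    return {"endpoint": endpoint, "token": token, "ca_hash": ca_hash}


# Example values copied from the join command above; the node name is hypothetical.
EXAMPLE = (
    "sudo kubeadm join mylb-b12f4818ac02ac54.elb.us-east-1.amazonaws.com:6443 "
    "--token wme2w0.ft4sn3x0tc2035bu "
    "--discovery-token-ca-cert-hash "
    "sha256:a4e516c840d20093ef2262c57b3afb171be07cf0ec0514de8198b91f46418b36 "
    "--node-name worker1"
)

if __name__ == "__main__":
    print(parse_join_command(EXAMPLE))
```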

master.sh

#!/bin/bash

# disable swap

sudo swapoff -a

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# update kernel params

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

sudo sysctl --system

# install container runtime ,containerd

curl -LO https://github.com/containerd/containerd/releases/download/v2.0.4/containerd-2.0.4-linux-amd64.tar.gz

sudo tar Cxzvf /usr/local containerd-2.0.4-linux-amd64.tar.gz

curl -LO https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

sudo mkdir -p /usr/local/lib/systemd/system/

sudo mv containerd.service /usr/local/lib/systemd/system/

sudo mkdir -p /etc/containerd

sudo containerd config default | sudo tee /etc/containerd/config.toml

sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

sudo systemctl daemon-reload

sudo systemctl enable containerd --now

# install runc

curl -LO https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.amd64

sudo install -m 755 runc.amd64 /usr/local/sbin/runc

# install cni

curl -LO "https://github.com/containernetworking/plugins/releases/download/v1.6.2/cni-plugins-linux-amd64-v1.6.2.tgz"

sudo mkdir -p /opt/cni/bin

sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.6.2.tgz

# install kubeadm, kubelet, kubectl

sudo apt-get update -y

sudo apt-get install -y apt-transport-https ca-certificates curl gpg

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.32/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.32/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update -y

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

kubeadm version

kubelet --version

kubectl version --client

# initialize the first control plane node of the HA cluster with kubeadm

sudo kubeadm init --control-plane-endpoint mylb-030c3a1c6c4fae6a.elb.us-east-1.amazonaws.com:6443 --pod-network-cidr 192.168.0.0/16 --upload-certs --node-name master1

# configure crictl to work with containerd

sudo crictl config --set runtime-endpoint=unix:///run/containerd/containerd.sock

sudo chown $(id -u):$(id -g) /var/run/containerd

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# This command changes the ownership of the Kubernetes admin configuration file

# - $(id -u) gets the current user's ID number

# - $(id -g) gets the current user's group ID number

# - /etc/kubernetes/admin.conf is the Kubernetes admin config file containing cluster credentials

# - chown changes the owner and group of the file to match the current user

# This allows the current user to access the Kubernetes cluster without needing sudo

sudo chown $(id -u):$(id -g) /etc/kubernetes/admin.conf

export KUBECONFIG=/etc/kubernetes/admin.conf

# install calico cni plugin 3.29.3

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.29.3/manifests/tigera-operator.yaml

curl -o custom-resources.yaml https://raw.githubusercontent.com/projectcalico/calico/v3.29.3/manifests/custom-resources.yaml

kubectl create -f custom-resources.yaml

# alias kubectl utility

echo 'alias k=kubectl' >> ~/.bashrc && source ~/.bashrc
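The first steps of the script (swap off, kernel modules loaded, IP forwarding on) are easy to verify after the fact. The helpers below are a minimal sketch that inspects the text of the relevant `/proc` files; the function names are invented for this illustration. One caveat: a module that is compiled into the kernel does not appear in `/proc/modules`, so a "missing" result there is only a hint.

```python
def swap_is_off(proc_swaps: str) -> bool:
    """/proc/swaps holds a header line plus one line per active swap device."""
    lines = [line for line in proc_swaps.splitlines() if line.strip()]
    return len(lines) <= 1


def missing_modules(proc_modules: str, wanted=("overlay", "br_netfilter")) -> list:
    """Return the wanted kernel modules that are not listed in /proc/modules."""
    loaded = {line.split()[0] for line in proc_modules.splitlines() if line.strip()}
    return [name for name in wanted if name not in loaded]


def ip_forward_enabled(value: str) -> bool:
    """net.ipv4.ip_forward is exposed as '0' or '1' under /proc/sys."""
    return value.strip() == "1"
```

On a node, the inputs would come from reading `/proc/swaps`, `/proc/modules`, and `/proc/sys/net/ipv4/ip_forward`, for example with `pathlib.Path(...).read_text()`.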

master-ha1.sh

#!/bin/bash

# disable swap

sudo swapoff -a

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# update kernel params

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

sudo sysctl --system

# install container runtime ,containerd

curl -LO https://github.com/containerd/containerd/releases/download/v2.0.4/containerd-2.0.4-linux-amd64.tar.gz

sudo tar Cxzvf /usr/local containerd-2.0.4-linux-amd64.tar.gz

curl -LO https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

sudo mkdir -p /usr/local/lib/systemd/system/

sudo mv containerd.service /usr/local/lib/systemd/system/

sudo mkdir -p /etc/containerd

sudo containerd config default | sudo tee /etc/containerd/config.toml

sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

sudo systemctl daemon-reload

sudo systemctl enable containerd --now

# install runc

curl -LO https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.amd64

sudo install -m 755 runc.amd64 /usr/local/sbin/runc

# install cni

curl -LO "https://github.com/containernetworking/plugins/releases/download/v1.6.2/cni-plugins-linux-amd64-v1.6.2.tgz"

sudo mkdir -p /opt/cni/bin

sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.6.2.tgz

# install kubeadm, kubelet, kubectl

sudo apt-get update -y

sudo apt-get install -y apt-transport-https ca-certificates curl gpg

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.32/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.32/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update -y

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

kubeadm version

kubelet --version

kubectl version --client

# join this node to the HA cluster as an additional control plane node

sudo kubeadm join mylb-030c3a1c6c4fae6a.elb.us-east-1.amazonaws.com:6443 --token 236pfl.0f9p8r06gcmfmj7p \
--discovery-token-ca-cert-hash sha256:992eb054da8690fbfc3ccbf2b0b30e5ce323c6b3444b3f11724dafa072e5f45a \
--control-plane --certificate-key 3fb4811956c257fd5407fb6aafd099077eb66a2da357683447b1b186d4dd890c --node-name master2

# sudo kubeadm join mylb-b12f4818ac02ac54.elb.us-east-1.amazonaws.com:6443 --token wme2w0.ft4sn3x0tc2035bu \
# --discovery-token-ca-cert-hash sha256:a4e516c840d20093ef2262c57b3afb171be07cf0ec0514de8198b91f46418b36 \
# --control-plane --certificate-key 403d1068384eebe16c1a50fc25e81b24ac7ccef26423244af9e1966e80531f9b --node-name master2

# configure crictl to work with containerd

sudo crictl config --set runtime-endpoint=unix:///run/containerd/containerd.sock

sudo chown $(id -u):$(id -g) /var/run/containerd

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

sudo chown $(id -u):$(id -g) /etc/kubernetes/admin.conf

export KUBECONFIG=/etc/kubernetes/admin.conf

# Note: when joining additional control plane nodes, there is no need to install the Calico CNI 3.29.3 again; it is rolled out to the new node automatically.

# alias kubectl utility

echo 'alias k=kubectl' >> ~/.bashrc && source ~/.bashrc

master-ha2.sh

#!/bin/bash

# disable swap

sudo swapoff -a

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# update kernel params

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

sudo sysctl --system

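# install container runtime, containerd

curl -LO https://github.com/containerd/containerd/releases/download/v2.0.4/containerd-2.0.4-linux-amd64.tar.gz

sudo tar Cxzvf /usr/local containerd-2.0.4-linux-amd64.tar.gz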

curl -LO https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

sudo mkdir -p /usr/local/lib/systemd/system/

sudo mv containerd.service /usr/local/lib/systemd/system/

sudo mkdir -p /etc/containerd

sudo containerd config default | sudo tee /etc/containerd/config.toml

sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

sudo systemctl daemon-reload

sudo systemctl enable containerd --now

# install runc

curl -LO https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.amd64

sudo install -m 755 runc.amd64 /usr/local/sbin/runc

# install cni

curl -LO "https://github.com/containernetworking/plugins/releases/download/v1.6.2/cni-plugins-linux-amd64-v1.6.2.tgz"

sudo mkdir -p /opt/cni/bin

sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.6.2.tgz

# install kubeadm, kubelet, kubectl

sudo apt-get update -y

sudo apt-get install -y apt-transport-https ca-certificates curl gpg

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.32/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.32/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update -y

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

kubeadm version

kubelet --version

kubectl version --client

# join this node to the HA cluster as an additional control plane node

sudo kubeadm join mylb-030c3a1c6c4fae6a.elb.us-east-1.amazonaws.com:6443 --token 236pfl.0f9p8r06gcmfmj7p \
--discovery-token-ca-cert-hash sha256:992eb054da8690fbfc3ccbf2b0b30e5ce323c6b3444b3f11724dafa072e5f45a \
--control-plane --certificate-key 3fb4811956c257fd5407fb6aafd099077eb66a2da357683447b1b186d4dd890c --node-name master3

# sudo kubeadm join mylb-b12f4818ac02ac54.elb.us-east-1.amazonaws.com:6443 --token wme2w0.ft4sn3x0tc2035bu \
# --discovery-token-ca-cert-hash sha256:a4e516c840d20093ef2262c57b3afb171be07cf0ec0514de8198b91f46418b36 \
# --control-plane --certificate-key 403d1068384eebe16c1a50fc25e81b24ac7ccef26423244af9e1966e80531f9b --node-name master3

# configure crictl to work with containerd

sudo crictl config --set runtime-endpoint=unix:///run/containerd/containerd.sock

sudo chown $(id -u):$(id -g) /var/run/containerd

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

sudo chown $(id -u):$(id -g) /etc/kubernetes/admin.conf

export KUBECONFIG=/etc/kubernetes/admin.conf

# alias kubectl utility

echo 'alias k=kubectl' >> ~/.bashrc && source ~/.bashrc

For the worker nodes:

worker1.sh

#!/bin/bash

# disable swap

sudo swapoff -a

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# update kernel params

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

sudo sysctl --system

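# install container runtime, containerd

curl -LO https://github.com/containerd/containerd/releases/download/v2.0.4/containerd-2.0.4-linux-amd64.tar.gz

sudo tar Cxzvf /usr/local containerd-2.0.4-linux-amd64.tar.gz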

curl -LO https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

sudo mkdir -p /usr/local/lib/systemd/system/

sudo mv containerd.service /usr/local/lib/systemd/system/

sudo mkdir -p /etc/containerd

sudo containerd config default | sudo tee /etc/containerd/config.toml

sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

sudo systemctl daemon-reload

sudo systemctl enable containerd --now

# install runc

curl -LO https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.amd64

sudo install -m 755 runc.amd64 /usr/local/sbin/runc

# install cni

curl -LO "https://github.com/containernetworking/plugins/releases/download/v1.6.2/cni-plugins-linux-amd64-v1.6.2.tgz"

sudo mkdir -p /opt/cni/bin

sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.6.2.tgz

# install kubeadm, kubelet, kubectl

sudo apt-get update -y

sudo apt-get install -y apt-transport-https ca-certificates curl gpg

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.32/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.32/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update -y

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

kubeadm version

kubelet --version

kubectl version --client

# join this worker node to the HA cluster

# run this on a control plane node to print a fresh join command:

# sudo kubeadm token create --print-join-command

# join command from a different cluster run (use only the one printed by your own control plane):

# sudo kubeadm join mylb-7ede46b278348924.elb.us-east-1.amazonaws.com:6443 --token s1pzb2.e49unzo701usj6er \
# --discovery-token-ca-cert-hash sha256:3d5d6836796b31f8d638feef97711973cbb62388cd549fb7676016e4d11d3689 --node-name worker1

sudo kubeadm join mylb-030c3a1c6c4fae6a.elb.us-east-1.amazonaws.com:6443 --token 236pfl.0f9p8r06gcmfmj7p \
--discovery-token-ca-cert-hash sha256:992eb054da8690fbfc3ccbf2b0b30e5ce323c6b3444b3f11724dafa072e5f45a --node-name worker1

# configure crictl to work with containerd

sudo crictl config --set runtime-endpoint=unix:///run/containerd/containerd.sock

sudo chown $(id -u):$(id -g) /run/containerd/containerd.sock

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

sudo chown $(id -u):$(id -g) /etc/kubernetes/admin.conf

export KUBECONFIG=/etc/kubernetes/admin.conf

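# note: copy the kubeconfig from a control plane node to this worker node first; kubeadm join does not create /etc/kubernetes/admin.conf on worker nodes

# alias kubectl utility

echo 'alias k=kubectl' >> ~/.bashrc && source ~/.bashrc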

worker2.sh

#!/bin/bash

# disable swap

sudo swapoff -a

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# update kernel params

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

sudo sysctl --system

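# install container runtime, containerd

curl -LO https://github.com/containerd/containerd/releases/download/v2.0.4/containerd-2.0.4-linux-amd64.tar.gz

sudo tar Cxzvf /usr/local containerd-2.0.4-linux-amd64.tar.gz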

curl -LO https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

sudo mkdir -p /usr/local/lib/systemd/system/

sudo mv containerd.service /usr/local/lib/systemd/system/

sudo mkdir -p /etc/containerd

sudo containerd config default | sudo tee /etc/containerd/config.toml

sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

sudo systemctl daemon-reload

sudo systemctl enable containerd --now

# install runc

curl -LO https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.amd64

sudo install -m 755 runc.amd64 /usr/local/sbin/runc

# install cni

curl -LO "https://github.com/containernetworking/plugins/releases/download/v1.6.2/cni-plugins-linux-amd64-v1.6.2.tgz"

sudo mkdir -p /opt/cni/bin

sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.6.2.tgz

# install kubeadm, kubelet, kubectl

sudo apt-get update -y

sudo apt-get install -y apt-transport-https ca-certificates curl gpg

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.32/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.32/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update -y

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

kubeadm version

kubelet --version

kubectl version --client

# join this worker node to the HA cluster

# run this on a control plane node to print a fresh join command:

# sudo kubeadm token create --print-join-command

# join command from a different cluster run (use only the one printed by your own control plane):

# sudo kubeadm join mylb-7ede46b278348924.elb.us-east-1.amazonaws.com:6443 --token s1pzb2.e49unzo701usj6er \
# --discovery-token-ca-cert-hash sha256:3d5d6836796b31f8d638feef97711973cbb62388cd549fb7676016e4d11d3689 --node-name worker2

sudo kubeadm join mylb-7966c35b34cfe6a2.elb.us-east-1.amazonaws.com:6443 --token wedeoe.3dktwv0s0a1tfudi \
--discovery-token-ca-cert-hash sha256:1104fe6342e316036524c87a994e47035f091f6869a4f84292cecc8dd4f279bd --node-name worker2

# configure crictl to work with containerd

sudo crictl config --set runtime-endpoint=unix:///run/containerd/containerd.sock

sudo chown $(id -u):$(id -g) /run/containerd/containerd.sock

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

sudo chown $(id -u):$(id -g) /etc/kubernetes/admin.conf

export KUBECONFIG=/etc/kubernetes/admin.conf

# alias kubectl utility

echo 'alias k=kubectl' >> ~/.bashrc && source ~/.bashrc

worker3.sh

#!/bin/bash

# disable swap

sudo swapoff -a

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# update kernel params

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

sudo sysctl --system

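# install container runtime, containerd

curl -LO https://github.com/containerd/containerd/releases/download/v2.0.4/containerd-2.0.4-linux-amd64.tar.gz

sudo tar Cxzvf /usr/local containerd-2.0.4-linux-amd64.tar.gz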

curl -LO https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

sudo mkdir -p /usr/local/lib/systemd/system/

sudo mv containerd.service /usr/local/lib/systemd/system/

sudo mkdir -p /etc/containerd

sudo containerd config default | sudo tee /etc/containerd/config.toml

sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/g' /etc/containerd/config.toml

sudo systemctl daemon-reload

sudo systemctl enable containerd --now

# install runc

curl -LO https://github.com/opencontainers/runc/releases/download/v1.1.12/runc.amd64

sudo install -m 755 runc.amd64 /usr/local/sbin/runc

# install cni

curl -LO "https://github.com/containernetworking/plugins/releases/download/v1.6.2/cni-plugins-linux-amd64-v1.6.2.tgz"

sudo mkdir -p /opt/cni/bin

sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.6.2.tgz

# install kubeadm, kubelet, kubectl

sudo apt-get update -y

sudo apt-get install -y apt-transport-https ca-certificates curl gpg

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.32/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.32/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update -y

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

kubeadm version

kubelet --version

kubectl version --client

# join this worker node to the HA cluster

# run this on a control plane node to print a fresh join command:

# sudo kubeadm token create --print-join-command

# join command from a different cluster run (use only the one printed by your own control plane):

# sudo kubeadm join mylb-7ede46b278348924.elb.us-east-1.amazonaws.com:6443 --token s1pzb2.e49unzo701usj6er \
# --discovery-token-ca-cert-hash sha256:3d5d6836796b31f8d638feef97711973cbb62388cd549fb7676016e4d11d3689 --node-name worker3

sudo kubeadm join mylb-7966c35b34cfe6a2.elb.us-east-1.amazonaws.com:6443 --token wedeoe.3dktwv0s0a1tfudi \
--discovery-token-ca-cert-hash sha256:1104fe6342e316036524c87a994e47035f091f6869a4f84292cecc8dd4f279bd --node-name worker3

# configure crictl to work with containerd

sudo crictl config --set runtime-endpoint=unix:///run/containerd/containerd.sock

sudo chown $(id -u):$(id -g) /run/containerd/containerd.sock

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

sudo chown $(id -u):$(id -g) /etc/kubernetes/admin.conf

export KUBECONFIG=/etc/kubernetes/admin.conf

# alias kubectl utility

echo 'alias k=kubectl' >> ~/.bashrc && source ~/.bashrc

Note: remember to copy the kubeconfig from a control plane node to each worker node.

To deploy the infrastructure, use Pulumi with Python, and use Pulumi ESC to store the configuration key/value pairs.
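The Pulumi program reads every setting with `cfg1.require(...)`, which fails when a key is absent. As a hedged sketch, the check below lists the keys requested in the portion of the program shown here and reports which ones a plain dictionary is missing. The helper `missing_keys` is invented for this illustration. The example values are assumptions: the CIDR ranges are mapped from the list at the top of this page, `0.0.0.0/0` is assumed for `any_ipv4_traffic`, and the `myips`, `ami`, and `instance-type` values are placeholders, not the author's settings.

```python
# Config keys requested via cfg1.require(...) in the part of the program shown here.
REQUIRED_KEYS = (
    "block1",
    "cidr1", "cidr2", "cidr3",   # public subnets
    "cidr4", "cidr5", "cidr6",   # private subnets
    "any_ipv4_traffic",
    "myips",
    "ami",
    "instance-type",
)


def missing_keys(config: dict) -> list:
    """Return the required keys that are absent or empty in `config`."""
    return [key for key in REQUIRED_KEYS if config.get(key) in (None, "")]


# Placeholder values for illustration only (see the assumptions above).
example_config = {
    "block1": "77.132.0.0/16",
    "cidr1": "77.132.100.0/24",
    "cidr2": "77.132.101.0/24",
    "cidr3": "77.132.102.0/24",
    "cidr4": "77.132.103.0/24",
    "cidr5": "77.132.104.0/24",
    "cidr6": "77.132.105.0/24",
    "any_ipv4_traffic": "0.0.0.0/0",
    "myips": "203.0.113.10/32",
    "ami": "ami-PLACEHOLDER",
    "instance-type": "t3.medium",
}

if __name__ == "__main__":
    print("missing keys:", missing_keys(example_config))
```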

"""An AWS Python Pulumi program"""

import json

import pulumi

import pulumi_aws as aws

cfg1=pulumi.Config()

vpc1=aws.ec2.Vpc(

"vpc1",

aws.ec2.VpcArgs(

cidr_block=cfg1.require(key="block1"),

tags={

"Name" : "vpc1"

}

)

)

intgw1=aws.ec2.InternetGateway(

"intgw1",

aws.ec2.InternetGatewayArgs(

vpc_id=vpc1.id,

tags={

"Name" : "intgw1"

}

)

)

zones=["us-east-1a", "us-east-1b" , "us-east-1c"]

cidr1=cfg1.require(key="cidr1")

cidr2=cfg1.require(key="cidr2")

cidr3=cfg1.require(key="cidr3")

cidr4=cfg1.require(key="cidr4")

cidr5=cfg1.require(key="cidr5")

cidr6=cfg1.require(key="cidr6")

pubsubnets=[cidr1,cidr2,cidr3]

privsubnets=[cidr4,cidr5,cidr6]

pubnames=["pub1" , "pub2" , "pub3" ]

privnames=[ "priv1" , "priv2" , "priv3" ]

# create one public subnet per AZ; each name in pubnames is replaced by its Subnet resource

for allpub in range(len(pubnames)):

    pubnames[allpub]=aws.ec2.Subnet(

        pubnames[allpub],

        aws.ec2.SubnetArgs(

            vpc_id=vpc1.id,

            cidr_block=pubsubnets[allpub],

            availability_zone=zones[allpub],

            map_public_ip_on_launch=True,

            tags={

                "Name" : pubnames[allpub]

            }

        )

    )

table1=aws.ec2.RouteTable(

"table1",

aws.ec2.RouteTableArgs(

vpc_id=vpc1.id,

routes=[

aws.ec2.RouteTableRouteArgs(

cidr_block=cfg1.require(key="any_ipv4_traffic"),

gateway_id=intgw1.id

)

],

tags={

"Name" : "table1"

}

)

)

pub_associate1=aws.ec2.RouteTableAssociation(

"pub_associate1",

aws.ec2.RouteTableAssociationArgs(

subnet_id=pubnames[0].id,

route_table_id=table1.id

)

)

pub_associate2=aws.ec2.RouteTableAssociation(

"pub_associate2",

aws.ec2.RouteTableAssociationArgs(

subnet_id=pubnames[1].id,

route_table_id=table1.id

)

)

pub_associate3=aws.ec2.RouteTableAssociation(

"pub_associate3",

aws.ec2.RouteTableAssociationArgs(

subnet_id=pubnames[2].id,

route_table_id=table1.id

)

)

# create one private subnet per AZ; each name in privnames is replaced by its Subnet resource

for allpriv in range(len(privnames)):

    privnames[allpriv]=aws.ec2.Subnet(

        privnames[allpriv],

        aws.ec2.SubnetArgs(

            vpc_id=vpc1.id,

            cidr_block=privsubnets[allpriv],

            availability_zone=zones[allpriv],

            tags={

                "Name" : privnames[allpriv]

            }

        )

    )

eips=[ "eip1" , "eip2" , "eip3" ]

# allocate one Elastic IP per NAT gateway

for alleips in range(len(eips)):

    eips[alleips]=aws.ec2.Eip(

        eips[alleips],

        aws.ec2.EipArgs(

            domain="vpc",

            tags={

                "Name" : eips[alleips]

            }

        )

    )

nats=[ "natgw1" , "natgw2" , "natgw3" ]

mynats= {

"nat1" : aws.ec2.NatGateway(

"nat1",

connectivity_type="public",

subnet_id=pubnames[0].id,

allocation_id=eips[0].allocation_id,

tags={

"Name" : "nat1"

}

),

"nat2" : aws.ec2.NatGateway(

"nat2",

connectivity_type="public",

subnet_id=pubnames[1].id,

allocation_id=eips[1].allocation_id,

tags={

"Name" : "nat2"

}

),

"nat3" : aws.ec2.NatGateway(

"nat3",

connectivity_type="public",

subnet_id=pubnames[2].id,

allocation_id=eips[2].allocation_id,

tags={

"Name" : "nat3"

}

),

}

priv2table2=aws.ec2.RouteTable(

"priv2table2",

aws.ec2.RouteTableArgs(

vpc_id=vpc1.id,

routes=[

aws.ec2.RouteTableRouteArgs(

cidr_block=cfg1.require(key="any_ipv4_traffic"),

nat_gateway_id=mynats["nat1"].id

)

],

tags={

"Name" : "priv2table2"

}

)

)

priv3table3=aws.ec2.RouteTable(

"priv3table3",

aws.ec2.RouteTableArgs(

vpc_id=vpc1.id,

routes=[

aws.ec2.RouteTableRouteArgs(

cidr_block=cfg1.require(key="any_ipv4_traffic"),

nat_gateway_id=mynats["nat2"].id

)

],

tags={

"Name" : "priv3table3"

}

)

)

priv4table4=aws.ec2.RouteTable(

"priv4table4",

aws.ec2.RouteTableArgs(

vpc_id=vpc1.id,

routes=[

aws.ec2.RouteTableRouteArgs(

cidr_block=cfg1.require(key="any_ipv4_traffic"),

nat_gateway_id=mynats["nat3"].id

)

],

tags={

"Name" : "priv4table4"

}

)

)

privassociate1=aws.ec2.RouteTableAssociation(

"privassociate1",

aws.ec2.RouteTableAssociationArgs(

subnet_id=privnames[0].id,

route_table_id=priv2table2.id

)

)

privassociate2=aws.ec2.RouteTableAssociation(

"privassociate2",

aws.ec2.RouteTableAssociationArgs(

subnet_id=privnames[1].id,

route_table_id=priv3table3.id

)

)

privassociate3=aws.ec2.RouteTableAssociation(

"privassociate3",

aws.ec2.RouteTableAssociationArgs(

subnet_id=privnames[2].id,

route_table_id=priv4table4.id

)

)

nacl_ingress_trafic=[

aws.ec2.NetworkAclIngressArgs(

from_port=22,

to_port=22,

protocol="tcp",

cidr_block=cfg1.require(key="myips"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=100

),

aws.ec2.NetworkAclIngressArgs(

from_port=22,

to_port=22,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="deny",

rule_no=101

),

aws.ec2.NetworkAclIngressArgs(

from_port=80,

to_port=80,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=200

),

aws.ec2.NetworkAclIngressArgs(

from_port=443,

to_port=443,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=300

),

aws.ec2.NetworkAclIngressArgs(

from_port=1024,

to_port=65535,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=400

),

aws.ec2.NetworkAclIngressArgs(

from_port=0,

to_port=0,

protocol="-1",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=500

)

]

nacl_egress_trafic=[

aws.ec2.NetworkAclEgressArgs(

from_port=22,

to_port=22,

protocol="tcp",

cidr_block=cfg1.require(key="myips"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=100

),

aws.ec2.NetworkAclEgressArgs(

from_port=22,

to_port=22,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="deny",

rule_no=101

),

aws.ec2.NetworkAclEgressArgs(

from_port=80,

to_port=80,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=200

),

aws.ec2.NetworkAclEgressArgs(

from_port=443,

to_port=443,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=300

),

aws.ec2.NetworkAclEgressArgs(

from_port=1024,

to_port=65535,

protocol="tcp",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=400

),

aws.ec2.NetworkAclEgressArgs(

from_port=0,

to_port=0,

protocol="-1",

cidr_block=cfg1.require(key="any_ipv4_traffic"),

icmp_code=0,

icmp_type=0,

action="allow",

rule_no=500

)

]

mynacls=aws.ec2.NetworkAcl(

"mynacls",

aws.ec2.NetworkAclArgs(

vpc_id=vpc1.id,

ingress=nacl_ingress_trafic,

egress=nacl_egress_trafic,

tags={

"Name": "mynacls"

}

)

)

# associate the network ACL with every public and private subnet (one association per subnet)

nacl1=aws.ec2.NetworkAclAssociation(

"nacl1",

aws.ec2.NetworkAclAssociationArgs(

network_acl_id=mynacls.id,

subnet_id=pubnames[0].id

)

)

nacl2=aws.ec2.NetworkAclAssociation(

"nacl2",

aws.ec2.NetworkAclAssociationArgs(

network_acl_id=mynacls.id,

subnet_id=pubnames[1].id

)

)

nacl3=aws.ec2.NetworkAclAssociation(

"nacl3",

aws.ec2.NetworkAclAssociationArgs(

network_acl_id=mynacls.id,

subnet_id=pubnames[2].id

)

)

nacl4=aws.ec2.NetworkAclAssociation(

"nacl4",

aws.ec2.NetworkAclAssociationArgs(

network_acl_id=mynacls.id,

subnet_id=privnames[0].id

)

)

nacl5=aws.ec2.NetworkAclAssociation(

"nacl5",

aws.ec2.NetworkAclAssociationArgs(

network_acl_id=mynacls.id,

subnet_id=privnames[1].id

)

)

nacl6=aws.ec2.NetworkAclAssociation(

"nacl6",

aws.ec2.NetworkAclAssociationArgs(

network_acl_id=mynacls.id,

subnet_id=privnames[2].id

)

)

master_ingress_rule={

"rule2":aws.ec2.SecurityGroupIngressArgs(

from_port=0,

to_port=0,

protocol="-1",

cidr_blocks=[ cfg1.require(key="any_ipv4_traffic") ]

),

}

master_egress_rule={

"rule5": aws.ec2.SecurityGroupEgressArgs(

from_port=0,

to_port=0,

protocol="-1",

cidr_blocks=[ cfg1.require(key="any_ipv4_traffic") ]

)

}

mastersecurity=aws.ec2.SecurityGroup(

"mastersecurity",

aws.ec2.SecurityGroupArgs(

name="mastersecurity",

vpc_id=vpc1.id,

tags={

"Name" : "mastersecurity"

},

ingress=[

master_ingress_rule["rule2"],

],

egress=[master_egress_rule["rule5"]]

)

)

worker_ingress_rule={

"rule2": aws.ec2.SecurityGroupIngressArgs(

from_port=0,

to_port=0,

protocol="-1",

cidr_blocks=[cfg1.require(key="any_ipv4_traffic")]

),

}

worker_egress_rule={

"rule5": aws.ec2.SecurityGroupEgressArgs(

from_port=0,

to_port=0,

protocol="-1",

cidr_blocks=[ cfg1.require(key="any_ipv4_traffic") ]

)

}

workersecurity=aws.ec2.SecurityGroup(

"workersecurity",

aws.ec2.SecurityGroupArgs(

name="workersecurity",

vpc_id=vpc1.id,

tags={

"Name" : "workersecurity"

},

ingress=[

worker_ingress_rule["rule2"]

],

egress=[worker_egress_rule["rule5"]]

)

)

lbsecurity=aws.ec2.SecurityGroup(

"lbsecurity",

aws.ec2.SecurityGroupArgs(

name="lbsecurity",

vpc_id=vpc1.id,

tags={

"Name" : "lbsecurity"

},

ingress=[

aws.ec2.SecurityGroupIngressArgs(

from_port=6443,

to_port=6443,

protocol="tcp",

cidr_blocks=[cfg1.require(key="any_ipv4_traffic")] # for kube-apiserver

),

],

egress=[

aws.ec2.SecurityGroupEgressArgs(

from_port=0,

to_port=0,

protocol="-1",

cidr_blocks=[cfg1.require(key="any_ipv4_traffic")]

)

]

)

)

mylb=aws.lb.LoadBalancer(

"mylb",

aws.lb.LoadBalancerArgs(

name="mylb",

load_balancer_type="network",

idle_timeout=300,

ip_address_type="ipv4",

security_groups=[lbsecurity.id],

subnets=[

pubnames[0].id,

pubnames[1].id,

pubnames[2].id

],

tags={

"Name" : "mylb"

},

internal=False,

enable_cross_zone_load_balancing=True

)

)

mytargets2=aws.lb.TargetGroup(

"mytargets2",

aws.lb.TargetGroupArgs(

name="mytargets2",

port=6443,

protocol="TCP",

target_type="instance",

vpc_id=vpc1.id,

tags={

"Name" : "mytargets2"

},

health_check=aws.lb.TargetGroupHealthCheckArgs(

enabled=True,

interval=60,

port="traffic-port",

healthy_threshold=3,

unhealthy_threshold=3,

timeout=30,

matcher="200-599"

)

)

)

listener1=aws.lb.Listener(

"listener1",

aws.lb.ListenerArgs(

load_balancer_arn=mylb.arn,

port=6443,

protocol="TCP",

default_actions=[

aws.lb.ListenerDefaultActionArgs(

type="forward",

target_group_arn=mytargets2.arn

),

]

)

)

clusters_role=aws.iam.Role(

"clusters_role",

aws.iam.RoleArgs(

name="clustersroles",

assume_role_policy=json.dumps({

"Version" : "2012-10-17",

"Statement" : [{

"Effect" : "Allow",

"Action": "sts:AssumeRole",

"Principal": {

"Service" : "ec2.amazonaws.com"

}

}]

})

)

)

ssmattach1=aws.iam.RolePolicyAttachment(

"ssmattach1",

aws.iam.RolePolicyAttachmentArgs(

role=clusters_role.name,

policy_arn=aws.iam.ManagedPolicy.AMAZON_SSM_MANAGED_EC2_INSTANCE_DEFAULT_POLICY

)

)

myprofile=aws.iam.InstanceProfile(

"myprofile",

aws.iam.InstanceProfileArgs(

name="myprofile",

role=clusters_role.name,

tags={

"Name" : "myprofile"

}

)

)

mastertemps=aws.ec2.LaunchTemplate(

"mastertemps",

aws.ec2.LaunchTemplateArgs(

image_id=cfg1.require(key="ami"),

name="mastertemps",

instance_type=cfg1.require(key="instance-type"),

vpc_security_group_ids=[mastersecurity.id],

block_device_mappings=[

aws.ec2.LaunchTemplateBlockDeviceMappingArgs(

device_name="/dev/sdb",

ebs=aws.ec2.LaunchTemplateBlockDeviceMappingEbsArgs(

volume_size=8,

volume_type="gp3",

delete_on_termination=True,

encrypted="false"

)

)

],

tags={

"Name" : "mastertemps"

},

iam_instance_profile= aws.ec2.LaunchTemplateIamInstanceProfileArgs(

arn=myprofile.arn

)

)

)

controlplane=aws.autoscaling.Group(

"controlplane",

aws.autoscaling.GroupArgs(

name="controlplane",

vpc_zone_identifiers=[

pubnames[0].id,

pubnames[1].id,

pubnames[2].id

],

desired_capacity=3,

max_size=9,

min_size=1,

launch_template=aws.autoscaling.GroupLaunchTemplateArgs(

id=mastertemps.id,

version="$Latest"

),

default_cooldown=600,

tags=[

aws.autoscaling.GroupTagArgs(

key="Name",

value="controlplane",

propagate_at_launch=True

)

],

health_check_grace_period=600,

health_check_type="EC2",

)

)

lbattach=aws.autoscaling.Attachment(

"lbattach",

aws.autoscaling.AttachmentArgs(

autoscaling_group_name=controlplane.name ,

lb_target_group_arn=mytargets2.arn

)

)

workertemps=aws.ec2.LaunchTemplate(

"workertemps",

aws.ec2.LaunchTemplateArgs(

image_id=cfg1.require(key="ami"),

name="workertemps",

instance_type=cfg1.require(key="instance-type"),

vpc_security_group_ids=[workersecurity.id],  # worker nodes use the worker security group defined above

block_device_mappings=[

aws.ec2.LaunchTemplateBlockDeviceMappingArgs(

device_name="/dev/sdr",

ebs=aws.ec2.LaunchTemplateBlockDeviceMappingEbsArgs(

volume_size=8,

volume_type="gp3",

delete_on_termination=True,

encrypted="false"

)

)

],

tags={

"Name" : "workertemps"

},

iam_instance_profile=aws.ec2.LaunchTemplateIamInstanceProfileArgs(

arn=myprofile.arn

)

)

)

worker=aws.autoscaling.Group(

"worker",

aws.autoscaling.GroupArgs(

name="worker",

vpc_zone_identifiers=[

privnames[0].id,

privnames[1].id,

privnames[2].id

],

desired_capacity=3,

max_size=9,

min_size=1,

launch_template=aws.autoscaling.GroupLaunchTemplateArgs(

id=workertemps.id,

version="$Latest"

),

default_cooldown=600,

tags=[

aws.autoscaling.GroupTagArgs(

key="Name",

value="worker",

propagate_at_launch=True

)

],

health_check_grace_period=600,

health_check_type="EC2",

)

)

myssmdoc=aws.ssm.Document(

"myssmdoc",

aws.ssm.DocumentArgs(

name="mydoc",

document_format="YAML",

document_type="Command",

version_name="1.1.0",

content="""schemaVersion: '1.2'
description: Check ip configuration of a Linux instance.
parameters: {}
runtimeConfig:
  'aws:runShellScript':
    properties:
      - id: '0.aws:runShellScript'
        runCommand:
          - sudo su
          - sudo apt update -y && sudo apt upgrade -y
          - sudo apt install -y net-tools
          - sudo systemctl enable amazon-ssm-agent --now
"""

)

)

myssmassociates=aws.ssm.Association(

"myssmassociates",

aws.ssm.AssociationArgs(

name=myssmdoc.name,

association_name="myssmassociates",

targets=[

aws.ssm.AssociationTargetArgs(

key="InstanceIds",

values=["*"]

)

],

)

)

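# This lookup runs when the program executes, so instances launched by the
# Auto Scaling groups in the same `pulumi up` may only show up on a later run.
controlplanes=aws.ec2.get_instances(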

instance_tags={

"Name": "controlplane"

},

instance_state_names=["running"],

)

workers=aws.ec2.get_instances(

instance_tags={

"Name": "worker"

},

instance_state_names=["running"],

)

get_controlplanepubips=controlplanes.public_ips

get_controlplaneprivips=controlplanes.private_ips

get_workerprivips=workers.private_ips

get_loadbalancer=mylb.dns_name

pulumi.export("get_controlplanepubips", get_controlplanepubips)

pulumi.export("get_controlplaneprivips", get_controlplaneprivips)

pulumi.export("get_workerprivips", get_workerprivips)

pulumi.export("get_loadbalancer", get_loadbalancer)
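The exported `get_loadbalancer` value is what the `--control-plane-endpoint` flag expects once port 6443 is appended. As a minimal sketch, assuming the outputs were saved with `pulumi stack output --json`, the helper below builds the `kubeadm init` command line from them. The function name `kubeadm_init_command` and the sample DNS name are hypothetical and only serve as illustration.

```python
import json
import shlex


def kubeadm_init_command(stack_outputs_json: str,
                         pod_cidr: str = "192.168.0.0/16") -> str:
    """Build a kubeadm init command from Pulumi stack outputs (JSON text)."""
    outputs = json.loads(stack_outputs_json)
    # "get_loadbalancer" is the export name used in the Pulumi program above.
    endpoint = f'{outputs["get_loadbalancer"]}:6443'
    args = [
        "sudo", "kubeadm", "init",
        "--control-plane-endpoint", endpoint,
        "--pod-network-cidr", pod_cidr,
        "--upload-certs",
    ]
    # Quote each argument so the result can be pasted into a shell safely.
    return " ".join(shlex.quote(a) for a in args)


# Hypothetical sample output; replace with the real JSON from the stack.
sample = '{"get_loadbalancer": "mylb-example.elb.us-east-1.amazonaws.com"}'
print(kubeadm_init_command(sample))
```

Building the command from the stack output avoids copying the load balancer DNS name by hand each time the stack is recreated.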

Note:

For the control plane security group:

allow all traffic in the inbound and outbound rules.

For the worker security group:

allow all traffic in the inbound and outbound rules.

For the network load balancer security group:

allow inbound TCP 6443 for the kube-apiserver, so that traffic can reach the registered control plane target nodes.
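One way to confirm that the load balancer rule really lets TCP 6443 through is a plain TCP connection test from a client machine. The sketch below uses only the Python standard library; the helper name `tcp_port_open` is hypothetical, and the check only shows that a TCP handshake succeeds, not that the kube-apiserver itself is healthy.

```python
import socket


def tcp_port_open(host: str, port: int = 6443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


# Usage (replace the placeholder with the exported load balancer DNS name):
# tcp_port_open("<load-balancer-dns>", 6443)
```

If the function returns False, the usual suspects are the load balancer security group, the target group health checks, or a control plane node that has not been initialized yet.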

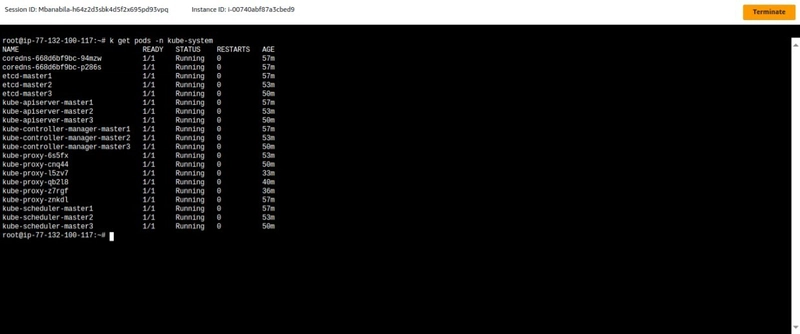

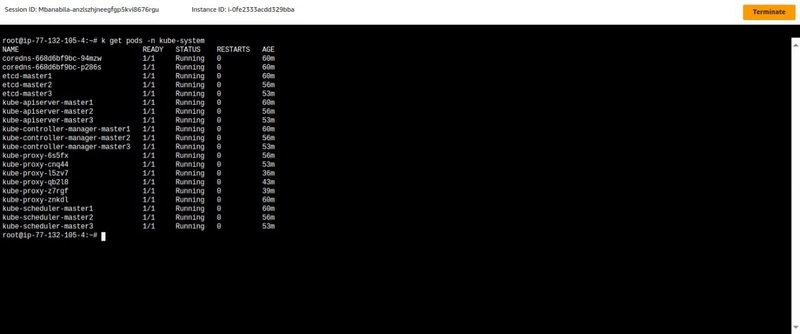

Outcomes: from the control plane and worker nodes

references:

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/high-availability/
