Introduction

Our computer is like a battlefield where multiple programs are constantly fighting for CPU time. Without a proper management system, everything would slow down, freeze, or even crash. Whether you're browsing the web, running apps, or handling background tasks, something needs to decide which process gets priority and how system resources are shared.

This is where the Operating System (OS) comes in—it acts as the ultimate manager, ensuring that every process gets a fair share of CPU time, avoiding conflicts, and keeping the system running smoothly.

But what exactly is a process? Simply put, a process is a running instance of a program. Each process has its own memory, registers, and execution state, allowing multiple processes to run simultaneously without interfering with each other.

What You’ll Learn in This Blog:

- What a process is and its lifecycle

- How the OS tracks processes using the Process Control Block (PCB)

- How process scheduling ensures efficiency

- The role of context switching in multitasking

- How processes are created, managed, and terminated

- Real-world examples of process management

1. What a process is and its lifecycle

A process is an instance of a program in execution. While a program is just a set of stored instructions (such as a .exe file or a script), it becomes a process when it is loaded into main memory and starts running. So, a process is simply a running program that uses CPU time, memory, and system resources to execute its tasks.

However, processes are not limited to just the apps you open. Your Operating System (OS) itself runs multiple background processes to keep your system functional. Some common types of processes include:

- System processes – Managing memory, hardware, and security.

- Background services – Updating software, syncing files, and running scheduled tasks.

- Driver processes – Handling devices like keyboards, printers, and network adapters.

Because many processes run at the same time, the OS needs to manage them efficiently to ensure smooth performance and prevent one process from using all the resources.

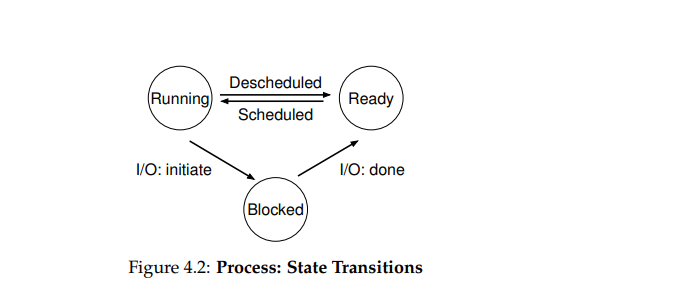

Understanding the Process Lifecycle:

A process doesn’t simply move from start to finish in a straight path. Instead, it moves through different states based on what it’s doing and what resources it needs. The OS keeps track of these states and transitions to manage CPU time efficiently and ensure fair resource allocation.

Here’s a breakdown of the different process states:

1. New (Created): A process starts in the New state when it is created. At this stage, the OS assigns the necessary memory and resources before it can run.

2. Ready: Once a process is ready to execute, it moves into the Ready state, waiting for the CPU to schedule it. Multiple processes in the ready state form a queue, where the OS decides which one runs next.

3. Running: A process moves to the Running state when it is assigned CPU time and actively executes its instructions. The OS switches the CPU between processes rapidly to maintain multitasking, so a running process can be moved back to Ready when its time slice ends.

4. Waiting (Blocked): A process enters the Waiting state if it needs to wait for something, like user input, disk access, or network data. It remains in this state until the required resource is available, and then returns to the Ready state.

5. Terminated (Exit): A process reaches the Terminated state when it completes execution or encounters an error. The OS then frees up its resources and removes it from the system.

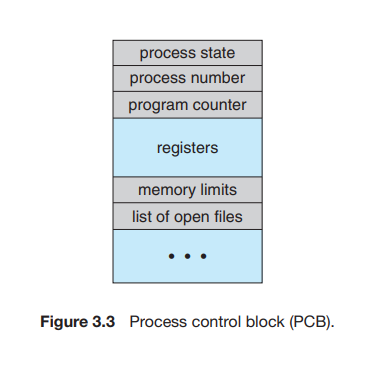

2. How the OS Tracks Processes Using the Process Control Block

When multiple processes are running, the OS must efficiently track and manage them. This is done using a Process Control Block (PCB), a data structure that stores essential details about each process.

The PCB helps the OS keep track of a process's state, resources, and execution history, ensuring smooth multitasking and process switching.

Key Components of the Process Control Block

- Process ID (PID): A unique number assigned to each process for identification.

- Process State: Indicates whether the process is in the New, Ready, Running, Waiting, or Terminated state.

- Program Counter (PC): Stores the memory address of the next instruction to be executed.

- CPU Registers: The saved contents of the CPU registers (general-purpose registers, stack pointer, status flags), so the process can later resume exactly where it left off.

- Memory Information: Contains details about the memory allocated to the process, including the address space.

- I/O Information: Tracks the input/output devices and files the process is interacting with.

- Priority: Determines the scheduling priority of the process, deciding how soon it gets CPU time.

The OS updates the PCB dynamically as a process moves through different states. This allows seamless switching between processes and efficient resource allocation.

Transition to Process Scheduling

Tracking processes is just the first step—next, the OS must decide which process should run and when. That’s where Process Scheduling comes into play. Let’s dive in!

Illustration of a Process Control Block

3. How Process Scheduling Ensures Efficiency

Now that we understand how the OS tracks processes using the Process Control Block (PCB), the next challenge is determining which process gets CPU time and when. Since multiple processes compete for execution, the OS must schedule them efficiently to maintain system performance and responsiveness.

To achieve this, the OS follows three levels of scheduling:

- Long-Term Scheduling: Decides which processes are admitted into the system for execution, helping manage the overall system workload.

- Short-Term Scheduling: Determines which process runs next, using scheduling algorithms like First-Come, First-Served (FCFS), Round Robin, and Priority Scheduling to optimize performance.

- Medium-Term Scheduling: Temporarily suspends (or swaps out) processes to free up memory and improve efficiency, particularly in virtual memory systems.

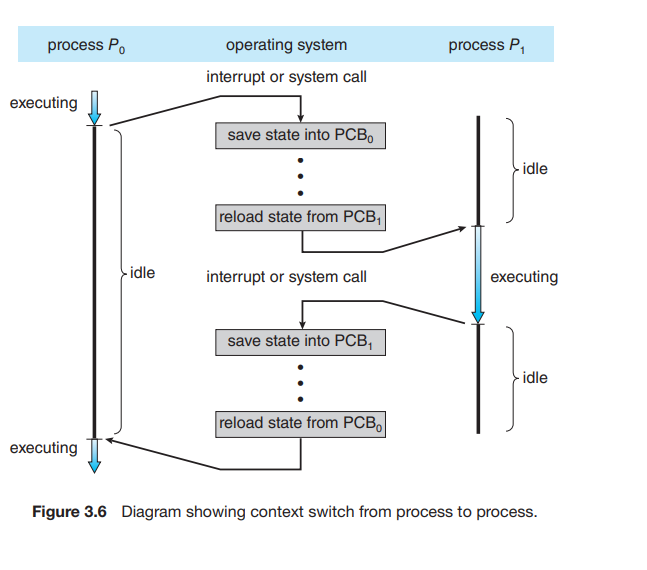

4. Context Switching: The Backbone of Multitasking

Context switching is how the OS quickly switches between processes, keeping multitasking smooth. But every switch comes with a cost: the CPU spends time saving and loading process states instead of executing tasks.

How Context Switching Works:

- Interrupt or System Call: A running process (P₀) gets interrupted (e.g., time slice ends, higher-priority process needs CPU).

- Save Current Process State: The OS stores P₀'s execution details (program counter, registers, etc.) in its Process Control Block (PCB₀).

- Load the Next Process: The OS retrieves P₁’s saved state from PCB₁ and restores its execution context.

- Resume Execution: P₁ starts running while P₀ waits (in the Ready or Waiting state) until it is scheduled again.

- Repeat: When it’s time to switch back, the OS repeats the process, saving P₁’s state and restoring P₀’s.

5. Process Creation & Termination

Every process in an operating system starts somewhere. But how exactly are processes created? And once they complete their tasks, how does the OS handle their termination?

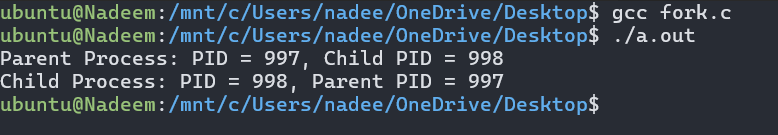

Process Creation: How New Processes Start

A new process is typically created when a user runs a program or when an existing process spawns a child process. This parent-child relationship allows complex applications to break tasks into multiple subprocesses, improving efficiency.

In UNIX-based operating systems, processes are created using the fork() system call. This function duplicates the calling (parent) process, creating a new (child) process that starts with a copy of the parent’s memory space. The two copies are independent, so later changes in one process are not visible in the other.

Example: Process Creation Using fork() in C

The following simple example shows how a process creates a child process using fork() in C:

    #include <stdio.h>
    #include <sys/types.h> // Defines pid_t
    #include <unistd.h>    // Provides fork(), getpid(), getppid(), and sleep()

    int main(void) {
        // Create a child process using fork()
        pid_t pid = fork();

        // fork() returns:
        //   > 0  → in the parent process (the returned value is the child's PID)
        //   == 0 → in the child process
        //   < 0  → if fork() fails (no child is created)
        if (pid > 0) {
            // This block runs in the parent process
            printf("Parent Process: PID = %d, Child PID = %d\n", (int)getpid(), (int)pid);
        } else if (pid == 0) {
            // This block runs in the child process
            printf("Child Process: PID = %d, Parent PID = %d\n", (int)getpid(), (int)getppid());
        } else {
            // fork() failed: print the reason and exit with an error code
            perror("Fork failed! Unable to create a new process");
            return 1;
        }

        sleep(30); // Keep both processes alive for 30 seconds (e.g., so they show up in ps)
        return 0;  // Exit the program successfully
    }

Process Termination: How Processes End

A process can terminate in various ways:

- Normal termination: When the process completes execution and exits gracefully.

- Abnormal termination: If an error occurs, causing the process to stop unexpectedly.

- Killed by another process: A process can be forcefully stopped using the kill command in UNIX/Linux.

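Example: Killing a Process in Linux

First, find the process ID (PID) using:

    ps aux | grep process_name

Then kill the process using:

    kill -9 PID

Note that kill -9 sends SIGKILL, which the process cannot catch or ignore, so it gets no chance to clean up. Running kill PID without -9 sends SIGTERM instead, which lets the process exit gracefully, so it is usually worth trying first.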

6. Real-World Use Cases & Examples

Process management isn't just a theoretical concept—it plays a crucial role in real-world applications. Let’s explore two well-known examples:

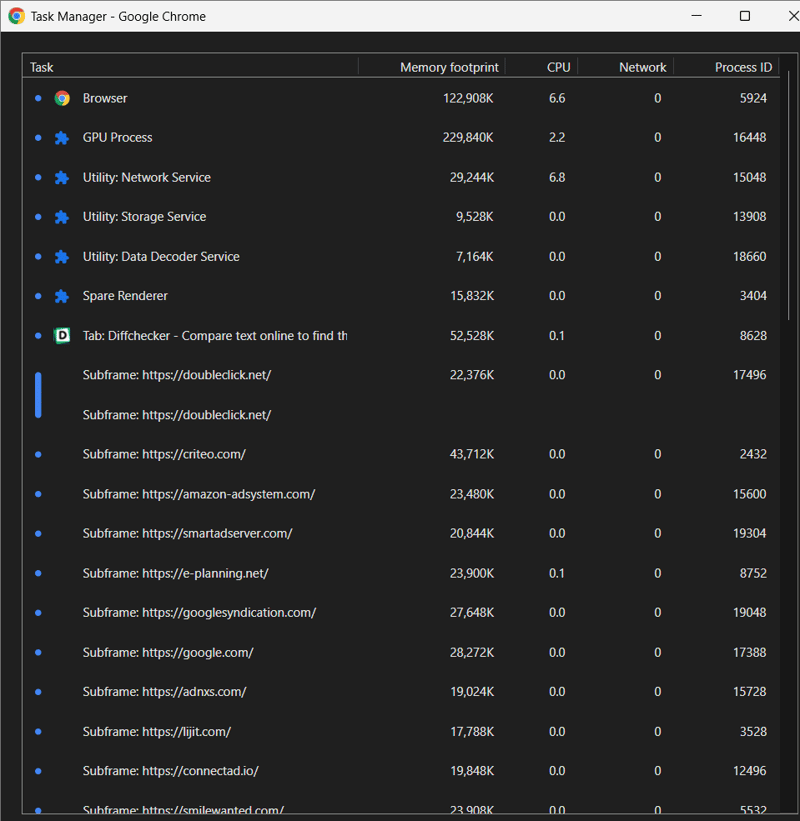

1. Google Chrome: Multi-Process Architecture

Have you ever noticed that when one Chrome tab crashes, the whole browser doesn’t? That’s because it follows a multi-process model, where:

- Each tab typically runs in its own process → A crash in one tab doesn’t affect others.

- Plugins and extensions have their own processes → Improves security and isolation.

- Processes run in a sandbox → Limits what a compromised page can do to the rest of the OS.

We can observe this by opening Chrome’s built-in Task Manager:

1. Open Google Chrome.

2. Press Shift + Esc (Windows) or go to Menu > More Tools > Task Manager.

3. In the Task Manager, each tab, extension, and service is listed as a separate process.

Here's an example of what it looks like:

In the above image, we can see multiple processes running, such as:

In the above image, we can see multiple processes running, such as: - Browser process (Main UI)

- GPU process (Handles graphical rendering)

- Network service (Manages internet connections)

- Tabs running as separate processes (e.g., Diffchecker tab)

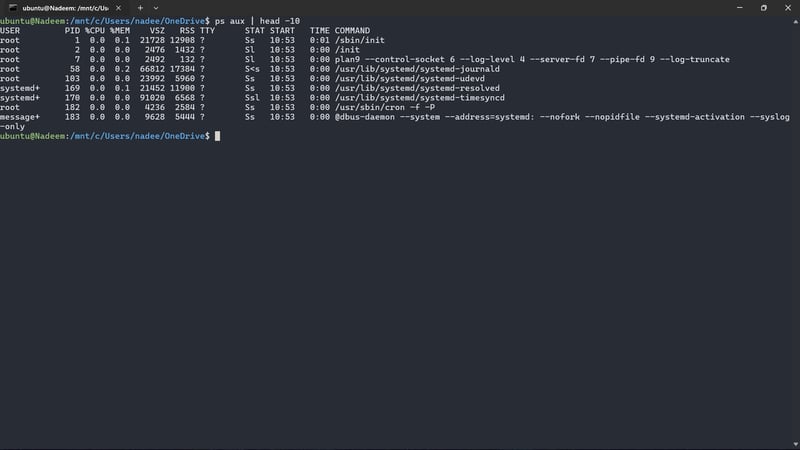

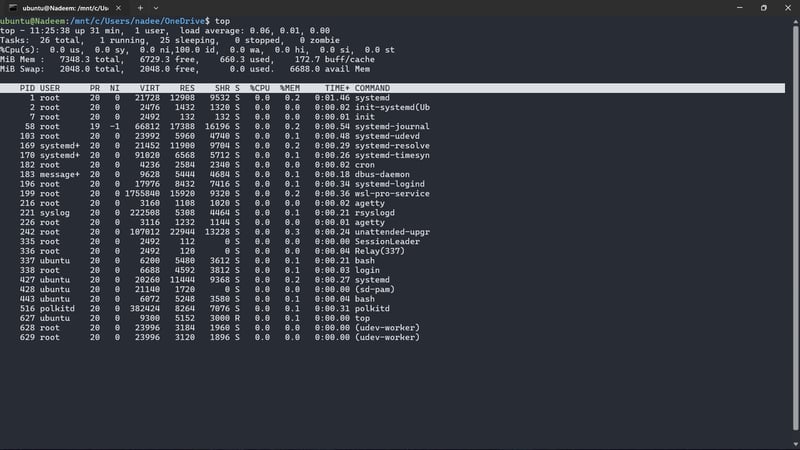

- Multiple subframe processes (for ads, embedded content, etc.) 2. Linux: Managing System Processes with systemd Linux follows a hierarchical process management model, where system processes are initialized by **systemd or init. These processes handle everything from launching system services to managing user applications. Key Linux System Processes: systemd - The first process started at boot, managing all other processes. cron - Handles scheduled tasks (e.g., backups, script execution). sshd - Manages remote SSH connections. Xorg - Provides graphical display services. To view top 10 running processes in Linux, run the below command:

ps aux | head -10

For a real-time view, run the below command:

top

7. Conclusion

Behind every smooth computing experience lies the OS’s powerful process management system—silently juggling multiple programs, allocating resources, and ensuring everything runs without chaos. Without it, even the most advanced systems would struggle to keep up.

Key Takeaways:

- Every process follows a lifecycle, transitioning through different states.

- The OS tracks each process using a Process Control Block (PCB) to store vital execution details.

- CPU scheduling ensures fair resource distribution, optimizing performance.

- Context switching keeps multitasking smooth by rapidly switching between active processes.

- Processes are created and terminated dynamically, adapting to system needs.

- Real-world applications like Chrome’s multi-process model and Linux’s system management highlight its importance.

Final Thought

Next time you open multiple applications, pause for a moment—how does your OS decide which one gets CPU time first? The answer lies in CPU Scheduling, a key concept we’ll explore in the next blog!

What’s your biggest question about OS process management? Drop a comment below, and let’s discuss! 🚀
