How to train and serve deep learning models on a low budget: $20 or less per month

My friends, Samuel James and emmy adigun, and I recently submitted our project to Facebook’s AI Hackathon hosted on DevPost. The main hackathon requirement, as stated on the hackathon page, was to:

Build a creative and well-implemented solution using PyTorch that can unlock positive impact on businesses or people. The solution can be a machine learning model, an application, or focused on a creative project like art or music — all built with PyTorch.

None of us, or at least not I, had ever done anything with PyTorch. Besides, we each had our own day jobs and were pretty much a team distributed across continents. After a short call, we decided to help people eat healthier greens by identifying diseased plants and fruits with artificial intelligence. With each of us bringing expertise in a different part of the software engineering craft, we allocated our responsibilities as follows:

- emmy adigun, being a user experience expert, to design the mobile app

- Samuel James, the Chuck Norris of code, to develop and train the deep learning model in PyTorch and set up the mobile app with Expo

- And I, to coordinate and help where I could

With responsibilities assigned, Sammy and I worked on the deep learning part together, as this was new to both of us. We started out by searching for available datasets and following the image classification tutorial on the PyTorch website. Our starting point was Google Colab, to leverage its free GPU instances. This, however, did not work out as planned: model training took longer than expected to complete, which meant we had to keep our laptops on for a long time. At this point, we split the work so as to deliver faster: Sam focused on developing the mobile app while I focused on PyTorch.

Welcome, Amazon SageMaker!

We wanted to run on as low a budget as we could. Thankfully, we have a team AWS account loaded with credits from AWS. I launched an Amazon SageMaker notebook instance with a GPU (p2.xlarge) and got to work. We trained the PyTorch model successfully in good time and exported it as a pickled object in GPU and CPU versions. Here comes the hard part.
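
The export step itself is not shown in this post. A minimal sketch of saving GPU and CPU versions of a trained model could look like the following. It assumes the weights are saved as a `state_dict` (the post only says "pickled object"), and it uses a tiny stand-in network and hypothetical file names rather than the real classifier:

```python
import os
import tempfile

import torch
from torch import nn

# Stand-in for the trained classifier; the real architecture is not given in the post.
model = nn.Sequential(nn.Linear(8, 4), nn.ReLU(), nn.Linear(4, 2))

# Use the GPU when one is present (as on a p2.xlarge), otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model.to(device)

out_dir = tempfile.mkdtemp()
gpu_path = os.path.join(out_dir, "model_gpu.pt")  # hypothetical file names
cpu_path = os.path.join(out_dir, "model_cpu.pt")

# Version 1: tensors saved on whatever device the model currently lives on.
torch.save(model.state_dict(), gpu_path)

# Version 2: the same weights copied to the CPU, for hosts that have no GPU.
cpu_state = {name: t.detach().cpu() for name, t in model.state_dict().items()}
torch.save(cpu_state, cpu_path)

# On the serving host, map_location="cpu" remaps tensors to the CPU at load time.
serving_model = nn.Sequential(nn.Linear(8, 4), nn.ReLU(), nn.Linear(4, 2))
serving_model.load_state_dict(torch.load(cpu_path, map_location="cpu"))
serving_model.eval()
```

Loading with `map_location="cpu"` also works on the GPU-saved file, so a single file can be enough; keeping both simply mirrors what the post describes.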

We could have easily hosted the model on Amazon SageMaker, but this would cost us about $100 a month, a large portion of our AWS credits. Hence, we decided to be creative: train on Amazon SageMaker, deploy on Heroku containers. Our idea is still an experiment and, as such, runs on the free Heroku pricing tier.

Training time: ~45 mins

Total Cost: ~$5.00

Enter, Heroku Container Registry & Runtime

For me, this was more or less the 8th Wonder of the World: a free Docker runtime. I wrote a simple Python Flask API to serve the model, following the same pattern as hosting custom models on Amazon SageMaker, and built the Docker image on my local machine. Then it was time to push the image to Heroku Container Registry. This posed another issue: the image was about 1.8 GB in size and my internet upload speed was not fast enough, so the push ran for more than 1 hour. How to solve this? We got more creative.
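
The serving code is not shared in this post. SageMaker's custom-container contract expects a `GET /ping` health check and a `POST /invocations` inference route, and Heroku tells a web process which port to bind through the `PORT` environment variable. A minimal sketch of that pattern follows. It is written as a plain WSGI app with the standard library instead of Flask so that it runs without extra packages, and the `predict` function and its `"healthy"` label are hypothetical stand-ins for the PyTorch model:

```python
import io
import json
import os
from wsgiref.simple_server import make_server


def predict(payload):
    """Hypothetical stand-in for inference; a real app would run the PyTorch model."""
    return {"label": "healthy", "fields": sorted(payload)}


def app(environ, start_response):
    """WSGI app exposing the two routes used by SageMaker-style model containers."""
    method = environ["REQUEST_METHOD"]
    path = environ.get("PATH_INFO", "")

    if method == "GET" and path == "/ping":
        status, body = "200 OK", {"status": "ok"}
    elif method == "POST" and path == "/invocations":
        length = int(environ.get("CONTENT_LENGTH") or 0)
        raw = environ["wsgi.input"].read(length)
        try:
            payload = json.loads(raw or b"{}")
            if not isinstance(payload, dict):
                raise ValueError("expected a JSON object")
            status, body = "200 OK", predict(payload)
        except ValueError:  # also covers malformed JSON (JSONDecodeError)
            status, body = "400 Bad Request", {"error": "body must be a JSON object"}
    else:
        status, body = "404 Not Found", {"error": "unknown route"}

    data = json.dumps(body).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(data)))])
    return [data]


def call_app(method, path, body=b""):
    """Invoke the app in-process (no socket); returns (status, parsed JSON body)."""
    captured = {}
    environ = {
        "REQUEST_METHOD": method,
        "PATH_INFO": path,
        "CONTENT_LENGTH": str(len(body)),
        "wsgi.input": io.BytesIO(body),
    }
    chunks = app(environ, lambda status, headers: captured.update(status=status))
    return captured["status"], json.loads(b"".join(chunks))


def main():
    # Heroku injects PORT at runtime; 8080 is only a local fallback.
    port = int(os.environ.get("PORT", "8080"))
    with make_server("0.0.0.0", port, app) as server:
        server.serve_forever()
```

Calling `main()` starts the server and would be the container's entry point, while `call_app("GET", "/ping")` is a quick way to exercise the routes locally without opening a port.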

We set up an AWS CodeBuild pipeline linked to our project's GitHub repository. The idea behind the build was to push our model-serving container image to Heroku. For this to happen, we needed to include Heroku credentials in the build. As fast as we wanted to be, we are also security first, so we saved our Heroku credentials in AWS Systems Manager Parameter Store and accessed them at build time. With this set up, we were ready to go and, as with every software project, nothing worked the first time. However, we got it working. Our new build time: 5 mins, compared to more than 1 hour when we ran it from my local machine. Now we only commit to GitHub, and AWS CodeBuild does the rest.
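
How the credentials were read at build time is not shown here. CodeBuild can map Parameter Store values into environment variables directly in the buildspec, and the same lookup can also be done from a script. A minimal sketch of the script route in Python follows, assuming boto3 and a hypothetical parameter name `/heroku/api-key`. `get_parameter` with `WithDecryption=True` is the boto3 SSM call for reading a `SecureString`, and `registry.heroku.com/<app>/<process-type>` is the image naming scheme Heroku Container Registry uses:

```python
def fetch_secret(ssm_client, name):
    """Read one decrypted value from Systems Manager Parameter Store.

    `ssm_client` is expected to behave like boto3.client("ssm").
    """
    response = ssm_client.get_parameter(Name=name, WithDecryption=True)
    return response["Parameter"]["Value"]


def heroku_image_name(app_name, process_type="web"):
    """Image name Heroku Container Registry expects for a given app."""
    return f"registry.heroku.com/{app_name}/{process_type}"


def build_push_commands(app_name):
    """Docker commands a build step could run; the app name is hypothetical."""
    image = heroku_image_name(app_name)
    return [
        ["docker", "build", "-t", image, "."],
        ["docker", "push", image],
    ]


# Usage inside a build, assuming boto3 is installed and AWS credentials are set:
#   import boto3
#   api_key = fetch_secret(boto3.client("ssm"), "/heroku/api-key")  # hypothetical name
#   The key is then used to log in to registry.heroku.com before pushing.
```

Passing the client in as an argument keeps the secret lookup easy to test with a fake client. After the push, Heroku still needs a release step (`heroku container:release web` in the Heroku CLI) before the new image serves traffic.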

Setup time: Friday night, 2 hours 30 mins

Total cost: <$2

Finally, Automation. WHAT!!!@?

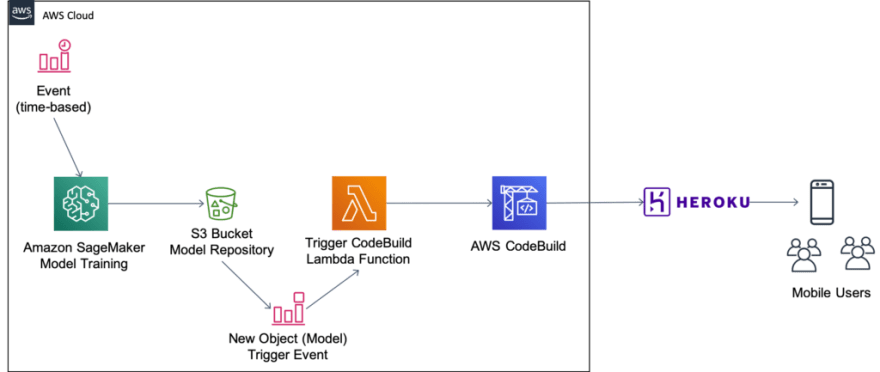

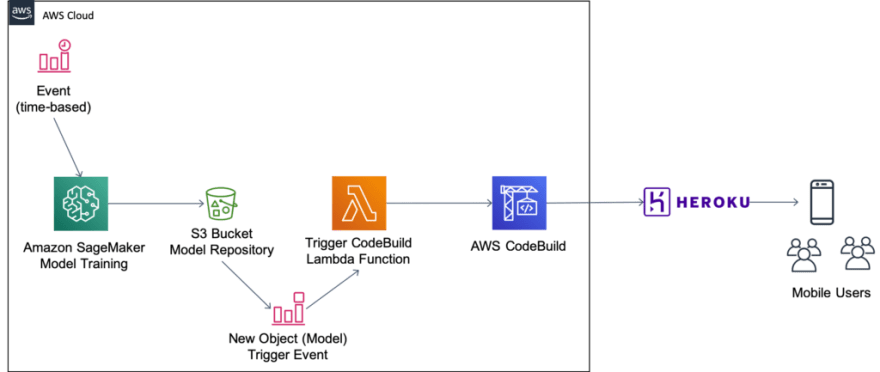

Our goal, should we be named winners, would be to scale the solution from both a product and a technical perspective. On the technical front, until we make money, we need to find a way to be agile with our software: infrastructure as code, continuous deployment, continuous delivery. What better place to do this than on the Amazon Web Services cloud? I will not be sharing the code in our repository, but I will show you a glimpse of our architecture.

Total cost: ~$20.00 per month

Wrapping Up

For now, we are not able to share our code, but I hope you got the idea and that it inspired you. We are not saying this is the best approach; however, the constraints placed on us while working on this project forced us to rethink our approach and come up with a creative solution that fit within budget. If you have any questions, feel free to reach out to me, emmy adigun, or Samuel James. You can reach me via email, follow me on Twitter, or connect with me on LinkedIn. Can’t wait to hear from you!!
