This is another post on CDK after a while, and it's a bit different from the earlier ones. You can check out the previous two posts that I wrote here:

Deploying a Node app to Beanstalk using aws-cdk (TypeScript)

Ryan Dsouza ・ Apr 18 '20

Today, let's automate something that we have all done manually in the past: creating thumbnails for profile pictures uploaded to S3.

Here we shall walk through how to react to events when a file is uploaded to S3 and send them to SQS. We shall also attach a Lambda handler that processes the messages from the SQS queue and generates our thumbnail in the same bucket.

Note: The code for the entire repository is here if you want to jump right in :)

ryands17 / s3-thumbnail-generator

Generate thumbnails with S3 via SQS and Lambda created using AWS CDK

S3 image resizer

This is an aws-cdk project where you can generate a thumbnail based on S3 event notifications using an SQS Queue with Lambda.

Steps

- Change the region in cdk.context.json to the one where you want to deploy. Default is us-east-2.
- Run yarn (recommended) or npm install.
- Go to the resources folder: cd resources.
- Run yarn add --arch=x64 --platform=linux sharp or npm install --arch=x64 --platform=linux sharp to build sharp for Lambda.
- Go back to the root and run yarn cdk deploy --profile profileName to deploy the stack to your specified region. You can skip providing the profile name if it is default. You can learn about creating profiles using the aws-cli here.
- Now you can add image/s in the photos folder in S3 and check after a couple of seconds that images in a folder named thumbnails are generated by Lambda via SQS.

The…

In this repo, all the code that we write to create resources will be in the s3_thumbnail-stack.ts file situated in the lib folder.

Let's start by initializing a couple of constants:

    const bucketName = 'all-images-bucket'
    const prefix = 'photos/'

These constants are the name of the bucket that we will be creating and the path where we will be storing our photos respectively.

Then, in the constructor of our S3ThumbnailStack class, we shall start by creating the Queue that will handle all the object creation events coming from S3.

    const queue = new sqs.Queue(this, 'thumbnailQueue', {
      queueName: 'thumbnailPayload',
      receiveMessageWaitTime: cdk.Duration.seconds(20),
      visibilityTimeout: cdk.Duration.seconds(60),
      retentionPeriod: cdk.Duration.days(2),
    })

This code creates an SQS Queue named thumbnailPayload and sets some parameters:

receiveMessageWaitTime: The maximum time a receive call waits for a message to arrive before returning. We have kept it at 20 seconds, which is the longest SQS allows. This technique is known as long polling.

visibilityTimeout: How long a message stays hidden from other consumers after one consumer has received it. If the message is not deleted within this window, it becomes visible again and can be processed a second time. Here we are assuming that the Lambda will complete the thumbnail generation within 60 seconds. Set this value generously enough that the same message is not consumed twice.

retentionPeriod: The number of days a message remains in the queue if it is not processed.

Moving on, let's create the Lambda handler.
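To make the relationship between the visibility timeout and the consumer's timeout concrete, here is a small sketch that compares the two. The helper checkVisibilityTimeout and its return shape are made up for illustration and are not part of aws-cdk or the repository. The six-times figure is the guideline from the AWS Lambda documentation for SQS event sources, not something this stack enforces.

```typescript
// Hypothetical helper for illustration; not part of aws-cdk or the repo.
interface TimeoutCheck {
  ok: boolean
  recommendedSeconds: number
  reason: string
}

function checkVisibilityTimeout(
  visibilityTimeoutSeconds: number,
  functionTimeoutSeconds: number
): TimeoutCheck {
  // AWS guidance for SQS-triggered functions: at least 6x the function timeout.
  const recommendedSeconds = functionTimeoutSeconds * 6

  if (visibilityTimeoutSeconds < functionTimeoutSeconds) {
    return {
      ok: false,
      recommendedSeconds,
      reason: 'message can reappear while the function is still running',
    }
  }
  if (visibilityTimeoutSeconds < recommendedSeconds) {
    return {
      ok: true,
      recommendedSeconds,
      reason: 'covers one invocation, but leaves little room for retries',
    }
  }
  return { ok: true, recommendedSeconds, reason: 'meets the six-times guideline' }
}

// Values used in this post: 60 second visibility timeout, 20 second function timeout.
const timeoutCheck = checkVisibilityTimeout(60, 20)
console.log(timeoutCheck)
```

With the values from this post the check passes, but it also suggests that a larger visibility timeout would leave more headroom if an invocation has to be retried.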

    const handler = new lambda.Function(this, 'resizeImage', {
      runtime: lambda.Runtime.NODEJS_12_X,
      code: lambda.Code.fromAsset('resources'),
      handler: 'index.handler',
      timeout: cdk.Duration.seconds(20),
      memorySize: 256,
      logRetention: logs.RetentionDays.ONE_WEEK,
      environment: {
        QUEUE_URL: queue.queueUrl,
        PREFIX: prefix,
      },
    })

Most of these options are familiar, so let's discuss the code and environment parameters.

code: This is where our Lambda code will be taken from. We have specified fromAsset('resources'), meaning CDK will take it from a folder named resources in our project. In this folder, there's a simple Node.js Lambda function that resizes images using the sharp library.

environment: This contains two values: the queue URL, which we need in order to delete the message after we have processed it, and the S3 bucket prefix under which the uploaded images are stored.
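As a rough sketch of how a handler could read these two values defensively, the snippet below validates them up front instead of failing later with an undefined queue URL. The readConfig function and the HandlerConfig type are hypothetical names, and the queue URL in the example is a placeholder, not a real queue. The handler in the repository reads process.env directly.

```typescript
// Hypothetical names for illustration; the repo reads process.env directly.
interface HandlerConfig {
  queueUrl: string
  prefix: string
}

// Takes the environment as a plain object so it can be exercised without Lambda.
function readConfig(env: Record<string, string | undefined>): HandlerConfig {
  const queueUrl = env['QUEUE_URL']
  const prefix = env['PREFIX']

  if (!queueUrl) {
    throw new Error('QUEUE_URL is not set')
  }
  if (!prefix) {
    throw new Error('PREFIX is not set')
  }
  return { queueUrl, prefix }
}

// Placeholder values; the account ID and URL below are made up.
const exampleConfig = readConfig({
  QUEUE_URL: 'https://sqs.us-east-2.amazonaws.com/123456789012/thumbnailPayload',
  PREFIX: 'photos/',
})
console.log(exampleConfig.prefix)
```

Inside a real handler you would pass process.env to such a function once, outside the handler body, so a missing variable shows up on the first invocation.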

The Lambda handler, which lives in the resources folder, looks something like this:

    const AWS = require('aws-sdk')
    const sharp = require('sharp')

    const prefix = process.env.PREFIX

    const s3 = new AWS.S3()
    const sqs = new AWS.SQS({
      region: process.env.AWS_REGION,
    })

    exports.handler = async event => {
      try {
        // Each SQS message carries one S3 event notification in its body
        for (let message of event.Records) {
          const body = JSON.parse(message.body)

          if (Array.isArray(body.Records) && body.Records[0].s3) {
            // S3 URL-encodes object keys and uses '+' for spaces
            const objectName = decodeURIComponent(
              body.Records[0].s3.object.key.replace(/\+/g, ' ')
            )
            const bucketName = body.Records[0].s3.bucket.name

            console.log({ objectName, bucketName })

            try {
              await resizeAndSaveImage({ objectName, bucketName })
              await deleteMessageFromQueue(message.receiptHandle)
            } catch (e) {
              console.log('an error occurred!')
              console.error(e)
            }
          } else {
            // Not an S3 object event, so just remove it from the queue
            await deleteMessageFromQueue(message.receiptHandle)
          }
        }

        return {
          statusCode: 200,
          body: JSON.stringify('success!'),
        }
      } catch (e) {
        console.error(e)

        return {
          statusCode: 500,
          error: JSON.stringify('Server error!'),
        }
      }
    }

    async function resizeAndSaveImage({ objectName, bucketName }) {
      const objectWithoutPrefix = objectName.replace(prefix, '')

      const typeMatch = objectWithoutPrefix.match(/\.([^.]*)$/)
      if (!typeMatch) {
        console.log('Could not determine the image type.')
        return
      }

      // Check that the image type is supported
      const imageType = typeMatch[1].toLowerCase()
      if (imageType !== 'jpg' && imageType !== 'png') {
        console.log(`Unsupported image type: ${imageType}`)
        return
      }

      // Download the image
      const image = await s3
        .getObject({
          Bucket: bucketName,
          Key: objectName,
        })
        .promise()

      // Transform the image via sharp
      const width = 300
      const buffer = await sharp(image.Body).resize(width).toBuffer()

      const key = `thumbnails/${objectWithoutPrefix}`

      return s3
        .putObject({
          Bucket: bucketName,
          Key: key,
          Body: buffer,
          // Use a full MIME type; a bare 'image' is not a valid content type
          ContentType: imageType === 'png' ? 'image/png' : 'image/jpeg',
        })
        .promise()
    }

    function deleteMessageFromQueue(receiptHandle) {
      if (receiptHandle) {
        return sqs
          .deleteMessage({
            QueueUrl: process.env.QUEUE_URL,
            ReceiptHandle: receiptHandle,
          })
          .promise()
      }
    }

This handler does two things: it resizes the image and saves the thumbnail to S3, and it deletes the message from the queue once the image has been processed successfully.
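The two pure steps in that flow, pulling the bucket and key out of the SQS message body and working out where the thumbnail should go, can be sketched in isolation. The names parseUpload and thumbnailKeyFor are hypothetical and the types cover only the fields used here. Unlike the handler above, this sketch strips the prefix only when the key starts with it, which is a small variation rather than the repository's behaviour.

```typescript
// Minimal shapes for the fields we read; real S3 events carry many more.
interface S3EventRecord {
  s3?: { bucket: { name: string }; object: { key: string } }
}

interface UploadedObject {
  bucketName: string
  objectName: string
}

// Returns null for bodies that are not S3 object events, such as the
// s3:TestEvent message S3 sends when a notification is first configured.
function parseUpload(messageBody: string): UploadedObject | null {
  let body: unknown
  try {
    body = JSON.parse(messageBody)
  } catch {
    return null
  }
  if (typeof body !== 'object' || body === null) return null

  const records = (body as { Records?: S3EventRecord[] }).Records
  if (!Array.isArray(records)) return null

  const s3 = records[0]?.s3
  if (!s3) return null

  // Keys arrive URL-encoded, with '+' standing in for spaces.
  const objectName = decodeURIComponent(s3.object.key.replace(/\+/g, ' '))
  return { bucketName: s3.bucket.name, objectName }
}

// Maps photos/cat.png to thumbnails/cat.png; null for unsupported types.
function thumbnailKeyFor(objectName: string, prefix: string): string | null {
  const withoutPrefix = objectName.startsWith(prefix)
    ? objectName.slice(prefix.length)
    : objectName
  const extension = withoutPrefix.match(/\.([^.]*)$/)?.[1]?.toLowerCase()
  if (extension !== 'jpg' && extension !== 'png') return null
  return `thumbnails/${withoutPrefix}`
}

const sampleBody = JSON.stringify({
  Records: [
    { s3: { bucket: { name: 'all-images-bucket' }, object: { key: 'photos/my+cat.png' } } },
  ],
})
const upload = parseUpload(sampleBody)
console.log(upload, upload ? thumbnailKeyFor(upload.objectName, 'photos/') : null)
```

Keeping these steps free of AWS calls makes them easy to unit test, while the download, resize and upload stay in the handler.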

And the third and final resource that we will be creating is our S3 bucket as follows:

    const imagesBucket = new s3.Bucket(this, 'allImagesBucket', {
      bucketName,
      removalPolicy: cdk.RemovalPolicy.DESTROY,
    })

This creates our bucket and destroys it once we delete our CloudFormation stack. Keep in mind that CloudFormation can only delete a bucket that is empty, so clear out the objects first.

Three things are needed to get this entire workflow running. Let's go through them and add the final touches.

- Send object creation events from S3 to SQS: This is so that S3 sends an event to SQS whenever an object, in our case a profile picture, is uploaded to the photos folder. This is how we can add that:

    imagesBucket.addObjectCreatedNotification(new s3Notif.SqsDestination(queue), {
      prefix,
    })

Here, we are adding an ObjectCreatedNotification whose destination is our SQS queue. Note the prefix parameter: only events for objects added under the photos folder (a prefix, in S3 terms) will be sent to SQS.

- Allow Lambda access to S3: This is important as we need to fetch the image, generate the thumbnail, and put the thumbnail back in the bucket. Let's attach a policy to the handler:

    handler.addToRolePolicy(
      new iam.PolicyStatement({
        effect: iam.Effect.ALLOW,
        resources: [`${imagesBucket.bucketArn}/*`],
        actions: ['s3:PutObject', 's3:GetObject'],
      })
    )

This tells Lambda "You have access just to GetObject and PutObject methods on S3 on the created bucket and nothing else".

- Allow Lambda to process messages from SQS: Finally, we tell Lambda to execute when a message is added to the SQS queue by the S3 object creation event.

    handler.addEventSource(new eventSource.SqsEventSource(queue))

This basically says, "Lambda, consider this SQS Queue as a source and consume any messages that you get from this queue".
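One detail of this wiring is worth spelling out: the notification only fires for keys under the photos/ prefix, while the Lambda writes its output under thumbnails/. The sketch below models that filter as a simple starts-with check, which is how S3 prefix filters behave. The function name triggersNotification and the sample keys are made up for illustration.

```typescript
// Hypothetical model of the S3 prefix filter: a key matches when it
// starts with the configured prefix.
function triggersNotification(objectKey: string, prefix: string): boolean {
  return objectKey.startsWith(prefix)
}

const notificationPrefix = 'photos/'

// Sample keys, invented for this example.
const sampleKeys = ['photos/cat.png', 'thumbnails/cat.png', 'docs/photos/cat.png']

// Keep only the keys that would produce a message in the queue.
const queuedKeys = sampleKeys.filter(key =>
  triggersNotification(key, notificationPrefix)
)
console.log(queuedKeys)
```

Because the generated thumbnails never match the prefix, writing them back to the same bucket does not trigger the Lambda again, so the workflow cannot loop on its own output.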

And we're done! Let's deploy this stack by firing yarn cdk deploy or npm run cdk deploy if you're using NPM.

Note: I'm assuming you have set up the AWS CLI and configured your access and secret keys in the default profile. You can read more about that here.

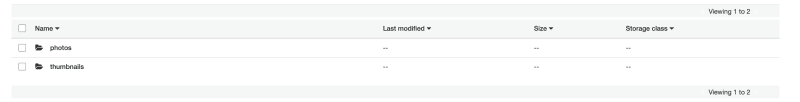

After successful stack creation, you will get a bucket like this:

As you can see I have already created a folder named photos. Let's upload a profile picture for our user that's a cat, and see if the event fires to SQS and the Lambda function is triggered.

After uploading, we can see two folders here after some time. You can refresh it after a while if you don't see it.

Awesome! Our Lambda has successfully run and we can check the difference between the two pictures as well to see whether it has worked!

Original profile pic:

Resized thumbnail:

This is how we successfully deployed a stack with S3, Lambda and SQS to generate thumbnails automatically on upload.

Thank you all for reading! Do like and share this post if you've enjoyed it :)
