Introduction

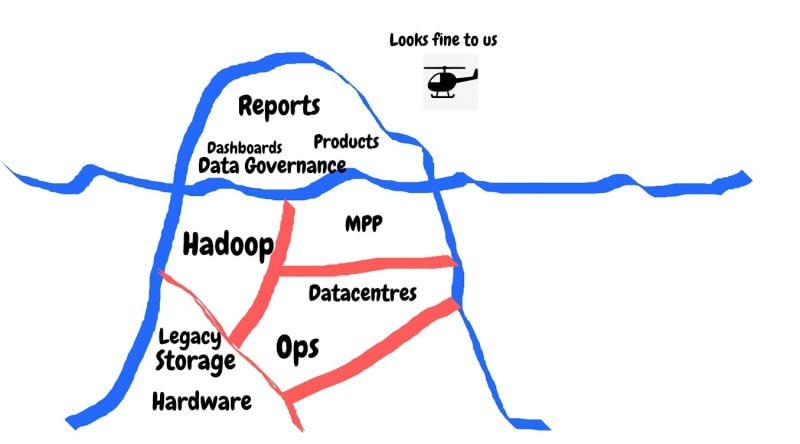

Verizon Connect is the largest telematics company in the world, tracking more than 5 million vehicles and processing billions of IoT data points daily. That growth and position have been built on top of 3 separate product platforms that were acquired or built from the ground up in the last 4 years. Consolidating the data from these three platforms onto a single data platform would be a large undertaking and would require a comprehensive business case. It has taken time to build the case for our migration to the cloud, and in this article I have tried to capture some of what I learned along the way.

About my team

My team and I manage the data platform for backend services in Verizon Connect. Our systems power BI dashboards, serve as data sources for ML, share data with external partners and, where scale is needed, provide data and insights directly to our retail customers. These services are still largely provided by separate legacy data platforms aligned to the 3 individual product platforms. In the past, this separation was necessary but workable. However, with more products and services being built on our platforms, we need to look seriously at consolidating our data so that we can scale the team and its effort going forward.

But if, from the outside, your team and systems appear to be performing well, how can you convince your stakeholders that such a consolidation is necessary?

4 steps for making the case for a large data project

A successful data platform* migration to the cloud starts with several non-technical steps:

- Stakeholder buy-in: Such large projects are not a technical decision alone and may need support from several groups.

- Domain-driven migration: Identify the different domains within your dataset. Order them by migration priority and work closely with each customer during their migration.

- Focus on customer value: Deliver what matters to your customer first and tackle technical requirements second. Beware of technical scope creep.

- Build your team: Depending on your chosen solution, your team may need to learn new skills on new platforms. Start this early and it will pay dividends for both the project and team morale.

Stakeholder Buy-in

A common mistake is to believe that the decision to undertake such a large project is purely technical, and yours or your team’s alone to make. It is not, and you must bring your stakeholders along with you. You have 3 major stakeholders to convince. The first is your direct management. They must see the value in the project and what it can bring for them and your organisation. They should be the easiest to convince, as they should already be aware of the cost of maintaining your legacy platform. That is, if you have been informing them and they have been listening. Once convinced, they will be your greatest ally in securing resources and ensuring your team is protected during the migration.

With your management on board, the second stakeholder is your customer. As a technical team, it can be easy to focus solely on the technical benefits a migration to the cloud can bring. While migrating your data platform to the cloud solves numerous technical issues, those issues may not align directly with your customers’ priorities. Your customers don’t particularly care about the infinite scalability, elasticity and cost benefits of cloud economics. What they do want is their data on time, of high quality and quick to query. They want data discoverability and accessibility. And while all customers should care about security and data governance, it may not be their highest priority. If you are trying to make a case for migrating to the cloud, you must align your reasons for a cloud data platform with your customers’ priorities.

| What your customers care about | What they won’t care about |
|---|---|
| Time to insight | Hardware refresh |
| Data discoverability and accessibility | Infinite scalability and elasticity |
| Data quality and reliability | Support costs |
| Self-service | Open source |

| What everyone should care about |
|---|
| Security & data governance |

I would suggest having tangible metrics on how a move to the cloud will improve your customers’ experience. A reduction in time to insight and development turnaround time would appeal to them. Something as simple as "our daily jobs will roll up 30 minutes earlier if we leverage the cloud correctly" will resonate. I would also recommend putting together a deck outlining the reasons for the migration, what you can achieve with it and a high-level overview of the technology in scope. Depending on your audience, you can pick and choose which slides to show. Once the deck is ready, go on a roadshow, presenting the project to different teams and selling it to them. Hopefully, you will have teams clamouring to be the first customers to launch on the platform.

The final stakeholder you must convince is your team. They are of utmost importance to the execution of the project; you won’t win without them. As with your management, they should be aware of the pain and problems of your legacy platform. As a technical team, they should be excited by the prospect of migrating to the cloud. Investing in your team is so important that I have dedicated the final step solely to it.

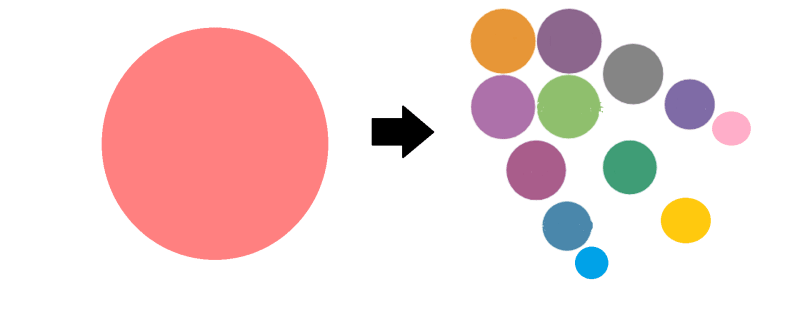

Domain-driven approach

Identify the data domains in your dataset and migrate them to the new platform one by one. Don’t take a big-bang approach and try to migrate everything at once. We defined domains in terms of customers. For example, identify the data that your marketing team uses: that is the marketing data domain. By mapping data to customers, you can migrate each customer completely to the new platform. Hopefully you will have customers clamouring to be first, but I would recommend starting with a more technical team. They will want to move, and an initial success will build confidence and momentum in the project. A solid partner for the initial migration is invaluable to you and your management: when the project is discussed at the CTO level, your director will need partners who support the project and understand its benefits.

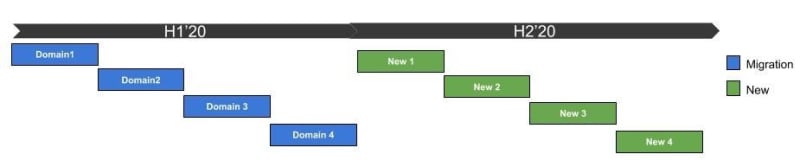

Breaking your migration into smaller deliverables means that they can be planned and delivered on a staggered timeline. It also lets you manage your backlog so that you can keep delivering new features to customers while working on the migration. If a new feature needs to be delivered during the cloud migration, you can delay starting the next domain while your team works on that feature.

You may need to envision your migration as a series of domain migrations interleaved with new feature work, rather than as one uninterrupted migration effort.

It may seem counterintuitive to add new pipelines to your current platform while migrating to a new one, but you cannot expect your customers’ requirements to stand still. If anything, you need to be even more attentive to your customers during this period. Communicating with them more and bringing them into your migration story will give you an ally when you need one. Make it their story and they will add it to their roadmap, allowing them to take advantage of the benefits a cloud platform can bring. Inevitably, your customers will have to move and disrupt their day-to-day operations, and happy, engaged customers will be easier to work with during the migration.

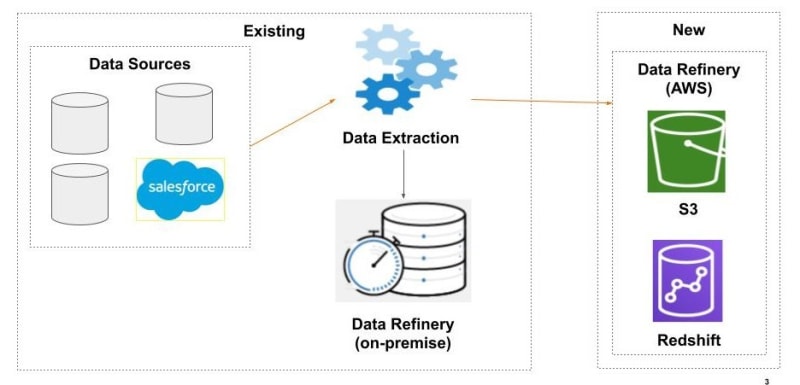

Our approach to enabling this was to leverage our existing data extraction tool by adding the new data refinery as a second data target. Our basic migration plan is to add this second target to existing data objects. When we need to add a new object, the choice is whether to 1) push to the old target only, 2) push to both the old and new targets, or 3) push to the new target only. This approach ensures we can continue to support our customers on the old platform while also future-proofing the move to the new one.
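The three routing options can be expressed as a small per-object routing table. The sketch below is a minimal Python illustration, not our actual extraction tool: the `Target` enum, the `route` function, the writer callbacks and the object names are all hypothetical. Objects without an explicit entry default to the old platform, so nothing moves until it is opted in.

```python
from enum import Enum
from typing import Callable, Dict, List


# Hypothetical routing options, mirroring the three choices in the text.
class Target(Enum):
    OLD_ONLY = "old"
    BOTH = "both"
    NEW_ONLY = "new"


# A writer takes an object name and a batch of rows; a stand-in for a real loader.
Writer = Callable[[str, List[dict]], None]


def route(object_name: str, rows: List[dict], routing: Dict[str, Target],
          write_old: Writer, write_new: Writer) -> List[str]:
    """Write one batch to the platform(s) configured for this object.

    Objects missing from the routing table default to the old platform only.
    Returns the platforms written to, for logging.
    """
    target = routing.get(object_name, Target.OLD_ONLY)
    written: List[str] = []
    if target in (Target.OLD_ONLY, Target.BOTH):
        write_old(object_name, rows)
        written.append("old")
    if target in (Target.BOTH, Target.NEW_ONLY):
        write_new(object_name, rows)
        written.append("new")
    return written


if __name__ == "__main__":
    # In-memory stores standing in for the legacy platform and the new refinery.
    old_store: Dict[str, List[dict]] = {}
    new_store: Dict[str, List[dict]] = {}

    def write_old(name: str, rows: List[dict]) -> None:
        old_store.setdefault(name, []).extend(rows)

    def write_new(name: str, rows: List[dict]) -> None:
        new_store.setdefault(name, []).extend(rows)

    # Hypothetical object names for illustration only.
    routing = {"trips": Target.BOTH, "new_feature_events": Target.NEW_ONLY}
    print(route("trips", [{"id": 1}], routing, write_old, write_new))          # ['old', 'new']
    print(route("legacy_report", [{"id": 2}], routing, write_old, write_new))  # ['old']
```

Keeping the routing decision in configuration rather than code means that, in this sketch, moving an object from option 2 to option 3 once its domain has been cut over is a one-line change.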

A company’s investment in a data platform is usually significant, and a migration provides a touchpoint to assess that investment. An advantage of a domain-driven migration is that it forces a review of every domain in your existing dataset. In addition, the operational cost model of the cloud means you can assign costs to each domain, which lets you assess whether that investment is producing a return. You could take this one step further and assess every object in each domain for cost versus benefit.
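Once cloud spend is attributed to domains, assessing the return per domain is a simple aggregation. The Python sketch below assumes billing line items have already been labelled with a domain (for example through resource tagging) and that someone has supplied a rough monthly value estimate per domain; the function names, domain names and figures are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def cost_by_domain(line_items: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Sum monthly cost per domain from (domain, cost) billing line items."""
    totals: Dict[str, float] = defaultdict(float)
    for domain, cost in line_items:
        totals[domain] += cost
    return dict(totals)


def domains_without_return(costs: Dict[str, float],
                           estimated_value: Dict[str, float]) -> List[str]:
    """Domains whose monthly cost exceeds their estimated monthly value.

    Domains with no value estimate are treated as having zero value,
    which flags them for review rather than silently passing them.
    """
    return sorted(d for d, c in costs.items() if c > estimated_value.get(d, 0.0))


if __name__ == "__main__":
    # Hypothetical billing line items, already tagged by domain.
    items = [("marketing", 120.0), ("finance", 400.0), ("marketing", 30.0)]
    costs = cost_by_domain(items)
    print(costs)  # {'marketing': 150.0, 'finance': 400.0}
    print(domains_without_return(costs, {"marketing": 500.0, "finance": 250.0}))  # ['finance']
```

The same rollup works at object level if line items carry an object label, which is one way to take the per-object cost-versus-benefit review further.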

One final advantage we found was identifying objects that could not be assigned to any domain; for now, these will not be migrated. Leaving them out reduces the scope, cost and time needed to execute the migration. Such orphaned objects arise for many reasons, because objects tend to accumulate over the lifetime of a data platform: objects related to legacy features, that one-off report for the director who has since left the company, or the objects you loaded for that ML project that went nowhere.
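Finding these orphaned objects amounts to a set difference between your object inventory and the union of all domain assignments. The sketch below is a minimal Python illustration; the inventory, domain names and object names are hypothetical, and in practice the inventory might come from your warehouse catalogue.

```python
from typing import Dict, Iterable, List, Set


def unassigned_objects(inventory: Iterable[str],
                       domains: Dict[str, Set[str]]) -> List[str]:
    """Return objects in the inventory that no domain claims, sorted by name."""
    # Union of every domain's objects; empty if no domains are defined yet.
    assigned: Set[str] = set().union(*domains.values())
    return sorted(set(inventory) - assigned)


if __name__ == "__main__":
    # Hypothetical inventory and domain mapping.
    inventory = ["trips", "campaign_clicks", "director_report_2017", "ml_features_v1"]
    domains = {
        "marketing": {"campaign_clicks"},
        "operations": {"trips"},
    }
    print(unassigned_objects(inventory, domains))
    # ['director_report_2017', 'ml_features_v1']
```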

Focus on customer value

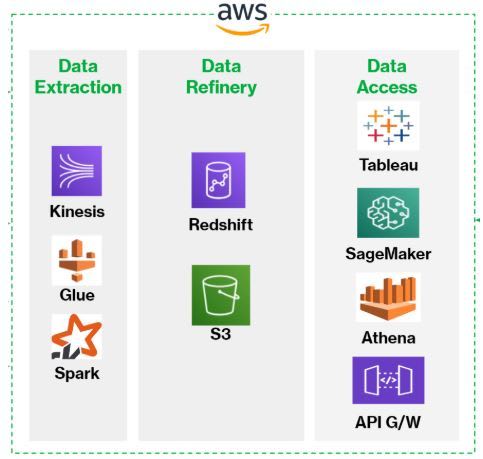

A data platform consists of three logical layers:

Data extraction: tools or applications to extract data from source, process it and load it into the data refinery.

Data refinery: where data is stored and joined with other data to create composite views.

Data access: tools and a reporting layer to access or export data stored in the refinery.

Your customer interacts with the refinery via the access layer, so your initial focus should be on those two layers. While it can be tempting to spend time on the extraction layer, your customer should never interact with it. Your existing ETL tool may work fine, and you will get more customer value from focusing on the data refinery and data access layers.

If your team suggests going down the route of changing your ETL tool to some sexy new tool, you should only go that way if there is a benefit to the customer. As part of stakeholder management, you need to keep the customer front and centre of your deliverable.

Another advantage of keeping your existing ETL tool and extraction interfaces is that you minimise the impact of the migration on your source systems. Very often, you are sourcing data from your main product’s backend, which is generally an RDBMS of some flavour. Depending on your architecture, switching to a different ETL tool may affect the source database and create unnecessary noise that eats into the time you can devote to the refinery and access layers.

A final advantage of keeping your existing ETL tool is that you can execute a side-by-side migration. You can duplicate the insert, writing the same data to both the old and new systems in a single ETL run. This also creates a great opportunity to validate the data in the new platform against the old one.
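Because the same ETL run writes to both platforms, each table can be reconciled after every load. One common technique, sketched below in Python under the assumption that you can pull rows from both sides as dictionaries, is to compare row counts plus an order-independent fingerprint. The function names are hypothetical, and real platforms usually need type normalisation first (decimals, timestamps, trailing whitespace) before fingerprints will match.

```python
import hashlib
import json
from typing import Dict, Iterable, Mapping, Tuple


def fingerprint(rows: Iterable[Mapping]) -> Tuple[int, str]:
    """Return (row_count, digest) for a table, independent of row order.

    Each row is serialised with sorted keys and hashed; the sorted row hashes
    are then hashed together, so duplicate rows still change the result.
    """
    row_hashes = sorted(
        hashlib.sha256(json.dumps(dict(r), sort_keys=True, default=str).encode()).hexdigest()
        for r in rows
    )
    combined = hashlib.sha256("".join(row_hashes).encode()).hexdigest()
    return len(row_hashes), combined


def reconcile(old_rows: Iterable[Mapping], new_rows: Iterable[Mapping]) -> Dict[str, object]:
    """Compare one table's contents on the old and new platforms."""
    old_count, old_digest = fingerprint(old_rows)
    new_count, new_digest = fingerprint(new_rows)
    return {
        "old_count": old_count,
        "new_count": new_count,
        "match": old_count == new_count and old_digest == new_digest,
    }


if __name__ == "__main__":
    old = [{"id": 1, "km": 12.5}, {"id": 2, "km": 3.0}]
    new = [{"id": 2, "km": 3.0}, {"id": 1, "km": 12.5}]  # same rows, different order
    print(reconcile(old, new))  # {'old_count': 2, 'new_count': 2, 'match': True}
```

For large tables, the same idea would typically be pushed down into SQL aggregates on each platform rather than pulling every row into Python.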

I don’t want to give the impression that every data platform migration should keep its existing data extraction layer. That is what made sense for us, but it could be different for every project. Another likely scenario is to minimise the impact on your customers by keeping the data access layer. My point is simply that you should focus first on the areas that bring the most customer value.

Build Your Team

I referenced your team as one of the major stakeholders you need to convince before starting any cloud migration project. You also need to invest in their development and training to ensure success. We have taken the approach of upskilling our existing team; alternatively, you can bring in the skills through new hires or consultants.

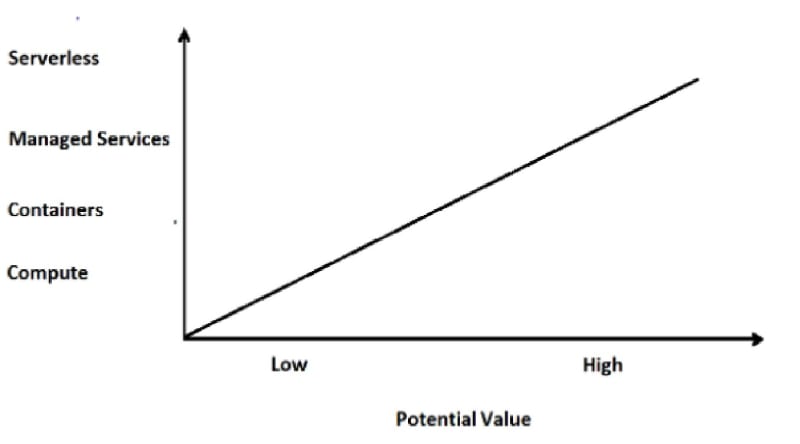

Depending on how far up the cloud value graph you are targeting, you will need to invest more or less in training up your team. If your migration consists of a lift and shift onto compute instances, then the existing skill-set of your team may suffice. If you are moving up to managed services or even serverless, you will need to invest more in your team. For example, if you are using Kafka in your existing platform and plan to retain that in the cloud, you don’t need to learn a new service. If you plan to move to Kinesis, then someone in your team will need to learn this service.

Training and Certification

While core data engineering skills transfer between on-premise and cloud, differences between platforms make investing in team training essential. For example, HDFS and S3 have different storage semantics (S3 offered only eventual consistency for some operations until AWS introduced strong read-after-write consistency in December 2020), and while most data warehouses support table partitioning, Redshift’s native tables rely on distribution and sort keys instead. For many, even the fundamentals of Infrastructure as Code (IaC) are a new concept. I would strongly recommend starting a training program for the team as soon as possible.

In upskilling the team into a cloud engineering team, I have found the AWS certifications a great vehicle for framing the training. There are numerous tracks and paths that allow your team to specialise. The Cloud Practitioner certification is a great, accessible one to start with. From there, team members can specialise in the developer, data analytics or DevOps tracks. While you may not use every service covered in each certification, it is a very structured method for upskilling. Your team members should be motivated to gain an industry-recognised certification, and you should encourage them by providing access to training resources for their chosen certifications. Time should also be allocated for the team to upskill: Verizon Connect allocates 5% of every week for everybody to spend on training, and more time can be supported if needed. Engineering managers should not be exempt from this upskilling. If you are managing a team of engineers, you must set an example by upskilling yourself.

A major added benefit of aligning the team’s development plan with the migration project has been a dramatic increase in staff satisfaction. We measure team sentiment quarterly, and since engaging the team on this initiative, sentiment has shot up. The main reasons are engagement and motivation. The team had been supporting several on-premise technologies that were going out of support, and they have responded very positively to the chance to rebuild it all as a cutting-edge data platform in the cloud.

Cost-aware

One big difference between cloud and on-premise systems is operational versus capital expenditure. In a typical on-premise setup, a platform is sized for 3 to 5 years of growth, purchased, racked and stacked. This is funded with a one-off capital investment, and the developers’ job from that point is to make a return on that investment. In a cloud operational cost model, costs are paid monthly and the pressure is to keep those monthly costs down. Every new deployment has the potential to increase your monthly bill. On-premise, there is a ceiling on how many resources (CPU, storage, RAM) your change can consume. In the cloud, there may be no ceiling, and the first warning you see about an inefficient process may be a massive cloud bill. In training up your team, cost-awareness should be a key concept. To explore this concept further, check out Simon Wardley and Wardley Maps.
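One cheap guard against that surprise bill is to project month-end spend from month-to-date spend and alert when the projection exceeds budget. The Python sketch below uses naive linear extrapolation; the function names and figures are hypothetical, and in practice you would feed it from your billing data or rely on a managed service such as AWS Budgets instead.

```python
import calendar
from datetime import date


def projected_month_end(spend_to_date: float, today: date) -> float:
    """Linearly extrapolate month-to-date spend to the end of the month."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    return spend_to_date / today.day * days_in_month


def over_budget(spend_to_date: float, today: date, monthly_budget: float) -> bool:
    """True if the linear projection for this month exceeds the budget."""
    return projected_month_end(spend_to_date, today) > monthly_budget


if __name__ == "__main__":
    # Hypothetical figures: 10 days into a 30-day month with 1,000 spent so far.
    today = date(2024, 4, 10)
    print(projected_month_end(1000.0, today))  # 3000.0
    print(over_budget(1000.0, today, 2500.0))  # True
```

Linear extrapolation ignores spiky workloads such as month-end batch jobs, so treat it as an early warning rather than a forecast.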

Summary

It has taken me some time to finish this article, and in the meantime AWS has published guidance on developing an application migration methodology. It recommends grouping your data objects into waves and executing each wave separately, which would seem to validate my point on domain-driven migration. In closing, these thoughts are my own on how you should start a cloud data migration. If you find them helpful, please get in touch via the comments below and I can share more on individual points.

*Data platform could mean a data lake, ODS, data warehouse, lakehouse, etc.
