This article is also available on Medium.

If you've ever visited one of Apple's product pages, you may have seen lots of gimmicks triggered by scrolling.

I initially thought this animation was implemented with a video tag. It's common to build a scroll animation from a video by changing its seek position. (Spoiler alert: this isn't a video tag!)

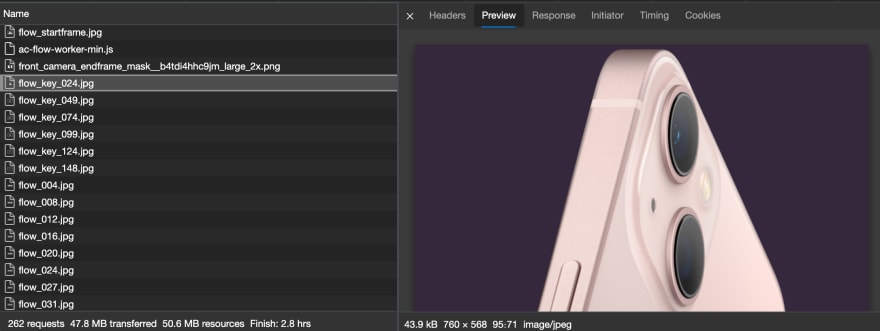

If you take a look at the network activity, you'll find a few images which are used for the animation.

But you'll find something strange. Not all frames are complete images. Almost all of them look like the image below: shredded fragments.

What's going on? Let's dive into it!

Background: How does video compression work?

I mentioned using video for animation. So what does a video (and its compression algorithm) actually do? Please take a look at this article.

How Modern Video Compression Algorithms Actually Work - Make Tech Easier

I won't go into much detail here. Basically, it generates difference data between consecutive frames, so that pixels from the previous frame can be reused if they didn't change.
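To make the idea concrete, here is a minimal sketch of frame differencing on 8x8 blocks. It is not how a real codec works (those add motion compensation, transforms and much more), and the frame layout and the names `changedBlocks` and `blockDiffers` are my own assumptions for illustration.

```javascript
// Hypothetical helper: list the blocks that differ between two frames.
// A frame is a plain object { width, height, data } where data holds one
// grayscale value per pixel (an assumption to keep the sketch short).
function changedBlocks(prev, next, blockSize = 8) {
  const cols = Math.ceil(next.width / blockSize);
  const rows = Math.ceil(next.height / blockSize);
  const changed = [];
  for (let by = 0; by < rows; by++) {
    for (let bx = 0; bx < cols; bx++) {
      if (blockDiffers(prev, next, bx, by, blockSize)) {
        changed.push(by * cols + bx); // row-major block index
      }
    }
  }
  return changed;
}

// True if any pixel inside block (bx, by) differs between the two frames.
function blockDiffers(prev, next, bx, by, blockSize) {
  const yEnd = Math.min((by + 1) * blockSize, next.height);
  const xEnd = Math.min((bx + 1) * blockSize, next.width);
  for (let y = by * blockSize; y < yEnd; y++) {
    for (let x = bx * blockSize; x < xEnd; x++) {
      const i = y * next.width + x;
      if (prev.data[i] !== next.data[i]) return true;
    }
  }
  return false;
}

// Usage: a 16x8 frame (two blocks) where one pixel in the right block changes.
const prevFrame = { width: 16, height: 8, data: new Uint8Array(16 * 8) };
const nextFrame = { width: 16, height: 8, data: new Uint8Array(16 * 8) };
nextFrame.data[3 * 16 + 12] = 255;
console.log(changedBlocks(prevFrame, nextFrame)); // [ 1 ]
```

Only the blocks in that list need to be stored for the next frame. Everything else can be copied from the previous one.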

Think Different: Apple's way to compress animation

I think you've guessed what's going on. Yes. Those strange images are difference data, and Apple implemented a video compression algorithm in its own way!

A manifest file

You can find a file named flow_manifest.json in the network activity. Its content looks like this.

    {
      "version": 5,
      "frameCount": 149,
      "blockSize": 8,
      "imagesRequired": 143,
      "reversible": true,
      "superframeFrequency": 4,
      "frames": [
        {
          "type": "diff",
          "startImageIndex": 0,
          "startBlockIndex": 0,
          "value": "ACLAKs..."
        },
        {
          "type": "diff",
          "startImageIndex": 1,
          "startBlockIndex": 0,
          "value": "ACLALA..."
        },
        {
          "type": "diff",
          "startImageIndex": 2,
          "startBlockIndex": 0,
          "value": "ACKAMA..."
        },
        ...
        {
          "type": "chapter",
          "x": 0,
          "y": 8,
          "width": 760,
          "height": 568
        },
        {
          "type": "diff",
          "startImageIndex": 48,
          "startBlockIndex": 0,
          "value": "ACFAJAD..."
        },
        ...
      ]
    }

I think blockSize is the same as the block size in video compression. It represents the size, in pixels, of the minimal unit. So 8x8 pixels are treated as one block.
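As a quick illustration of what that means for addressing, here is a small sketch that turns a pixel area into a block grid and a block index into a pixel position. I'm using the 760x568 area from the chapter entry as the example size. Whether that is the real canvas size is an assumption, and `blockGrid` and `blockOrigin` are names I made up.

```javascript
const BLOCK_SIZE = 8; // "blockSize" from the manifest

// Number of block columns and rows covering a width x height area.
function blockGrid(width, height) {
  return { cols: width / BLOCK_SIZE, rows: height / BLOCK_SIZE };
}

// Top-left pixel of a block, counting blocks row by row from the top-left.
function blockOrigin(index, cols) {
  return {
    x: (index % cols) * BLOCK_SIZE,
    y: Math.floor(index / cols) * BLOCK_SIZE,
  };
}

const grid = blockGrid(760, 568);
console.log(grid);                        // { cols: 95, rows: 71 }
console.log(blockOrigin(100, grid.cols)); // { x: 40, y: 8 }
```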

The value fields in the frames array are pretty long. They seem to be encoded in some way.

I found a curious function in the bundled JavaScript file.

    _parseFrame(t) {
        var e = this.canvasElement.width / 8
          , i = this.canvasElement.height / 8
          , s = t.value
          , a = t.startImageIndex
          , n = t.startBlockIndex;
        if ("keyframe" === t.type || "chapter" === t.type)
            return {
                type: t.type,
                width: t.width,
                height: t.height,
                x: t.x,
                y: t.y
            };
        let o = []
          , h = []
          , l = n;
        for (let t = 0; t < i; t++)
            o.push(new r(e)),
            h.push({});
        for (let t = 0; t < s.length; t += 5) {
            let i = this._createNumberFromBase64Range(s, t, 3)
              , r = this._createNumberFromBase64Range(s, t + 3, 2);
            for (let t = i; t < i + r; t++) {
                let i = Math.floor(t / e)
                  , s = t % e;
                o[i].set(s);
                try {
                    h[i]["" + s] = l
                } catch (t) {}
                l++
            }
        }
        return {
            type: "diff",
            startImageIndex: a,
            rowMasks: o,
            rowDiffLocations: h
        }
    }
    _valueForCharAt(t, e) {
        var i = t.charCodeAt(e);
        return i > 64 && i < 91 ? i - 65 : i > 96 && i < 123 ? i - 71 : i > 47 && i < 58 ? i + 4 : 43 === i ? 62 : 47 === i ? 63 : void 0
    }
    _createNumberFromBase64Range(t, e, i) {
        for (var s = 0; i--; )
            s += this._valueForCharAt(t, e++) << 6 * i;
        return s
    }

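It apparently reads the value data as a concatenation of Base64-encoded numbers: each group of five characters holds a three-character number followed by a two-character number. It then generates an object with type, startImageIndex, rowMasks and rowDiffLocations.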

You can try this function on your own machine with a bit of modification. You'll get a sequence of numbers as rowMasks and a sequence of key-value data as rowDiffLocations.
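If you don't want to patch the original, here is a standalone sketch of the same decoding that runs in plain Node.js. The function names, the plain-object output (instead of the bitset class `r`) and the 95-column grid in the usage lines are my own assumptions. Only the character mapping and the 3 + 2 character grouping come from the code above.

```javascript
// Map one Base64 character to its 6-bit value (A-Z, a-z, 0-9, +, /).
function valueForCharAt(str, pos) {
  const c = str.charCodeAt(pos);
  if (c > 64 && c < 91) return c - 65;  // 'A'..'Z' -> 0..25
  if (c > 96 && c < 123) return c - 71; // 'a'..'z' -> 26..51
  if (c > 47 && c < 58) return c + 4;   // '0'..'9' -> 52..61
  if (c === 43) return 62;              // '+'
  if (c === 47) return 63;              // '/'
  return undefined;
}

// Read `count` characters starting at `start` as one big-endian base-64 number.
function numberFromBase64Range(str, start, count) {
  let n = 0;
  for (let i = 0; i < count; i++) {
    n = n * 64 + valueForCharAt(str, start + i);
  }
  return n;
}

// Split a value string into runs: 3 characters of start position, 2 of length.
// Unlike the original loop, a trailing incomplete group is ignored.
function decodeRuns(value) {
  const runs = [];
  for (let i = 0; i + 5 <= value.length; i += 5) {
    runs.push({
      start: numberFromBase64Range(value, i, 3),
      length: numberFromBase64Range(value, i + 3, 2),
    });
  }
  return runs;
}

// Expand runs into per-block entries: where each block lands on the canvas
// grid and which block of the diff image it is copied from.
function expandRuns(runs, cols, startBlockIndex = 0) {
  const blocks = [];
  let source = startBlockIndex;
  for (const run of runs) {
    for (let pos = run.start; pos < run.start + run.length; pos++) {
      blocks.push({ row: Math.floor(pos / cols), col: pos % cols, source: source++ });
    }
  }
  return blocks;
}

// Usage: the first five characters of the first frame's value in the manifest.
const runs = decodeRuns("ACLAK");
console.log(runs);                    // [ { start: 139, length: 10 } ]
console.log(expandRuns(runs, 95)[0]); // { row: 1, col: 44, source: 0 }
```

So the first group says "10 consecutive blocks changed, starting at block 139", and those blocks are taken one after another from the diff image.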

How is this data used? There is a function for that.

    _applyDiffFrame(t, e=[]) {
        a(t >= 0 && t < this.manifest.frames.length, "Frame out of range."),
        a("diff" === this.manifest.frames[t].type, "Invalid attempt to play a keyframe as a diff.");
        const i = this.canvasElement.width / 8
          , s = this.manifest.frames[t].image
          , r = s.width / 8;
        for (let a = 0; a < this.manifest.frames[t].rowMasks.length; a++) {
            let n = this.manifest.frames[t].rowMasks[a]
              , o = this.manifest.frames[t].rowDiffLocations[a]
              , h = this._rangesFromRowMask(n, o, a, i, r);
            if (h.length < 1)
                continue;
            let l = e.map((t=>this.manifest.frames[t].rowMasks[a]))
              , c = n;
            for (; l.length > 0; ) {
                let t = l.pop();
                c = c.and(t.xor(this._bitsetForNAND))
            }
            let u = this._rangesFromRowMask(c, o, a, i, r);
            u.length > h.length && (u = h);
            for (let t = 0; t < u.length; t++)
                this._applyDiffRange(u[t], s, r, i)
        }
    }
    _rangesFromRowMask(t, e, i, s, a) {
        let n = []
          , o = null;
        if (t.isEqual(new r(s)))
            return [];
        let h = s;
        for (let r = 0; r < h; r++)
            if (t.get(r)) {
                for (o = {
                    location: i * s + r,
                    length: 1,
                    block: e[r]
                },
                o.block; ++r < h && t.get(r); ) {
                    0 === (o.block + o.length) % a ? (n.push(o),
                    o = {
                        location: o.location + o.length,
                        length: 1,
                        block: e[r]
                    }) : o.length++
                }
                n.push(o)
            }
        return n
    }
    _applyDiffRange(t, e, i, s) {
        var r = t.block
          , a = r % i * 8
          , n = 8 * Math.floor(r / i)
          , o = 8 * t.length
          , h = t.location % s * 8
          , l = 8 * Math.floor(t.location / s);
        this.glCanvas.drawImage(e, a, n, o, 8, h, l, o, 8)
    }

It's pretty complicated. I guess it first determines, row by row, which blocks should be modified by using rowMasks. Everything not marked there stays the same.

Then it looks up each horizontal location to be modified in rowDiffLocations, which seems to map a position on the canvas to the index of the matching block in the compressed image.

The actual redrawing is done in the _applyDiffRange function.
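Here is how I read the arithmetic in _applyDiffRange, rewritten with readable names. This is only a sketch: `drawArgsForRange` is my own name, the 32-column diff image in the usage lines is a made-up value, and I assume the range does not wrap past the end of a row in the diff image (the original splits ranges to guarantee that).

```javascript
const BLOCK = 8;

// Translate one range into the source and destination rectangles for drawImage.
// range.block:    index of the first block inside the diff image
// range.location: index of the first block on the canvas
// range.length:   number of consecutive blocks to copy
function drawArgsForRange(range, imageCols, canvasCols) {
  const sx = (range.block % imageCols) * BLOCK;
  const sy = Math.floor(range.block / imageCols) * BLOCK;
  const dx = (range.location % canvasCols) * BLOCK;
  const dy = Math.floor(range.location / canvasCols) * BLOCK;
  const width = range.length * BLOCK;
  // Same order as drawImage after the image: sx, sy, sw, sh, dx, dy, dw, dh
  return [sx, sy, width, BLOCK, dx, dy, width, BLOCK];
}

// Usage: copy 10 blocks starting at block 40 of a 32-column diff image
// to block 139 of a 95-column canvas.
const args = drawArgsForRange({ block: 40, location: 139, length: 10 }, 32, 95);
console.log(args.join(", ")); // 64, 8, 80, 8, 352, 8, 80, 8
```

With a 2D canvas context you could then call `ctx.drawImage(image, ...args)`. Each range becomes a single strip one block high, so a run of changed blocks costs only one draw call.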

But why?

Why did Apple implement it this way? It seems like reinventing the wheel. Why not simply use a video tag?

I think there are two reasons.

Loading performance

With this algorithm, you can prioritize loading specific frames first.

Imagine scrolling really fast right after the page loads, or simply using a slow internet connection. Not all frames may be loaded at that point. But with this algorithm it's possible to load the key frames first, so you can see at least a low frame rate version of the animation. It resembles loading low quality images first and then swapping them for high quality ones.

I think the actual loading order supports this theory.
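To illustrate the idea (not Apple's actual loader, which I haven't traced), here is a hypothetical sketch of such a prioritized loading order. The `loadOrder` function is my own invention, and so is treating both "keyframe" and "chapter" entries as the frames to fetch first.

```javascript
// Hypothetical: return frame indices in the order they should be fetched.
// Frames flagged as key frames come first, the rest follow in playback order.
function loadOrder(frames) {
  const isKey = (frame) => frame.type === "keyframe" || frame.type === "chapter";
  const keys = [];
  const rest = [];
  frames.forEach((frame, index) => (isKey(frame) ? keys : rest).push(index));
  return [...keys, ...rest];
}

// Usage: four frames where the third one is a key frame.
const sampleFrames = [
  { type: "diff" },
  { type: "diff" },
  { type: "chapter" },
  { type: "diff" },
];
console.log(loadOrder(sampleFrames)); // [ 2, 0, 1, 3 ]
```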

Chroma format

Have you ever heard of chroma subsampling? It's a traditional way to reduce the amount of data.

Chroma subsampling - Wikipedia

If you choose anything lower than 4:4:4 chroma, part of the color information is dropped. Not all pixels keep their original color information.

So you need to use 4:4:4 chroma if you want better picture quality.
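To get a feeling for how much is dropped, here is a small back-of-the-envelope calculation of the raw samples per frame. The 760x568 size is just the area from the manifest used as an example, and `samplesPerFrame` is a name I made up.

```javascript
// Raw samples stored per frame for a given chroma subsampling scheme.
// hDiv / vDiv: how many luma pixels share one chroma sample
// horizontally / vertically (1 and 1 for 4:4:4, 2 and 2 for 4:2:0).
function samplesPerFrame(width, height, hDiv, vDiv) {
  const luma = width * height;
  const chromaPlane = Math.ceil(width / hDiv) * Math.ceil(height / vDiv);
  return luma + 2 * chromaPlane; // one Y plane plus Cb and Cr planes
}

const full = samplesPerFrame(760, 568, 1, 1); // 4:4:4
const sub = samplesPerFrame(760, 568, 2, 2);  // 4:2:0
console.log(full, sub, sub / full); // 1295040 647520 0.5
```

So 4:2:0 keeps only half of the raw samples, and everything that's missing is color information.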

By the way, it's common to use 4:2:0 chroma for video encoding. You need to use the Hi444PP profile on AVC if you want to encode with 4:4:4 chroma. Although today's devices have hardware video decoders for AVC/HEVC, I couldn't find a consumer device with hardware acceleration for the Hi444PP profile.

So it may be difficult to achieve smooth animation with 4:4:4 chroma pictures if you're using videos.

How about the chroma format of Apple's animation data?

I examined some frames with exiftool. And of course it's 4:4:4 chroma!!

Y Cb Cr Sub Sampling : YCbCr4:4:4 (1 1)

I initially thought Apple's algorithm wasn't efficient, but it's not really inefficient compared to software-decoded videos. It may be reasonable to use this algorithm if you need to show 4:4:4 chroma pictures. Apple really is obsessed with quality. 😁
