Most of the progress made in computer science and technology stands on the platform of correctness and efficiency. Correctness: hardware and software determinism, the idea that if we want to live in a digital world, the hardware can’t fail and the software can’t fail. No bugs in the hardware. Period. And when there’s a bug in the code, fix it quick, or you might as well scrap it all. Efficiency: go faster, do more, squeeze out every last compute cycle. And we adorn you with adulation for being the elite among us.

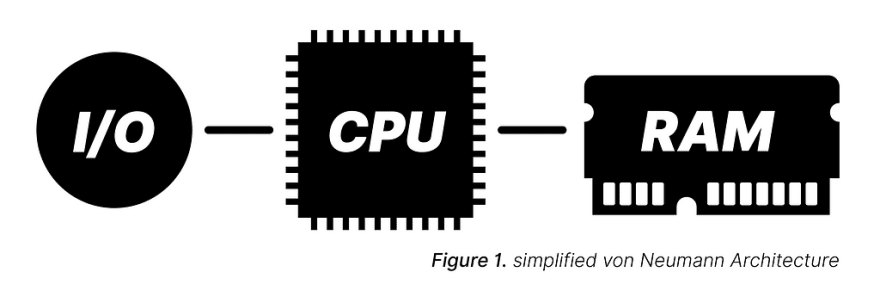

Centralized processing — the CPU — necessary to manage and maintain correctness; computation only happens with the perfect linear path of execution, and we make sure it’s efficient. Random-access memory, the ability to organize information with teleportation — incredibly efficient.

But today, Moore’s law is straining under the weight of its own progress. Hardware is pushed to the very limits of material science, making it more fragile than ever. Software still has bugs, and the security repercussions of the entire system are having increasingly devastating consequences on our socio-economic world. And yet, we still want to go faster, do more.

The history here is rich and complex, so excuse me for leaving most of it out. Getting to the point is important, and digging into the details later is not a fruitless effort; I encourage it. I, myself, need to do more of it.

This architectural foundation was established long ago, and it was quick to deliver in capability and growth. So much so, that many stopped questioning it. But in not questioning it, we miss the opportunity to ask if there’s another way to compute. And there is.

There’s an uncharted world of computer science and we’ve found a door to it.

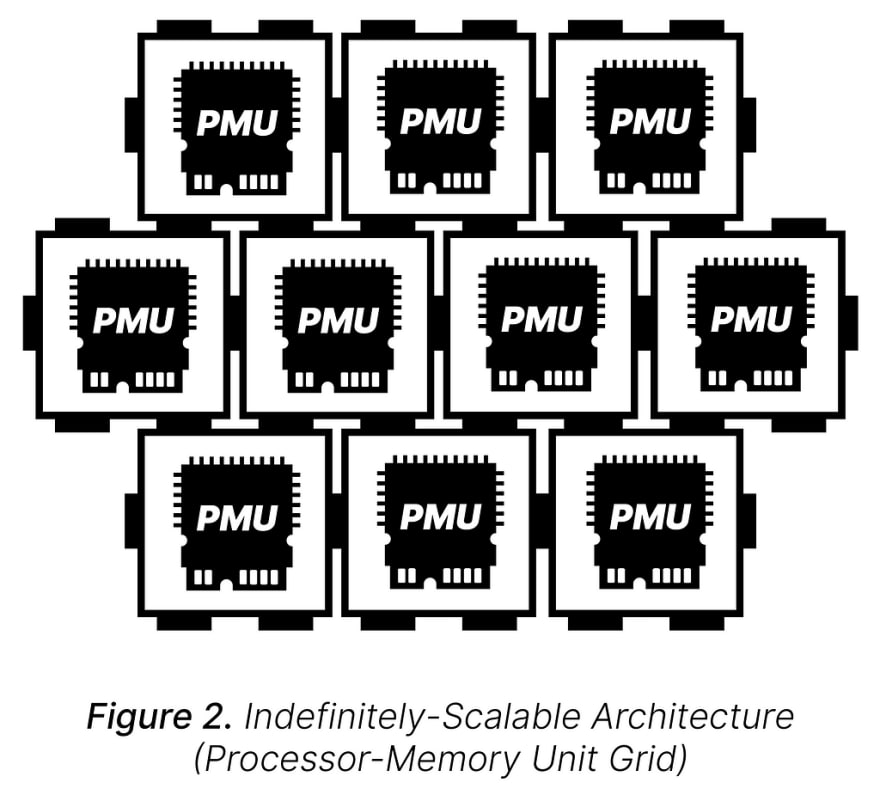

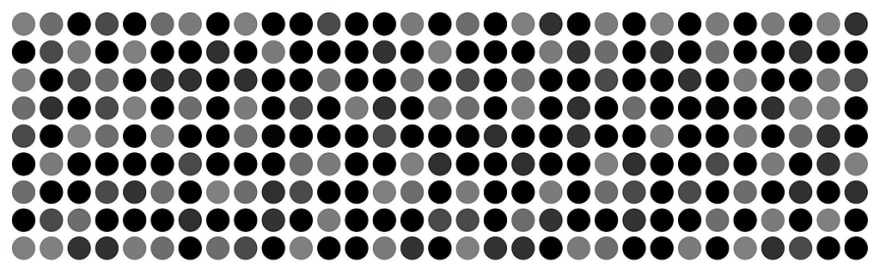

A place where centralized processing doesn’t exist. It’s replaced with distributed processing that removes the bottleneck and allows for indefinite hardware and software scalability. This alone is a concept that unlocks a path worthy of traveling down. It’s a place with no concept of RAM. Instead, it’s much like our own physical world: spatially architected, which is limiting from the perspective of efficiency, but importantly so. A centralized manager of process execution is replaced by distributed, randomly executing actors that compute by way of cause and effect. And if their cause has no effect, perhaps they just try again, their best-effort actions working together to achieve a localized computational goal. A world where bugs are constant, and yet they do little to stifle progress and execution. Where hardware can blow up, and the system perseveres.
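To make that a little more concrete, here is a toy sketch in Python. It is not the Movable Feast Machine and not any algorithm from Dr. Ackley's work; the names `run_events`, `fail_rate`, and `is_sorted` are all made up for illustration. It assumes a one-dimensional grid of numbers, where each event picks a random site, looks only at the immediate neighbor, and swaps if the pair is out of order. Some events are simply dropped, standing in for flaky hardware, and nothing coordinates the actors, yet the grid should still settle into sorted order.

```python
import random


def run_events(grid, events, fail_rate=0.1, seed=0):
    """Fire `events` random local events on a 1-D grid of numbers, in place.

    Hypothetical toy model: there is no central scheduler and no global view.
    Each event touches only one site and its right-hand neighbor.
    """
    rng = random.Random(seed)
    for _ in range(events):
        # A random actor wakes up; there is no fixed order of execution.
        site = rng.randrange(len(grid) - 1)
        if rng.random() < fail_rate:
            # Best-effort: this event is lost. A later event may try again.
            continue
        # Purely local cause and effect: compare with the neighbor and swap.
        if grid[site] > grid[site + 1]:
            grid[site], grid[site + 1] = grid[site + 1], grid[site]
    return grid


def is_sorted(grid):
    """True when every element is <= the one to its right."""
    return all(a <= b for a, b in zip(grid, grid[1:]))


grid = [7, 3, 9, 1, 8, 2, 6, 4, 5, 0]
run_events(grid, events=5000)
print(grid, is_sorted(grid))
```

Raising `fail_rate` slows things down but does not break them: a lost event costs time, not correctness, because any later event at the same site can redo the work. That trade of efficiency for sturdiness is the point of the sketch.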

This is the world of robust-first and best-effort computing. A place where efficiency and correctness still play a role, but not the role. A place where robustness, durability, thick-skin — call it what you will — enters into the platform as a first-class concept, bringing with it incredible sturdiness to our ability to compute and to grow our technology into the future, much further and safer than ever thought possible.

When you see the software that runs here, as rudimentary as it may be today, it looks much less like any construct of software you have built in your head. Instead, it looks like life. And in fact, living systems principles play a major foundational role in how you make computation happen robustly in this system. For any AI enthusiasts out there, whether you’re an expert in building complex neural networks or just a fan of these concepts that you hope to understand one day, this world feels like a step in a new direction that, in its simplicity, unlocks a door to understanding our own world and the living systems within it.

There’s more — a lot more — to it all. But, this post is not a comprehensive explainer. It’s barely a teaser. And in fact, herein lies the last point, maybe the most important point to make. The answers to your questions, many of them don’t even exist yet. And so, there is an incredible opportunity for us all, one that is rare. To journey into a world of computer science that is undeclared and undocumented. A place where the simplest observations matter, where experimentation is the only work to do in order to have a chance of colonizing this new world.

Right now there is a small group of us, led by the person who has spent the most time in this new world, Dr. of Computer Science and Associate Professor Emeritus, David Ackley. And, perhaps like you, we’re not the most intellectually equipped to go there, but there’s something about it that we can’t stop thinking about. Like an adventure to be seized that fuels our excitement for the possible. Please consider joining us, please consider asking about possibly joining us. If any of these ideas resonate or intrigue you, you’re qualified, you’re hired, we would love to have you.

So what now? The following is a link dump that can help you learn more about the Robust-First Computing movement.

- We congregate in our Gitter Chat Room — come say hi, ask questions!

- The T2 Tile Project is Dr. Ackley’s weekly vlog and journey into building an indefinitely scalable hardware platform on top of robust-first concepts. A long, but incredibly worthwhile rabbit hole.

- A very notable video from the T2 Tile Project, affectionately known as the Christmas rant, does a great job of explaining what this new type of computing is and why it may be very worthwhile from many perspectives.

- You can also find many useful talks diving into the depths of the Robust-First ecosystem and concepts on his personal YouTube channel.

- A few notable starting points are Robust-First Computing: Beyond Efficiency and Demon Horde Sort.

- If you want to see what this all looks like, my biggest contribution to the project thus far has been the website mfm.rocks, which is a loosely interpreted simulation of Dr. Ackley’s Movable Feast Machine. It doesn’t follow all of the rules perfectly, but it is enough to show you how computation might happen in this decentralized, actor-based world. This project is open-source and I welcome contributions!

- The Robust-First Wiki dives into the constructs of the current software and some hardware of this new architecture. It can also lead you to running the MFM on your own machine.

- You can find more research and links at Dr. Ackley’s website

If you got this far, thanks for reading. If you have any thoughts or questions, I would love to see them show up in the comments! I’ll leave you with the Robust-First Computing Team Creed:
