UNIX was designed around the idea that no single complex, monolithic tool should do everything. Instead, UNIX relies on small, pluggable components that are of limited use on their own but can perform powerful operations when combined. Take the ps command as an example: on its own, ps displays the processes currently running on your UNIX box. It has a decent number of flags that let you display many aspects of each process. For example:

- The user that started a process

- How much CPU each running process is using

- The command that was used to start the process, and a lot more

The ps command does an excellent job of displaying information about the running processes. However, ps has no flag for filtering its output by an arbitrary text pattern. This is not a missing feature in the tool; it is intentional.

There is another tool that does an excellent job of filtering whatever text is fed into it: grep. So, using the pipe character (|), you can filter the output of ps to show only the SSH processes running on your system, like this: ps -ef | grep -i ssh. The ps tool is concerned with displaying every possible aspect of the running processes. The grep command is concerned with filtering text, any text, in many different ways.

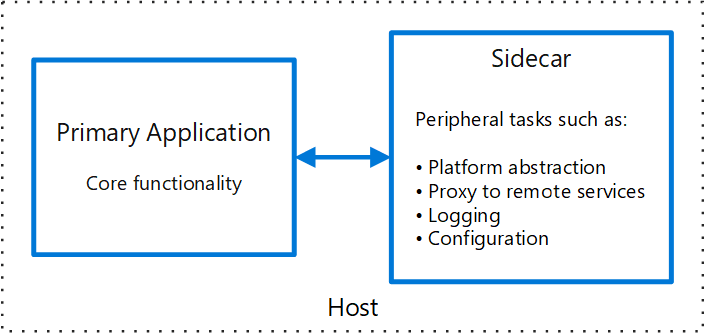

Because of this combination of power and simplicity, the UNIX principle has been adopted in many domains beyond operating systems. In Kubernetes, for example, each container should do only one job and do it well. You might ask: what if the container's job requires extra procedures to aid or enhance it? There is nothing to worry about. In the same way that we piped the output of the ps command to grep, we can use another container sitting beside the main one in the same Pod. That second container carries out the auxiliary logic that the first container needs to function correctly, and it is commonly known as a Sidecar.

What Does a Sidecar Container Do?

A Pod is the basic atomic unit of deployment in Kubernetes. Typically, a Pod contains a single container. However, multiple containers can be placed in the same Pod. All containers running in the same Pod share the Pod's network interface and can mount the same volumes. Under the hood, each Pod is backed by an infrastructure container that executes the pause command. Its sole purpose is to hold the network interface and the Linux namespaces in which the other containers run. A Sidecar container is a second container added to the Pod definition. It must be placed in the same Pod because it needs to use the same resources as the main container. Let's walk through an example to demonstrate a use case of this pattern.

Scenario: Log-Shipping Sidecar

In this scenario, we have a web server container running the nginx image. The access and error logs produced by the web server are not critical enough to be placed on a Persistent Volume (PV). However, developers need access to the last 24 hours of logs so they can trace issues and bugs. Therefore, we need to ship the web server's access and error logs to a log-aggregation service. Following the separation of concerns principle, we implement the Sidecar pattern by deploying a second container that ships the error and access logs from nginx. Nginx does one thing: serving web pages. The second container also specializes in a single task: shipping logs. Since both containers run in the same Pod, we can use a shared emptyDir volume that one container writes the logs to and the other reads them from. The definition file for such a Pod may look as follows:

    apiVersion: v1
    kind: Pod
    metadata:
      name: webserver
    spec:
      volumes:
        - name: shared-logs
          emptyDir: {}
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - name: shared-logs
              mountPath: /var/log/nginx
        - name: sidecar-container
          image: busybox
          command: ["sh","-c","while true; do cat /var/log/nginx/access.log /var/log/nginx/error.log; sleep 30; done"]
          volumeMounts:
            - name: shared-logs
              mountPath: /var/log/nginx

The above definition is a standard Kubernetes Pod definition, except that it deploys two containers to the same Pod. The sidecar container conventionally comes second in the definition so that when you issue the kubectl exec command, you target the main container by default. The main container is an nginx container that stores its logs on a volume mounted at /var/log/nginx. In the official nginx image, access.log and error.log in that directory are symbolic links to the standard output and standard error streams. Mounting a volume at that location hides those links, which prevents Nginx from sending its log data to the standard output and forces it to write to regular access.log and error.log files instead.

Side Note on Log Aggregation

Notice that the default behaviour of the Nginx image is to send its logs to the standard output, where they are picked up by Docker's log collector. Docker stores those logs under /var/lib/docker/containers/container-ID/container-ID-json.log on the host machine. Because every container on the host (including containers from different Pods) has its logs stored under the same location, you can use a DaemonSet to deploy a log-collector container like Filebeat or Logstash to collect those logs and send them to a log aggregator like Elasticsearch. You will need to mount /var/lib/docker/containers as a hostPath volume in the DaemonSet Pod to give the log-collector container access to the logs.

The sidecar container runs alongside the nginx container in the same Pod, which gives it access to the same volume as the web server. In the Pod definition above, the cat command simulates sending the log data to a log aggregator every 30 seconds.
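The cat loop re-prints both log files in full on every pass, so a real shipper would need to forward only what is new. As a rough sketch of that idea (not the method used by any particular log shipper), the Python below remembers a byte offset per file and forwards only the complete lines appended since the last pass. The names read_new_lines and ship_logs, and the send callback, are hypothetical placeholders; in practice send would wrap whatever client your log-aggregation service provides.

```python
import os
import time
from typing import Callable, Dict, List, Optional, Sequence, Tuple


def read_new_lines(path: str, offset: int) -> Tuple[List[str], int]:
    """Return the complete lines appended to `path` after byte `offset`,
    together with the offset to resume from on the next call."""
    try:
        size = os.path.getsize(path)
    except OSError:
        return [], offset  # file not created yet (or unreadable); retry later
    if size < offset:
        offset = 0  # file shrank, so it was truncated or rotated; start over
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    # Consume only up to the last newline so a half-written line
    # is left in place for the next pass.
    end = data.rfind(b"\n") + 1
    lines = [
        raw.decode("utf-8", errors="replace")
        for raw in data[:end].split(b"\n")[:-1]
    ]
    return lines, offset + end


def ship_logs(
    paths: Sequence[str],
    send: Callable[[str, str], None],  # hypothetical: send(path, line)
    interval: float = 30.0,
    max_cycles: Optional[int] = None,  # None means poll forever
) -> Dict[str, int]:
    """Poll each file every `interval` seconds and pass new lines to `send`."""
    offsets = {path: 0 for path in paths}
    cycle = 0
    while max_cycles is None or cycle < max_cycles:
        for path in paths:
            lines, offsets[path] = read_new_lines(path, offsets[path])
            for line in lines:
                send(path, line)
        cycle += 1
        if max_cycles is None or cycle < max_cycles:
            time.sleep(interval)
    return offsets
```

For instance, calling ship_logs(["/var/log/nginx/access.log", "/var/log/nginx/error.log"], send=lambda path, line: print(path, line)) would poll both files every 30 seconds and print each new line. The offsets live only in memory, so a restarted sidecar would re-send each file from the beginning; persisting them is left out to keep the sketch short.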
