The new GUI to build ReAct agents in watsonx.ai

Introduction

Building agents through a GUI is now more tightly integrated into watsonx.ai Studio. The agent builder is visible at first glance in the Studio, at the same level as the Prompt Lab.

Interface and functionality breakdown

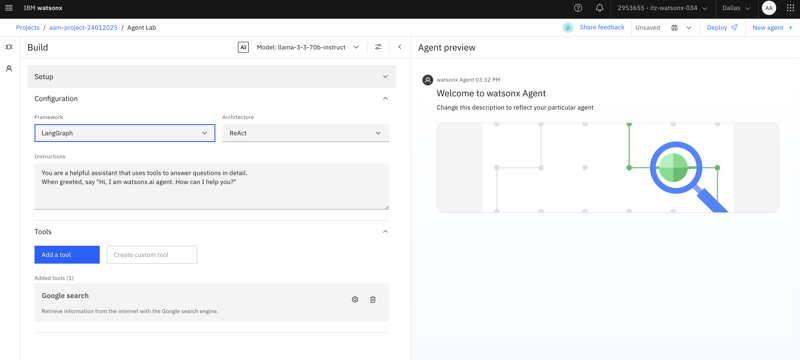

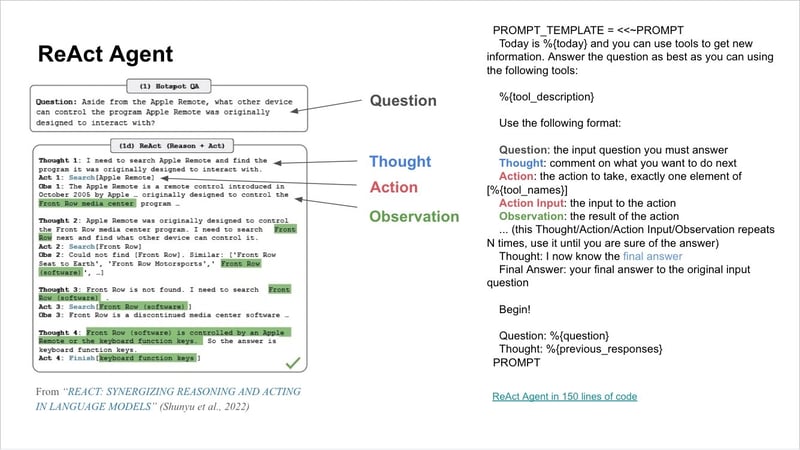

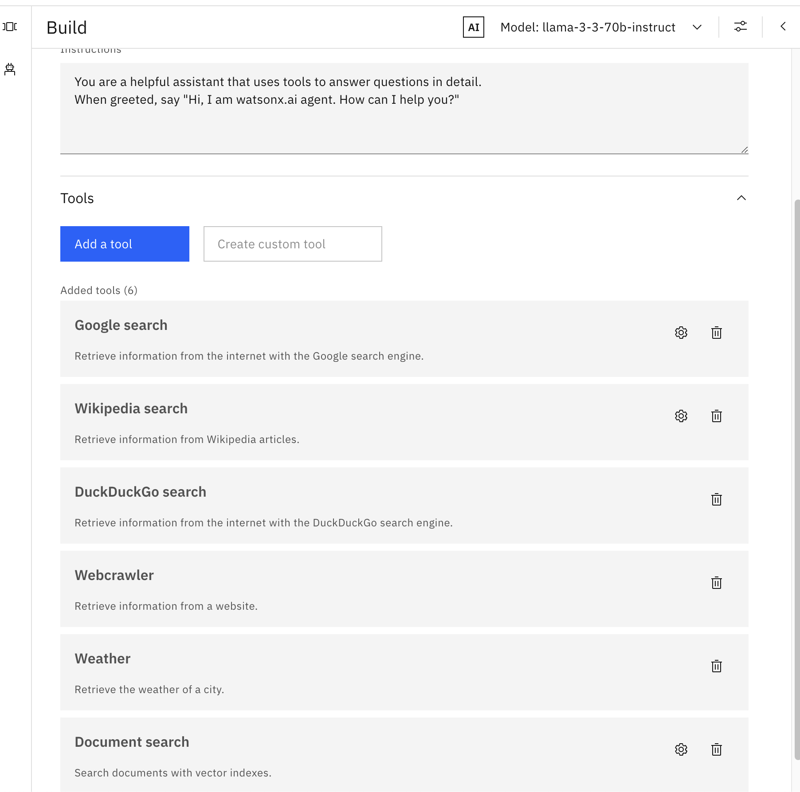

The UI is intuitive and straightforward to use. The supported architecture is ReAct, and the supported framework is LangGraph.

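ReAct (Reasoning and Acting) is a pattern that improves how large language models (LLMs) interact with their environment. The model alternates between reasoning about the task and acting (for example, calling a tool), and feeds each action's result, the observation, back into its next reasoning step.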
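To make the reason/act/observe cycle concrete, here is a minimal pure-Python sketch. It is not how watsonx.ai or LangGraph implement ReAct. The "LLM" is replaced by a hypothetical scripted `policy` function, and the weather tool is a hypothetical dictionary lookup; only the loop structure is the point.

```python
from typing import Callable, Optional

# A tool call is a (tool_name, tool_input) pair.
Action = tuple[str, str]
# A policy stands in for the LLM: given the question and the trace so far,
# it returns (thought, action or None, final answer or None).
Policy = Callable[[str, list[str]], tuple[str, Optional[Action], Optional[str]]]


def react_loop(question: str, policy: Policy,
               tools: dict[str, Callable[[str], str]],
               max_steps: int = 5) -> tuple[str, list[str]]:
    """Alternate reasoning and acting until the policy returns an answer."""
    trace: list[str] = []
    for _ in range(max_steps):
        thought, action, answer = policy(question, trace)
        trace.append(f"Thought: {thought}")
        if answer is not None:
            trace.append(f"Answer: {answer}")
            return answer, trace
        if action is None:
            raise ValueError("policy returned neither an action nor an answer")
        tool_name, tool_input = action
        # Act, then record the observation so the next reasoning step can use it.
        observation = tools[tool_name](tool_input)
        trace.append(f"Action: {tool_name}({tool_input})")
        trace.append(f"Observation: {observation}")
    return "No answer within the step budget", trace


# Hypothetical scripted policy, standing in for a real LLM.
def scripted_policy(question: str, trace: list[str]):
    observations = [t for t in trace if t.startswith("Observation: ")]
    if not observations:
        return "I need the current weather.", ("weather", "Paris"), None
    temperature = observations[-1].removeprefix("Observation: ")
    return "I have what I need.", None, f"It is {temperature} in Paris."


# Hypothetical weather tool backed by a fixed dictionary.
fake_weather = {"Paris": "18°C"}
tools = {"weather": lambda city: fake_weather.get(city, "unknown")}
answer, trace = react_loop("What is the weather in Paris?", scripted_policy, tools)
print(answer)
print("\n".join(trace))
```

The `max_steps` budget matters in practice. It bounds how many tool calls the agent may make before giving up.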

LangGraph is a framework for building stateful, multi-actor applications with LLMs. The application is modeled as a graph of steps that share state.
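LangGraph describes an application as a graph: nodes read and update a shared state, and edges decide which node runs next. The pure-Python sketch below mimics that idea with hypothetical `agent` and `tools` nodes. It is not the LangGraph API, which builds graphs with `StateGraph` and provides `create_react_agent` to wire up a similar agent/tools loop. The list-appending merge rule only loosely imitates LangGraph's message reducer.

```python
from typing import Any, Callable

State = dict[str, Any]
Node = Callable[[State], State]


def run_graph(nodes: dict[str, Node], router: Callable[[str, State], str],
              start: str, state: State, max_steps: int = 10) -> State:
    """Run nodes over a shared state until the router returns "END"."""
    current = start
    for _ in range(max_steps):
        update = nodes[current](state)
        # Merge the node's partial update: list values are appended, others overwritten.
        for key, value in update.items():
            if isinstance(value, list):
                state[key] = state.get(key, []) + value
            else:
                state[key] = value
        current = router(current, state)
        if current == "END":
            break
    return state


# Hypothetical "agent" node: asks for a tool once, then answers.
def agent(state: State) -> State:
    if not any(m.startswith("tool:") for m in state["messages"]):
        return {"messages": ["agent: call weather"]}
    return {"messages": ["agent: final answer"]}


# Hypothetical "tools" node returning a canned observation.
def tools(state: State) -> State:
    return {"messages": ["tool: 18°C"]}


# Conditional edges: tools always hand back to the agent; the agent either calls a tool or ends.
def router(node: str, state: State) -> str:
    if node == "tools":
        return "agent"
    return "tools" if state["messages"][-1] == "agent: call weather" else "END"


final = run_graph({"agent": agent, "tools": tools}, router, "agent",
                  {"messages": ["user: weather in Paris?"]})
print(final["messages"])
```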

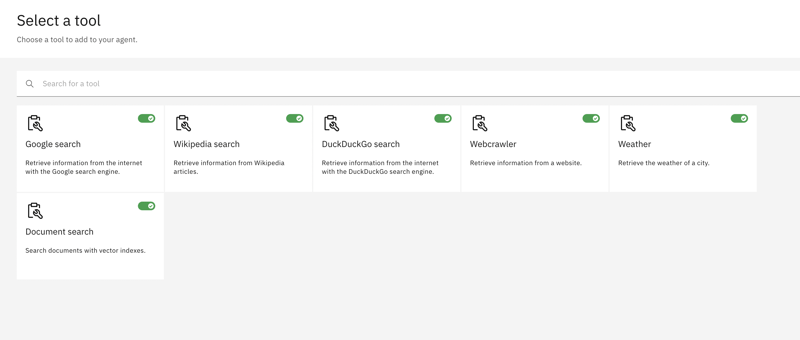

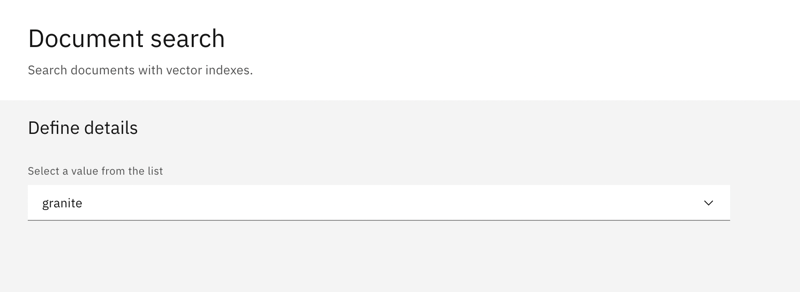

Six types of tools can be added to the agent:

- Google search

- Wikipedia search

- DuckDuckGo search

- Web crawler tool

- Weather

- Document search (for now, Granite is the only available choice)

Any number of the available tools can be selected, from a single one to all of them.
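Behind the UI, selecting tools amounts to building a list of tool objects that is handed to the agent. The generated notebook's `create_tools` does exactly this, but hard-coded. The following sketch assumes a hypothetical registry keyed by the UI labels. Strings stand in for the LangChain tool objects, and the right-hand names mirror those used in the notebook. Document search is omitted because this notebook does not use it.

```python
from typing import Callable

# Hypothetical registry: builder tool label -> factory for the tool object.
# Strings stand in for the LangChain tool objects the notebook creates.
TOOL_FACTORIES: dict[str, Callable[[], str]] = {
    "Google search": lambda: "GoogleSearch",
    "Wikipedia search": lambda: "WikipediaQueryRun",
    "DuckDuckGo search": lambda: "DuckDuckGoSearchRun",
    "Web crawler tool": lambda: "WebCrawler",
    "Weather": lambda: "Weather",
}


def build_tools(selected: list[str]) -> list[str]:
    """Instantiate only the tools the user ticked, preserving their order."""
    if not selected:
        raise ValueError("select at least one tool")
    unknown = [name for name in selected if name not in TOOL_FACTORIES]
    if unknown:
        raise ValueError(f"unknown tools: {unknown}")
    return [TOOL_FACTORIES[name]() for name in selected]


print(build_tools(["Wikipedia search", "Weather"]))
```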

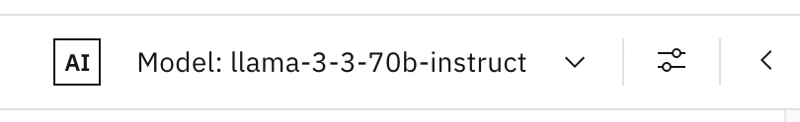

The LLM is chosen from a menu in the agent builder. In this example I keep “llama-3-3-70b-instruct”.

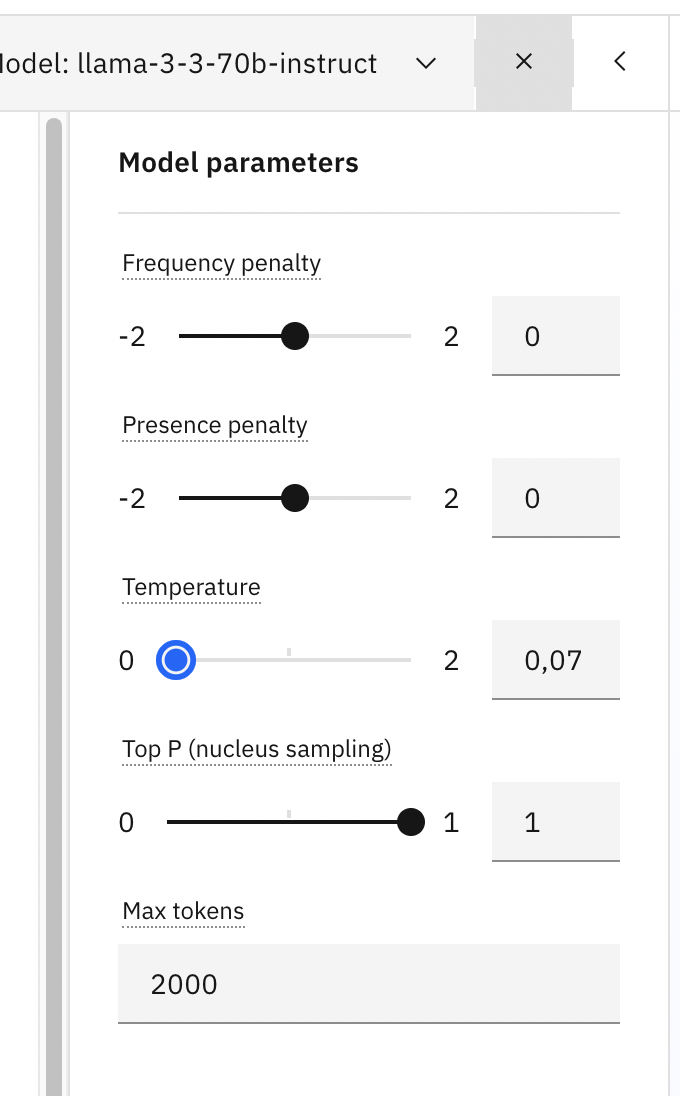

The model parameters, such as temperature, top_p and max_tokens, can then be tuned for the use case.
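The generated notebook passes these parameters to the chat model as a plain dictionary. The defaults below are copied from its `create_chat_model` function, where temperature 0 gives near-deterministic output. The sketch merges user overrides onto those defaults and range-checks them. The bounds in `ASSUMED_BOUNDS` are an assumption modeled on common chat-completion APIs, not documented watsonx.ai limits.

```python
# Defaults copied from the generated notebook's create_chat_model().
DEFAULT_PARAMS = {
    "frequency_penalty": 0,
    "max_tokens": 2000,
    "presence_penalty": 0,
    "temperature": 0,
    "top_p": 1,
}

# Assumed (low, high) bounds, modeled on common chat-completion APIs;
# None means unbounded. Check the watsonx.ai documentation for the real limits.
ASSUMED_BOUNDS = {
    "frequency_penalty": (-2, 2),
    "max_tokens": (1, None),
    "presence_penalty": (-2, 2),
    "temperature": (0, 2),
    "top_p": (0, 1),
}


def tune_parameters(overrides: dict) -> dict:
    """Merge overrides onto the defaults and reject unknown or out-of-range values."""
    params = {**DEFAULT_PARAMS, **overrides}
    for name, value in params.items():
        if name not in ASSUMED_BOUNDS:
            raise ValueError(f"unknown parameter: {name}")
        low, high = ASSUMED_BOUNDS[name]
        if value < low or (high is not None and value > high):
            raise ValueError(f"{name}={value} outside [{low}, {high}]")
    return params


print(tune_parameters({"temperature": 0.7, "max_tokens": 500}))
```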

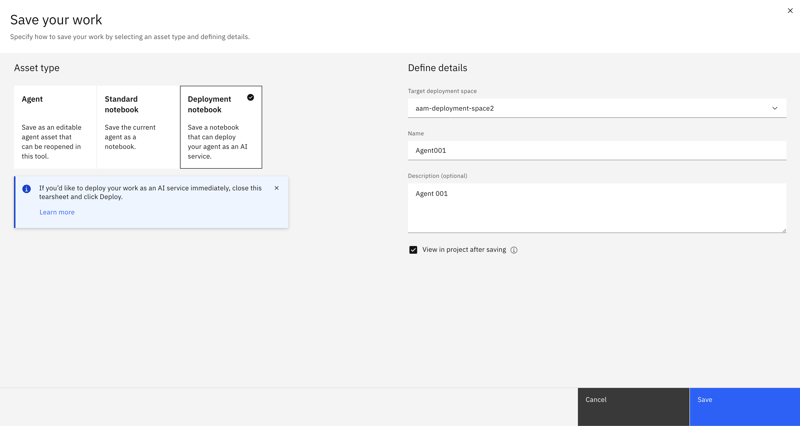

Once everything is set, save the agent. Three saving options are available.

Once the agent is saved as a notebook (as in the example above), the generated notebook is ready to deploy. It starts by collecting credentials and setting up the client:

```python
import os
import getpass
import requests

def get_credentials():
    return {
        "url" : "https://us-south.ml.cloud.ibm.com",
        "apikey" : getpass.getpass("Please enter your api key (hit enter): ")
    }

def get_bearer_token():
    # Exchange the API key for an IAM bearer token
    url = "https://iam.cloud.ibm.com/identity/token"
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    # Passing a dict lets requests form-encode (and escape) the values
    data = {
        "grant_type": "urn:ibm:params:oauth:grant-type:apikey",
        "apikey": credentials["apikey"],
    }
    response = requests.post(url, headers=headers, data=data)
    response.raise_for_status()
    return response.json().get("access_token")

credentials = get_credentials()

from ibm_watsonx_ai import APIClient

client = APIClient(credentials)

# Placeholder: your deployment space ID
space_id = "xxxxxxx"
client.set.default_space(space_id)

# Placeholder: the project the agent was built in
source_project_id = "XXXXXXXXX"

params = {
    "space_id": space_id,
}
```
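`get_bearer_token` performs a form-encoded POST to the IAM endpoint, and the JSON response carries `access_token`. The sketch below only builds that request, without touching the network, to show the encoding. `urlencode` percent-escapes reserved characters in the key. The endpoint and grant type come from the notebook; the helper name `build_iam_token_request` is hypothetical.

```python
from urllib.parse import parse_qs, urlencode

IAM_URL = "https://iam.cloud.ibm.com/identity/token"
GRANT_TYPE = "urn:ibm:params:oauth:grant-type:apikey"


def build_iam_token_request(apikey: str) -> tuple[str, dict, str]:
    """Return (url, headers, body) for exchanging an API key for a bearer token."""
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    # urlencode percent-escapes reserved characters such as "+", ":" or "&".
    body = urlencode({"grant_type": GRANT_TYPE, "apikey": apikey})
    return IAM_URL, headers, body


url, headers, body = build_iam_token_request("my+key")
print(body)
# Decoding the body gives back the original key unchanged.
print(parse_qs(body)["apikey"])
```

With the requests library, the request would then be sent as `requests.post(url, headers=headers, data=body)`.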

````python
def gen_ai_service(context, params = params, **custom):
    # import dependencies
    from langchain_ibm import ChatWatsonx
    from ibm_watsonx_ai import APIClient
    from langchain_core.messages import AIMessage, HumanMessage
    from langchain.tools import WikipediaQueryRun
    from langchain_community.utilities import WikipediaAPIWrapper
    from langchain_community.tools import DuckDuckGoSearchRun
    from langchain_community.utilities import DuckDuckGoSearchAPIWrapper
    from langgraph.checkpoint.memory import MemorySaver
    from langgraph.prebuilt import create_react_agent
    import json

    model = "meta-llama/llama-3-3-70b-instruct"
    service_url = "https://us-south.ml.cloud.ibm.com"

    # Get credentials token
    credentials = {
        "url": service_url,
        "token": context.generate_token()
    }

    # Setup client
    client = APIClient(credentials)
    space_id = params.get("space_id")
    client.set.default_space(space_id)

    def create_chat_model(watsonx_client):
        # Generation parameters chosen in the agent builder
        parameters = {
            "frequency_penalty": 0,
            "max_tokens": 2000,
            "presence_penalty": 0,
            "temperature": 0,
            "top_p": 1
        }

        chat_model = ChatWatsonx(
            model_id=model,
            url=service_url,
            space_id=space_id,
            params=parameters,
            watsonx_client=watsonx_client,
        )
        return chat_model

    def get_schema_model(original_json_schema):
        # Create a pydantic base model class from the tool's JSON schema
        from datamodel_code_generator import DataModelType, PythonVersion
        from datamodel_code_generator.model import get_data_model_types
        from datamodel_code_generator.parser.jsonschema import JsonSchemaParser
        from typing import Optional
        from pydantic import BaseModel, Field, constr
        import json

        json_schema = json.dumps(original_json_schema)
        data_model_types = get_data_model_types(
            DataModelType.PydanticV2BaseModel,
            target_python_version=PythonVersion.PY_311
        )

        # Returns the python class code as a string
        parser = JsonSchemaParser(
            json_schema,
            data_model_type=data_model_types.data_model,
            data_model_root_type=data_model_types.root_model,
            data_model_field_type=data_model_types.field_model,
            data_type_manager_type=data_model_types.data_type_manager,
            dump_resolve_reference_action=data_model_types.dump_resolve_reference_action,
        )
        model_code = parser.parse()

        # Execute the generated class code and pull out the "Model" class
        namespace = {
            "Field": Field,
            "constr": constr,
            "Optional": Optional
        }
        exec(model_code, namespace)
        exec("Model.model_rebuild()", namespace)
        pydantic_model = namespace["Model"]
        return pydantic_model

    def get_remote_tool_descriptions():
        # Fetch the names, descriptions and input schemas of the hosted utility tools
        remote_tool_descriptions = {}
        remote_tool_schemas = {}
        import requests

        headers = {
            "Accept": "application/json",
            "Content-Type": "application/json",
            "Authorization": f'Bearer {context.generate_token()}'
        }
        tool_url = "https://private.api.dataplatform.cloud.ibm.com"
        remote_tools_response = requests.get(f'{tool_url}/wx/v1-beta/utility_agent_tools', headers = headers)
        remote_tools_response.raise_for_status()
        remote_tools = remote_tools_response.json()

        for resource in remote_tools["resources"]:
            tool_name = resource["name"]
            tool_description = resource["description"]
            tool_schema = resource.get("input_schema")
            remote_tool_descriptions[tool_name] = tool_description
            if (tool_schema):
                remote_tool_schemas[tool_name] = get_schema_model(tool_schema)
        return remote_tool_descriptions, remote_tool_schemas

    tool_descriptions, tool_schemas = get_remote_tool_descriptions()

    def create_remote_tool(tool_name, context):
        from langchain_core.tools import StructuredTool
        from langchain_core.tools import Tool
        import requests

        def call_tool( tool_input ):
            body = {
                "tool_name": tool_name,
                "input": tool_input
            }
            headers = {
                "Accept": "application/json",
                "Content-Type": "application/json",
                "Authorization": f'Bearer {context.get_token()}'
            }
            tool_url = "https://private.api.dataplatform.cloud.ibm.com"
            tool_response = requests.post(f'{tool_url}/wx/v1-beta/utility_agent_tools/run', headers = headers, json = body)

            # Treat any 4xx/5xx status as a failure
            if (tool_response.status_code >= 400):
                raise Exception(f'Error calling remote tool: {tool_response.text}')
            tool_output = tool_response.json()
            return tool_output.get("output")

        def call_tool_structured(**tool_input):
            return call_tool(tool_input)

        def call_tool_unstructured(tool_input):
            return call_tool(tool_input)

        # Tools with an input schema get validated, structured arguments
        remote_tool_schema = tool_schemas.get(tool_name)
        if (remote_tool_schema):
            tool = StructuredTool(
                name=tool_name,
                description = tool_descriptions[tool_name],
                func=call_tool_structured,
                args_schema=remote_tool_schema
            )
            return tool

        tool = Tool(
            name=tool_name,
            description = tool_descriptions[tool_name],
            func=call_tool_unstructured
        )
        return tool

    def create_custom_tool(tool_name, tool_description, tool_code, tool_schema):
        from langchain_core.tools import StructuredTool
        import ast

        def call_tool(**kwargs):
            # Compile the user-supplied code and call its first function
            tree = ast.parse(tool_code, mode="exec")
            function_name = tree.body[0].name
            compiled_code = compile(tree, 'custom_tool', 'exec')
            namespace = {}
            exec(compiled_code, namespace)
            return namespace[function_name](**kwargs)

        tool = StructuredTool(
            name=tool_name,
            description = tool_description,
            func=call_tool,
            args_schema=get_schema_model(tool_schema)
        )
        return tool

    def create_custom_tools():
        # Empty here: this agent uses no custom tools
        custom_tools = []
        return custom_tools

    def create_tools(inner_client, context):
        tools = []

        top_k_results = 5
        wikipedia = WikipediaQueryRun(api_wrapper=WikipediaAPIWrapper(top_k_results=top_k_results))
        tools.append(wikipedia)

        max_results = 10
        search = DuckDuckGoSearchRun(api_wrapper=DuckDuckGoSearchAPIWrapper(max_results=max_results))
        tools.append(search)

        tools.append(create_remote_tool("GoogleSearch", context))
        tools.append(create_remote_tool("WebCrawler", context))
        tools.append(create_remote_tool("Weather", context))
        return tools

    def create_agent(model, tools, messages):
        memory = MemorySaver()
        instructions = """
# Notes
- Use markdown syntax for formatting code snippets, links, JSON, tables, images, files.
- Any HTML tags must be wrapped in block quotes, for example ```
<html>
```.
- When returning code blocks, specify language.
- Sometimes, things don't go as planned. Tools may not provide useful information on the first few tries. You should always try a few different approaches before declaring the problem unsolvable.
- When the tool doesn't give you what you were asking for, you must either use another tool or a different tool input.
- When using search engines, you try different formulations of the query, possibly even in a different language.
- You cannot do complex calculations, computations, or data manipulations without using tools.
- If you need to call a tool to compute something, always call it instead of saying you will call it.

If a tool returns an IMAGE in the result, you must include it in your answer as Markdown.

Example:

Tool result: IMAGE(https://api.dataplatform.cloud.ibm.com/wx/v1-beta/utility_agent_tools/cache/images/plt-04e3c91ae04b47f8934a4e6b7d1fdc2c.png)
Markdown to return to user:

You are a helpful assistant that uses tools to answer questions in detail.
When greeted, say \"Hi, I am watsonx.ai agent. How can I help you?\""""

        # Append any system messages from the request to the instructions
        for message in messages:
            if message["role"] == "system":
                instructions += message["content"]

        graph = create_react_agent(model, tools=tools, checkpointer=memory, state_modifier=instructions)
        return graph

    def convert_messages(messages):
        # Map chat-style dicts to LangChain message objects
        converted_messages = []
        for message in messages:
            if (message["role"] == "user"):
                converted_messages.append(HumanMessage(content=message["content"]))
            elif (message["role"] == "assistant"):
                converted_messages.append(AIMessage(content=message["content"]))
        return converted_messages

    def generate(context):
        payload = context.get_json()
        messages = payload.get("messages")

        inner_credentials = {
            "url": service_url,
            "token": context.get_token()
        }
        inner_client = APIClient(inner_credentials)
        model = create_chat_model(inner_client)
        tools = create_tools(inner_client, context)
        agent = create_agent(model, tools, messages)

        generated_response = agent.invoke(
            { "messages": convert_messages(messages) },
            { "configurable": { "thread_id": "42" } }
        )

        last_message = generated_response["messages"][-1]
        generated_response = last_message.content

        execute_response = {
            "headers": {
                "Content-Type": "application/json"
            },
            "body": {
                "choices": [{
                    "index": 0,
                    "message": {
                        "role": "assistant",
                        "content": generated_response
                    }
                }]
            }
        }
        return execute_response

    def generate_stream(context):
        print("Generate stream", flush=True)
        payload = context.get_json()
        headers = context.get_headers()
        is_assistant = headers.get("X-Ai-Interface") == "assistant"
        messages = payload.get("messages")

        inner_credentials = {
            "url": service_url,
            "token": context.get_token()
        }
        inner_client = APIClient(inner_credentials)
        model = create_chat_model(inner_client)
        tools = create_tools(inner_client, context)
        agent = create_agent(model, tools, messages)

        response_stream = agent.stream(
            { "messages": messages },
            { "configurable": { "thread_id": "42" } },
            stream_mode=["updates", "messages"]
        )

        for chunk in response_stream:
            chunk_type = chunk[0]
            finish_reason = ""
            usage = None

            if (chunk_type == "messages"):
                # Token-level chunks from the model
                message_object = chunk[1][0]
                if (message_object.type == "AIMessageChunk" and message_object.content != ""):
                    message = {
                        "role": "assistant",
                        "content": message_object.content
                    }
                else:
                    continue
            elif (chunk_type == "updates"):
                update = chunk[1]
                if ("agent" in update):
                    agent = update["agent"]
                    agent_result = agent["messages"][0]
                    if (agent_result.additional_kwargs):
                        # The agent decided to call a tool
                        kwargs = agent["messages"][0].additional_kwargs
                        tool_call = kwargs["tool_calls"][0]
                        if (is_assistant):
                            message = {
                                "role": "assistant",
                                "step_details": {
                                    "type": "tool_calls",
                                    "tool_calls": [
                                        {
                                            "id": tool_call["id"],
                                            "name": tool_call["function"]["name"],
                                            "args": tool_call["function"]["arguments"]
                                        }
                                    ]
                                }
                            }
                        else:
                            message = {
                                "role": "assistant",
                                "tool_calls": [
                                    {
                                        "id": tool_call["id"],
                                        "type": "function",
                                        "function": {
                                            "name": tool_call["function"]["name"],
                                            "arguments": tool_call["function"]["arguments"]
                                        }
                                    }
                                ]
                            }
                    elif (agent_result.response_metadata):
                        # Final update
                        message = {
                            "role": "assistant",
                            "content": agent_result.content
                        }
                        finish_reason = agent_result.response_metadata["finish_reason"]
                        if (finish_reason):
                            message["content"] = ""

                        usage = {
                            "completion_tokens": agent_result.usage_metadata["output_tokens"],
                            "prompt_tokens": agent_result.usage_metadata["input_tokens"],
                            "total_tokens": agent_result.usage_metadata["total_tokens"]
                        }
                elif ("tools" in update):
                    # A tool returned its result
                    tools = update["tools"]
                    tool_result = tools["messages"][0]
                    if (is_assistant):
                        message = {
                            "role": "assistant",
                            "step_details": {
                                "type": "tool_response",
                                "id": tool_result.id,
                                "tool_call_id": tool_result.tool_call_id,
                                "name": tool_result.name,
                                "content": tool_result.content
                            }
                        }
                    else:
                        message = {
                            "role": "tool",
                            "id": tool_result.id,
                            "tool_call_id": tool_result.tool_call_id,
                            "name": tool_result.name,
                            "content": tool_result.content
                        }
                else:
                    continue

            chunk_response = {
                "choices": [{
                    "index": 0,
                    "delta": message
                }]
            }
            if (finish_reason):
                chunk_response["choices"][0]["finish_reason"] = finish_reason
            if (usage):
                chunk_response["usage"] = usage
            yield chunk_response

    return generate, generate_stream
````
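`get_schema_model` turns each remote tool's JSON input schema into a pydantic model, so that `StructuredTool` can check the arguments the LLM produces. The stdlib sketch below shows the same mapping idea with `dataclasses.make_dataclass` instead of code generation. Unlike pydantic, dataclasses do not check types at runtime, and only a handful of JSON Schema keywords are handled. The weather schema is hypothetical; the real schemas come from the `utility_agent_tools` endpoint.

```python
from dataclasses import field, make_dataclass
from typing import Any, Optional

# Subset of JSON Schema primitive types mapped to Python types.
JSON_TO_PY = {"string": str, "integer": int, "number": float,
              "boolean": bool, "array": list, "object": dict}


def schema_to_dataclass(name: str, schema: dict) -> type:
    """Build a dataclass whose fields mirror a JSON Schema's properties."""
    required = set(schema.get("required", []))
    required_fields, optional_fields = [], []
    for prop, spec in schema.get("properties", {}).items():
        py_type = JSON_TO_PY.get(spec.get("type"), Any)
        if prop in required:
            required_fields.append((prop, py_type))
        else:
            # Optional properties default to None.
            optional_fields.append((prop, Optional[py_type], field(default=None)))
    # Dataclasses require fields without defaults to come first.
    return make_dataclass(name, required_fields + optional_fields)


# Hypothetical input schema for a weather tool.
weather_schema = {
    "type": "object",
    "properties": {"location": {"type": "string"}, "country": {"type": "string"}},
    "required": ["location"],
}
WeatherArgs = schema_to_dataclass("WeatherArgs", weather_schema)
print(WeatherArgs(location="Paris"))
```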

- Testing the agent locally.

```python
# Initialize AI Service function locally
from ibm_watsonx_ai.deployments import RuntimeContext

context = RuntimeContext(api_client=client)

# Index 0 is generate(), index 1 is generate_stream()
streaming = False
findex = 1 if streaming else 0
local_function = gen_ai_service(context, space_id=space_id)[findex]

messages = []
local_question = "Change this question to test your function"
messages.append({ "role" : "user", "content": local_question })

context = RuntimeContext(api_client=client, request_payload_json={"messages": messages})
response = local_function(context)

if (streaming):
    for chunk in response:
        print(chunk, end="\n\n", flush=True)
else:
    print(response)
```
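With `streaming = True`, `local_function` returns a generator of OpenAI-style chunks, each with a `delta` under `choices[0]`. The sketch below folds such chunks into the final text, any tool calls, the finish reason and the token usage. The sample chunks are hand-written to match the shape `generate_stream` builds; they are illustrative, not captured output. Against the real stream you would call `collect_stream(response)`.

```python
from typing import Any, Iterable, Optional


def collect_stream(chunks: Iterable[dict]) -> dict[str, Any]:
    """Fold streamed chunks into final text, tool calls, finish reason and usage."""
    text_parts: list[str] = []
    tool_calls: list[dict] = []
    finish_reason: Optional[str] = None
    usage: Optional[dict] = None
    for chunk in chunks:
        choice = chunk["choices"][0]
        delta = choice["delta"]
        # Tool results arrive with role "tool" and are not part of the answer text.
        if delta.get("role") == "assistant":
            text_parts.append(delta.get("content") or "")
            tool_calls.extend(delta.get("tool_calls", []))
        finish_reason = choice.get("finish_reason", finish_reason)
        usage = chunk.get("usage", usage)
    return {"content": "".join(text_parts), "tool_calls": tool_calls,
            "finish_reason": finish_reason, "usage": usage}


# Illustrative chunks shaped like generate_stream's output.
sample = [
    {"choices": [{"index": 0, "delta": {"role": "assistant", "content": "It is "}}]},
    {"choices": [{"index": 0, "delta": {"role": "assistant", "content": "18°C."}}]},
    {"choices": [{"index": 0, "delta": {"role": "assistant", "content": ""},
                  "finish_reason": "stop"}],
     "usage": {"completion_tokens": 5, "prompt_tokens": 40, "total_tokens": 45}},
]
result = collect_stream(sample)
print(result["content"], result["finish_reason"])
```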

- Deploying the agent.

```python
# Look up software specification for the AI service
software_spec_id_in_project = "1629aef9-1ff8-446d-b2ac-19d55acb7ef5"
software_spec_id = ""

try:
    software_spec_id = client.software_specifications.get_id_by_name("ai-service-v5-software-specification")
except Exception:
    # Fall back to promoting the spec from the source project into the space
    software_spec_id = client.spaces.promote(software_spec_id_in_project, source_project_id, space_id)

# Define the request and response schemas for the AI service
request_schema = {
    "application/json": {
        "$schema": "http://json-schema.org/draft-07/schema#",
        "type": "object",
        "properties": {
            "messages": {
                "title": "The messages for this chat session.",
                "type": "array",
                "items": {
                    "type": "object",
                    "properties": {
                        "role": {
                            "title": "The role of the message author.",
                            "type": "string",
                            "enum": ["user","assistant"]
                        },
                        "content": {
                            "title": "The contents of the message.",
                            "type": "string"
                        }
                    },
                    "required": ["role","content"]
                }
            }
        },
        "required": ["messages"]
    }
}

response_schema = {
    "application/json": {
        "oneOf": [{"$schema":"http://json-schema.org/draft-07/schema#","type":"object","description":"AI Service response for /ai_service_stream","properties":{"choices":{"description":"A list of chat completion choices.","type":"array","items":{"type":"object","properties":{"index":{"type":"integer","title":"The index of this result."},"delta":{"description":"A message result.","type":"object","properties":{"content":{"description":"The contents of the message.","type":"string"},"role":{"description":"The role of the author of this message.","type":"string"}},"required":["role"]}}}}},"required":["choices"]},{"$schema":"http://json-schema.org/draft-07/schema#","type":"object","description":"AI Service response for /ai_service","properties":{"choices":{"description":"A list of chat completion choices","type":"array","items":{"type":"object","properties":{"index":{"type":"integer","description":"The index of this result."},"message":{"description":"A message result.","type":"object","properties":{"role":{"description":"The role of the author of this message.","type":"string"},"content":{"title":"Message content.","type":"string"}},"required":["role"]}}}}},"required":["choices"]}]
    }
}

# Store the AI service in the repository
ai_service_metadata = {
    client.repository.AIServiceMetaNames.NAME: "Agent001",
    client.repository.AIServiceMetaNames.DESCRIPTION: "Agent001",
    client.repository.AIServiceMetaNames.SOFTWARE_SPEC_ID: software_spec_id,
    client.repository.AIServiceMetaNames.CUSTOM: {},
    client.repository.AIServiceMetaNames.REQUEST_DOCUMENTATION: request_schema,
    client.repository.AIServiceMetaNames.RESPONSE_DOCUMENTATION: response_schema,
    client.repository.AIServiceMetaNames.TAGS: ["wx-agent"]
}

ai_service_details = client.repository.store_ai_service(meta_props=ai_service_metadata, ai_service=gen_ai_service)

# Get the AI Service ID
ai_service_id = client.repository.get_ai_service_id(ai_service_details)

# Deploy the stored AI Service
deployment_custom = {
    "avatar_icon": "Bot",
    "avatar_color": "background",
    "placeholder_image": "placeholder2.png"
}

deployment_metadata = {
    client.deployments.ConfigurationMetaNames.NAME: "Agent001",
    client.deployments.ConfigurationMetaNames.ONLINE: {},
    client.deployments.ConfigurationMetaNames.CUSTOM: deployment_custom,
    client.deployments.ConfigurationMetaNames.DESCRIPTION: "Change this description to reflect your particular agent",
    client.deployments.ConfigurationMetaNames.TAGS: ["wx-agent"]
}

function_deployment_details = client.deployments.create(ai_service_id, meta_props=deployment_metadata, space_id=space_id)
```
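The request schema is stored with the service as documentation (`REQUEST_DOCUMENTATION`). Whether or not the platform enforces it, a client-side check can catch malformed payloads before they reach the deployment. The hand-rolled check below is a simplified stand-in for a full JSON Schema validator, such as the `jsonschema` package. It covers only the rules in `request_schema` above. Note that the schema's role `enum` excludes `"system"`, even though `create_agent` can fold system messages into its instructions.

```python
ALLOWED_ROLES = {"user", "assistant"}  # from the request schema's "enum"


def payload_errors(payload: dict) -> list[str]:
    """Return a list of problems; an empty list means the payload fits the schema."""
    messages = payload.get("messages")
    if not isinstance(messages, list):
        return ["'messages' is required and must be an array"]
    errors: list[str] = []
    for i, message in enumerate(messages):
        if not isinstance(message, dict):
            errors.append(f"messages[{i}] must be an object")
            continue
        for key in ("role", "content"):
            if key not in message:
                errors.append(f"messages[{i}] is missing '{key}'")
        if "role" in message and message["role"] not in ALLOWED_ROLES:
            errors.append(f"messages[{i}].role must be one of {sorted(ALLOWED_ROLES)}")
        if "content" in message and not isinstance(message["content"], str):
            errors.append(f"messages[{i}].content must be a string")
    return errors


print(payload_errors({"messages": [{"role": "user", "content": "Hi"}]}))
print(payload_errors({"messages": [{"role": "system", "content": "Be brief"}]}))
```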

- Testing the deployed AI service.

```python
# Get the ID of the AI Service deployment just created
deployment_id = client.deployments.get_id(function_deployment_details)
print(deployment_id)

messages = []
remote_question = "Change this question to test your function"
messages.append({ "role" : "user", "content": remote_question })
payload = { "messages": messages }

result = client.deployments.run_ai_service(deployment_id, payload)
if "error" in result:
    print(result["error"])
else:
    print(result)
```
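To use the answer rather than print the whole result, the assistant text can be pulled out of the `choices` envelope. This sketch assumes the deployment returns the `body` part of what `generate()` builds, i.e. `{"choices": [{"index": 0, "message": {...}}]}`. The sample result is illustrative, not real output, and `extract_answer` is a hypothetical helper.

```python
from typing import Any


def extract_answer(result: dict[str, Any]) -> str:
    """Pull the assistant text out of a run_ai_service result, or raise on error."""
    if "error" in result:
        raise RuntimeError(f"AI service error: {result['error']}")
    choices = result.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    return choices[0]["message"].get("content", "")


# Illustrative result shaped like the body built by generate() in the notebook.
sample_result = {"choices": [{"index": 0, "message": {
    "role": "assistant", "content": "Hi, I am watsonx.ai agent. How can I help you?"}}]}
print(extract_answer(sample_result))
```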

All of this code lives in the generated notebook.

Conclusion

The agent builder in watsonx.ai has evolved into an enterprise-grade, production-ready interface.

Give it a try at IBM watsonx.ai for free: https://eu-de.dataplatform.cloud.ibm.com/registration/stepone?context=wx&preselect_region=true
