In this post I’ll be showing you a quick and easy example applying machine learning to a dataset and doing predictions with our model.

We’ll be using:

- Python

- Pandas, which is helps us in data structure and data analysis or visualisation

- Scikit-Learn, and

- Iris Dataset.

I won’t be diving too deep on how to use these tools. But for what I’ll cover in this post should be enough to get you through most datasets.

Let’s begin!

Get the Data

First let’s download the iris dataset. You can download the iris dataset here and save it as CSV.

Let’s import pandas and read our csv file.:

import pandas as pd

dataset = pd.read_csv("./iris.csv")

# let’s see a sample of our data

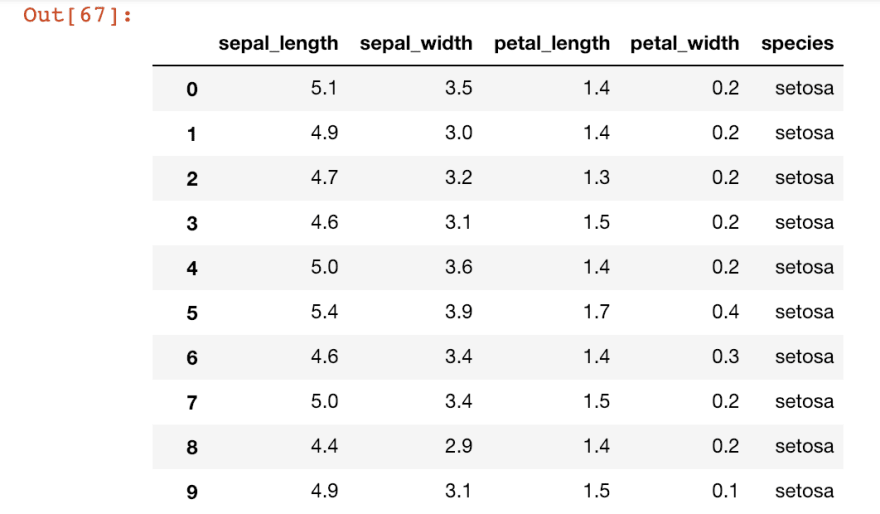

dataset.head()

The .head() functions shows us the top few rows in our dataset.

You can see the columns and the rows which contain our data.

Looking at our dataset we could see the columns which are

["sepal_length", "sepal_width", "petal_length", "petal_width", "species"]

We want to make our target (the thing we’re trying to predict, also called y) to be the species column and the rest of the columns [sepal_length, sepal_width, petal_length, petal_width] to be our features (also called X).

y = dataset["species"]

features = ["sepal_length", "sepal_width", "petal_length", "petal_width"]

X = dataset[features]

Splitting the Data

Next, we need to split our data. Typically we’d need to split our data into 3 parts. One for training, second for validation, and third for testing.

- Training data for training our model

- Validation data to validate our model and

- Test data to test our model

We need a validation dataset because the lifecycle of our training data wears off in every training. Essentially our model gets too familiar with our training dataset that it might form biases and affects the performance of our model to new, unseen data. Validation data makes sure that our model performs well on even unseen data.

Testing dataset is when we want to see the ‘final’ performance of our model after testing with our validation data. Nothing is "final" though. Machine Learning is a continuous improvement, but just so we can make sure our model still performs well even on unseen data, which is our testing data.

Scikit-Learn uses the train_test_split function which splits our data to training and validation*.

**I'm not currently sure if it can also split for test 😅✌🏽but this is enough for our small project.*

from sklearn.model_selection import train_test_split

#splitting our dataset for training and validation

#random_state to shuffle our data

train_X, val_X, train_y, val_y = train_test_split(X, y, random_state=1)

Transforming the Data

Excellent! However, machine learning doesn’t understand letters. Machine Learning is composed of a bunch of mathematical formulas and operations under the hood. In math we don’t say, One+One=Two. It must be 1+1=2.

Our y (target variable) are currently in characters or letters. [‘Versicolor’, ’Setosoa’, ‘Verginica’]. We need to somehow convert this into a meaningful number that the machine learning can understand.

It could be easier to do [0, 1, 2] right?

But the problem with this approach is that the machine learning might think there’s a correlation in the order of the labels and might form a bias due to that order.

The recommended approach would be to use one-hot encoding. Fortunately, pandas has a built in one-hot-encoder function that we can conveniently use.

train_y = pd.get_dummies(train_y)

val_y = pd.get_dummies(val_y)

Excellent! Let’s begin training our model. We’ll be using the Scikit-Learn's RandomForestClassifier since we’re trying to classify plants.

from sklearn.ensemble import RandomForestClassifier

random_forest = RandomForestClassifier(random_state=1)

random_forest.fit(train_X, train_y)

That random_state is basically shuffles our data to avoid any seeming order in our data. This makes sure that our model isn’t biased to the order of our given training data.

Testing our Model

Let’s now validate our model using our validation data.

from sklearn.metrics import mean_absolute_error

preds = random_forest.predict(val_X)

mae = mean_absolute_error(val_y, preds)

print("Mean absolute error is: {:,.5f}".format(mae * 100))

print(random_forest.score(val_X, val_y) * 100)

Mean absolute error is the basically the average error of our model. The lower the mean absolute error the better the performance of our model is.

The .score() function gives us the score of the performance of our model on the validation data.

Currently the mean absolute error is (I think) too low. And the .score() is too high. There’s a possibility of data leakage, even overfitting on our model.

I won’t dive in too much in to it but it’s best to avoid data leakage and overfitting in our models as these can severely affect the performance of our model.

Let’s test our model shall we? I’ve manually placed a test data in our model to see what it would predict.

preds = random_forest.predict([[2.0, 1.9, 3.7, 2]])

print(preds)

It gives us the result:

[[0. 1. 0.]]

Inspecting our y variable with print(y) we could see that it looks like this:

| Setosa | Versicolor | Virginica |

|---|---|---|

| 0 | 1 | 0 |

Looking at our prediction we can conclude that based on our given test data our model returned versicolor.

Inspecting the data we can logically conclude that it is actually quite right!!

Sweet!! We just trained a new model in predicting models. Incredible!! Give yourself a coffee break. ☕️ Congratulations 🎉🎉🎉

Hope you enjoyed the My Machine Learning Journey series. I hope you also learned something 😅 I’m open to any feedbacks you might have or if there’s something that might be wrong, please do comment down below.

For those trying to follow this code, I have a working kaggle notebook for this example.

Thank you!

P.S. I've just uploaded my Hello World video on my Youtube Channel - Coding Prax! Drop by and say hi 😄 like and subscribe if you want to ✌🏽

Top comments (7)

I'm having problems following this and getting working code. Specifically, on the

random_forest.fit(train_X, train_y)call, I get the following error: "ValueError: could not convert string to float: 'setosa'"I think this may be because the

train_Xdata still has the species in text format. How does thefitfunction know the relationship between theXspeciescolumn and theYsetosa/versicolor/virginicacolumns? Do I need to do one-hot encoding on the X data?Also, the steps seem to be out of order. Shouldn't you do the

get_dummies(y)call before you do thetrain_test_split(x, y, ...)? Maybe this isn't intended to be a full working example?Right! My bad 😅 The order is actually correct. Doing

get_dummiesfirst before splitting the data might cause a data leakage. We want to make sure that when we split our data, it is "pure". My mistake was thaty = pd.get_dummies(y)I've updated it so that would be like this instead:train_y = pd.get_dummies(train_y)val_y = pd.get_dummies(val_y)Sorry I took so long to reply 😅you can easily reach me tho through twitter @heyimprax.

I added the two lines above, but I still get the same error message. "ValueError: could not convert string to float: 'setosa'"

I think this may be because the train_X data still has the species in text format. How does the fit function know the relationship between the

Xspeciescolumn and theYsetosa/versicolor/virginicacolumns? Do I need to do one-hot encoding on the X data?Could you post a full, working Python script somewhere so I can see how this is supposed to work?

Oh right! Take out the

speciesin the features array. That should fix the "ValueError: could not convert string to float: 'setosa'"Also, I've added the missing

from sklearn.metrics import mean_absolute_errorfor the

mean_absolute_errorfunction.Here's a link to a working kaggle notebook: kaggle.com/interestedmike/iris-dat...

Cool series, keep up the good work!👍

My team just completed an open-sourced Content Moderation Service built Node.js, TensorFlowJS, and ReactJS that we have been working over the past weeks. We have now released the first part of a series of three tutorials - How to create an NSFW Image Classification REST API and we would love to hear your feedback(no ML experience needed to get it working). Any comments & suggestions are more than welcome. Thanks in advance!

(Fork it on GitHub or click🌟star to support us and stay connected🙌)

Great series so far Ben

Thanks Ben 😅!