Throughout my programming career, I have not explored anything sexier or more intimidating than sorting algorithms. Scouring the web for more information on this topic, I found sorting algorithms ranging from fairly simplistic to the elegantly complex. As it turns out, there is quite the library of sorting algorithms that have been created over the years, so learning and comparing all of them would take a very long time. Therefore, in this blog, I would like to focus on five of the most popular: selection sort, bubble sort, insertion sort, merge sort, and quicksort.

These algorithms will increase in complexity as we work through them. However, as complexity increases, so will their efficiency. Thus, it appears that nothing truly spectacular comes easily. Such is life. However, if willing to take on the task of understanding some of these complex algorithms (merge and quicksort specifically), I assure that you will find mind-blowingly elegant. Now that I’ve talked enough, let’s get down to business.

O Complexity

In the computing world, algorithm efficiency is measured by something called the Big O Notation (or O complexity). Big O is measured by the amount of computations/comparisons done within a particular algorithm. Since this idea seems very abstract at first, let’s look at an example.

for (var i = 0; i < arr.length; i++) {

sum += arr[i];

}

Let’s assume that arr and sum have already been defined. Here, we are looping through the array and adding each element to a variable called sum. Because the array is n elements long, we loop through the array n times. In other words, we are running the inner logic n times in total. This algorithm has a complexity of O(n).

Let’s look at another example (still assuming arr and sum are defined).

for (var i = 0; i < arr.length; i++) {

for (var j = 0; i < arr.length; i++ {

sum += arr[j];

}

}

Can you guess how many computations will be made with this algorithm? If you guess n², you would be correct. If not, that’s ok. Here’s the explanation. For simplicity, we will say that the length of the array is n elements long. For the inner for loop, we are going to do n computations (again, one for each element of the array). The outer loop is going to run the inner loop n times (one time for each element of the array). Because the inner loop runs n computations, and the outer loop runs the inner loop n times, there are a total of n² computations. We would refer to this array having a time complexity of n².

Understanding O complexity, we should now be able to analyze the sorting algorithms for their efficiency.

Selection Sort

Selection sort sorts data by selecting the smallest element in the array and swapping with the first unsorted element in the. See the graphical explanation below.

Now let’s take a look to how this looks in code. For simplicity, I am not going to define the swap function. Just know that it takes in an array to update and two indexes to swap.

for(var i = 0; i < arr.length; i++) {

for(var j = i + 1; i < arr.length; i++) {

if (arr[j] < arr[i]) {

min_val_index = j;

}

}

if (i != min_val_index) {

swap(arr, i, min_val_index);

}

}

This algorithm has a complexity of O(n²). I know what you might be thinking. There are a lot more computations per loop in this one compared to the last one. How can they both be O(n²)? While that may be true, algorithm efficiency measurement negates how many computations you do per loop. In other words, we are only concerned about the amount of times we are looping and no the computations inside the loop. Therefore, we consider this algorithm to have a complexity of O(n²)

Bubble Sort

Bubble sort sorts data by comparing each element of the array to its neighbor and then swapping them if they are in the wrong order. This gives the visual effect of the larger elements “bubbling” to the end of the array. See the graphical representation to the left.

Here’s how it looks in code. Again, I will not define the swap function.

for(var i = 0; i < arr.length; i++) {

for(var j = 0; j < arr.length - i - 1; j++) {

if(arr[j] > arr[j + 1]) {

swap(arr, j, j + 1);

}

}

}

Again, this algorithm has a complexity of O(n²), so it we’re not getting anywhere quite yet.

Insertion Sort

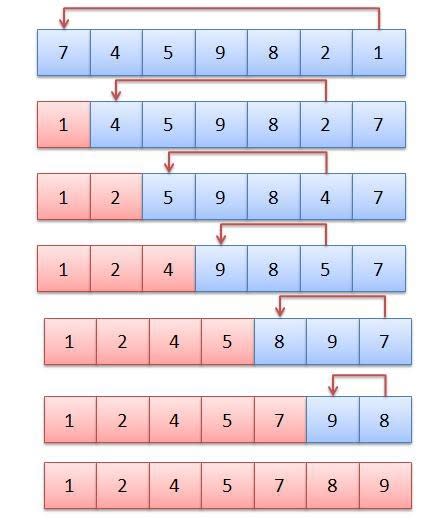

Insertion sort sorts data by going through each element in the array and inserting that item into the already sorted portion of the array. See the graphical representation to the left.

Below is the implementation of this in code.

for(var i = 1; i < arr.length; i++) {

j = i - 1;

while j >= 0 && arr[j] > arr[i] {

arr[j + 1] = arr[j];

j = j - 1;

}

arr[j + 1] = arr[i];

}

Again, the complexity of this algorithm is O(n²). It doesn’t look like we’re getting anywhere looping inside of loops. This leads us to our final two algorithms: merge sort and quicksort. But first we need to define something called recursion. This is a very complicated topic, however, merge sort and quicksort both use it to increase efficiency.

Recursion

Recursive functions are functions that call themselves. Let’s look at one of the simplest example of this: a factorial. A factorial of a number is nothing more than the product of all whole numbers less than itself. 5! = 5 * 4 * 3 * 2 * 1. With this information, we can say that the factorial of a number is equal to the product of the original number and the factorial of the original number - 1. 5! = 5 * 4!. Therefore, 5! = 5 * 4! = 5 * 4 * 3! = ……. Here we can use a recursive function. See below for the implementation of this in code.

function factorial(var n) {

if (n>=1) {

return n * factorial(n-1);

}

else {

return 1;

}

}

Merge Sort

Merge sort works by first splitting up the data into singular elements, then merging them back together in the proper order. Study the diagram to the left closely. It does this through recursive computing.

Let’s look at how this looks in code.

function mergeSort (arr) {

if (arr.length <= 1) {

return arr;

}

var mid = Math.floor(arr.length / 2);

var left = mergeSort(arr.slice(0, mid));

right = mergeSort(arr.slice(mid));

return merge(left, right);

}

function merge (arr1, arr2) {

var sorted = [];

while (arr1.length && arr2.length) {

if (arr1[0] < arr2[0]) {

sorted.push(arr1.shift());

}

else {

sorted.push(arr2.shift());

}

}

return sorted.concat(arr1.slice().concat(arr2.slice()));

}

In the first part of the mergeSort function, we are breaking down the the array into bites of 1 element long. Then once we reach one element long, we will take those elements and start merging them together with the merge function. Without getting into the deep math (believe me that the math is deep), the time complexity of merge sort is O(n * log (n)). If interested, you can find a good explanation of this here on stack exchange.

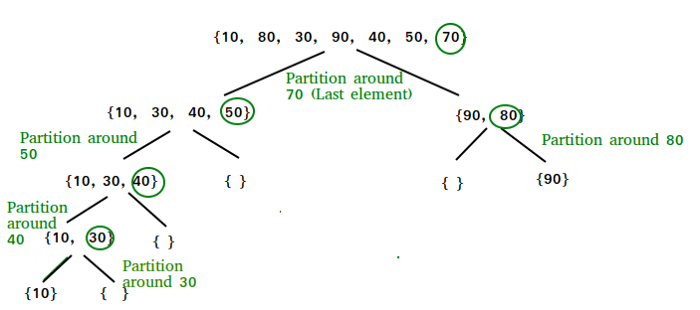

Quicksort

Similar to merge sort, quicksort attacks sorting with a divide and conquer methodology. Here, the data is partitioned by a pivot (I usually pick the last element in the array). The elements are then groups into two subarrays — one array with elements less than the pivot, and one with the elements greater than the pivot. This process is repeated until the subarrays have a length of one or zero elements. See the diagram below.

If you guessed this sounds like a recursive problem, you’d be right. This is how it looks in code.

function quickSort(arr[], low, high)

{

if (low < high)

{

pivot = partition(arr, low, high);

quickSort(arr, low, pivot - 1);

quickSort(arr, pivot + 1, high);

}

}

function partition (arr[], low, high)

{

var pivot = arr[high];

var i = (low - 1) // Index of smaller element

for (var j = low; j <= high- 1; j++)

{

// If current element is smaller than the pivot

if (arr[j] < pivot)

{

i++;

swap(arr, i, j)

}

}

swap(arr, i + 1, high)

return (i + 1)

}

Through all this, the time complexity of this is O(n log(n)). You can reference a great walkthrough of the code here if interested.

Conclusion

I hope not to have left you perplexed with the sorting algorithms above. I understand that they are very complex at times, however, the only way to get to know them is to spend time working through them. As an aside, coding languages (Ruby, Python, etc) typically use quicksort by default. This is because quicksort is the fastest performing sorting algorithm in the average case for most inputs. But by all means, please still use the built-in sorting algorithms for the programming language. Sometimes it’s just fun to see what kind of complex monsters live behind simple commands such as array.sort.

Top comments (0)