Demo: https://editor.p5js.org/heyozramos/sketches/LVsjLSPt7

A while back I came across a really fun tweet by @cassidoo on Twitter.

At the end of the clip, there's a scene where a million copies of her are singing together. It's a really cool effect, and in this first tutorial in a series on Segmentation Effects I'll show you how to recreate it with JavaScript!

Our tools

We'll be using:

- p5.js in global mode. p5.js is a beginner-friendly creative coding library

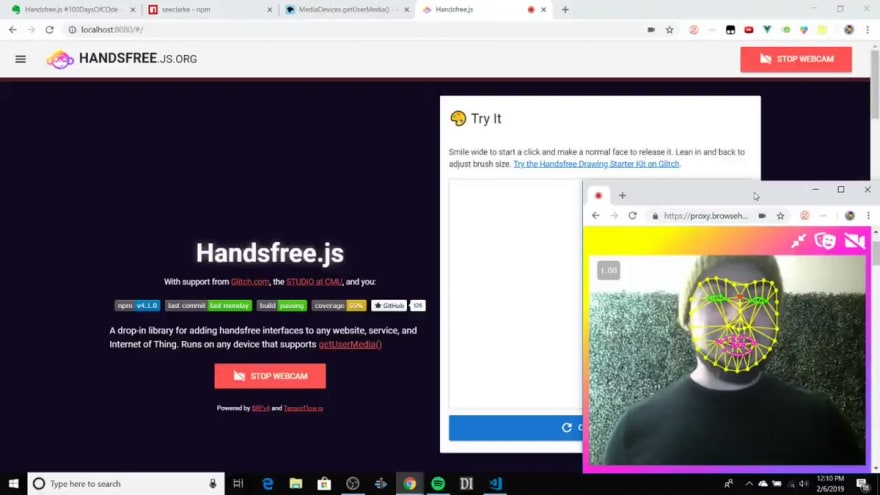

- Google's BodyPix. BodyPix is a segmentation and pose estimation computer vision library powered by TensorFlow.js. We'll initialize BodyPix through Handsfree.js

- Handsfree.js is my library for interacting with web pages handsfree

<head>
  <script src="https://cdn.jsdelivr.net/npm/p5@0.10.2/lib/p5.js"></script>
  <script src="https://unpkg.com/handsfree@6.1.7/dist/handsfree.js"></script>
  <link rel="stylesheet" type="text/css" href="https://unpkg.com/handsfree@6.1.7/dist/handsfree.css">
</head>

Instantiating Handsfree.js

Handsfree.js is a library that wraps popular computer vision models like Jeeliz Weboji (head tracking) and Google's BodyPix (segmentation and pose estimation). Eye tracking through WebGazer and hand tracking through Handtrack.js are coming soon. I originally started it as an assistive tech tool to help people browse the web handsfree through face gestures, but it also doubles as a controller for games, Algoraves, and other creative coding projects!

Oz Ramos (@heyozramos), 00:36 AM - 05 Nov 2019:

Day 1 of #100DaysofCode and #100DaysofMLCode

My goal with Handsfree.js will be to wrap JavaScript based computer vision libraries under a single API for the purpose of interacting with web pages (with head, body, hand tracking)

Here's a preview (link at end is broken, will fix!)

To begin, we need to instantiate Handsfree.js. We instantiate once for every camera that we want to use (for example, if you have a multi-cam setup or are using a mobile device with front and back cameras). Instantiation looks like this:

/**
 * This method gets called once automatically by p5.js
 */
function setup () {
  // Instantiate Handsfree. "H"andsfree is the class, "h"andsfree is the instance
  handsfree = new Handsfree({
    autostart: true,
    models: {
      head: {enabled: false},
      bodypix: {
        enabled: true,
        // Uncomment this if it runs slow for you
        // modelConfig: {
        //   architecture: 'MobileNetV1',
        //   outputStride: 8,
        //   multiplier: 0.75,
        //   quantBytes: 2
        // }
      }
    }
  })

  // Disable default Handsfree.js plugins
  Handsfree.disableAll()
}

Creating a buffer canvas

The next thing we'll need to do is create a "buffer" canvas. This canvas will contain the cutout, which we'll then paste into the p5 canvas a bunch of times:

function setup () {
  // ...

  // Create a canvas that'll contain our segmentation
  $buffer = document.createElement('canvas')
  $buffer.style.display = 'none'
  bufferCtx = $buffer.getContext('2d')
  document.body.appendChild($buffer)

  // This part's important, match the buffer dimensions to the webcam feed
  $buffer.width = handsfree.debugger.video.width
  $buffer.height = handsfree.debugger.video.height
  widthHeightRatio = $buffer.width / $buffer.height

  // Create a slider to adjust the number of clones
  slider = createSlider(3, 12, 4, 1)

  // Finally, create the p5 canvas
  renderer = createCanvas(600, 600)
  $canvas = renderer.canvas
  canvasCtx = $canvas.getContext('2d')
}

Creating a mask

(GIF source: https://blog.tensorflow.org/2019/11/updated-bodypix-2.html)

We'll be using BodyPix as a kind of "smart green screen" that's able to remove all the background pixels. We'll do this in a function called draw, which p5 runs automatically on every frame (about 60 times per second by default):

function draw() {
  background(220);

  // Just for convenience
  const model = handsfree.model
  const body = handsfree.body

  // Only paint when we have data
  if (body.data) {
    // Create a mask, with all non segmented pixels as magenta
    mask = model.bodypix.sdk.toMask(
      body.data,
      {r: 0, g: 0, b: 0, a: 0}, // foreground
      {r: 255, g: 0, b: 255, a: 255} // background
    )

    // Paint the mask into a buffer canvas
    model.bodypix.sdk.drawMask(
      $buffer,
      handsfree.debugger.video,
      mask,
      1, 0, 0
    )

    // Make all magenta pixels in the buffer mask transparent
    let imageData = bufferCtx.getImageData(0, 0, $buffer.width, $buffer.height)
    for (let i = 0; i < imageData.data.length; i += 4) {
      if (imageData.data[i] === 255 && imageData.data[i + 1] === 0 && imageData.data[i + 2] === 255) {
        imageData.data[i + 3] = 0
      }
    }
    bufferCtx.putImageData(imageData, 0, 0)

    // Dimensions of each mask
    w = $buffer.width / slider.value()
    h = w * widthHeightRatio

    // Paste the mask a bunch of times
    for (row = 0; row < slider.value() * 3; row++) {
      for (col = 0; col < slider.value(); col++) {
        // Stagger every other row
        x = col * w - (w / 2) * (row % 2)
        y = row * h / 3 - h / 2 * (1 + row / 10)

        canvasCtx.drawImage($buffer, x, y, w, h)
      }
    }
  }
}

On each webcam frame, Handsfree.js captures segmentation data into handsfree.body.data which we'll use to create the mask.

// Create a mask, with all background pixels as magenta (255, 0, 255)
mask = model.bodypix.sdk.toMask(
  body.data,
  {r: 0, g: 0, b: 0, a: 0}, // foreground
  {r: 255, g: 0, b: 255, a: 255} // background
)

// Paint the mask into a buffer canvas
model.bodypix.sdk.drawMask(
  $buffer,
  handsfree.debugger.video,
  mask,
  1, 0, 0
)
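To make the mask step less of a black box, here's a rough sketch of what a toMask-style function does conceptually. This is not BodyPix's actual implementation: `toMaskSketch` is a hypothetical stand-in, and it assumes the segmentation is a flat array with one value per pixel (1 for the person, 0 for everything else), which is how BodyPix 2.0 describes its person segmentation output.

```javascript
// Hypothetical stand-in for a toMask-style function, for illustration only.
// Assumes `segmentation.data` holds one value per pixel: 1 = person, 0 = background.
function toMaskSketch(segmentation, foreground, background) {
  const { data, width, height } = segmentation
  const rgba = new Uint8ClampedArray(width * height * 4)

  for (let p = 0; p < data.length; p++) {
    const color = data[p] === 1 ? foreground : background
    const i = p * 4 // each pixel takes four slots: r, g, b, a
    rgba[i] = color.r
    rgba[i + 1] = color.g
    rgba[i + 2] = color.b
    rgba[i + 3] = color.a
  }

  return { data: rgba, width, height }
}

// A tiny 2x2 "frame": the person occupies the top-left and bottom-right pixels
const segmentation = { data: [1, 0, 0, 1], width: 2, height: 2 }
const maskSketch = toMaskSketch(
  segmentation,
  { r: 0, g: 0, b: 0, a: 0 },      // foreground: fully transparent
  { r: 255, g: 0, b: 255, a: 255 } // background: opaque magenta
)
console.log(Array.from(maskSketch.data))
```

The person pixels come out fully transparent so the webcam image shows through, while every background pixel becomes solid magenta that we can key out afterwards.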

Next, we'll go through each pixel and make any magenta pixels transparent:

// Make all magenta pixels in the buffer mask transparent
let imageData = bufferCtx.getImageData(0, 0, $buffer.width, $buffer.height)
for (let i = 0; i < imageData.data.length; i += 4) {
  if (imageData.data[i] === 255 && imageData.data[i + 1] === 0 && imageData.data[i + 2] === 255) {
    imageData.data[i + 3] = 0
  }
}
bufferCtx.putImageData(imageData, 0, 0)
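If you'd like to poke at the pixel loop without a webcam, here's a minimal sketch that runs the same check on a plain RGBA array. `keyOutMagenta` is a hypothetical helper name I'm using for illustration; the sample pixel values are made up.

```javascript
// Hypothetical helper: the same magenta check as the loop above, on a bare RGBA array
function keyOutMagenta(pixels) {
  for (let i = 0; i < pixels.length; i += 4) {
    const isMagenta = pixels[i] === 255 && pixels[i + 1] === 0 && pixels[i + 2] === 255
    if (isMagenta) pixels[i + 3] = 0 // only the alpha channel changes
  }
  return pixels
}

// Three opaque pixels: pure magenta, a skin tone, and an almost-magenta
const pixels = new Uint8ClampedArray([
  255, 0, 255, 255,
  224, 172, 105, 255,
  254, 0, 255, 255
])
keyOutMagenta(pixels)
console.log(pixels[3], pixels[7], pixels[11]) // → 0 255 255
```

Notice that the almost-magenta pixel stays opaque because the check is an exact match. That's likely why the mask is drawn with `1, 0, 0`: if I'm reading the BodyPix drawMask arguments right, those are full opacity, no blur, and no horizontal flip, so the background pixels land in the buffer as pure magenta.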

Finally, we paste the buffer into the p5 canvas a bunch of times:

// Dimensions of each mask
w = $buffer.width / slider.value()
h = w * widthHeightRatio

// Paste the mask a bunch of times
for (row = 0; row < slider.value() * 3; row++) {
  for (col = 0; col < slider.value(); col++) {
    // Stagger every other row
    x = col * w - (w / 2) * (row % 2)
    y = row * h / 3 - h / 2 * (1 + row / 10)

    canvasCtx.drawImage($buffer, x, y, w, h)
  }
}
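To get a feel for where the clones land, here's a small sketch that uses the same math as the loop above but returns the rectangles instead of drawing them. `cloneGrid` and its parameter names are hypothetical, and I'm using a square 640px buffer (ratio 1) just to keep the numbers round; a typical 640x480 webcam feed would give a ratio of about 1.33.

```javascript
// Hypothetical helper that mirrors the paste loop but collects rectangles instead of drawing
function cloneGrid(bufferWidth, widthHeightRatio, clonesPerRow) {
  const w = bufferWidth / clonesPerRow
  const h = w * widthHeightRatio
  const rects = []

  for (let row = 0; row < clonesPerRow * 3; row++) {
    for (let col = 0; col < clonesPerRow; col++) {
      rects.push({
        x: col * w - (w / 2) * (row % 2),        // odd rows shift left by half a clone
        y: row * h / 3 - h / 2 * (1 + row / 10), // rows sit a third of a clone apart
        w,
        h
      })
    }
  }
  return rects
}

// Slider at 4: four clones per row, twelve rows
const rects = cloneGrid(640, 1, 4)
console.log(rects.length) // → 48
console.log(rects[0])     // → { x: 0, y: -80, w: 160, h: 160 }
console.log(rects[4].x)   // → -80 (first clone of the second row, staggered left)
```

Because each row only moves down by a third of a clone's height, the rows overlap heavily, which is what gives the effect its crowded choir look. The `(1 + row / 10)` term also nudges later rows up a little more than earlier ones.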

And that's all there is to it!

Let me know in the comments if anything is unclear. I hope you enjoy playing around with this demo! It's the first in a set of tutorials on creating Instagram SparkAR-like filters for the web with p5.js.
