
Many Firsts

This blog post is a post of many firsts for me: my first blog post, my first post on Medium, the first time I compiled Rust code on a Raspberry Pi, the first time I benchmarked a web service on a Raspberry Pi, and there are probably even more. So please give me feedback on how I can improve my writing for you!

I realized that there is a large community of people who want to learn the things I am learning but struggle to break into the technical space. My goal with my writing is to lower that barrier by sharing my experience and source code, and even by helping directly over whatever channels work best. Feel free to ask questions here, on Twitter, or anywhere else. I hope you can take my projects and build on them.

Rust is Different

According to the Stack Overflow Developer Survey, Rust has been the most loved language every year since 2016 (more than four years as of this writing). I have been keeping an eye on the language for some time, and I feel it is finally in a place I would consider stable: not necessarily mature, but stable in the sense that its syntax and community are well developed. So after watching many YouTube videos, reading articles, and testing out various projects, I decided to invest more time in the Rust ecosystem, and I have to admit, it is nice.

For context, the languages I use most are Python, C, C++, and Java, with Python being the one I know best. Admittedly, Rust feels very different to me, and I am still learning the fundamentals. I cannot say I love the syntax. Rust does not follow the class-based OOP design I am used to (it has structs and traits, but no classes or inheritance), and, surprisingly, passing variables around was tough to get used to because of the borrow checker.
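For readers coming from Python, here is a small, generic illustration of what tripped me up (it is not code from the project; the function names are made up for the example). Passing a `String` by value moves it into the function, so the caller can no longer use it, while passing a reference only borrows it. A mutable borrow (`&mut`) is allowed, but it must be exclusive while it lasts.

```rust
// Takes ownership: the caller's String is moved in and dropped at the end.
fn consume(s: String) -> usize {
    s.len()
}

// Borrows immutably: the caller keeps ownership.
fn peek(s: &str) -> usize {
    s.len()
}

// Borrows mutably: no other borrow of `s` may exist while this runs.
fn shout(s: &mut String) {
    s.push('!');
}

fn main() {
    let mut name = String::from("chmoder");

    let n = peek(&name); // fine: `name` is only borrowed
    shout(&mut name); // fine: a temporary, exclusive mutable borrow
    println!("{} {}", n, name); // prints "7 chmoder!"

    let m = consume(name); // `name` is moved into `consume` here...
    // println!("{}", name); // ...so this line would not compile (use after move)
    println!("{}", m); // prints "8"
}
```

In Python every one of these calls would just pass a reference and keep working; in Rust the compiler makes you say which of the three you mean.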

However, I can say I love the compiler, which uses LLVM as its back end and gives clear error messages explaining what is wrong. Rust also comes with great tools out of the box, such as a documentation generator (rustdoc), a linter (Clippy), a code formatter (rustfmt), and a package manager (Cargo). It can even run examples written in doc comments as tests, without any third-party libraries.
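As a minimal sketch of those doc tests: the example inside the `///` comment below is compiled and run by `cargo test`, assuming the function lives in a library crate (the crate name `my_crate` is hypothetical). The same comment is what `cargo doc` renders as documentation.

```rust
/// Returns the sum of two numbers.
///
/// The example below is a documentation test: when this function lives in a
/// library crate (here a hypothetical crate named `my_crate`), `cargo test`
/// compiles and runs it alongside the regular unit tests.
///
/// ```
/// assert_eq!(my_crate::add(2, 3), 5);
/// ```
pub fn add(a: i32, b: i32) -> i32 {
    a + b
}

fn main() {
    // Outside of `cargo test` this is just an ordinary function.
    println!("{}", add(2, 3)); // prints "5"
}
```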

I am learning Rust by working through the rustlings exercises, but I am also a hands-on learner, hence this experiment.

Warp

A super-easy, composable, web server framework for warp speeds.

I found this web server framework different from others I have used in the past, and I will try a couple more soon. Regardless, I am glad I tried warp: they are on to something with a feature they call “filters”. Filters let you build a route by chaining small pieces together, builder-pattern style.

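```rust
let register = warp::post()          // match only POST requests
    .and(warp::path("register"))     // whose first path segment is "register"
    .and(warp::body::json())         // deserialize the JSON request body
    .and(redis_pool.clone())         // a filter that supplies the Redis pool
    .and_then(register);             // pass everything to the async `register` handler
```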

The code above builds a route that matches POST requests to /register, parses the JSON body, attaches the Redis connection pool, and hands everything to a handler function called “register”. A client can call it like this:

```http
POST /register HTTP/1.1
Host: 192.168.1.2:3030

{
  "username": "chmoder",
  "password": "password"
}
```
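To see exactly what goes over the wire, here is a minimal sketch that builds the same request by hand with only the standard library. The `Content-Type`, `Content-Length`, and `Connection` headers are my additions, not part of the example above: HTTP/1.1 needs a length (or chunked encoding) to know where the body ends, the siege runs below send `application/json`, and `Connection: close` lets the reader stop at end of stream. The helper names are hypothetical, and `send` is not called in `main` so the sketch runs without a server.

```rust
use std::io::{Read, Write};
use std::net::TcpStream;

/// Builds a raw HTTP/1.1 POST request with a JSON body.
fn build_post(host: &str, path: &str, json: &str) -> String {
    format!(
        "POST {path} HTTP/1.1\r\n\
         Host: {host}\r\n\
         Content-Type: application/json\r\n\
         Content-Length: {len}\r\n\
         Connection: close\r\n\
         \r\n\
         {json}",
        path = path,
        host = host,
        len = json.len(), // length in bytes, which is what the header requires
        json = json,
    )
}

/// Sends the request and returns the raw response text.
/// (Hypothetical helper: needs the warp server running at `host`.)
#[allow(dead_code)]
fn send(host: &str, request: &str) -> std::io::Result<String> {
    let mut stream = TcpStream::connect(host)?;
    stream.write_all(request.as_bytes())?;
    let mut response = String::new();
    // `Connection: close` makes the server close the socket, ending this read.
    stream.read_to_string(&mut response)?;
    Ok(response)
}

fn main() {
    let body = r#"{"username":"chmoder","password":"password"}"#;
    let req = build_post("192.168.1.2:3030", "/register", body);
    print!("{}", req);
    // let resp = send("192.168.1.2:3030", &req); // uncomment with the server running
}
```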

The neat takeaway for me is that you compose both what matches a route and how the request data is prepared for the handler function that receives it.
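To make the idea concrete without depending on warp, here is a toy model of filter composition in plain Rust. It is not warp's API: `Request`, `method`, `path`, `json_body`, and `and` are hypothetical stand-ins. Each filter either rejects a request (`None`) or extracts a value, and `and` runs two filters and pairs their outputs. Warp does the same thing with its `Filter` trait, and additionally flattens the extracted tuples so the handler receives plain arguments; this toy keeps the nested tuples.

```rust
/// A hypothetical, minimal request type (not warp's).
struct Request {
    method: &'static str,
    path: &'static str,
    body: &'static str,
}

/// Matches the HTTP method; extracts nothing on success.
fn method(m: &'static str) -> impl Fn(&Request) -> Option<()> {
    move |req| if req.method == m { Some(()) } else { None }
}

/// Matches an exact path such as "/register"; extracts nothing on success.
fn path(p: &'static str) -> impl Fn(&Request) -> Option<()> {
    move |req| if req.path == p { Some(()) } else { None }
}

/// Extracts the raw body (a real framework would deserialize JSON here).
fn json_body() -> impl Fn(&Request) -> Option<String> {
    |req| Some(req.body.to_string())
}

/// Combines two filters: both must match, and their outputs are paired.
fn and<A, B>(
    fa: impl Fn(&Request) -> Option<A>,
    fb: impl Fn(&Request) -> Option<B>,
) -> impl Fn(&Request) -> Option<(A, B)> {
    move |req| Some((fa(req)?, fb(req)?))
}

/// The handler only sees the data the filters prepared for it.
fn register(body: String) -> String {
    format!("registered with body {}", body)
}

fn main() {
    // Composed in the same order as the warp route: method, path, body.
    let route = and(and(method("POST"), path("/register")), json_body());

    let req = Request { method: "POST", path: "/register", body: r#"{"username":"chmoder"}"# };
    match route(&req) {
        Some((_, body)) => println!("{}", register(body)),
        None => println!("404: no route matched"),
    }
}
```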

Raspberry Pi 4 Benchmark

At a high level, the service has two routes, “register” and “login”. Register saves a username and password hash to Redis. Login retrieves the stored password hash for the username. This is totally contrived and doesn’t make sense as a real application, but it does let us see what kind of performance we can get from warp backed by a real-world database.
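Here is a rough, self-contained sketch of that register/login flow. It is not the project's code: a `HashMap` stands in for Redis, and `hash_password` is a hypothetical placeholder built on the standard library's `DefaultHasher`, which is *not* a password hash (it is fast and unsalted). A real service should use a dedicated algorithm such as Argon2 or bcrypt. I also assume login compares the hash of the submitted password against the stored one.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};

/// Placeholder only: DefaultHasher is unsuitable for passwords.
/// Swap in a real password-hashing algorithm in production code.
fn hash_password(password: &str) -> u64 {
    let mut h = DefaultHasher::new();
    password.hash(&mut h);
    h.finish()
}

/// In-memory stand-in for the Redis store: username -> password hash.
struct Store {
    users: HashMap<String, u64>,
}

impl Store {
    fn new() -> Self {
        Store { users: HashMap::new() }
    }

    /// "register": store the password hash under the username
    /// (overwriting any previous value, as a plain Redis SET would).
    fn register(&mut self, username: &str, password: &str) {
        self.users.insert(username.to_string(), hash_password(password));
    }

    /// "login": fetch the stored hash for the username and compare.
    fn login(&self, username: &str, password: &str) -> bool {
        match self.users.get(username) {
            Some(stored) => *stored == hash_password(password),
            None => false,
        }
    }
}

fn main() {
    let mut store = Store::new();
    store.register("chmoder", "password");
    println!("{}", store.login("chmoder", "password")); // prints "true"
    println!("{}", store.login("chmoder", "wrong")); // prints "false"
}
```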

The project contains two versions: main-mutex.rs and main.rs. main-mutex.rs was my first iteration; it used a mutex to share a single Redis connection among warp's threads. main.rs instead gives each handler access to an r2d2 connection pool for Redis. This let me remove some code and more than doubled performance.
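The difference between the two versions can be modeled with nothing but the standard library. This is a toy sketch, not the project's code: `FakeConn` is a hypothetical stand-in for a Redis connection, and `Pool` is a minimal hand-rolled pool used in place of r2d2. Each simulated request holds a connection for a few milliseconds while a gauge tracks how many requests are inside a query at once. With one connection behind a `Mutex`, the lock is held for the whole query, so that number can never exceed 1; with a pool, the lock is held only to take or return a connection, so up to pool-size requests can make progress together.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::{Arc, Mutex};
use std::thread;
use std::time::Duration;

/// Hypothetical stand-in for a Redis connection.
struct FakeConn;

/// Counts how many requests are inside a query right now, and the peak seen.
#[derive(Default)]
struct Gauge {
    current: AtomicUsize,
    peak: AtomicUsize,
}

impl FakeConn {
    /// Simulates one round trip to the database.
    fn query(&mut self, gauge: &Gauge) {
        let now = gauge.current.fetch_add(1, Ordering::SeqCst) + 1;
        gauge.peak.fetch_max(now, Ordering::SeqCst);
        thread::sleep(Duration::from_millis(5));
        gauge.current.fetch_sub(1, Ordering::SeqCst);
    }
}

/// A minimal pool: idle connections wait in a Vec behind a Mutex.
/// The lock is held only to take or return a connection, not during the query.
struct Pool {
    idle: Mutex<Vec<FakeConn>>,
}

impl Pool {
    fn new(size: usize) -> Self {
        Pool { idle: Mutex::new((0..size).map(|_| FakeConn).collect()) }
    }

    fn with_conn(&self, f: impl FnOnce(&mut FakeConn)) {
        // Spin until a connection is free (r2d2 blocks with a timeout instead).
        let mut conn = loop {
            if let Some(c) = self.idle.lock().unwrap().pop() {
                break c;
            }
            thread::yield_now();
        };
        f(&mut conn);
        self.idle.lock().unwrap().push(conn);
    }
}

/// Runs `requests` simulated requests spread over `threads` threads
/// and returns the peak number of concurrent queries.
fn run(threads: usize, requests: usize, handle: Arc<dyn Fn(&Gauge) + Send + Sync>) -> usize {
    let gauge = Arc::new(Gauge::default());
    let workers: Vec<_> = (0..threads)
        .map(|_| {
            let (g, h) = (Arc::clone(&gauge), Arc::clone(&handle));
            thread::spawn(move || {
                for _ in 0..requests / threads {
                    h(&*g);
                }
            })
        })
        .collect();
    for w in workers {
        w.join().unwrap();
    }
    gauge.peak.load(Ordering::SeqCst)
}

fn main() {
    // main-mutex.rs style: one connection shared through a Mutex.
    let shared = Arc::new(Mutex::new(FakeConn));
    let mutex_peak = run(8, 64, Arc::new(move |g: &Gauge| shared.lock().unwrap().query(g)));

    // main.rs style: each request borrows a connection from a pool of four.
    let pool = Arc::new(Pool::new(4));
    let pool_peak = run(8, 64, Arc::new(move |g: &Gauge| pool.with_conn(|c| c.query(g))));

    println!("peak concurrent queries: mutex = {}, pool = {}", mutex_peak, pool_peak);
}
```

The mutex peak is always 1; the pool peak is bounded by the pool size and, with several threads waiting, will usually reach it.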

By the way, the server was compiled and run on a Raspberry Pi 4 and used only 23 MB of memory.

main-mutex.rs

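The benchmark uses siege with 100 concurrent users (`-c100`), each sending the login request 10 times (`-r 10`), for 1,000 transactions in total:

```shell
siege -c100 -r 10 -H 'Content-Type: application/json' 'http://192.168.1.2:3030/login POST { "username": "chmoder","password": "password"}'
```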

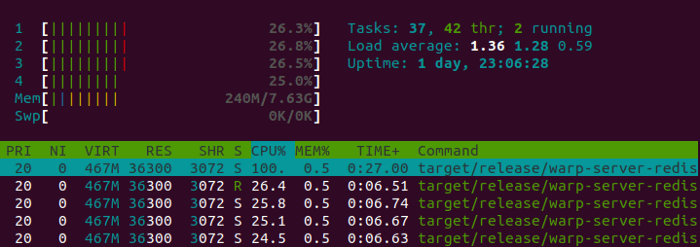

The mutex version does not fully utilize the CPU: only one thread can hold the mutex at a time, so only one of its threads is in the “running” state at any given moment.

Our concurrency looks good, but some transactions had to wait as long as 8.4 seconds.

```
** SIEGE 4.0.4
** Preparing 100 concurrent users for battle.
The server is now under siege...
Transactions:                   1000 hits
Availability:                 100.00 %
Elapsed time:                  37.55 secs
Data transferred:               0.00 MB
Response time:                  3.48 secs
Transaction rate:              26.63 trans/sec
Throughput:                     0.00 MB/sec
Concurrency:                   92.55
Successful transactions:        1000
Failed transactions:               0
Longest transaction:            8.40
Shortest transaction:           0.04
```

main.rs

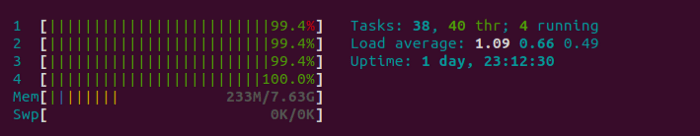

This version gives every thread access to the connection pool and fully utilizes the CPU. The run finished about 2.2 times faster (17.10 s versus 37.55 s), and the longest transaction improved by a slightly larger factor (3.58 s versus 8.40 s, about 2.3 times).

```
** SIEGE 4.0.4
** Preparing 100 concurrent users for battle.
The server is now under siege...
Transactions:                   1000 hits
Availability:                 100.00 %
Elapsed time:                  17.10 secs
Data transferred:               0.00 MB
Response time:                  1.57 secs
Transaction rate:              58.48 trans/sec
Throughput:                     0.00 MB/sec
Concurrency:                   91.82
Successful transactions:        1000
Failed transactions:               0
Longest transaction:            3.58
Shortest transaction:           0.07
```
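The improvement ratios can be checked directly from the two siege reports. This small snippet only divides the reported numbers; `speedup` is a hypothetical helper name.

```rust
/// Ratio of "before" to "after": greater than 1 means the pooled version did better.
fn speedup(before: f64, after: f64) -> f64 {
    before / after
}

fn main() {
    // (metric, main-mutex.rs, main.rs) taken from the two siege reports.
    let rows = [
        ("Elapsed time (s)", 37.55, 17.10),
        ("Response time (s)", 3.48, 1.57),
        ("Longest transaction (s)", 8.40, 3.58),
    ];
    for (name, mutex, pool) in rows {
        println!("{:<24} {:.2}x", name, speedup(mutex, pool));
    }
    // Transaction rate is "higher is better", so the arguments are flipped.
    println!("{:<24} {:.2}x", "Transaction rate", speedup(58.48, 26.63));
}
```

Every metric improves by more than 2x, with the longest transaction improving the most (about 2.35x).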

Go ahead and check out the repository on GitHub! Let me know what you think and what modifications you were able to make!
