Introduction

Since Windows Vista (NT kernel version 6.0), Microsoft has improved the NT kernel in several areas. For example, Vista introduced new features for mitigating I/O bottlenecks.

Disk or network I/O is often the performance bottleneck, and many processes must compete for I/O access. For that reason, the I/O prioritization introduced in Windows NT 6 was widely acclaimed. Unfortunately, it is not yet fully exploited. As you will see, Vista offers applications only two readily usable levels, Normal and Very Low (background mode). Later operating systems such as Windows 7, 8.1, or 10 may offer more.

The mechanism Microsoft provides for applications is background mode, entered through the SetPriorityClass and/or SetThreadPriority APIs. However, background mode can only be set for the calling process (or thread), not for external processes. In background mode the OS controls the priorities itself, assigning an appropriate background I/O priority and an idle CPU priority.

NT 6 also adds distinctive features such as the new Multimedia Class Scheduler service and bandwidth reservation. These features try to guarantee I/O availability for playback in programs, such as Windows Media Player, that register themselves with the Multimedia Class Scheduler. If you need reliable streaming bandwidth, registering with it is the approach to take.

Besides these improvements, Vista also brought many security features. These include User Account Control, parental controls, Network Access Protection, a built-in anti-malware tool, and new digital content protection mechanisms. These features are not discussed in this article.

In this article, I discuss the need for prioritization and describe the tactics Microsoft uses to keep the system responsive. I also provide information and guidelines for application and device driver developers who want to leverage these approaches.

The I/O Prioritization Concept

To balance operating system throughput and responsiveness, processes and threads are given priorities. More critical processes and threads are then scheduled more frequently or given longer time slices (quanta).

However, with today’s advanced systems, even low-priority background threads have the resources to create frequent and large I/O requests.

These I/O requests are created without regard to priority. Threads issue I/O without any context about when the I/O is needed, how critical it is, or how it will be used. If a low-priority thread gets CPU time, it can easily queue hundreds or thousands of I/O requests in a very short time.

Because I/O requests take time to process, a low-priority thread can significantly degrade system responsiveness. High-priority threads whose I/O waits behind its requests are stalled and cannot get their work done. This is why a machine can become less responsive while running long-running, low-priority services such as:

- disk defragmenters,

- multimedia-based apps,

- anti-ransomware apps,

- network-based apps,

- and so on.

Every thread has a base priority level, determined by the thread's priority value and the priority class of its process. The operating system uses the base priority levels of all executable threads to determine which thread gets the next slice of CPU time.

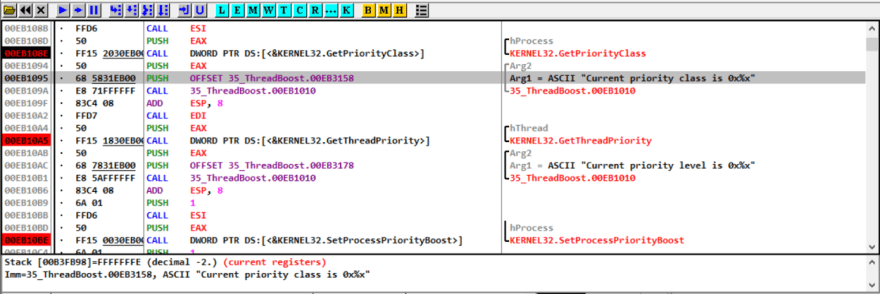

To find the base priority of a thread, we can call GetPriorityClass and GetThreadPriority. They retrieve, respectively, the priority class of the process and the priority level of the thread. In the accompanying screenshot, you can see a program that calls these APIs to print the current priority class of a process and the priority level of a thread.

Threads are scheduled in a round-robin fashion at each priority level, and only when there are no executable threads at a higher level will scheduling of threads at a lower level take place.

For a table that shows the base-priority levels for each combination of priority class and thread priority value, refer to the slide 3 photo of this article.

The rest of this section explores the context required to prioritize I/O requests properly. As a starting point, recall that threads are scheduled to run based on their scheduling priority, which is assigned to each thread.

As you can see in the above slide, the priority levels range from zero (lowest priority) to 31 (highest priority). Only the zero-page thread can have a priority of zero. The zero-page thread is a system thread responsible for zeroing any free pages when there are no other threads that need to run.

The purpose of I/O prioritization is to improve system responsiveness without significantly decreasing overall throughput. System advances have often focused on improving the performance of the CPU to improve the work-throughput capabilities of the system.

Input and output (I/O) devices allow us to communicate with the computer system. I/O is the transfer of data between primary memory and various I/O peripherals. Input devices such as keyboards, mice, card readers, scanners, voice recognition systems, and touch screens enable us to enter data into the computer. Output devices such as monitors, printers, plotters, and speakers allow us to get information from the computer.

I/O device improvements have likewise focused on throughput. However, the largest performance bottleneck for media or storage-based devices is armature (disk arm) seek time, which is often measured in milliseconds.

So, it is easy to see how low-priority threads might be capable of flooding a device with I/O requests that starve the I/O requests of a higher-priority thread. Like the Windows thread scheduler, which is responsible for maintaining the balance among threads that are scheduled for the CPU, the I/O subsystem must take on the responsibility of maintaining the same kind of balance for I/O requests in the system.
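A back-of-the-envelope calculation shows how much such a flood can hurt. Assume, purely for illustration, three things:

- a FIFO device queue already holds $N = 1000$ background requests,
- each request takes about $t_s = 10$ ms to service (seek-dominated),
- the user's request cannot preempt the one already in service.

The time $T$ until the user's request completes is then:

```latex
T_{\text{FIFO}} = (N + 1)\,t_s = 1001 \times 10\,\text{ms} \approx 10\,\text{s}
\qquad
T_{\text{prioritized}} \le 2\,t_s = 20\,\text{ms}
```

In this idealized model the device performs the same total work either way; only the order changes. In practice, reordering can add seeks, which is one source of the throughput cost mentioned below.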

For both thread scheduling and I/O scheduling, the balance is driven not only by the need to optimize throughput but by the need to ensure an acceptable level of responsiveness to the user.

When optimizing for more than just throughput, throughput might be sacrificed in favor of quickly completing the I/O request for which a user is waiting.

When responsiveness is considered, the user’s I/O is given higher priority. This causes the application to be more responsive, even though overall I/O throughput might decrease.

If the system thread's I/O is serviced first at the cost of the application's ability to make progress, the user perceives the system as slower, even though throughput is actually higher.

I/O Access Patterns

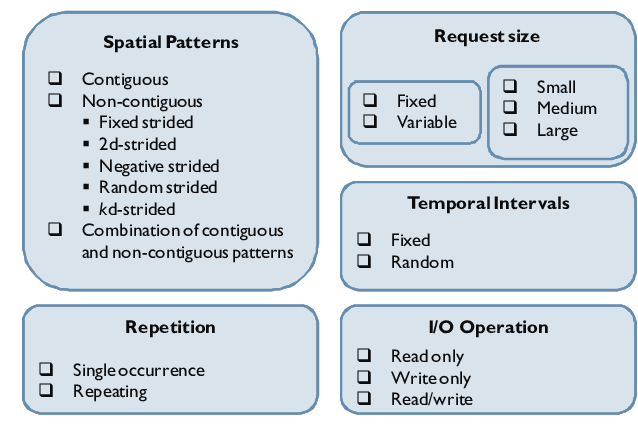

The image above categorizes the I/O access patterns; the following paragraphs explain each of them.

To ensure that throughput is not sacrificed more than is required to maintain responsiveness, I/O access patterns must be considered. The reasons for copying data from a device into memory can be categorized into a few simple scenarios:

1. The OS might copy in binary files to be executed, or data that executable programs need. An example is launching Microsoft Word.

2. An application might open a data file to use it in its entirety. An example is loading a Microsoft Word document so the user can edit it.

3. The file system might read or write file system metadata when a file is created, deleted, moved, or otherwise modified. An example is creating a new Word document.

4. A background task might attempt work that is not time-critical and should not interfere with the user's foreground tasks. An example is antivirus software scanning files in the background.

5. An application might open a data file to use it as a stream. An example is playing a song in VLC Player.

Loading Access Pattern

Most I/O access follows the atomic load-before-use pattern (paging, or gather and flush). Scenarios 1 to 3 fall into this category.

For example, a program that is to be executed must first be loaded into memory. For this to happen, a set of I/O must be completed as a group before execution can start. The system might use techniques to page out parts of an executable for the sake of limited system resources, but it does so by applying the atomic load before use rule to subsections.

After a program is running, it often performs tasks on a data file. An example is Microsoft Word, which works on .doc files. Loading a data file involves another set of I/O that must be completed as a group before the user can modify the file. Saving the modified file back to the device involves yet another such set. In this way, applications that load data files follow the atomic load-before-use pattern on their data files.

Finally, when the file system updates its metadata because of actions that the user performs on the system, the file system must also atomically read or write its metadata before it may proceed with other operations that depend on that metadata.

All of these scenarios follow the same access pattern. However, depending on the user’s focus at any given time, the urgency of each set of I/O operations might change.

Additionally, I/O processing of threads may depend on each other and require some I/O to complete before other I/O can be started. File system I/O often finds itself in this situation. Improving the responsiveness of the system requires a method to ensure that I/O is completed in a certain order.

Passive Access Pattern

A variation on atomic load before use is an application that works through a set of I/O operations but recognizes that it is not the focus of the user. Consequently, it should not interfere with the responsiveness of what the user is working on. Scenario 4 falls into this category.

System processes that operate in the background often find themselves in this role. A background defragmenter is an example of such a service. A defragmented device is more responsive than a fragmented one because it requires fewer costly seeks to accomplish file reads and writes.

However, it would be counterproductive to cause the user’s application to become less responsive due to the large number of I/O requests that the defragmenter is creating.

Passive access patterns are used by applications whose tasks are often considered noncritical maintenance. Fundamentally, such applications do not need to finish a task as soon as possible, because their tasks are always ongoing. They are not required to be responsive, but they must have a way to let activities that do require responsiveness proceed unimpeded.

Streaming Access Pattern

The opposite of the atomic load-before-use pattern is the streaming I/O pattern. Here, the application does not need all of the I/O in a set to complete before it can begin its task. Instead, it processes data from completed I/O while the next set of data is being retrieved.

The application requires the I/O to be accomplished in a specific order, and it requires the process to be acceptably responsive, potentially within real-time limits. Scenario 5 falls into this category.

An example of an application that uses a streaming I/O access pattern is Windows Media Player. For this type of application, the purpose of the application is to progress through the I/O set. Additionally, many media applications can compensate for missing or dropped frames of data.

For this reason, a device that puts forth a large effort to accomplish a read might be taking the worst course of action because it holds up all other I/O and causes glitches in the media playback.

How can we change the priority?

Aside from using the documented background mode priority class for SetPriorityClass, you can manually tweak the I/O priority of a running process via the NT Native APIs.

Retrieving a process's I/O priority is simple if you are comfortable calling native APIs. They are described in various places on the web, and the paragraphs below summarize how to use them to set and retrieve I/O priorities.

NtQueryInformationProcess and NtSetInformationProcess are the NT native APIs that get and set, respectively, different classes of process information. To retrieve information, you specify the information class and a buffer, with its size, to receive the data.

The query function returns the requested information, or the buffer size needed to hold it. The set function works similarly, except that you supply the data and already know its size. In the following sections, we discuss the I/O prioritization strategies that correspond to the access patterns described earlier.

Hierarchy Prioritization Strategy

The atomic transfer before use scenario that was described earlier can be addressed by a mechanism that marks an I/O set in a transfer for preferential treatment when the I/O set is being processed in a queue.

A hierarchy prioritization strategy effectively allows marked I/O to be sorted before it is processed. This strategy involves several levels of priority that can be associated with I/O requests and thus can be handled differently by drivers that see the requests. Windows Vista currently uses the following priorities: critical (memory manager only), high, normal, and low.

Before Windows Vista, all I/O was treated equally and can be thought of as marked with normal priority. With hierarchy prioritization, I/O can be marked as high priority so that it is put at the front of the queue. This strategy can take on finer granularity, and other priorities such as low or critical can be added. In this strategy, I/O is processed as follows:

- All critical-priority I/O must be processed before any high-priority I/O.

- All high-priority I/O must be processed before any normal-priority I/O.

- All normal-priority I/O must be processed before any low-priority I/O.

- All low-priority I/O is processed after all higher priority I/O.

For a hierarchy prioritization strategy to work, all layers within the I/O subsystem must recognize and implement priority handling in the same way. If any layer in an I/O subsystem diverges in its handling of priority, including the hardware itself, the hierarchy prioritization strategy is at risk of being rendered ineffective.

Idle Prioritization Strategy

The non-time-critical I/O scenario that was described earlier in this paper can be addressed by a mechanism that marks the set of I/O in a transfer to yield to all other I/O when they are being processed in a queue. Idle prioritization effectively forces the marked I/O to go to the end of the line.

The idle strategy marks an I/O as having no priority. All I/O that has a priority is processed before any no-priority I/O. When this strategy is combined with the hierarchy prioritization strategy, all of the hierarchy priorities are higher than no priority.

Because all prioritized I/O goes before no-priority I/O, a very active I/O subsystem could starve the no-priority I/Os. This can be solved with a trickle-through timer that monitors the no-priority queue and processes at least one no-priority I/O per unit of time.

For an idle prioritization strategy to work, only one layer within the I/O subsystem must recognize and implement the idle strategy. After a no-priority I/O has been released from the no-priority queue, the I/O is treated as a normal-priority I/O.

Bandwidth-Reservation Strategy

The streaming scenario described earlier in this paper can be addressed by a mechanism that reserves bandwidth within the I/O subsystem for use by a thread that is creating I/O requests. A bandwidth-reservation strategy effectively gives a streaming application the ability to negotiate a minimum acceptable throughput for I/O that is being processed.

A bandwidth reservation is a request from an application for a certain amount of guaranteed throughput from the storage subsystem. Bandwidth reservations are extremely useful when an application needs a certain amount of data per period of time (such as streaming) or in other situations where the application might do bursts of I/O and require a real-time guarantee that the I/O will be completed in a timely fashion.

The bandwidth-reservation strategy uses frequency as its priority scheme. This allows applications to ask for time slices, such as three I/Os every 50 ms, within the I/O subsystem. When coupled with the hierarchy prioritization strategy, streaming I/O gets the same minimum number of I/Os per unit of time, independent of the mix of critical‑, high‑, normal‑, and low-priority I/Os that are occurring in the system at the same time.

For this strategy to work, only one layer within the I/O subsystem must recognize and implement the bandwidth reservation. Ideally, this layer should be as close as possible to the hardware. After an I/O has been released from the streaming queue, it is treated as a normal-priority I/O.

Implementing Prioritization in Applications

Applications can use several Microsoft Win32 functions to take advantage of I/O prioritization. This section gives a brief overview of the functions that are available and discusses some potential usage patterns. Developers should consider the following when adjusting application priorities:

Whenever an application modifies its priority, it risks potential issues with priority inversion. If an application sets itself or a particular thread in the application to run at a very low priority while holding a shared resource, it can cause threads that are waiting on that resource with higher priority to block much longer than they should.

Applications that use streaming should also be sensitive to causing starvation in other applications, although there is a hard limit on the amount of bandwidth that an application can reserve.

Setting the Priority for Hierarchy and Idle

An application can request a lower-than-normal priority for I/O that it issues to the system. This means that the requests that the I/O subsystem generates on the application’s behalf contain the specified priority; at that point, the driver stack becomes responsible for deciding how to interpret the priority. Therefore, not all I/O requests that are issued with a low priority are, in fact, treated as such.

Most applications use process priority functions such as SetPriorityClass to request a priority. SetPriorityClass sets the priority class of the target process. Before Windows Vista, this function had no options to control I/O priority. Starting with Windows Vista, a new background priority class has been added. Two values control this class: PROCESS_MODE_BACKGROUND_BEGIN puts the process into background mode, and PROCESS_MODE_BACKGROUND_END returns it to its original priority.

```cpp
#include <windows.h>
#include <cstdio>

int main()
{
    // From now on, all threads in this process issue low-priority I/O requests.
    if (!SetPriorityClass(GetCurrentProcess(), PROCESS_MODE_BACKGROUND_BEGIN))
        std::printf("PROCESS_MODE_BACKGROUND_BEGIN failed: %lu\n", GetLastError());

    // The primary thread can also be put into background mode on its own.
    if (!SetThreadPriority(GetCurrentThread(), THREAD_MODE_BACKGROUND_BEGIN))
        std::printf("THREAD_MODE_BACKGROUND_BEGIN failed: %lu\n", GetLastError());

    DWORD priority_class = GetPriorityClass(GetCurrentProcess());
    std::printf("Priority class is 0x%lx\n", priority_class);

    int priority_level = GetThreadPriority(GetCurrentThread());
    std::printf("Priority level is %d\n", priority_level);

    // Leave background mode: the thread mode is ended with SetThreadPriority,
    // the process mode with SetPriorityClass.
    SetThreadPriority(GetCurrentThread(), THREAD_MODE_BACKGROUND_END);
    SetPriorityClass(GetCurrentProcess(), PROCESS_MODE_BACKGROUND_END);
    return 0;
}
```

The following call starts background mode for the current process:

```cpp
SetPriorityClass(GetCurrentProcess(), PROCESS_MODE_BACKGROUND_BEGIN);
```

The following call exits background mode:

```cpp
SetPriorityClass(GetCurrentProcess(), PROCESS_MODE_BACKGROUND_END);
```

While the target process is in background mode, its CPU, page (memory), and I/O priorities are reduced. From an I/O perspective, each request that the process issues is marked with an idle priority hint (very low priority). A similar function for threads, SetThreadPriority, can put only specific threads into low priority.

Finally, the SetFileInformationByHandle function can be used to associate a priority for I/O on a file-handle basis. In addition to the idle priority (very low), this function allows normal priority and low priority. Whether these priorities are supported and honored by the underlying drivers depends on their implementation (which is why they are referred to as hints).

```cpp
// hFile is an already opened file handle.
FILE_IO_PRIORITY_HINT_INFO priorityHint;
priorityHint.PriorityHint = IoPriorityHintLow;
BOOL result = SetFileInformationByHandle(hFile, FileIoPriorityHintInfo,
                                         &priorityHint, sizeof(priorityHint));
```

Reserving Bandwidth for Streaming

Applications that stream a lot of data, such as audio and video, often require a certain percentage of the bandwidth of the underlying storage system to deliver content to the user without glitches.

The addition of bandwidth reservations, also known as scheduled file I/O (SFIO), to the I/O subsystem exposes a way for these applications to reserve a portion of the bandwidth of the disk for their usage.

Applications can use the GetFileBandwidthReservation and SetFileBandwidthReservation functions to work with bandwidth reservations:

```cpp
BOOL
WINAPI
GetFileBandwidthReservation(
    __in  HANDLE  hFile,
    __out LPDWORD lpPeriodMilliseconds,
    __out LPDWORD lpBytesPerPeriod,
    __out LPBOOL  pDiscardable,
    __out LPDWORD lpTransferSize,
    __out LPDWORD lpNumOutstandingRequests
    );

BOOL
WINAPI
SetFileBandwidthReservation(
    __in  HANDLE  hFile,
    __in  DWORD   nPeriodMilliseconds,
    __in  DWORD   nBytesPerPeriod,
    __in  BOOL    bDiscardable,
    __out LPDWORD lpTransferSize,
    __out LPDWORD lpNumOutstandingRequests
    );
```

An application that requires a throughput of 200 bytes per second from the disk would make the following call:

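```cpp
DWORD transfer_size = 0;
DWORD outstanding_requests = 0;
// Reserve 200 bytes every 1000 ms; the reservation is not discardable.
BOOL result = SetFileBandwidthReservation(hFile, 1000, 200, FALSE,
                                          &transfer_size, &outstanding_requests);
```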

The values returned in transfer_size and outstanding_requests tell the application the size and number of requests with which it should try to saturate the device to achieve the desired bandwidth.
