Hello, my name is Daiki!

I'm a newbie in the AWS Community Builders program.

A little while ago, my favorite service, EKS, started supporting Amazon Application Recovery Controller (ARC).

Honestly, it's not a very flashy service, but it seems to be important for increasing the availability of applications running on EKS!

As far as I can tell, there are no blog posts covering this feature yet, so I decided to try it out myself!

What is Amazon Application Recovery Controller (ARC)?

Amazon Application Recovery Controller (ARC) is a feature that improves the resilience of applications running on AWS.

Specifically, it minimizes the impact of a failure by shifting traffic away from the Region or AZ where the failure has occurred and continuing to serve requests only from the healthy ones.

ARC has features that operate at the AZ level and at the Region level. At the AZ level, it provides the following two features:

- Zone shift

- Zone auto shift

Zone shift (called "zonal shift" in the AWS documentation) lets the user manually isolate a target AZ. The user specifies how long the isolation should last, and when that time has elapsed, the AZ is reintegrated into the service.

Zone auto shift ("zonal autoshift" in the AWS documentation) lets AWS detect an AZ impairment and isolate the AZ automatically.
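As a rough mental model of a manual zone shift, here is a small Python sketch, assuming a shift simply excludes one AZ from serving until its expiration. `ZoneShift` and `serving_zones` are hypothetical names for illustration only; they are not part of the ARC API, and the sketch covers only the manual, time-limited case.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class ZoneShift:
    """Hypothetical toy model (not the ARC API): keep traffic away from one AZ until expiry."""
    away_from: str        # AZ to isolate
    started_at: datetime  # when the shift was started
    duration: timedelta   # user-chosen expiration for a manual zone shift

    def is_active(self, now: datetime) -> bool:
        # The AZ stays isolated only while the shift has not yet expired.
        return self.started_at <= now < self.started_at + self.duration


def serving_zones(all_zones: list[str], shifts: list[ZoneShift], now: datetime) -> list[str]:
    """Return the AZs that should still receive traffic at time `now`."""
    isolated = {s.away_from for s in shifts if s.is_active(now)}
    return [z for z in all_zones if z not in isolated]


if __name__ == "__main__":
    t0 = datetime(2024, 12, 1, 12, 0, tzinfo=timezone.utc)
    shift = ZoneShift("us-east-1a", t0, timedelta(minutes=5))
    zones = ["us-east-1a", "us-east-1b"]
    print(serving_zones(zones, [shift], t0 + timedelta(minutes=1)))  # ['us-east-1b']
    print(serving_zones(zones, [shift], t0 + timedelta(minutes=6)))  # ['us-east-1a', 'us-east-1b']
```

Once the expiration passes, the isolated AZ simply reappears in the serving set, which is the "reintegration" described above.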

As of December 2024, the following AWS resources support ARC.

- Network Load Balancer (NLB)

- Application Load Balancer (ALB)

- Amazon Elastic Kubernetes Service (EKS)

Behavior of ARC in EKS

The following explanation, adapted from the official AWS documentation, shows how ARC controls communication between Services in an EKS cluster.

https://docs.aws.amazon.com/eks/latest/userguide/zone-shift.html

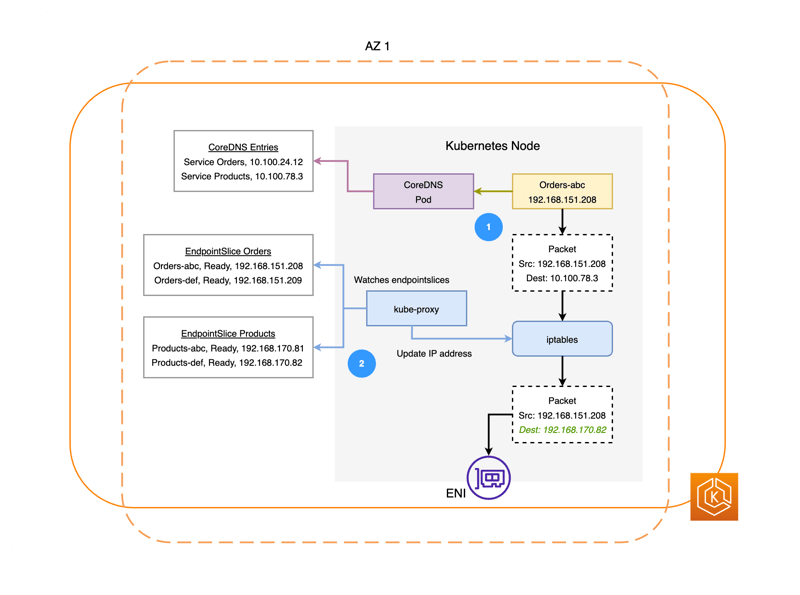

1. Communication between services in an EKS cluster under normal circumstances

Let's consider a case where the Orders Service communicates with the Products Service.

- First, the Orders Pod queries CoreDNS, the internal DNS of the EKS cluster, to resolve the name of the Products Service. Because 10.100.78.3 is registered as the IP address of the Products Service, this address is returned to the Orders Pod.

- When a Service is created, a corresponding EndpointSlice object is created automatically. The EndpointSlice object holds the IP addresses of the Pods to which the Service distributes traffic. kube-proxy, a Kubernetes component running on each worker node, watches EndpointSlice objects and, whenever their contents change, updates the node's iptables packet-forwarding rules accordingly. iptables then rewrites the destination address of each packet sent from the Orders Pod to the Products Service, from the Service's IP address to the IP address of one of the Pods behind that Service.

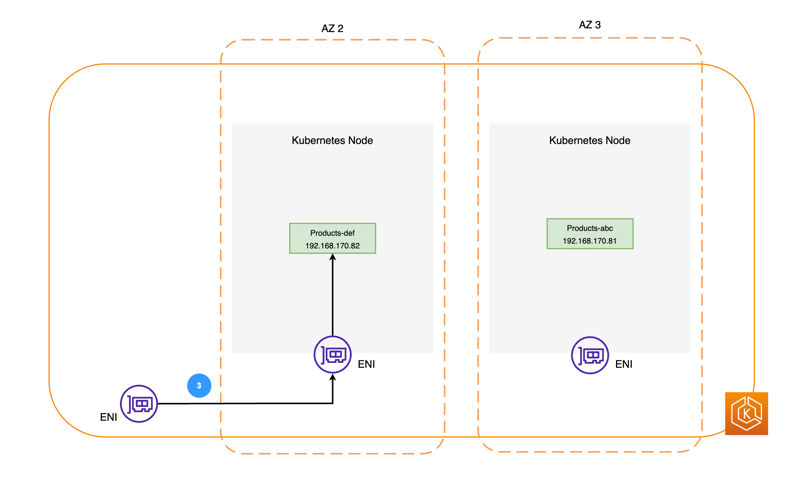

Finally, the packet, whose destination address has been rewritten to a Products Pod's IP address, is sent via the ENI to that Products Pod, which runs on another worker node.
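The rewrite that kube-proxy installs in iptables picks one backing Pod at random for each new connection. Below is a minimal Python sketch of that selection, assuming kube-proxy's iptables mode, where rule i among n endpoints matches with probability 1/(n - i) so every Pod is chosen with overall probability 1/n. `build_rules` and `dnat` are hypothetical names; this only imitates the behavior and does not touch real iptables.

```python
import random
from collections import Counter


def build_rules(pod_ips: list[str]) -> list[tuple[float, str]]:
    """Approximate kube-proxy's iptables mode: one DNAT rule per Pod IP.

    Rule i matches with probability 1/(n - i), which gives each Pod an overall
    probability of 1/n; the last rule has probability 1.0 and always matches.
    """
    n = len(pod_ips)
    return [(1.0 / (n - i), ip) for i, ip in enumerate(pod_ips)]


def dnat(rules: list[tuple[float, str]], rng: random.Random) -> str:
    """Return the Pod IP that a packet addressed to the Service IP is rewritten to."""
    for probability, pod_ip in rules:
        if rng.random() < probability:
            return pod_ip
    raise LookupError("Service has no endpoints")


if __name__ == "__main__":
    # Pod IPs as they would appear in the Service's EndpointSlice.
    rules = build_rules(["192.168.191.4", "192.168.245.13"])
    rng = random.Random(42)
    print(Counter(dnat(rules, rng) for _ in range(1000)))
```

With two endpoints, the counts should come out roughly equal, and if a Pod's IP disappears from the EndpointSlice, rebuilding the rules means it can no longer be picked.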

For more information about the Kubernetes object EndpointSlice, please refer to the following document.

https://kubernetes.io/docs/concepts/services-networking/endpoint-slices/

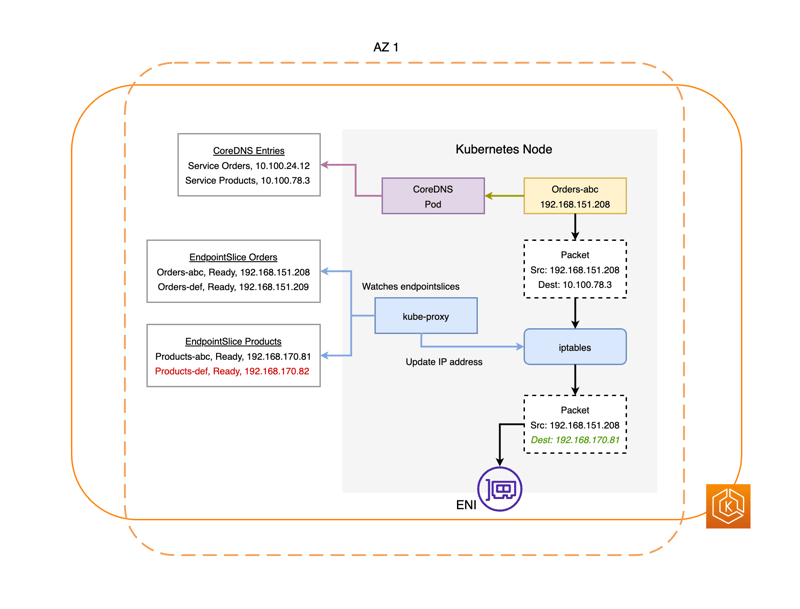

2. Communication between services in an EKS cluster when an ARC zone shift is activated

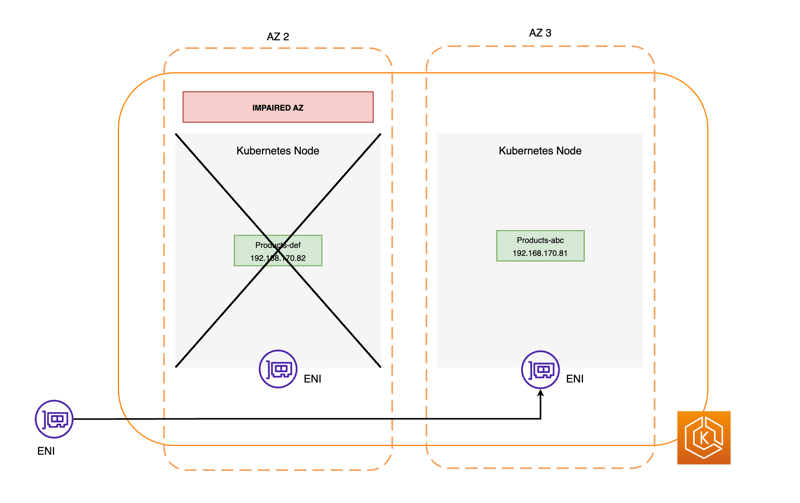

Assume that a failure occurs in AZ2 of three AZs (AZ1 to AZ3). The following explains how communication between Services is controlled when an ARC zone shift is activated.

- Inside the EKS cluster, the EndpointSlice Controller runs to manage EndpointSlice objects. When an ARC zone shift is triggered, the EndpointSlice Controller checks every existing EndpointSlice object for Pods running in the affected AZ (AZ2 in this case) and removes any such Pods from it.

- kube-proxy on each worker node updates that node's iptables rules based on the modified EndpointSlice objects; in other words, it deletes the packet-forwarding rules for the Pods removed in the previous step.

- When a Pod communicates with a Service (the Products Service in this case), iptables rewrites the packet's destination from the Service's IP address to a Pod's IP address. Because the entries for Pods in the failed AZ were removed from iptables in the previous step, packets are never rewritten to those Pods' addresses, which prevents traffic from being routed to Pods in the failed AZ.

In other words, zone shift for EKS clusters appears to be a mechanism that quickly stops traffic from being routed to Pods in the affected AZ by modifying the EndpointSlice objects that are automatically created for each Service.
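The core of this mechanism is filtering endpoints by zone. Here is a minimal Python sketch of that idea, assuming each endpoint carries its AZ (real EndpointSlice endpoints do have a `zone` field). The class and function names are hypothetical, the sketch returns new objects for simplicity, and it is not the actual controller code.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Endpoint:
    pod: str
    ip: str
    zone: str


@dataclass(frozen=True)
class EndpointSlice:
    service: str
    endpoints: tuple[Endpoint, ...]


def apply_zone_shift(slices: list[EndpointSlice], shifted_zone: str) -> list[EndpointSlice]:
    """Return copies of the slices with every endpoint in `shifted_zone` dropped.

    kube-proxy would then rebuild its rules from these pruned slices, so the
    Service IP is never rewritten to a Pod in the shifted AZ.
    """
    return [
        replace(s, endpoints=tuple(ep for ep in s.endpoints if ep.zone != shifted_zone))
        for s in slices
    ]


if __name__ == "__main__":
    # Hypothetical Products Pods, one per AZ, matching the AZ1 to AZ3 example.
    products = EndpointSlice(
        "products",
        (
            Endpoint("products-a", "10.0.1.10", "AZ1"),
            Endpoint("products-b", "10.0.2.10", "AZ2"),
            Endpoint("products-c", "10.0.3.10", "AZ3"),
        ),
    )
    for s in apply_zone_shift([products], "AZ2"):
        print(s.service, [ep.ip for ep in s.endpoints])  # products ['10.0.1.10', '10.0.3.10']
```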

I see, I kind of understand how it works!!

Try EKS ARC zone shift on a real cluster!

So far, we have looked at how an EKS cluster behaves internally when an ARC zone shift is activated. From here on, let's verify it on a real cluster!

Preparation 1. Prepare an EKS cluster with ARC zone shift enabled

First, you need an EKS cluster with ARC zone shift enabled.

Please build your EKS cluster by referring to the following official AWS documentation.

https://docs.aws.amazon.com/ja_jp/eks/latest/userguide/create-cluster.html

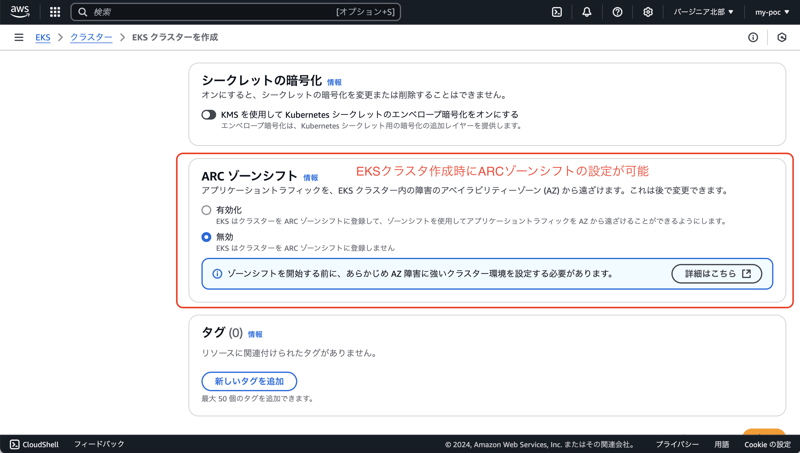

You can choose to enable ARC zone shift when creating an EKS cluster.

The only option is the enable/disable radio button.

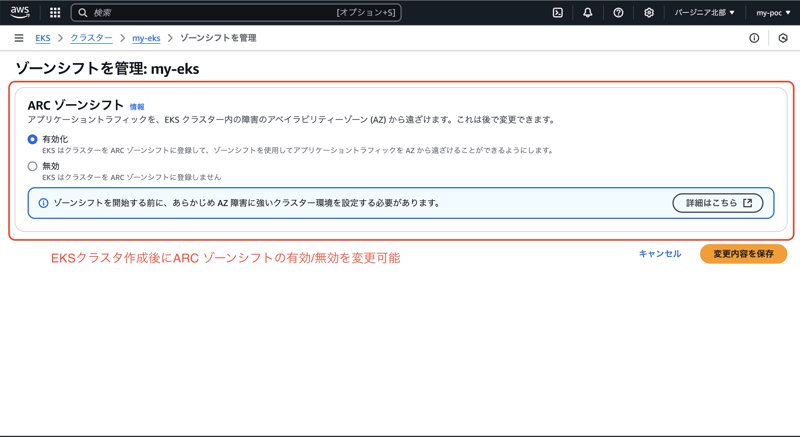

The ARC zone shift setting can be changed even after creating an EKS cluster.
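If you prefer to script this change, the setting is also exposed through the EKS UpdateClusterConfig API. A minimal sketch, assuming boto3 is the SDK in use; `enable_zonal_shift` is a hypothetical helper name, and the client is passed in so the function can be exercised without AWS credentials.

```python
def enable_zonal_shift(eks_client, cluster_name: str, enabled: bool = True) -> str:
    """Turn ARC zonal shift on (or off) for an existing EKS cluster.

    `eks_client` is expected to be boto3.client("eks"). The call starts an
    asynchronous cluster update; the returned update ID can be polled with
    eks_client.describe_update(name=..., updateId=...).
    """
    response = eks_client.update_cluster_config(
        name=cluster_name,
        zonalShiftConfig={"enabled": enabled},
    )
    return response["update"]["id"]
```

In practice you would call it as enable_zonal_shift(boto3.client("eks"), "my-cluster"), where "my-cluster" is a placeholder for your cluster name.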

For this verification, we prepared an EKS cluster with worker nodes in two AZs (us-east-1a and us-east-1b). The subnet CIDR ranges and the IP addresses that can be assigned to Pods are as follows.

| AZ | Subnet CIDR | IP addresses assignable to Pods |
|---|---|---|
| us-east-1a | 192.168.128.0/18 | 192.168.128.4 - 192.168.191.254 |
| us-east-1b | 192.168.192.0/18 | 192.168.192.4 - 192.168.255.254 |
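The ranges in the table follow from how VPC subnets work: AWS reserves the first four addresses and the last address of every subnet, and with the default Amazon VPC CNI plugin, Pods receive IPs from the subnet. A small check using Python's standard ipaddress module; `assignable_range` is a hypothetical helper name.

```python
import ipaddress


def assignable_range(cidr: str) -> tuple[str, str]:
    """Return the first and last usable IPs of a VPC subnet.

    AWS reserves the first four addresses of every subnet (network address,
    VPC router, DNS, reserved for future use) and the last one (broadcast).
    """
    net = ipaddress.ip_network(cidr)
    first = net.network_address + 4
    last = net.broadcast_address - 1
    return str(first), str(last)


if __name__ == "__main__":
    for az, cidr in [("us-east-1a", "192.168.128.0/18"), ("us-east-1b", "192.168.192.0/18")]:
        print(az, cidr, *assignable_range(cidr))
```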

Of course, ARC zone shift is enabled!

Preparation 2. Deploy a sample app to verify ARC zone shift

As a preparation for the next step, we will deploy a sample app to verify ARC zone shift.

First, create an nginx Pod with Deployment.

```yaml
# arc-sample-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: arc-sample-nginx
  name: arc-sample-nginx
spec:
  replicas: 2 # Create two Pods
  selector:
    matchLabels:
      app: arc-sample-nginx
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: arc-sample-nginx
    spec:
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - podAffinityTerm: # Prefer to start each Pod on a worker node in a different AZ
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - arc-sample-nginx
                topologyKey: topology.kubernetes.io/zone # well-known zone label on nodes
              weight: 100
      containers:
        - image: nginx
          name: nginx
          ports:
            - containerPort: 80
          # Return the IP address assigned to the Pod as the HTTP response
          command: ["sh", "-c", "hostname -I > /usr/share/nginx/html/index.html; nginx -g 'daemon off;'"]
```

Setting replicas: 2 launches two identical Pods.

podAntiAffinity then asks the scheduler to place the two Pods on worker nodes in different AZs. Because the rule is "preferred", this is a preference rather than a hard requirement.

The container command writes the output of hostname -I (the IP address assigned to the Pod) to /usr/share/nginx/html/index.html before starting nginx, so each Pod returns its own IP address to the client as the HTTP response.

```
[cloudshell-user@ip-10-134-48-42 ~]$ kubectl apply -f arc-sample-nginx.yaml
deployment.apps/arc-sample-nginx created
```

The Deployment has been created.

The two Pods of the Deployment are running normally, and judging by their IP addresses, one Pod is running in each AZ's subnet.

```
[cloudshell-user@ip-10-134-48-42 ~]$ kubectl get pod -o wide
NAME                                READY   STATUS    RESTARTS   AGE   IP               NODE                              NOMINATED NODE   READINESS GATES
arc-sample-nginx-59cb7bff9f-f8nnc   1/1     Running   0          15m   192.168.245.13   ip-192-168-228-161.ec2.internal   <none>           <none>
arc-sample-nginx-59cb7bff9f-jsczh   1/1     Running   0          15m   192.168.191.4    ip-192-168-164-166.ec2.internal   <none>           <none>
```
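To see which AZ each Pod landed in from its IP address alone, you can match the Pod IPs against the subnet CIDRs from the table earlier in this post. A small sketch with the standard ipaddress module; `SUBNETS` and `zone_of` are hypothetical names. (A more direct check is kubectl get nodes -L topology.kubernetes.io/zone, which prints each node's zone label.)

```python
import ipaddress
from typing import Optional

# Subnet CIDRs from the table earlier in this post.
SUBNETS = {
    "us-east-1a": ipaddress.ip_network("192.168.128.0/18"),
    "us-east-1b": ipaddress.ip_network("192.168.192.0/18"),
}


def zone_of(ip: str) -> Optional[str]:
    """Return the AZ whose subnet contains `ip`, or None if no subnet matches."""
    addr = ipaddress.ip_address(ip)
    for zone, subnet in SUBNETS.items():
        if addr in subnet:
            return zone
    return None


if __name__ == "__main__":
    # Pod IPs from the kubectl get pod -o wide output above.
    for pod_ip in ["192.168.245.13", "192.168.191.4"]:
        print(pod_ip, zone_of(pod_ip))  # 192.168.245.13 us-east-1b, then 192.168.191.4 us-east-1a
```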

Next, create a Service to route communication to these two Pods.

```yaml
# arc-sample-nginx-svc.yaml
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: arc-sample-nginx
  name: arc-sample-nginx
spec:
  ports:
    - port: 80
      protocol: TCP
      targetPort: 80
  selector:
    app: arc-sample-nginx
```

```
[cloudshell-user@ip-10-134-48-42 ~]$ kubectl apply -f arc-sample-nginx-svc.yaml
service/arc-sample-nginx created
```

The sample app is now ready.

Let's call this sample app with curl once per second.

```
[cloudshell-user@ip-10-134-48-42 ~]$ kubectl run curl-client --image=curlimages/curl -it -- sh
If you don't see a command prompt, try pressing enter.
~ $ while true; do curl http://arc-sample-nginx; sleep 1; done
192.168.191.4
192.168.245.13
192.168.191.4
192.168.191.4
192.168.245.13
192.168.245.13
192.168.191.4
192.168.191.4
192.168.245.13
192.168.245.13
192.168.245.13
192.168.245.13
192.168.191.4
192.168.191.4
192.168.191.4
192.168.245.13
192.168.245.13
192.168.191.4
192.168.191.4
```

Both Pods' IP addresses come back, so requests are being routed to each of the two Pods.
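To put a number on "routed to each Pod", the curl output can be tallied per Pod IP. A small sketch that counts the 19 responses captured above; `RESPONSES` and `tally` are hypothetical names.

```python
from collections import Counter

# The responses printed by the curl loop above, one Pod IP per request.
RESPONSES = """\
192.168.191.4
192.168.245.13
192.168.191.4
192.168.191.4
192.168.245.13
192.168.245.13
192.168.191.4
192.168.191.4
192.168.245.13
192.168.245.13
192.168.245.13
192.168.245.13
192.168.191.4
192.168.191.4
192.168.191.4
192.168.245.13
192.168.245.13
192.168.191.4
192.168.191.4
"""


def tally(output: str) -> Counter:
    """Count how many requests each Pod answered, ignoring blank lines."""
    return Counter(line.strip() for line in output.splitlines() if line.strip())


if __name__ == "__main__":
    print(tally(RESPONSES))  # Counter({'192.168.191.4': 10, '192.168.245.13': 9})
```

The same helper can be pointed at the output captured during the zone shift to confirm that one of the IPs drops out entirely.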

Creating the Service also automatically created an EndpointSlice object. You can see that the IP addresses of the two Pods, 192.168.191.4 and 192.168.245.13, are registered as its endpoints.

```
[cloudshell-user@ip-10-134-48-42 ~]$ kubectl get endpointslices
NAME                     ADDRESSTYPE   PORTS   ENDPOINTS                        AGE
arc-sample-nginx-qxrgc   IPv4          80      192.168.245.13,192.168.191.4     30m
kubernetes               IPv4          443     192.168.174.91,192.168.229.161   8h
```

Execute ARC zone shift!

Preparation is complete, so let's finally execute ARC zone shift!

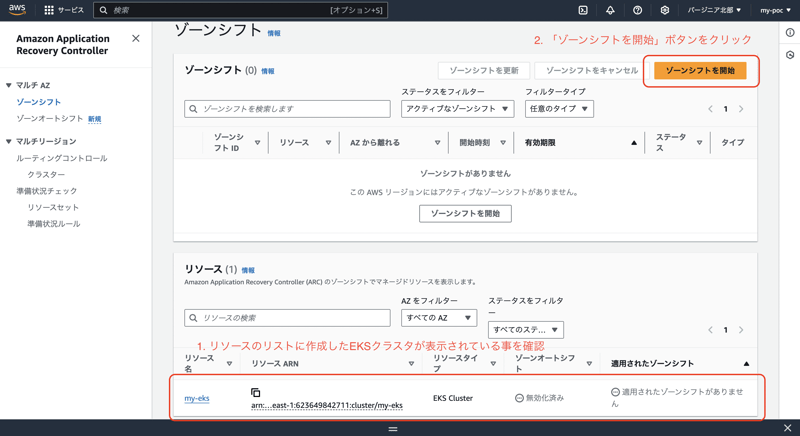

1. Go to the zone shift screen

First, go to the top page of the ARC console.

Then click "Zone-level migration" in the left pane.

The resources registered with ARC are listed at the bottom of the screen; make sure the EKS cluster you just built appears there.

If it does, click the "Start Zone Shift" button at the top of the screen.

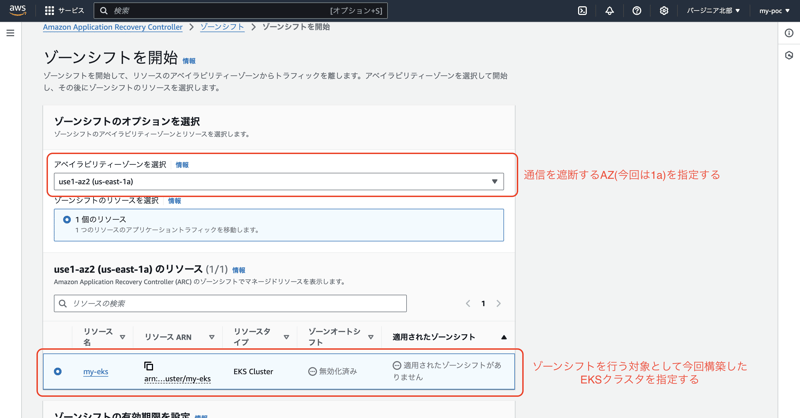

2. Execute zone shift!!

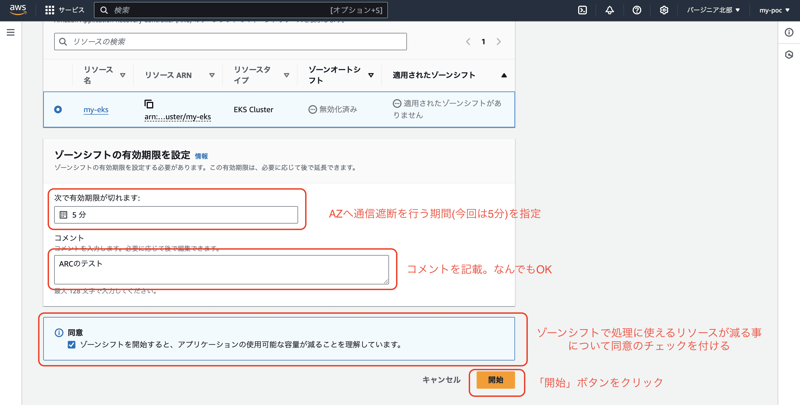

Before executing the zone shift, you need to enter some settings.

First, select the AZ you want to block.

Next, select the EKS cluster you have prepared as the resource to be zone shifted.

Set the expiration for the zone shift.

When the zone shift expires, it is released and traffic is routed to the target AZ again.

You can write anything in the comments field.

Check the box acknowledging that the zone shift will reduce the resources available to process requests, then click the "Start" button to start the zone shift.
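The same operation is available through the ARC zonal shift API, which is handy for runbooks. A minimal sketch, assuming boto3 is the SDK in use; `start_zone_shift` and `cancel_zone_shift` are hypothetical helper names, and the client is passed in so the helpers can be exercised without AWS credentials. Note that the API takes an AZ ID such as use1-az1 rather than an AZ name such as us-east-1a; the EC2 DescribeAvailabilityZones API returns the mapping between the two (ZoneName and ZoneId) for your account.

```python
def start_zone_shift(arc_client, cluster_arn: str, az_id: str, expires_in: str, comment: str) -> str:
    """Start a zone shift away from `az_id` for an EKS cluster and return the shift ID.

    `arc_client` is expected to be boto3.client("arc-zonal-shift").
    `expires_in` is a whole number followed by "m" (minutes) or "h" (hours), e.g. "5m".
    """
    response = arc_client.start_zonal_shift(
        resourceIdentifier=cluster_arn,  # the EKS cluster ARN
        awayFrom=az_id,                  # AZ ID, e.g. "use1-az1"
        expiresIn=expires_in,
        comment=comment,
    )
    return response["zonalShiftId"]


def cancel_zone_shift(arc_client, zonal_shift_id: str) -> None:
    """End a zone shift before it expires, so traffic is routed to the AZ again."""
    arc_client.cancel_zonal_shift(zonalShiftId=zonal_shift_id)
```

For example, start_zone_shift(boto3.client("arc-zonal-shift"), cluster_arn, "use1-az1", "5m", "ARC test") would mirror the console settings used in this post, with cluster_arn and the AZ ID as placeholders for your own values.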

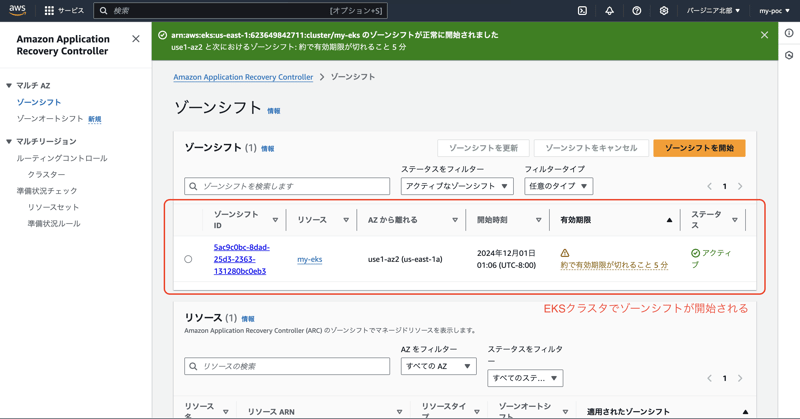

The target EKS cluster is displayed in the zone shift list.

It seems that the zone shift has started!

3. Is the zone shift really happening?

Let's check if the zone has really been shifted.

First, let's use the kubectl get endpointslices command to see how the EndpointSlice object has changed.

You can see that 192.168.191.4 is no longer listed as an endpoint.

Zone shift seems to be working!

```
[cloudshell-user@ip-10-134-48-42 ~]$ kubectl get endpointslices
NAME                     ADDRESSTYPE   PORTS   ENDPOINTS                        AGE
arc-sample-nginx-qxrgc   IPv4          80      192.168.245.13                   47m
kubernetes               IPv4          443     192.168.174.91,192.168.229.161   8h
```

Next, I ran curl against the sample app every second again. No requests were routed to 192.168.191.4; all of them went to 192.168.245.13.

It seems to have been properly blocked, yay!!

[cloudshell-user@ip-10-134-48-42 ~]$ kubectl run curl-client --image=curlimages/curl -it -- sh

If you don't see a command prompt, try pressing enter.

~ $ while true; do curl http://arc-sample-nginx; sleep 1; done

192.168.245.13

192.168.245.13

192.168.245.13

192.168.245.13

192.168.245.13

192.168.245.13

192.168.245.13

192.168.245.13

192.168.245.13

192.168.245.13

192.168.245.13

192.168.245.13

192.168.245.13

192.168.245.13

192.168.245.13

192.168.245.13

After 5 minutes, when the zone shift expired, requests were routed to 192.168.191.4 again.

This is also according to the settings!

```
~ $ while true; do curl http://arc-sample-nginx; sleep 1; done
192.168.245.13
192.168.245.13
192.168.245.13
192.168.245.13
192.168.245.13
192.168.245.13
192.168.245.13
192.168.191.4
192.168.191.4
192.168.245.13
192.168.245.13
192.168.191.4
192.168.191.4
192.168.245.13
192.168.245.13
192.168.191.4
192.168.245.13
192.168.191.4
192.168.191.4
192.168.191.4
192.168.191.4
192.168.245.13
192.168.191.4
192.168.191.4
192.168.245.13
192.168.191.4
192.168.245.13
192.168.191.4
192.168.191.4
192.168.245.13
192.168.191.4
192.168.191.4
```

Just to be sure, I checked the EndpointSlice object, and 192.168.191.4 was registered again.

```
[cloudshell-user@ip-10-134-48-42 ~]$ kubectl get endpointslices
NAME                     ADDRESSTYPE   PORTS   ENDPOINTS                        AGE
arc-sample-nginx-qxrgc   IPv4          80      192.168.245.13,192.168.191.4     55m
kubernetes               IPv4          443     192.168.174.91,192.168.229.161   9h
```

The EKS cluster has also disappeared from the zone shift list in the ARC console.

What is the cost of ARC zone shift?

As for the cost, it seems that there is no additional charge for using zone shift.

If so, there's no reason not to use this feature!

https://docs.aws.amazon.com/r53recovery/latest/dg/introduction-pricing-zonal-shift.html

Note: Karpenter and Cluster Autoscaler do not support ARC!

Currently, Karpenter and Cluster Autoscaler, which automatically scale out worker nodes, do not support ARC zone shift or zone auto shift.

Therefore, please note that even while a zone shift is active for an AZ, Karpenter and Cluster Autoscaler may continue to launch worker nodes in that AZ!

To keep worker nodes from launching in a specific AZ, you need to reconfigure each tool separately, following the guidance on the sites below.

https://aws.github.io/aws-eks-best-practices/karpenter/

https://aws.github.io/aws-eks-best-practices/cluster-autoscaling/

That said, Pods running on worker nodes launched by Karpenter or Cluster Autoscaler are still subject to ARC zone shift and zone auto shift, so traffic to those Pods is still cut off as expected.

Summary

I have provided an overview of how ARC zone shift works for EKS clusters and performed a simple hands-on test.

We were able to confirm that performing a zone shift cuts off communication to Pods in the target AZ.

It appears that there are no additional charges, so we'd like to make effective use of it to increase system availability!!

If I have time in the future, I'd like to verify zone auto shift, which automatically detects a failed AZ and cuts off communication.

See you next time!
