Introduction

To choose the right tool for a task, it helps to understand how the candidate tools work behind the scenes, so that you know what to expect from them.

In this article I will briefly explain what happens behind the scenes when we invoke a Lambda function to process an event. Then I will explain how a Lambda function scales to handle multiple events concurrently. Finally, I'll go over the results of a basic test that I ran to see how a Lambda function handles a burst of traffic.

Lambda Execution Environment

To execute a function, Lambda creates an execution environment specifically for that function. It's a temporary, secure and isolated runtime environment that includes the function's dependencies. Each function has its own execution environments, which are not shared with other functions.

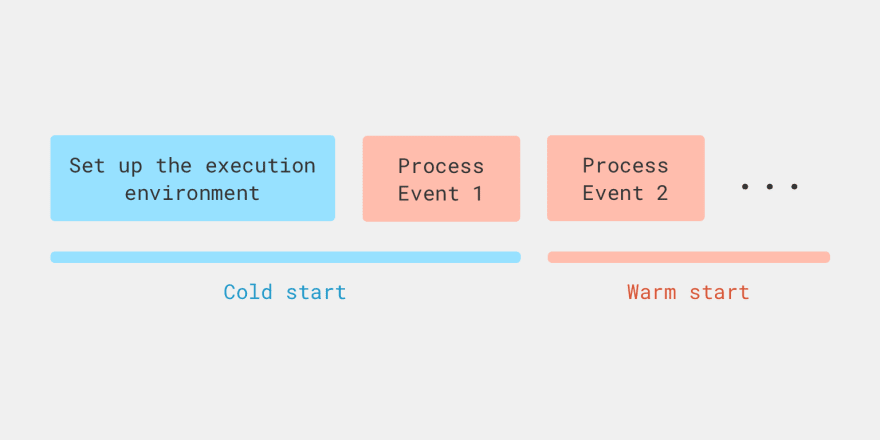

To invoke a function, Lambda first has to set up an execution environment for it. Once the environment is ready, the function is invoked, and the environment is then reused for subsequent invocations of that function for some time.

Execution environments are temporary. When a function has been idle for some time, its execution environment is removed. When the function is needed again later to handle another event, a new environment is created from scratch. Lambda also periodically recreates execution environments behind the scenes to keep them fresh (for example, to ensure that the underlying resources are up to date).

When a function needs to be invoked and no execution environment is available, Lambda has to set up a new one. An invocation that requires Lambda to create a new environment, instead of reusing an existing one, is called a cold start.

Concurrency in Lambda

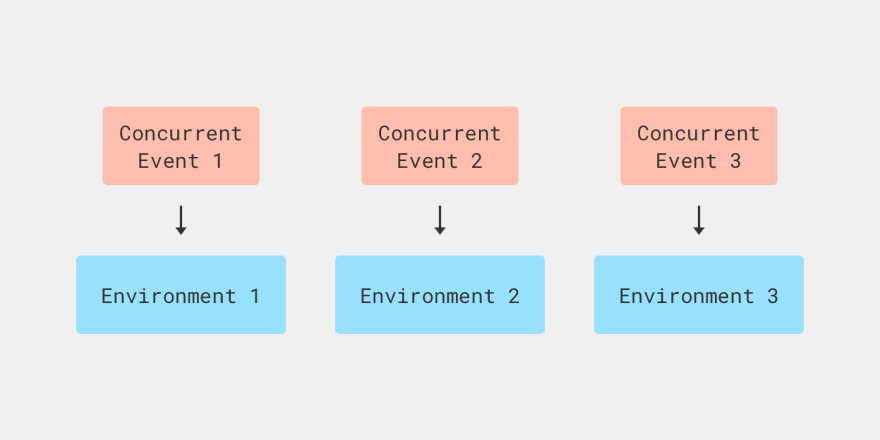

Another important concept is that a single execution environment can process only one event at a time. If there are multiple concurrent events for a function (for example, 100 HTTP requests that need to be processed at the same time), Lambda has to set up multiple execution environments to process those events concurrently.

The number of concurrent function invocations is limited. All Lambda functions in the same AWS account and region share a single concurrency limit. By default, the limit is 1000 concurrent invocations per region. You can increase this limit by submitting a request in the Support Center Console.

A Lambda function scales by creating enough execution environments to process the number of concurrent requests it receives at any given time. The scaling works as follows:
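
To get a feel for how close a workload is to that limit, a common rule of thumb is that concurrency is roughly the request rate multiplied by the average duration of a request. The sketch below applies that estimate. The function names are hypothetical, and the default of 1000 is simply the default regional limit mentioned above.

```javascript
// Rough estimate: concurrency ≈ requests per second × average duration (seconds).
function estimateConcurrency(requestsPerSecond, avgDurationSeconds) {
  return Math.ceil(requestsPerSecond * avgDurationSeconds);
}

// Checks the estimate against a concurrency limit (assumed default: 1000).
function fitsWithinLimit(requestsPerSecond, avgDurationSeconds, limit = 1000) {
  return estimateConcurrency(requestsPerSecond, avgDurationSeconds) <= limit;
}

// 100 requests per second that each take about 1 second need about 100 environments.
console.log(estimateConcurrency(100, 1));
// 3000 requests per second at 0.5 seconds each would exceed the default limit.
console.log(fitsWithinLimit(3000, 0.5));
```

This is only an approximation: it ignores cold-start time and uneven arrival of requests, both of which can push the real number higher.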

- Let's say I have set the total number of concurrent executions in my AWS account to 5000.

- Then, a popular news website mentions my product and my function suddenly receives a burst of traffic, and the number of concurrent requests grows rapidly, up to more than 5000 requests.

- Lambda will very quickly start between 500 and 3000 instances of my function (the exact number depends on the region). This number is called the burst concurrency limit. Let's say my function is in a US East region where the burst limit is 3000. So, I'd get 3000 instances quickly to handle that burst of traffic.

- Once the burst concurrency limit is reached, my function will scale by only 500 instances per minute until my account's concurrent execution limit is reached (5000 instances). After that, no more instances are created to accommodate new concurrent requests.

- If this concurrency limit isn't enough to serve additional requests, those requests will be throttled.

- Also, before the account's concurrency limit is reached, any requests for which Lambda can't set up an execution environment in time will be throttled as well.
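
The steps above can be sketched as a small model. This is a simplification under the numbers used in the example (burst limit 3000, account limit 5000, 500 extra instances per minute), not a description of Lambda's actual scheduler. Both function names are hypothetical.

```javascript
// Simplified model of the scaling steps described above.
// Defaults mirror the example: burst 3000, account limit 5000, +500 per minute.
function maxInstancesAfter(minutes, options = {}) {
  const { burstLimit = 3000, accountLimit = 5000, perMinute = 500 } = options;
  // The initial burst can never exceed the account-level limit.
  const initial = Math.min(burstLimit, accountLimit);
  // After the burst, capacity grows in whole-minute steps.
  const scaled = initial + Math.floor(minutes) * perMinute;
  return Math.min(scaled, accountLimit);
}

// Requests beyond the available instances are throttled in this model.
function throttledRequests(concurrentRequests, minutes, options = {}) {
  return Math.max(0, concurrentRequests - maxInstancesAfter(minutes, options));
}

for (const minute of [0, 1, 2, 3, 4, 5]) {
  console.log(minute, maxInstancesAfter(minute), throttledRequests(6000, minute));
}
```

In this model, capacity grows from 3000 to 5000 instances over four minutes and then stays flat, so a sustained load of 6000 concurrent requests would still see about 1000 of them throttled.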

To test how concurrency works, I created a simple API endpoint using the Serverless Framework. It was a Lambda function (1024 MB of memory) behind Amazon API Gateway. Basically, this function handled HTTP requests at a given endpoint URL. The function looked like this:

    module.exports.hello = async () => {
      // Simulate one second of work before responding.
      await new Promise((resolve) => setTimeout(resolve, 1000));

      return {
        statusCode: 200,
        body: JSON.stringify({ message: 'Hello World' }),
      };
    };

This function takes approximately one second to execute because of the 1000 ms `setTimeout` delay.

Then I ran a load test in which, for 30 seconds, around 100 new virtual users requested the endpoint every second. I also capped the number of concurrent users at 100. The results of the test were as follows:

    All virtual users finished
    Summary report @ 19:52:40(+0000) 2020-11-30
      Scenarios launched: 2737
      Scenarios completed: 2737
      Requests completed: 2737
      Mean response/sec: 87.11
      Response time (msec):
        min: 1021.8
        max: 2057
        median: 1033
        p95: 1146.8
        p99: 1326
      Scenario counts:
        Hello: 2737 (100%)
      Codes:
        200: 2737

A total of 2737 requests were made. The minimum response time was 1021.8 ms (a warm start) and the maximum was 2057 ms (most probably a cold start). The median response time was 1033 ms, and 95% of all requests took 1146.8 ms or less. So, only a small share of the requests was affected by a cold start.
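
Medians and percentiles are used here rather than the average because a few slow cold starts can pull the average up noticeably. The sketch below shows the difference on a handful of made-up response times (not data from the test). It uses the nearest-rank method, which is an assumption: the load testing tool may compute its percentiles differently.

```javascript
// Nearest-rank percentile: the smallest value such that at least p% of the
// samples are less than or equal to it.
function percentile(values, p) {
  if (values.length === 0) throw new Error('no samples');
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(rank, 1) - 1];
}

function mean(values) {
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// Hypothetical response times in ms: nine warm starts and one cold start.
const samples = [1020, 1025, 1030, 1035, 1040, 1045, 1050, 1060, 1100, 2050];

console.log('median:', percentile(samples, 50)); // 1040
console.log('mean:', mean(samples));             // 1145.5
console.log('p95:', percentile(samples, 95));    // 2050
```

The single slow request raises the mean by about 100 ms, while the median stays close to the typical warm response time.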

According to CloudWatch, it took 100 concurrent instances of the function to handle these requests. To handle concurrent events, Lambda creates multiple execution environments for the function. I was making at most 100 requests concurrently in my test, so Lambda created 100 execution environments to handle them. In other words, my function had approximately 100 cold starts. After that, the execution environments were reused most of the time, because there were no further bursts of concurrent requests.

Be careful when load testing your apps, especially serverless apps. If you make a mistake and unexpectedly send a huge number of requests, you may incur additional costs that can get quite high, and you may overload your app to the point where it can't serve real users.

Finally, I'd like to mention that you can control Lambda concurrency in various ways:

- You can change your concurrency limit per account by contacting AWS support

- You can reserve concurrency for a specific function

- You can provision concurrency for a specific function

Please read Managing concurrency for a Lambda function to learn more.
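
As a rough illustration of the last two options, here is how reserved and provisioned concurrency could be set with the AWS SDK for JavaScript v3 (`@aws-sdk/client-lambda`). This is a sketch, not a recommended setup: the function name `hello`, the alias `live`, and the numbers are placeholders. Note that provisioned concurrency is configured on a published version or an alias, which is why a qualifier is required.

```javascript
// Parameters for the PutFunctionConcurrency operation (reserved concurrency).
function reservedConcurrencyParams(functionName, reserved) {
  return { FunctionName: functionName, ReservedConcurrentExecutions: reserved };
}

// Parameters for the PutProvisionedConcurrencyConfig operation.
// The qualifier must be a published version number or an alias name.
function provisionedConcurrencyParams(functionName, qualifier, provisioned) {
  return {
    FunctionName: functionName,
    Qualifier: qualifier,
    ProvisionedConcurrentExecutions: provisioned,
  };
}

// Sends both requests. 'hello' and 'live' are placeholder names.
async function applyConcurrencySettings() {
  // Loaded lazily so the helpers above can be used without the SDK installed.
  const {
    LambdaClient,
    PutFunctionConcurrencyCommand,
    PutProvisionedConcurrencyConfigCommand,
  } = require('@aws-sdk/client-lambda');

  const client = new LambdaClient({}); // region and credentials come from the environment
  await client.send(
    new PutFunctionConcurrencyCommand(reservedConcurrencyParams('hello', 100))
  );
  await client.send(
    new PutProvisionedConcurrencyConfigCommand(
      provisionedConcurrencyParams('hello', 'live', 10)
    )
  );
}

module.exports = { applyConcurrencySettings };
```

Calling `applyConcurrencySettings()` changes real resources and provisioned concurrency is billed while it is configured, so try it on a test function first.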

Useful Resources

- A YouTube video: End Cold Starts in Your Serverless Apps with AWS Lambda Provisioned Concurrency. This is a very detailed video about Lambda concurrency.

- An informative article about concurrency from AWS Documentation: Invocation Scaling.
