My Journey with Multimodal Data Preprocessing and Truncated SVD

Dealing with multimodal dataset and dimensionality reduction

In one of our projects, we had a dataset containing over 1500 features to create a machine learning model. By the multimodality, I mean there were a combination of numerical, categorical, and text features in it.

To handle this dataset, I employed a standard strategy of preprocessing and the current features transformed to more features. A crucial aspect of analyzing these additional features was determining a method to identify the most important ones.

Of course, before modeling, we analyze data to keep the more informative samples and features. But in this project, we still deal with curse of dimensionality.

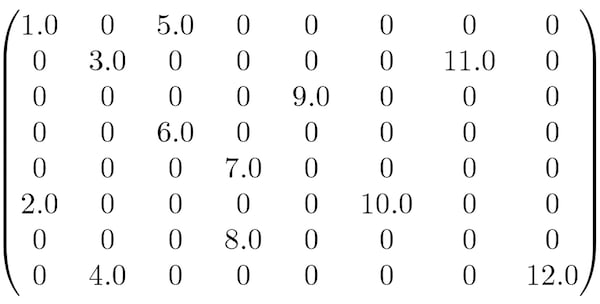

For example, among these features, there were numerous categorical variables for which I utilized OneHotEncoding for them to convert to the numeric values. This picture shows it in simple, but if want to know more about it you can visit this link.

Furthermore, there are some text features in this dataset. When we tried to use these kind of features, the Tfidf-Vectorizer came in use! This technique tries to identify the more important tokens in a text by counting their frequencies in the documents. This picture may show the idea behind in a one shot, but if you want to known more you can again visit this link.

In our machine learning pipeline, consists of featurization, preprocessing and modeling. After the featurization step, we faced with an enormous sparse dat matrix. In a sparse matrix, there are lots of cells with zero and just few cells containing non-zero values. Using this kind of data matrix can cause to computational overhead and slow down the modeling process.

The first idea was to use the well-known PCA algorithm as a dimensionality reduction technique. When I attempted to apply the PCA algorithm, I encountered an error indicating that the algorithm could not be used with a sparse matrix. But why?

Consequently, I started exploring about the Truncated SVD as an alternative method.

In the next section I tried to sum up all the things I learned from this technique in comparison to the PCA.

Why the Truncated SVD was better than PCA in for a sparse data matrix?

Truncated SVD (Singular Value Decomposition) and PCA (Principal Component Analysis) are both linear algebra techniques that can be used to reduce the dimensionality of high-dimensional data, while retaining the most important information.

As I mentioned before, I was dealing with a large dataset that after featurization step it was still large enough to push me to know about the alternative way to deal with!

The main differences between Truncated SVD and PCA which I found out about are:

1. The objective:

PCA aims to find the directions (principal components) that explain the maximum amount of variance in the data, while Truncated SVD aims to factorize a matrix into two lower rank matrices.

2. The input data:

PCA is typically applied to a covariance matrix, while Truncated SVD can be applied directly to a data matrix without computing the covariance matrix.

3. The output:

PCA provides the principal components, which are linear combinations of the original variables, while Truncated SVD provides the singular vectors, which are also linear combinations of the original variables.

4. The number of components:

In PCA, the number of principal components to keep is typically chosen based on the percentage of variance explained or by setting a fixed number of components. In Truncated SVD, the number of singular vectors to keep is typically chosen based on the rank of the matrix or a fixed number of components.

5. The computation:

Truncated SVD is typically faster than PCA for large datasets, as it only computes a subset of the singular vectors and values.

As I first described, our dataset was This was very important in our case. Because we use a pay-as-you-go Azure Compute to run the experiments. It was crucial to save the computation time.

To sum up...

Both Truncated SVD and PCA are useful techniques for reducing the dimensionality of high-dimensional data.

The choice of which technique to use depends on the specific requirements of the problem at hand. In our case, the large sparse data matrix, need to choose the Truncated SVD.

In my next post, I will show a simple code to use this technique!

Top comments (0)