I'm a big fan of https://greatexpectations.io and had an opportunity and pleasure to utilize this fantastic library in a few projects already. I would like to share a bit of flavour and possibilities which are coming with https://greatexpectations.io when you my dear reader decide to try it. As a presentation layer I decided to use #FastAPI as this is currently my fav python framework.

In this sample integration I nail down great expectation dataset SqlAlchemyDataset as core component and build around it REST API which is easily pluggable to any other project as a separate micro service.

These days we are in kind of expect unexpected mode. I think similar things are going on with data we are gathering and any kind of data. It is growing and we don't know why. We don't how to use it - we don't know if we need it. More or less we don't know what we don't know :) But there is a solution: we need to learn how to ask questions or what our expectations are in context of the data we posses. And now https://greatexpectations.io with set of already formed questions/expectation here: https://greatexpectations.io/expectations/ enters the scene.

You ask where the difference is. As a user I can pick visual client of my choice and connect it to sql database. Start reading data or even write sql to learn about data. I try to challenge this a bit here: How much time it will take to read all these tables and data ? It is doable ? How many sql queries you need to write, run and validate to answer your questions ? Do we all need to know sql magic? Well sometimes it can be just simple row count triggered every day but to be honest it is very very improbable that data will be as simple as that theses days.

Let's try a different approach.

Project Setup.

You need docker up and running before you start.

Next clone repository from this uri: https://github.com/grillazz/fastapi-greatexpectations

Once you have repository please run setup steps as follow:

- make build will download all base docker images and project dependencies and build local docker images

- make up will run docker containers locally

- make feed_db will load test data to database

About inspecting database

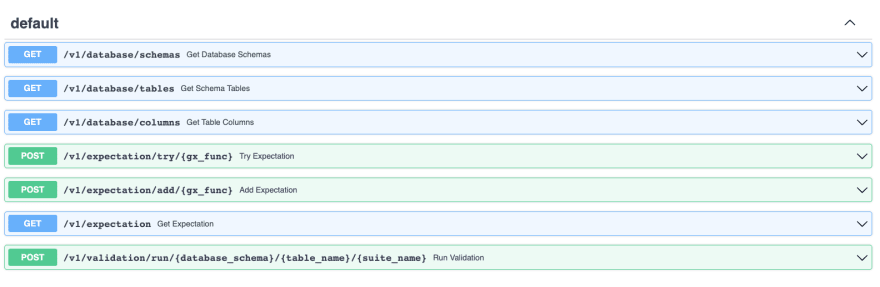

As mentioned earlier assumption is that we don't know our data and we need to inspect a bit what is in there to get information about objects like schemas, databases and tables/columns. To achieve this we have three endpoints:

curl -X 'GET' 'http://0.0.0.0:8585/v1/database/schemas' -H 'accept: application/json'

This call returns a list of database schemas. We are looking to have our sample schema shakespeare as container of sample data feed. So the response should look like one below:

[

"information_schema",

"public",

"shakespeare"

]

Next with schema in hand we can call another endpoint to get a list of tables calling:

curl -X 'GET' 'http://0.0.0.0:8585/v1/database/tables?sql_db_schema=shakespeare' -H 'accept: application/json'

As a result of this call we get a list of available tables in shakespeare schema:

[

"wordform",

"work",

"chapter",

"character",

"character_work",

"paragraph"

]

And finally we can inspect target/table to check how it is build and what datatypes it contains by calling:

curl -X 'GET' 'http://0.0.0.0:8585/v1/database/columns?database_schema=shakespeare&schema_table=chapter' -H 'accept: application/json'

As we pick chapter table we expect below response which is served by SqlAlchemyDataset meth columns:

[

{

"name": "id",

"type": {},

"nullable": false,

"default": null,

"autoincrement": false,

"comment": null

},

{

"name": "work_id",

"type": {

"length": 32,

"collation": null,

"_expect_unicode": false,

"_expect_unicode_error": null,

"_warn_on_bytestring": false

},

"nullable": false,

"default": null,

"autoincrement": false,

"comment": null

},

{

"name": "section_number",

"type": {},

"nullable": false,

"default": null,

"autoincrement": false,

"comment": null

},

{

"name": "chapter_number",

"type": {},

"nullable": false,

"default": null,

"autoincrement": false,

"comment": null

},

{

"name": "description",

"type": {

"length": 256,

"collation": null,

"_expect_unicode": false,

"_expect_unicode_error": null,

"_warn_on_bytestring": false

},

"nullable": false,

"default": null,

"autoincrement": false,

"comment": null

}

]

Time to expect / question / validate data

Before we start saving expectation suites and run validations there is an option to give it a try. We can run every expectation with no cost and additional overhead - we can call it in sandbox of expectations. Once we pick table/target and we decide to test one of expectations defined here https://greatexpectations.io/expectations/ we can run a call:

curl -X 'POST' \

'http://0.0.0.0:8585/v1/expectation/try/expect_table_row_count_to_equal?database_schema=shakespeare&schema_table=chapter' \

-H 'accept: application/json' \

-H 'Content-Type: application/json' \

-d '{"value":100}'

What is going here. We decided to test if table chapter in shakespeare schema has 100 rows using expect_table_row_count_to_equal and we got a response:

{

"success": false,

"expectation_config": {

"_expectation_type": "expect_table_row_count_to_equal",

"_kwargs": {

"value": 100,

"result_format": "BASIC"

},

"_raw_kwargs": null,

"meta": {},

"success_on_last_run": null,

"_ge_cloud_id": null,

"_expectation_context": null

},

"result": {

"observed_value": 945

},

"meta": {},

"exception_info": {

"raised_exception": false,

"exception_traceback": null,

"exception_message": null

}

}

Looks we were wrong and the real result equals 945. Let's try one more time running an updated call:

curl -X 'POST' \

'http://0.0.0.0:8585/v1/expectation/try/expect_table_row_count_to_equal?database_schema=shakespeare&schema_table=chapter' \

-H 'accept: application/json' \

-H 'Content-Type: application/json' \

-d '{"value":945}'

Now same test with a new value of 945 is passing with success:

{

"include_rendered_content": false,

"success": true,

"expectation_config": {

"_expectation_type": "expect_table_row_count_to_equal",

"_kwargs": {

"value": 945,

"result_format": "BASIC"

},

"_raw_kwargs": null,

"meta": {},

"success_on_last_run": null,

"_ge_cloud_id": null,

"_expectation_context": null,

"_include_rendered_content": false

},

"result": {

"observed_value": 945

},

"meta": {},

"exception_info": {

"raised_exception": false,

"exception_traceback": null,

"exception_message": null

}

}

One more test to expect column values to not be null. We expect there will be no null values for column description in table chapter:

curl -X 'POST' \

'http://0.0.0.0:8585/v1/expectation/try/expect_column_values_to_not_be_null?database_schema=shakespeare&schema_table=chapter' \

-H 'accept: application/json' \

-H 'Content-Type: application/json' \

-d '{"column":"description"}'

Again we are wrong as nearly 18% of values in description in table chapter has a null value:

{

"success": false,

"expectation_config": {

"_expectation_type": "expect_column_values_to_not_be_null",

"_kwargs": {

"column": "description",

"result_format": "BASIC"

},

"_raw_kwargs": null,

"meta": {},

"success_on_last_run": null,

"_ge_cloud_id": null,

"_expectation_context": null,

"_rendered_content": null

},

"result": {

"element_count": 945,

"unexpected_count": 169,

"unexpected_percent": 17.883597883597886,

"unexpected_percent_total": 17.883597883597886,

"partial_unexpected_list": []

},

"meta": {},

"exception_info": {

"raised_exception": false,

"exception_traceback": null,

"exception_message": null

},

"rendered_content": null

}

this is not the end...

There are a few superpowers behind the scene like pydantic or sqlalchemy but you can figure out this by reading code in repository here: https://github.com/grillazz/fastapi-greatexpectations

I'm still working on it and you can expect next use case soon with more real data and different sql provider.

This can also work with swagger ui on http://0.0.0.0:8585/docs

Testing data feed is coming from: https://github.com/catherinedevlin/opensourceshakespeare

Header picture is coming from:

https://www.behance.net/martamiazek

to be continued...

Top comments (0)