The Amazon Elastic Kubernetes Service (Amazon EKS) team is pleased to announce support for Kubernetes version 1.24 for Amazon EKS and Amazon EKS Distro. We are excited for our customers to experience the power of the “Stargazer” release. Each Kubernetes release is given a name by the release team. The team chose “Stargazer” for this release to honor the work done by hundreds of contributors across the globe: “Every single contributor is a star in our sky.” Amazon EKS extends its sincere thanks to the upstream community and the Kubernetes 1.24 Release Team for bringing this release to the greater cloud-native ecosystem.

Updating an Amazon EKS cluster Kubernetes version

When a new Kubernetes version is available in Amazon EKS, you can update your Amazon EKS cluster to it. The control plane is updated one minor version at a time, so moving from 1.23 to 1.24 is a single update.


To update the Kubernetes version for your cluster, first compare the Kubernetes version of your cluster control plane to the Kubernetes version of your nodes.

Get the Kubernetes version of your cluster control plane:

```shell
kubectl version --short
```

Get the Kubernetes version of your nodes. This command returns all self-managed and managed Amazon EC2 and Fargate nodes. Each Fargate Pod is listed as its own node.

```shell
kubectl get nodes
```
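Comparing the two outputs by eye works for small clusters. The following is a minimal bash sketch of the same check, assuming version strings shaped like the v1.23.x-eks-… values shown in the VERSION column of kubectl get nodes. All function names are hypothetical helpers, not part of any AWS tool. The sketch encodes two rules from the EKS upgrade guidance: the control plane moves one minor version at a time, and managed and Fargate nodes should be on the same minor version as the control plane before you update it.

```shell
#!/usr/bin/env bash
# Sketch only: hypothetical helpers for pre-upgrade version checks.

# Extract the minor number from strings such as "v1.23.13-eks-abc1234" or "1.24".
minor_of() {
  local v="${1#v}"        # drop a leading "v"
  v="${v#*.}"             # drop the major number and its dot
  echo "${v%%[^0-9]*}"    # keep only the leading digits
}

# Succeed only if TARGET ($2) is exactly one minor version above CURRENT ($1),
# because EKS updates the control plane one minor version at a time.
is_next_minor() {
  local current target
  current=$(minor_of "$1")
  target=$(minor_of "$2")
  [ "$target" -eq $((current + 1)) ]
}

# Read node versions (one per line) on stdin and print each one whose minor
# version differs from the control plane version passed as $1.
nodes_off_minor() {
  local cp_minor node
  cp_minor=$(minor_of "$1")
  while read -r node || [ -n "$node" ]; do
    [ -z "$node" ] && continue
    [ "$(minor_of "$node")" != "$cp_minor" ] && echo "$node"
  done
  return 0
}
```

For example, kubectl get nodes -o jsonpath='{range .items[*]}{.status.nodeInfo.kubeletVersion}{"\n"}{end}' | nodes_off_minor v1.23 prints every node that is not yet on 1.23; empty output means the nodes match the control plane.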

The major change in EKS 1.24 is that Amazon EKS ended support for dockershim.

Why we’re moving away from dockershim

Docker was the first container runtime used by Kubernetes. This is one of the reasons why Docker is so familiar to many Kubernetes users and enthusiasts. Docker support was hardcoded into Kubernetes in a component the project refers to as dockershim. As containerization became an industry standard, the Kubernetes project added support for additional runtimes. This culminated in the implementation of the Container Runtime Interface (CRI), which lets system components (like the kubelet) talk to container runtimes in a standardized way. As a result, dockershim became an anomaly in the Kubernetes project. Dependencies on Docker and dockershim have crept into various tools and projects in the CNCF ecosystem, resulting in fragile code.

Docker and Containerd in Kubernetes

Think of Docker as a big car with all of its parts: the engine, the steering wheel, the pedals, and so on. If we only need the engine, we can extract it and move it into another system.

This is essentially what happened when Kubernetes needed such an engine. The project basically said, "We don't need the entire car that is Docker; let's just pull out its container runtime, containerd, and have the kubelet talk to it directly."

Docker vs. Containerd: What Is The Difference?

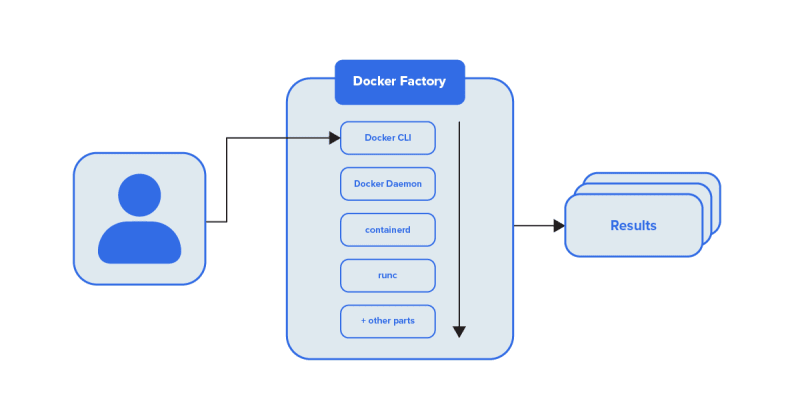

Docker was written with human beings in mind. We can imagine it as a translator that tells an entire factory, filled with robots, what the human wants to build or do. The Docker CLI is the translator; the other pieces of Docker are the robots in the factory.

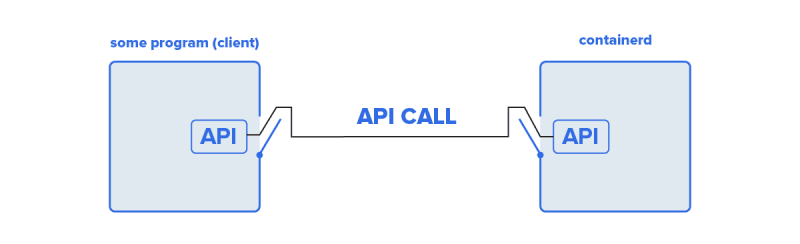

Keep in mind that Kubernetes is a program, and containerd is also a program. Programs can talk to each other efficiently, even when the language they speak is complex. containerd was built from the ground up to let other programs give it instructions. It receives those instructions as API calls: containerd exposes a gRPC API, and the kubelet reaches it through containerd's built-in CRI plugin.

The messages sent in API calls need to follow a certain format so that the receiving program can understand them.

Removal of Dockershim

The most significant change in this release is the removal of dockershim, the Container Runtime Interface (CRI) shim that let the kubelet use Docker Engine. Starting with Kubernetes 1.24, the Amazon Machine Images (AMIs) provided by Amazon EKS support only the containerd runtime. The EKS optimized AMIs for version 1.24 no longer accept the enable-docker-bridge, docker-config-json, and container-runtime flags in the bootstrap script.

Before upgrading your worker nodes to Kubernetes 1.24, you must remove all references to these flags from your node bootstrap configuration, such as launch template user data.
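A quick way to find leftover references is to search your infrastructure code and user data for the three flags. This is a sketch assuming your bootstrap configuration lives in files under a single directory; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: hypothetical helper that lists files still passing bootstrap
# flags the EKS 1.24 AMIs no longer accept.
find_removed_bootstrap_flags() {
  local dir="${1:-.}"
  # -r: recurse, -l: print matching file names only, -E: extended regex.
  # "--" stops grep from treating the pattern (which starts with "--") as an option.
  grep -rlE -- '--(enable-docker-bridge|docker-config-json|container-runtime)' "$dir"
}
```

For example, find_removed_bootstrap_flags ./terraform lists every file that needs editing; grep exits with status 1 when nothing matches.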

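Open Container Initiative (OCI) images produced by Docker build tools will continue to run in your Amazon EKS clusters as before. As an end user of Kubernetes, you will not experience significant changes unless your workloads depend on Docker itself, for example by mounting the Docker socket (/var/run/docker.sock).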

For more information, see Kubernetes is Moving on From Dockershim: Commitments and Next Steps on the Kubernetes Blog.
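To confirm which runtime each node actually reports, the following sketch filters kubectl get nodes output produced with the custom columns shown in the comment. It assumes the runtime is reported in the usual scheme://version form (for example containerd://… or docker://…) and prints the names of nodes still running Docker Engine. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: hypothetical helper. Expects "NAME RUNTIME" pairs on stdin, e.g.:
#   kubectl get nodes --no-headers \
#     -o custom-columns=NAME:.metadata.name,RUNTIME:.status.nodeInfo.containerRuntimeVersion
docker_nodes() {
  # Print the node name when the runtime column starts with "docker://".
  awk '$2 ~ /^docker:\/\// { print $1 }'
}
```

Empty output means every node already reports a non-Docker runtime such as containerd.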

Kubernetes 1.24 features and removals

Admission controllers enabled

CertificateApproval, CertificateSigning, CertificateSubjectRestriction, DefaultIngressClass, DefaultStorageClass, DefaultTolerationSeconds, ExtendedResourceToleration, LimitRanger, MutatingAdmissionWebhook, NamespaceLifecycle, NodeRestriction, PersistentVolumeClaimResize, Priority, PodSecurityPolicy, ResourceQuota, RuntimeClass, ServiceAccount, StorageObjectInUseProtection, TaintNodesByCondition, and ValidatingAdmissionWebhook.

Important changes

Starting with Kubernetes 1.24, new beta APIs are no longer enabled in clusters by default. Existing beta APIs and new versions of existing beta APIs continue to be enabled. Amazon EKS follows the same behavior. For more information, see KEP-3136: Beta APIs Are Off by Default.
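To check which beta API group versions your cluster serves today (these remain enabled after the upgrade), you can filter the output of kubectl api-versions. A minimal sketch, assuming the usual group/version output format; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: hypothetical helper. Reads "group/version" lines on stdin
# (as printed by `kubectl api-versions`) and keeps only beta versions.
beta_api_versions() {
  # "|| true" keeps the exit status 0 when no beta versions are present.
  grep -E '/v[0-9]+beta[0-9]+$' || true
}
```

Usage: kubectl api-versions | beta_api_versions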

In Kubernetes 1.23 and earlier, kubelet serving certificate requests containing unverifiable IP and DNS Subject Alternative Names (SANs) were still issued automatically, with the unverifiable SANs omitted from the provisioned certificate. Starting with version 1.24, kubelet serving certificates aren't issued if any SAN can't be verified. This prevents the kubectl exec and kubectl logs commands from working. For more information, including how to determine whether you are impacted, the recommended workaround, and the long-term resolution, see Certificate signing considerations for Kubernetes 1.24 and later clusters in the EKS user guide.
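To see whether you are affected, look for kubelet serving certificate signing requests that stay Pending. The sketch below assumes the default kubectl get csr columns of this era (NAME, AGE, SIGNERNAME, REQUESTOR, REQUESTEDDURATION, CONDITION) with the header line included; if your kubectl prints different columns, adjust the field numbers. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: hypothetical helper. Assumes default `kubectl get csr` output:
#   NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION
pending_kubelet_serving_csrs() {
  # Skip the header, then print names of kubelet-serving CSRs still Pending.
  awk 'NR > 1 && $3 == "kubernetes.io/kubelet-serving" && $NF == "Pending" { print $1 }'
}
```

Usage: kubectl get csr | pending_kubelet_serving_csrs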

Topology Aware Hints

It is common practice to deploy Kubernetes workloads to nodes running across different Availability Zones (AZs) for resiliency and fault isolation. While this architecture provides great benefits, in many scenarios it also results in cross-AZ data transfer charges. You may refer to this post to learn more about common scenarios for data transfer charges on EKS. Amazon EKS customers can now use Topology Aware Hints to keep Kubernetes Service traffic within the same Availability Zone. The feature is enabled by default, and each Service opts in through the service.kubernetes.io/topology-aware-hints annotation. Topology Aware Hints provide a flexible mechanism for passing hints to components such as kube-proxy, which use them to influence how traffic is routed within the cluster.
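As a sketch of the opt-in, the function below prints a Service manifest carrying the annotation with the value auto. The Service name, selector, and port are hypothetical placeholders. Note that the EndpointSlice controller only adds hints when endpoints are spread sufficiently across zones, so traffic may still cross zones for small deployments.

```shell
#!/usr/bin/env bash
# Sketch only: hypothetical helper that prints a Service manifest opting in
# to Topology Aware Hints. Arguments: name, app label, port.
render_topology_aware_service() {
  local name="$1" app="$2" port="$3"
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
  annotations:
    # Opt this Service in to Topology Aware Hints (annotation name used in 1.23/1.24).
    service.kubernetes.io/topology-aware-hints: "auto"
spec:
  selector:
    app: ${app}
  ports:
    - port: ${port}
      targetPort: ${port}
EOF
}
```

Usage: render_topology_aware_service web web 80 | kubectl apply -f -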

Pod Security Policy (PSP) was deprecated in Kubernetes version 1.21 and will be removed in version 1.25. PSPs are being replaced by Pod Security Admission (PSA), a built-in admission controller that implements the security controls outlined in the Pod Security Standards (PSS). PSA and PSS both reached beta status in Kubernetes 1.23 and are enabled in EKS. Before migrating from PSP to PSA and PSS, please review this blog post.
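With PSA, you choose a Pod Security Standard level per namespace through labels. The sketch below prints a Namespace manifest that enforces a level and also warns on violations. The namespace name is a hypothetical placeholder; the pod-security.kubernetes.io/enforce and pod-security.kubernetes.io/warn label keys are the ones PSA reads.

```shell
#!/usr/bin/env bash
# Sketch only: hypothetical helper. Arguments: namespace name, PSS level.
render_psa_namespace() {
  local name="$1" level="$2"
  # PSS defines exactly three levels; reject anything else.
  case "$level" in
    privileged|baseline|restricted) ;;
    *) echo "unknown Pod Security Standard level: $level" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: ${name}
  labels:
    # Reject Pods that violate the chosen level...
    pod-security.kubernetes.io/enforce: ${level}
    # ...and show warnings to users at admission time as well.
    pod-security.kubernetes.io/warn: ${level}
EOF
}
```

Usage: render_psa_namespace team-a baseline | kubectl apply -f -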

You can also leverage Policy-as-Code (PaC) solutions such as Kyverno and OPA/Gatekeeper from the Kubernetes ecosystem as an alternative to PSA. Please visit the Amazon EKS Best Practices Guide for more information on PaC solutions and for help deciding between PSA and PaC.

Simplified scaling for EKS Managed Node Groups (MNG)

For Kubernetes 1.24, we have contributed a feature to the upstream Cluster Autoscaler project that simplifies scaling an Amazon EKS managed node group (MNG) to and from zero nodes. Previously, you had to tag the underlying EC2 Auto Scaling group (ASG) so that the Cluster Autoscaler could recognize the resources, labels, and taints of an MNG that was scaled to zero nodes.

Starting with Kubernetes 1.24, when there are no running nodes in the MNG, the Cluster Autoscaler will call the EKS DescribeNodegroup API to get the information it needs about MNG resources, labels, and taints. When the value of a Cluster Autoscaler tag on the ASG powering an EKS MNG conflicts with the value of the MNG itself, the Cluster Autoscaler will prefer the ASG tag so that customers can override values as necessary.
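Overrides still go through ASG tags that follow the Cluster Autoscaler's node-template convention. The sketch below builds the AWS CLI shorthand for a label override; the Auto Scaling group name, label, and value are hypothetical. Also make sure the Cluster Autoscaler's IAM role allows eks:DescribeNodegroup, since the new lookup depends on it.

```shell
#!/usr/bin/env bash
# Sketch only: hypothetical helper. Builds the --tags shorthand for
# `aws autoscaling create-or-update-tags` that overrides a node label
# the Cluster Autoscaler assumes for a scaled-to-zero node group.
ca_label_override_tag() {
  local asg="$1" label="$2" value="$3"
  printf 'ResourceId=%s,ResourceType=auto-scaling-group,Key=k8s.io/cluster-autoscaler/node-template/label/%s,Value=%s,PropagateAtLaunch=false\n' \
    "$asg" "$label" "$value"
}
```

Usage: aws autoscaling create-or-update-tags --tags "$(ca_label_override_tag my-asg workload batch)"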


Upgrade your EKS cluster with Terraform

- Clone the following Bitbucket repository, which contains the Terraform files for the eks-test cluster:

```shell
git clone git@bitbucket.org:example.eks.module
```

- Change the EKS version in the variables.tf file (alternatively, set eks_version in terraform.tfvars, which overrides the default). In this case, we change it from 1.23 to 1.24.

```shell
vi variables.tf
```

```hcl
variable "eks_version" {
  description = "Kubernetes cluster version provided by AWS EKS"
  type        = string
  default     = "1.24"
}
```

- Once the above modifications are done, run terraform plan to verify them:

```shell
terraform plan
```

If only the cluster version changed, the output should be: Plan: 0 to add, 1 to change, 0 to destroy. The single change is the EKS version moving from 1.23 to 1.24. If you also changed files that define a launch configuration and an Auto Scaling group, the "to add" count increases by 2 for each such file.
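To avoid approving a plan that would replace the cluster, you can check the summary line before applying. This is a sketch assuming terraform plan -no-color output on stdin; the function name is hypothetical, and a plan with no changes (which prints no Plan: summary line) is reported as a failure so that you notice it.

```shell
#!/usr/bin/env bash
# Sketch only: hypothetical helper. Reads `terraform plan -no-color` output on
# stdin, prints the summary line, and fails if anything would be destroyed.
check_plan_summary() {
  local summary destroy
  summary=$(grep -E '^Plan: [0-9]+ to add, [0-9]+ to change, [0-9]+ to destroy\.' || true)
  if [ -z "$summary" ]; then
    echo "no plan summary found" >&2
    return 1
  fi
  echo "$summary"
  # Pull out the number in front of "to destroy."
  destroy=$(echo "$summary" | sed -E 's/.* ([0-9]+) to destroy\..*/\1/')
  [ "$destroy" -eq 0 ]
}
```

Usage: terraform plan -no-color | check_plan_summary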

- After verification, apply the changes:

```shell
terraform apply
# Review the plan again and type 'yes' when prompted.
```

Upgrading Managed EKS Add-ons

For managed add-ons the change is straightforward: update the version of each add-on. In my case, this cluster uses kube-proxy, CoreDNS, and the Amazon EBS CSI driver.

Terraform resources for the add-ons:

```hcl
# kube-proxy: the add-on version tracks the cluster's Kubernetes minor version.
resource "aws_eks_addon" "kube_proxy" {
  cluster_name      = aws_eks_cluster.cluster[0].name
  addon_name        = "kube-proxy"
  addon_version     = "v1.24.7-eksbuild.2"
  resolve_conflicts = "OVERWRITE" # on conflict, overwrite changes made outside the add-on
}

# CoreDNS
resource "aws_eks_addon" "core_dns" {
  cluster_name      = aws_eks_cluster.cluster[0].name
  addon_name        = "coredns"
  addon_version     = "v1.8.7-eksbuild.3"
  resolve_conflicts = "OVERWRITE"
}

# Amazon EBS CSI driver
resource "aws_eks_addon" "aws_ebs_csi_driver" {
  cluster_name      = aws_eks_cluster.cluster[0].name
  addon_name        = "aws-ebs-csi-driver"
  addon_version     = "v1.13.0-eksbuild.1"
  resolve_conflicts = "OVERWRITE"
}
```
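Before pinning versions, you can ask EKS which add-on versions it offers for 1.24 and sanity-check the kube-proxy version against the cluster version. In this sketch, list_addon_versions wraps aws eks describe-addon-versions, and kube_proxy_matches_cluster is a hypothetical helper built on the assumption that kube-proxy add-on versions start with v followed by the cluster version and a dot, as in v1.24.7-eksbuild.2.

```shell
#!/usr/bin/env bash
# Sketch only: hypothetical helpers.

# List the add-on versions EKS offers for a Kubernetes version (calls the AWS CLI).
list_addon_versions() {
  local addon="$1" k8s_version="$2"
  aws eks describe-addon-versions \
    --addon-name "$addon" \
    --kubernetes-version "$k8s_version" \
    --query 'addons[].addonVersions[].addonVersion' \
    --output text
}

# Succeed if a kube-proxy add-on version (e.g. v1.24.7-eksbuild.2) belongs to
# the given cluster version (e.g. 1.24).
kube_proxy_matches_cluster() {
  local addon_version="$1" cluster_version="$2"
  case "$addon_version" in
    "v${cluster_version}."*) return 0 ;;
    *) return 1 ;;
  esac
}
```

Usage: list_addon_versions kube-proxy 1.24, then kube_proxy_matches_cluster v1.24.7-eksbuild.2 1.24 && echo ok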

After upgrading the EKS control plane

Remember to upgrade the core Deployments and DaemonSets to the versions recommended for EKS 1.24:

- kube-proxy: 1.24.7-minimal-eksbuild.2 (note the change to the minimal image variant, which is mentioned only in the official documentation)

- VPC CNI: 1.11.4-eksbuild.1 (version 1.12 is available, but 1.11.4 is the recommended one)

- aws-ebs-csi-driver: v1.13.0-eksbuild.1

The above is just a recommendation from AWS. You should look at upgrading all your components to match the 1.24 Kubernetes version. They could include:

- cluster-autoscaler or Karpenter

- kube-state-metrics

- metrics-server
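Cluster Autoscaler releases follow Kubernetes minor versions, and its documentation recommends running the release that matches your control plane; kube-state-metrics and metrics-server use their own compatibility matrices instead. A sketch for the Cluster Autoscaler check, assuming the image reference carries an explicit tag such as :v1.24.0 (not a digest); the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: hypothetical helpers. Assumes an image reference with an
# explicit "vMAJOR.MINOR.PATCH" tag and no digest.

# Extract the minor number from an image tag, e.g. ".../cluster-autoscaler:v1.24.0" -> 24.
image_minor() {
  local tag="${1##*:}"   # text after the last ":" is the tag
  tag="${tag#v}"         # drop a leading "v"
  tag="${tag#*.}"        # drop the major number and its dot
  echo "${tag%%[^0-9]*}" # keep only the leading digits
}

# Succeed if the image's minor version equals the cluster minor ($2, e.g. 24).
cluster_autoscaler_matches() {
  [ "$(image_minor "$1")" = "$2" ]
}
```

Usage, assuming the Deployment is named cluster-autoscaler in kube-system: cluster_autoscaler_matches "$(kubectl -n kube-system get deployment cluster-autoscaler -o jsonpath='{.spec.template.spec.containers[0].image}')" 24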
