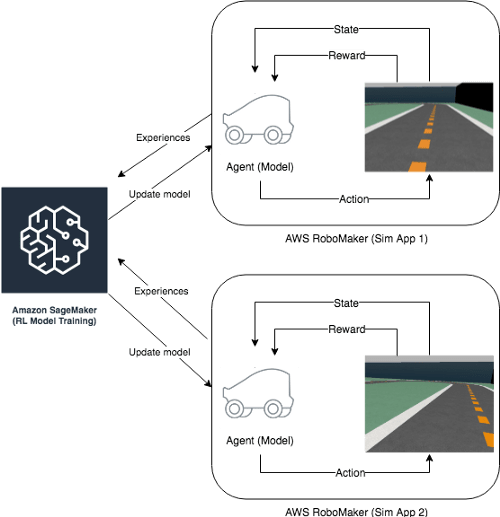

The DeepRacer car, also referred to as the ‘agent’, is a fully autonomous race car. We program it in Python and train it over many iterations in AWS SageMaker, in a simulation environment spun up by AWS RoboMaker.

We don’t provide the training data upfront as in supervised and unsupervised machine learning, nor do we apply any labels initially.

Instead, the agent supplies its own time-delayed label, known as the ‘reward’.

The data is gathered by the agent’s camera as images of the simulated track, which are converted to greyscale. The agent tries an ‘action’ (in JSON format, with speed and angle properties), chosen from a set that you define before training. It then analyses the rewards received for its attempts and repeats the process with different actions in search of greater rewards. Each reward is a float returned by your reward function (discussed later).
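As a rough sketch of the two pieces mentioned above, here is what an action space and a minimal reward function might look like. The action values are made up for illustration, and the `all_wheels_on_track` key is assumed to be one of the inputs passed to the reward function in the `params` dictionary.

```python
import json

# Hypothetical action space: each action pairs a steering angle (degrees)
# with a speed (m/s). The values are illustrative, not recommended settings.
ACTION_SPACE = [
    {"steering_angle": -30.0, "speed": 1.0},
    {"steering_angle": 0.0, "speed": 2.0},
    {"steering_angle": 30.0, "speed": 1.0},
]


def reward_function(params):
    """Return a float reward; a higher value means a better outcome."""
    # Assumed input key: True when all four wheels are inside the track borders.
    if params["all_wheels_on_track"]:
        return 1.0
    return 1e-3  # small but still positive reward when the car leaves the track


if __name__ == "__main__":
    # One action, serialised the way it would appear as JSON.
    print(json.dumps(ACTION_SPACE[1]))
    print(reward_function({"all_wheels_on_track": True}))
```

The agent never sees this code directly. It only sees the float that comes back after each action, and over many attempts it learns which actions tend to earn more.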

In short, the agent’s single focus is to collect the maximum reward possible.

Race League and Rules

The rules are simple. Everyone gets 4 minutes to achieve their best lap time on the re:Invent track. You’re allowed to come off the track a maximum of 3 times for a lap to qualify. But each “off course” must be fixed by manually placing the car back on the track, which eats into your lap time.

Reinforcement Learning

Reinforcement Learning (RL) is a machine learning technique that enables an agent to learn in an interactive environment by trial and error, using feedback from its own actions and experiences.

Unlike supervised learning, where the feedback provided to the agent is a correct set of actions for performing a task, reinforcement learning uses rewards and punishments as signals for positive and negative behaviour.

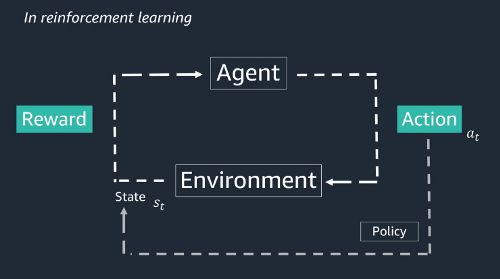

Compared to unsupervised learning, reinforcement learning differs in its goals. While the goal in unsupervised learning is to find similarities and differences between data points, in reinforcement learning the goal is to find a suitable action model that maximises the total cumulative reward of the agent. The figure above represents the basic idea and the elements involved in a reinforcement learning model.

Environment: Physical world in which the agent operates.

State: Current situation of the agent.

Reward: Feedback from the environment.

Policy: Method to map agent’s state to actions.

Value: Future reward that an agent would receive by taking an action in a particular state.

SageMaker: With each batch of experiences from RoboMaker, SageMaker updates the neural network, “and hopefully your model has improved.”
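To make these terms concrete, here is a minimal sketch of the trial-and-error loop on a toy problem. Everything in it is an assumption for illustration: the "track" is a five-cell corridor, and a small lookup table (tabular Q-learning) stands in for the neural network that SageMaker would update. It is not how DeepRacer itself is trained.

```python
import random

N_STATES = 5          # positions 0..4 on a toy one-dimensional "track"
ACTIONS = (-1, +1)    # move left or move right
GOAL = N_STATES - 1


def step(state, action):
    """Environment: apply the action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    # Value: q[state][action_index] estimates the future reward of an action.
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Policy: mostly pick the best-looking action, sometimes explore.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Nudge the estimate toward reward plus discounted future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Map each non-goal state to the action with the highest estimated value."""
    return [ACTIONS[0 if row[0] > row[1] else 1] for row in q[:GOAL]]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

After training, the greedy policy should choose "move right" in every state, because that is the only route to the reward at the end of the corridor.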

Tips and Tricks

Keep your models simple. The model above focuses on keeping the car on the centre line, which is a great place to start; as a beginner, I wouldn’t change a thing about it.

Don’t reward with a negative float. It can push the car to end episodes early to avoid the penalties, which cuts valuable training episodes short. Punish instead by multiplying the reward by a decimal value below 1.
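A minimal sketch of this tip, assuming the reward function receives `track_width`, `distance_from_center` and `all_wheels_on_track` in its `params` dictionary. The thresholds and multipliers are hypothetical and would need tuning for your own model.

```python
def reward_function(params):
    """Scale a positive base reward down instead of returning a negative one."""
    # Assumed input keys passed to the reward function.
    track_width = params["track_width"]
    distance_from_center = params["distance_from_center"]
    all_wheels_on_track = params["all_wheels_on_track"]

    reward = 1.0  # base reward for every step

    # Hypothetical penalty factors; tune them for your own model.
    if distance_from_center > 0.25 * track_width:
        reward *= 0.5   # drifting away from the centre line
    if not all_wheels_on_track:
        reward *= 0.1   # leaving the track is punished hardest

    return float(reward)  # always greater than zero
```

Because every penalty is a multiplication by a value between 0 and 1, the reward shrinks for bad behaviour but never drops below zero.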

Take a look at the logs of your training. They are also in CloudWatch, but they are not very readable there.

https://codelikeamother.uk/using-jupyter-notebook-for-analysing-deepracer-s-logs

Train your models for 1–2 hours. You can clone them to continue training further, but 1–2 hours gives a good indication of whether you are making progress on the track (see left).

My Experience

I’ve really enjoyed the DeepRacer experience, both as a fun competition and, even more, as a way into understanding RL and machine learning in general. It’s taken a lot of time to get through the vast amount of material, but it has been well worth the learning journey. If you are interested in learning more, just let me know!

The best way to get involved is to race a model you’ve made yourself; then you’re hooked, which is a good thing.

I plan to write an enhanced DeepRacer guide in the future, focused on ways to be competitive and efficient with your time. Hopefully they work for us at the end of October :)

Thank you!

Useful Resources

Getting Started

https://aws.amazon.com/deepracer/getting-started/

Build Your Own Track

https://medium.com/@autonomousracecarclub/guide-to-creating-a-full-re-invent-2018-deepracer-track-in-7-steps-979aff28a6f5

AWS Training

https://www.aws.training/Details/eLearning?id=32143
