I recently set up an AWS organization to follow the best practice of using a multi-account environment (read "Why should I set up a multi-account AWS environment?"). That was quite easy with Control Tower, which sets up an organization with some accounts (and a few additional things) for you. BUT using services across accounts wasn't easy for me.

Access-Management is Difficult

If you have used AWS before, you probably already know that managing access through permissions and policies is difficult. The possibilities are endless. Googling often turns up either a huge number of AWS doc pages and Stack Overflow questions, or nothing at all because your use case is very specific.

This article focuses on the possibilities for cross-account access to resources. It doesn't matter if the other account is from another company or just an account containing the production environment of your application.

ℹ️ Think of an account as a container for resources. It is a different concept from your Twitter account, which you log in to with a username and password.

There are three possibilities for cross-account access:

- Assuming a role

- Resource-based policies

- Access Control Lists (ACLs)

Not all of them are applicable for all services. I'll point that out later.

⚠️ If you are in an organization, make sure no Service Control Policy (SCP) is denying access. An SCP deny leaves you without access no matter which of the three possibilities you use.

How to Get Cross-Account Access?

Now you'll learn about the three possibilities mentioned earlier, each with an example.

Assume Role

To assume a role in the other account (let's call it target), we use AWS STS (Security Token Service). When assuming a role, we receive temporary credentials that give us the access granted to the assumed role.

Roles are the primary way to grant cross-account access.

This is the only option that works for all services, so it is always available. I'll explain when to choose which option later in this article.

Scenario: Allowing any identity from source account (111111111111) read access to DynamoDB table MyTable in the target account (999999999999).

What do we need?

- role in the target account with a policy allowing read access to MyTable and a policy allowing any identity from the source account to assume this role

- role in the source account with a policy allowing to assume the role in the target account

- the actual MyTable 🤪

What does it look like?

Policy allowing read access (in this case only GetItem) to MyTable (attached to MyTableCrossAccount):

{

"Effect": "Allow",

"Action": "dynamodb:GetItem",

"Resource": "arn:aws:dynamodb:eu-central-1:999999999999:table/MyTable"

}

Policy allowing an identity from source to assume the role (attached to MyTableCrossAccount):

{

"Effect": "Allow",

"Action": "sts:AssumeRole",

"Principal": {

"AWS": "111111111111"

}

}

ℹ️ "AWS": "111111111111" is a shorthand for "AWS": "arn:aws:iam::111111111111:root".

Policy allowing the role in target to be assumed (attached to MyTableAssume):

{

"Effect": "Allow",

"Action": "sts:AssumeRole",

"Resource": "arn:aws:iam::999999999999:role/MyTableCrossAccount"

}
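The three snippets above are single statements. As a minimal sketch, assuming you want complete policy documents to paste into IAM, they can be wrapped in the usual Version/Statement envelope. The helper `policy_document` and the variable names are my own for illustration; the account IDs, region, and role names are the ones from the scenario.

```python
import json

SOURCE_ACCOUNT = "111111111111"
TARGET_ACCOUNT = "999999999999"
REGION = "eu-central-1"


def policy_document(*statements):
    """Wrap one or more statements in the envelope IAM expects."""
    return {"Version": "2012-10-17", "Statement": list(statements)}


# Permission policy of MyTableCrossAccount (lives in the target account)
table_read_policy = policy_document({
    "Effect": "Allow",
    "Action": "dynamodb:GetItem",
    "Resource": f"arn:aws:dynamodb:{REGION}:{TARGET_ACCOUNT}:table/MyTable",
})

# Trust policy of MyTableCrossAccount (lives in the target account)
trust_policy = policy_document({
    "Effect": "Allow",
    "Action": "sts:AssumeRole",
    "Principal": {"AWS": SOURCE_ACCOUNT},
})

# Permission policy of MyTableAssume (lives in the source account).
# Roles are IAM resources, so the ARN has no region.
assume_policy = policy_document({
    "Effect": "Allow",
    "Action": "sts:AssumeRole",
    "Resource": f"arn:aws:iam::{TARGET_ACCOUNT}:role/MyTableCrossAccount",
})

if __name__ == "__main__":
    print(json.dumps(trust_policy, indent=2))
```

Keeping the account IDs in constants makes it harder to mix up source and target, which is the mistake I made most often.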

Assuming MyTableCrossAccount using the AWS CLI:

# logged in to 111111111111

aws sts assume-role --role-arn "arn:aws:iam::999999999999:role/MyTableCrossAccount" --role-session-name AWSCLI-Session

# use temporary credentials provided by the previous command

export AWS_ACCESS_KEY_ID=[from previous command's output]

export AWS_SECRET_ACCESS_KEY=[from previous command's output]

export AWS_SESSION_TOKEN=[from previous command's output]

# check the new identity

aws sts get-caller-identity

# now you can access MyTable

You get your temporary credentials (aws sts assume-role also returns the expiry date) and can use them to get the permissions provided by the policy attached to the assumed role.
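If you would rather not copy the three values by hand, the same flow can be sketched in Python. This is a rough sketch, not the one true way: the function name `assume_role_env` is made up, and it assumes the client you pass in behaves like `boto3.client("sts")`, whose `assume_role` response carries the credentials under the `Credentials` key.

```python
def assume_role_env(sts_client, role_arn, session_name="AWSCLI-Session"):
    """Assume a role and return (environment variables, expiry date).

    sts_client is expected to behave like boto3.client("sts").
    """
    response = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName=session_name,
    )
    creds = response["Credentials"]
    env = {
        "AWS_ACCESS_KEY_ID": creds["AccessKeyId"],
        "AWS_SECRET_ACCESS_KEY": creds["SecretAccessKey"],
        "AWS_SESSION_TOKEN": creds["SessionToken"],
    }
    return env, creds["Expiration"]


# Usage, assuming boto3 is installed and you are logged in to 111111111111:
#
#   import boto3
#   env, expires = assume_role_env(
#       boto3.client("sts"),
#       "arn:aws:iam::999999999999:role/MyTableCrossAccount",
#   )
#   for name, value in env.items():
#       print(f"export {name}={value}")
```

The returned expiry date tells you when you have to assume the role again.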

BONUS: externalId Condition for 3rd Party Access

In a multi-tenant environment where you support multiple customers with different AWS accounts, we recommend using one external ID per AWS account. This ID should be a random string generated by the third party.

It is recommended to use an external ID as a condition when giving access to a third party. It prevents the so-called "confused deputy" problem:

The primary function of the external ID is to address and prevent the "confused deputy" problem.

I won't go into details here, but you can read more about it in the AWS article "How to use an external ID when granting access to your AWS resources to a third party".
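To give an idea of what this looks like in practice, here is a minimal sketch of a trust policy with an external ID condition. The function names are hypothetical and the random-string helper is just one way to generate an ID; the condition key `sts:ExternalId` is the one IAM evaluates when the third party passes an external ID while assuming the role (for example via `--external-id` on `aws sts assume-role`).

```python
import secrets


def generate_external_id():
    """Generate a random external ID (32 hex characters), one per customer account."""
    return secrets.token_hex(16)


def third_party_trust_policy(third_party_account_id, external_id):
    """Trust policy that only allows AssumeRole when the external ID matches."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "sts:AssumeRole",
            "Principal": {"AWS": third_party_account_id},
            "Condition": {
                "StringEquals": {"sts:ExternalId": external_id},
            },
        }],
    }


if __name__ == "__main__":
    # 111111111111 stands in for the third party's account here
    print(third_party_trust_policy("111111111111", generate_external_id()))
```

Without the matching external ID, the AssumeRole call is denied even though the principal itself is trusted.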

Resource-Based Policy

The next possibility is a resource-based policy. Resource-based policies are attached directly to the resource itself. This solution is not available for all services.

Here is a list of services with the possibility of attaching resource-based policies to them.

Resource-based policies include a Principal element to specify which IAM identities can access that resource.

Scenario: Allowing any identity from source account (111111111111) read access to S3 bucket MyBucket in the target account (999999999999).

ℹ️ Resource-based policies for S3 buckets are also called bucket policies.

What do we need?

- bucket policy attached to MyBucket in the target account

- role in the source account with a policy to allow read access to MyBucket

What does it look like?

Policy allowing read access (in this case only s3:GetObject) on MyBucket (attached to the role in the source account):

{

"Effect": "Allow",

"Action": "s3:GetObject",

"Resource": "arn:aws:s3:::MyBucket/*"

}

Bucket policy allowing any identity from the source account read access (attached to MyBucket itself):

{

"Effect": "Allow",

"Action": "s3:GetObject",

"Resource": "arn:aws:s3:::MyBucket/*",

"Principal": {

"AWS": "111111111111"

}

}
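As a sketch of how the bucket policy could be built and attached from code: the two function names below are my own, and `attach_bucket_policy` assumes the client you pass in behaves like `boto3.client("s3")`, whose `put_bucket_policy` takes the policy as a JSON string.

```python
import json


def read_only_bucket_policy(bucket_name, source_account_id):
    """Build a bucket policy that lets every identity of an account read objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "s3:GetObject",
            # S3 ARNs carry neither a region nor an account ID
            "Resource": f"arn:aws:s3:::{bucket_name}/*",
            "Principal": {"AWS": source_account_id},
        }],
    }


def attach_bucket_policy(s3_client, bucket_name, policy):
    """Attach the policy; s3_client should behave like boto3.client("s3")."""
    s3_client.put_bucket_policy(Bucket=bucket_name, Policy=json.dumps(policy))


# Usage, assuming boto3 is installed and you are logged in to 999999999999:
#
#   import boto3
#   policy = read_only_bucket_policy("MyBucket", "111111111111")
#   attach_bucket_policy(boto3.client("s3"), "MyBucket", policy)
```

Remember that this covers only the target side. The role in the source account still needs its own policy allowing s3:GetObject on the bucket.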

Access-Control-List (ACL)

The third option is to use ACLs. ACLs are only applicable for S3 buckets. They can be used on bucket level (bucket ACLs) or on individual object level (object ACLs). (Don't confuse these ACLs with Network ACLs (NACLs).)

But ACLs support only a finite set of permissions, and these don't include all Amazon S3 permissions.

ACLs are a possibility to add cross-account access but they can't specify concrete actions (like s3:GetObject).

You can define ACLs under the Permissions tab by clicking on Edit in the Access control list (ACL) section. This is what it looks like:

As you can see, you can grant permissions on a rather high level like Read and Write. You can add multiple grantees by clicking on Add grantee.

ℹ️ Be careful with the Everyone and Authenticated users group settings because they open access to the whole world.

But there is a special case in which you need an ACL:

An object ACL is the only way to manage access to objects that are not owned by the bucket owner. [...] The AWS account that created the object must grant permissions using object ACLs.

Yes, you read that right: the owner of a bucket isn't necessarily the owner of the objects in it. The identity uploading an object is also its owner. A bucket owner cannot grant permissions on objects it does not own.
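One way the uploading account can hand over control is to set an object ACL at upload time. The following is only a sketch under assumptions: the function name is made up, the client is expected to behave like `boto3.client("s3")`, and it uses the canned ACL `bucket-owner-full-control`, which this article doesn't cover in detail.

```python
def upload_for_bucket_owner(s3_client, bucket_name, key, body):
    """Upload an object and grant the bucket owner full control over it.

    s3_client should behave like boto3.client("s3").
    """
    return s3_client.put_object(
        Bucket=bucket_name,
        Key=key,
        Body=body,
        ACL="bucket-owner-full-control",  # canned object ACL
    )


# Usage, assuming boto3 is installed and you upload from another account:
#
#   import boto3
#   upload_for_bucket_owner(boto3.client("s3"), "MyBucket", "report.csv", b"a,b\n")
```

The object still belongs to the uploader, but the bucket owner can now read and manage it.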

When to Use What?

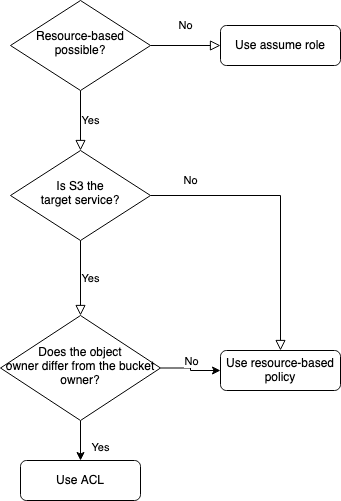

I pointed out three possibilities and now the question is "When should I use which solution?".

First of all, not all solutions are possible for all services. ACLs are only suitable for S3, and resource-based policies are available only for a subset of services.

The resource-based policy was the easiest one to set up. ACLs only allow setting higher-level permissions, but they have a special use case regarding the object owner.

This is my high-level decision tree:

Please note that more advanced use cases, like distinct permissions at object level, are not covered here.
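The same reasoning can be written down as a few lines of code. Treat this as one possible reading of the statements in this section, not as a definitive rule set: the function name, its parameters, and the returned labels are all assumptions made for illustration.

```python
def choose_access_method(service, supports_resource_policy,
                         objects_owned_by_other_account=False):
    """Pick a cross-account access method from the three options in this article.

    service: lowercase service name, e.g. "s3"
    supports_resource_policy: whether the service offers resource-based policies
    objects_owned_by_other_account: S3 only, objects not owned by the bucket owner
    """
    # Special case: only an object ACL can grant access to foreign-owned objects
    if service == "s3" and objects_owned_by_other_account:
        return "acl"
    # Resource-based policies were the easiest option, where available
    if supports_resource_policy:
        return "resource-based policy"
    # Assuming a role works for all services
    return "assume role"


if __name__ == "__main__":
    print(choose_access_method("s3", supports_resource_policy=True))
```

Whether a service supports resource-based policies is something you have to look up in the AWS docs; the function deliberately takes it as an input instead of guessing.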

There is an article in the AWS S3 docs named Access Policy Alternatives, which is about the three possibilities explained in this article.

Resources

These resources are helpful:

How IAM roles differ from resource-based policies

Actions, resources, and condition keys for AWS services

AWS re:Inforce 2019: The Fundamentals of AWS Cloud Security (FND209-R) on YouTube
