Summary

Back in September 2020, Forrest Brazeal from Cloud Guru posted a new #CloudGuruChallenge: build an event-driven ETL (Extract, Transform, Load) application.

Challenge Details can be found here.

Background

Before going into the details of the #CloudGuruChallenge, I would like to mention that this was only my second attempt at creating an application using AWS. When the new challenge was announced, I had just started a two-week staycation where my main goal was to create a "Japanese Word of the Day" Twitter bot using Python.

I had a very basic POC that worked locally on my machine, but I wanted to move it to the cloud. Doing so would give me an opportunity to learn something new and get more practice with Python, and I would no longer have to leave the application running on my local machine. However, moving the app to the cloud also presented some new challenges:

- What services should I use?

- Should I run the app on an EC2 instance?

- How would I store and update the data?

- How should I store sensitive data like the auth-keys/secrets?

In all, the application included:

- Lambda Function #1: posts a new word to the Twitter account

- Lambda Function #2: adds new words to the database

- CloudWatch Event #1: invokes Lambda Function #1 once a day

- CloudWatch Event #2: invokes Lambda Function #2 when a JSON file is uploaded to S3

- S3 Bucket: holds a JSON file consisting of new Japanese words

- DynamoDB: stores a list of Japanese words which Lambda Function #1 queries when posting a new word to Twitter

- AWS Systems Manager Parameter Store to hold the auth-keys/secrets

- AWS SAM CLI for building and deploying the app
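The post doesn't show how the bot read its auth-keys/secrets from Parameter Store. Here is a minimal sketch that assumes the boto3 SSM client and SecureString parameters. The parameter name in the comment is hypothetical, and the client is passed in so the function can be exercised with a stand-in object.

```python
from typing import Any, Dict


def get_secret(ssm_client: Any, name: str) -> str:
    """Read one parameter from Parameter Store and return its plaintext value."""
    # WithDecryption=True asks Parameter Store to decrypt SecureString values
    response: Dict[str, Any] = ssm_client.get_parameter(Name=name, WithDecryption=True)
    return response["Parameter"]["Value"]


# Inside the Lambda function the client would come from boto3, e.g.:
#   import boto3
#   ssm = boto3.client("ssm")
#   api_key = get_secret(ssm, "/twitter-bot/api-key")  # hypothetical parameter name
```

Reading the secrets at runtime keeps them out of the code and out of template.yaml.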

I mention this first because that project gave me the confidence that I could actually complete the new challenge.

The repository for the Twitter bot project can be found here.

Cloud Guru Challenge – Details

The challenge was to create a Python compute job that would run once a day, extract a set of COVID-19 data, and display the information on a dashboard.

AWS Resources

All resources (Lambda, Events, S3, DynamoDB, AWS Glue, SNS) were defined in the template.yaml file. That sounded easy at first, but it was actually very difficult for me to fully understand. Just when I thought I had it right (with sam build giving the green light), something would go wrong when I attempted to deploy the app.

Sometimes the issue was improper syntax; other times I wasn't referencing the correct name or resource. Overall, it was really cool and exciting to be able to spin up AWS resources without having to use the console.

Note: Don't forget to set the four PublicAccessBlockConfiguration properties (BlockPublicAcls, BlockPublicPolicy, IgnorePublicAcls, RestrictPublicBuckets) to true to prevent public access to your S3 resources.
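In this project the setting lives in template.yaml. For a bucket that already exists, boto3 exposes the same four flags through put_public_access_block. Below is a minimal sketch that assumes a boto3 S3 client is passed in. The bucket name is up to you.

```python
from typing import Any

# All four flags set to True: public ACLs are rejected and ignored,
# and public bucket policies are blocked and restricted.
PUBLIC_ACCESS_BLOCK = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": True,
    "RestrictPublicBuckets": True,
}


def block_public_access(s3_client: Any, bucket: str) -> None:
    """Apply the public access block settings to an existing bucket."""
    s3_client.put_public_access_block(
        Bucket=bucket,
        PublicAccessBlockConfiguration=PUBLIC_ACCESS_BLOCK,
    )
```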


Data Extraction/Filtering/Validation

In another first for me, I decided to use Python Pandas to extract, filter, and transform the raw CSV data. I had heard of Pandas before but had never used it myself, nor did I have any real insight into how powerful the module is.

I used Pandas to extract the raw data from the CSV files and to perform some preliminary validation. I checked whether the raw data included the expected column names as well as the expected data types (isnull(), map(type).all() == str, etc.). My thinking was that the app should stop, rather than proceed, if the raw data itself didn't contain the expected data.
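Here is a minimal sketch of this kind of fail-fast validation. The column names are hypothetical because the post doesn't list the real datasets' columns. The type check tests each value with isinstance before .all() reduces the result. Note that calling .all() first, as in map(type).all() == str, would compare a single boolean to str, and that comparison is always False.

```python
import io

import pandas as pd

# Hypothetical column names: the real datasets' columns are not listed in the post.
EXPECTED_COLUMNS = ["date", "cases", "deaths"]


def validate_raw(df: pd.DataFrame) -> None:
    """Raise ValueError so the job stops instead of loading unexpected data."""
    missing = [col for col in EXPECTED_COLUMNS if col not in df.columns]
    if missing:
        raise ValueError(f"missing columns: {missing}")
    # Any null in a required column fails the whole run
    if df[EXPECTED_COLUMNS].isnull().any().any():
        raise ValueError("null values found in required columns")
    # Check each value's type first, then reduce with .all()
    if not df["date"].map(lambda v: isinstance(v, str)).all():
        raise ValueError("'date' column contains non-string values")


raw_csv = "date,cases,deaths\n2020-01-21,1,0\n2020-01-22,1,0\n"
df = pd.read_csv(io.StringIO(raw_csv))
validate_raw(df)  # passes: columns present, no nulls, dates read as strings
```

Raising an exception rather than returning a flag means a Lambda invocation fails visibly, and nothing downstream runs.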

My biggest concern about using the Pandas module was its sheer size: it made up the bulk of the application's size. AWS provides Lambda layers, and I tried to use one to decrease the size and deploy time, but I wasn't able to figure it out. Considering application size, as well as possible changes from AWS or Python, I think it might be worth creating one's own Lambda layer of modules. My thinking is that if there are any breaking changes or updates (to the AWS SDK, the module, or Python), the application should still be able to run using the versions packaged in the layer.
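A Python Lambda layer is essentially a zip file whose packages sit under a top-level python/ folder. Lambda extracts layers to /opt, and /opt/python is on the import path for Python runtimes. Here is a minimal sketch that zips a directory of already-installed packages into that layout. The directory and file names are hypothetical.

```python
import os
import zipfile


def build_layer_zip(site_packages_dir: str, out_path: str) -> None:
    """Zip installed packages under the python/ prefix used by Python layers."""
    with zipfile.ZipFile(out_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(site_packages_dir):
            for name in files:
                full = os.path.join(root, name)
                rel = os.path.relpath(full, site_packages_dir)
                # After extraction this file lands at /opt/python/<rel>
                zf.write(full, os.path.join("python", rel))
```

For example, after pip install --target build pandas, calling build_layer_zip("build", "pandas-layer.zip") produces an archive that can be published as a layer. Pandas ships compiled extensions, so the packages need to be installed for the same OS, architecture, and Python version as the Lambda runtime.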


Data Transformation

The final data transformation logic is contained in a separate Python module (transformdata.py), where the two datasets are merged into one final dataset.
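The post doesn't show what transformdata.py contains. Here is a minimal sketch of the merge step with Pandas. It assumes both datasets share a date column, uses hypothetical cases and recovered columns, and uses an inner join so that only dates present in both sets survive.

```python
import pandas as pd


def merge_datasets(cases: pd.DataFrame, recovered: pd.DataFrame) -> pd.DataFrame:
    """Join two daily datasets on their shared 'date' column (hypothetical schema)."""
    # Normalize the join key so string dates on both sides compare as datetimes
    cases = cases.assign(date=pd.to_datetime(cases["date"]))
    recovered = recovered.assign(date=pd.to_datetime(recovered["date"]))
    # Inner join: dates missing from either dataset are dropped
    merged = cases.merge(recovered, on="date", how="inner")
    return merged.sort_values("date").reset_index(drop=True)
```

Converting both keys with pd.to_datetime avoids silent mismatches when one source formats its dates differently from the other.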

Uploading The Data

Because I had "some" experience using DynamoDB with my Twitter bot, I thought using the same database resource for this challenge would be a piece of cake. However, I should have investigated AWS QuickSight more. If I had, I would have learned that QuickSight doesn't yet support DynamoDB as a data source. As a result, my application effectively has two data stores: DynamoDB and S3.

DynamoDB

If the DynamoDB table has 0 items, then the whole final dataset is uploaded. Otherwise, I query the database (with dates sorted in descending order) and return only one record, which should be the most recent date. I then use Pandas to filter the final dataset by that date so that only items with a later date are uploaded to the database. This avoids having to upload everything to the database each day.
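In DynamoDB, the "latest date" lookup is typically a Query with ScanIndexForward=False and Limit=1. That returns dates newest first only if the date is the table's sort key, which is an assumption because the post doesn't describe the key schema. Here is a minimal sketch of the Pandas filter that follows. Passing None stands for an empty table.

```python
from typing import Optional

import pandas as pd


def rows_to_upload(final: pd.DataFrame, latest_in_db: Optional[str]) -> pd.DataFrame:
    """Return only rows newer than the most recent date already stored."""
    if latest_in_db is None:
        # Empty table: the whole final dataset is uploaded
        return final
    cutoff = pd.Timestamp(latest_in_db)
    newer = pd.to_datetime(final["date"]) > cutoff
    return final[newer]
```

The strict > comparison skips the stored date itself. As a result, a correction to the most recent day's counts would not reach DynamoDB.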

S3

Since I use S3 to feed the QuickSight dashboard, I simply upload the entire dataset to S3 as a CSV file. This also means I don't have to worry about older dates receiving updates to any of the counts (cases, deaths, recovered).
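Here is a minimal sketch of the full-overwrite upload. It assumes a boto3 S3 client is passed in. The bucket and key are hypothetical.

```python
from typing import Any

import pandas as pd


def upload_full_dataset(df: pd.DataFrame, s3_client: Any, bucket: str, key: str) -> None:
    """Overwrite the CSV object the dashboard reads, so revised older counts are included."""
    body = df.to_csv(index=False).encode("utf-8")
    s3_client.put_object(Bucket=bucket, Key=key, Body=body, ContentType="text/csv")
```

Writing to the same key every day replaces the previous file, so the dashboard always reads the latest complete dataset.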

Resources:

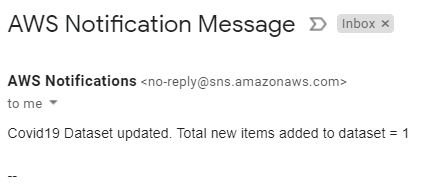

Notification

The notifications I send aren't the most user-friendly or the prettiest to look at, but they do tell the user how many items were added to the database.
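The post doesn't show the notification code. Here is a minimal sketch that assumes an SNS topic and a boto3 SNS client passed in. The topic ARN and message wording are hypothetical.

```python
from typing import Any


def notify(sns_client: Any, topic_arn: str, items_added: int) -> str:
    """Publish a short run summary to an SNS topic and return the message text."""
    message = f"COVID-19 ETL job finished: {items_added} new item(s) added to DynamoDB."
    sns_client.publish(TopicArn=topic_arn, Subject="COVID-19 ETL update", Message=message)
    return message
```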

Tests

I have six tests, each covering several scenarios, that verify both the raw data and the transformed data to make sure the expected outcome occurs.
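One way to give a single test several scenarios is unittest's subTest, which reports each failing scenario separately. Here is a minimal sketch with a hypothetical column check. It is not one of the project's actual six tests.

```python
import unittest

import pandas as pd

# Hypothetical required columns, for illustration only.
EXPECTED_COLUMNS = ["date", "cases", "deaths"]


def has_expected_columns(df: pd.DataFrame) -> bool:
    return set(EXPECTED_COLUMNS).issubset(df.columns)


class RawDataTests(unittest.TestCase):
    def test_expected_columns(self):
        scenarios = {
            "all columns present": (["date", "cases", "deaths"], True),
            "extra column is fine": (["date", "cases", "deaths", "fips"], True),
            "missing deaths": (["date", "cases"], False),
        }
        for name, (columns, expected) in scenarios.items():
            # Each scenario is reported on its own if it fails
            with self.subTest(name):
                self.assertEqual(has_expected_columns(pd.DataFrame(columns=columns)), expected)
```

Running python -m unittest (or pytest) from the project directory picks up test classes like this one.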


Dashboard

When I found out that QuickSight doesn't support DynamoDB, I had to quickly create an S3 resource. Once that was done, I was able to connect QuickSight to the S3 dataset and put together a chart showing the number of cases vs. recovered vs. deaths over time. I have to say that, despite the name, QuickSight wasn't very quick for me to pick up. The user interface didn't seem very intuitive, but I say that with a grain of salt since this was my first experience using any sort of Business Intelligence (BI) service.
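When QuickSight reads from S3, it takes a JSON manifest that lists the file locations and describes their format. The post doesn't show how the S3 dataset was connected. Here is a minimal sketch that generates such a manifest for a CSV file with a header row. The S3 URI is hypothetical.

```python
import json
from typing import List


def quicksight_manifest(uris: List[str]) -> str:
    """Build a QuickSight S3 manifest for comma-separated CSV files with a header row."""
    manifest = {
        "fileLocations": [{"URIs": uris}],
        "globalUploadSettings": {
            "format": "CSV",
            "delimiter": ",",
            "textqualifier": "\"",
            "containsHeader": "true",
        },
    }
    return json.dumps(manifest, indent=2)


print(quicksight_manifest(["s3://my-bucket/covid/final.csv"]))  # hypothetical URI
```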

Repository

The code can be found here.

Looking Back

First and foremost, I want to thank Forrest and everyone at Cloud Guru for creating these challenges, as this was, without a doubt, an amazing learning adventure. I'd also like to thank my employer for (1) paying for a three-day AWS training camp in December 2019 and (2) giving us access to the resources on Cloud Guru itself.

In my current position, my direct interaction with AWS is limited to the console, mostly for troubleshooting. Being able to actually create resources and deploy an application using them is an amazing feeling. I can't stress enough what an amazing learning opportunity these #CloudGuruChallenges are.

Moving forward, I want to continue exploring:

- Lambda layers

- CI/CD

- Further usage and insight into AWS QuickSight

- More usage of Python Pandas and Unit Tests

- Complete the previous Cloud Resume challenge

- Study for the AWS Certified Cloud Practitioner exam

- Planning better before jumping into code
