Sometimes we observe a downtime of popular websites because of a cloud provider's outage. The fact that we use a cloud doesn't automatically guarantee high availability of our application - there is a shared responsibility between cloud providers and its clients. Let's learn how we can use the power of cloud to make sure that our Azure resources are high available.

How much money can the company lose due to the outage?

Are you wondering how much can the outage cost? For the most popular services, the loss of money is likely to be mind-boggling - for example, YouTube was down for 37 minutes in 2020 and it resulted in losses of $1.7 million [1]. In 2013 Amazon.com had a downtime and each minute of the outage costed them about $66,240 [2]. These enormous numbers show that high availability is an important topic and can have a critical impact on the company's finances, so let's delve into it.

How to protect our application from downtime?

Imagine that we have a business-critical application hosted on virtual machine. There are several ways how we can reduce the risk of outage.

No protection

The starting point is to have a single virtual machine. Users can access the application by sending requests directly to HTTP/S port of the VM and in this way interact with our application.

As long as the virtual machine works correctly our application is available. This is not a recommend solution because each downtime of our single VM will result in situation where our users won't be able to access the application. Let's see how we can improve it.

Isolated hardware redundancy

To help us protect the virtual machines, Azure introduced special entities called fault and update domains. Let's take a look at them:

- fault domain - it is a set of virtual machines that use the same power and network infrastructure. When there will be a power, network or hardware issue, all virtual machines in that domain will be affected.

- update domain - it is a set of virtual machines that can be updated and rebooted at the same time. Azure ensures that two virtual machines that are in a separate update domain will not be affected at the same time, when maintenance activities will be required.

As a result, we can use the power of the fault and update domains and deploy two virtual machines - each in a separate domain. It can be done by using an availability set:

All you need to do is to create two virtual machines and during the creation add them to the availability set. After it you can check that the virtual machines are placed in a different update and fault domain:

There is also one important fact - we need a special service that will be responsible for balance the traffic to our virtual machines. We can use Azure Load Balancer for it and configure backend pool to contain our virtual machines. The architecture of that solution is the following:

This solution helps us to provide higher availability, but it has its limits. For example, when the whole data center will be down, our application still will be impacted. For that scenario, we will need more protection.

Availability zone redundancy

It is good to know that Azure has in almost every region three separate data centers. What is more, there is a rule that each of the data centers should be in a distance of at least 16 km. Thanks to it, when some natural disaster occurs, it is more likely that not all the data centers will be impacted. During the creation of the VM we can select the availability zone and add virtual machines to a separate zone. We still need a load balancer to handle the traffic and it can be configured for our virtual machines:

Thanks to it, when one of the data center will be down, load balancer will send traffic to the second virtual machine in a different availability zone (AZ).

This solution can fulfill the most common availability requirements, but what if we need a higher protection?

Region redundancy

To achieve region redundancy, some more steps are required. It is important that currently there is no load balancer in general availability (GA) that supports sending traffic across several regions. For that scenario we need to use a different service. One of the options is Traffic Manager. We can configure Traffic Manager to send requests to our virtual machines placed in different regions:

During the creation of the Traffic Manager, we are required to select a routing method:

Let's take a look at some of these values:

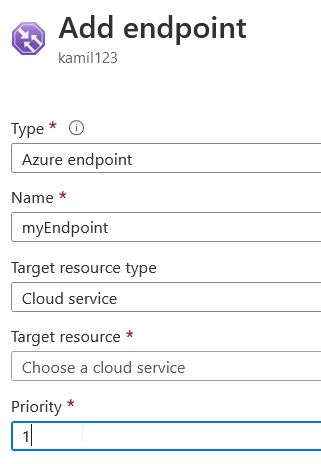

- priority - it is used to configure primary, secondary, and so on resources to which the traffic will be redirected. For example, we want to send users' requests to the first VM and only when the VM is unavailable the requests should be transferred to the second VM. In that case, the first VM should receive priority 1 and the second the priority 2:

- performance - it gives the opportunity to use not only the power of availability but also improve the performance. Your users will be redirected to resources which are the closest for them from the network latency point of view. It is determined by using the source IP address that is related to the recursive DNS service.

- weighted - you can distribute the traffic based on the weight. For example, when there is a need to balance the traffic equally between two VMs, you should assign the same weight (in our case the value is 50):

This routing method can also be used for blue-green deployments. Let's assume that we have an updated version of our application, and we want to provide it gradually to the users. We can configure that only 20% of our users will be able to use the new version - when it turns out that there are no issues related with the newly added functionality, we can make the latest version available for more users.

This routing method can also be used for blue-green deployments. Let's assume that we have an updated version of our application, and we want to provide it gradually to the users. We can configure that only 20% of our users will be able to use the new version - when it turns out that there are no issues related with the newly added functionality, we can make the latest version available for more users.

So far, we discussed having only two virtual machines. Let's be clear, in a real-life scenario we will usually use much more virtual machines - that brings us to a solution when we use both Traffic Manager and Load balancer with multiple virtual machines:

Availability with scalability

The above architecture is great when it comes to high availability, but from the scalability point of view it can be improved. Let's assume, that our application has a varied user traffic - at some point a vast number of users visit our application, but there are also periods of lower traffic. In that case, the best option is to use virtual machine scale sets (VMSS). VMSS is responsible for horizontal scaling - when the traffic is huge, it can automatically deploy new virtual machines, and when the traffic is decreased, number of virtual machines can be reduced. So the final solution can look like this:

Availability is a huge topic

In this post I tried to focus only on virtual machines and load balancer/traffic manager. It is good to remember that this topic is very wide and there are some other important challenges:

- availability of data (CosmosDB, Azure SQL Database, storage accounts, etc.)

- balance the traffic (Application Gateway, Azure Front Door, etc.)

- data backup and recovery (Azure Site Recovery, Azure Backup, etc.)

- more and more...

As you can see, there are a lot of areas to learn about availability - you can treat this post as a small introduction and I hope that it will encourage you to explore this topic deeper.

References:

[1] https://www.foxbusiness.com/technology/google-lost-ad-revenue-during-youtube-outage-expert

[2] https://www.forbes.com/sites/kellyclay/2013/08/19/amazon-com-goes-down-loses-66240-per-minute/?sh=5ebfbeae495c

You can also read this post on my official blog: https://blog.kamilbugno.com/

Top comments (0)