In the previous article, I explained how to read data from ADX (Azure Data Explorer) in Azure Databricks.

Some of you may worry about pasting a secret directly into a notebook, which is a valid concern. In this article, I explain how to use Azure KeyVault to store the secret securely and read it from the notebook instead.

Prerequisites

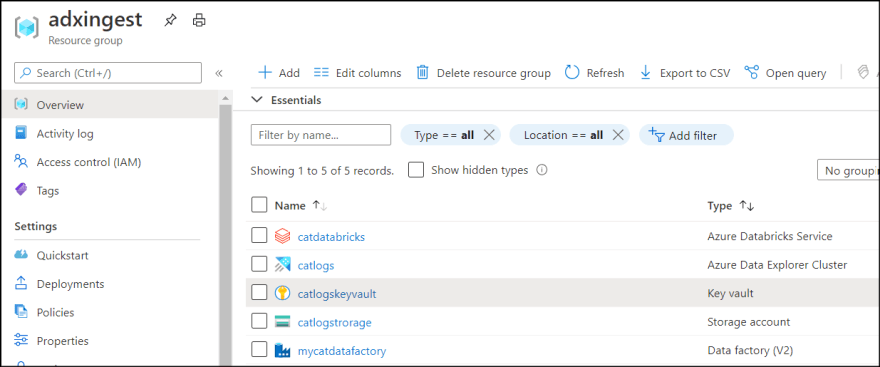

Create an Azure KeyVault resource.

Store Secret in KeyVault

Move the secrets into KeyVault. I store the client ID in KeyVault too.

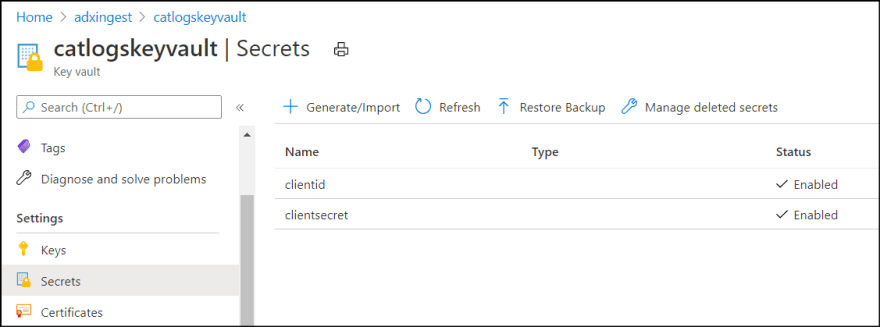

1. Open the KeyVault resource in the Azure Portal and select Secrets.

2. Add the "clientid" and "clientsecret" secrets, pasting the values from the notebook you created in the previous article.
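If you would rather script this step than click through the portal, the same two secrets can probably be written with the Azure SDK for Python. The sketch below is only an illustration: it assumes the `azure-identity` and `azure-keyvault-secrets` packages are installed, and the `store_adx_secrets` helper and the vault URL placeholder are hypothetical names that do not come from the previous article.

```python
def store_adx_secrets(client, client_id, client_secret):
    """Write the two secrets used in this article into KeyVault.

    `client` is expected to behave like azure.keyvault.secrets.SecretClient,
    that is, to expose set_secret(name, value).
    """
    values = {"clientid": client_id, "clientsecret": client_secret}
    for name, value in values.items():
        client.set_secret(name, value)
    # Return the secret names that were written, for a quick sanity check.
    return sorted(values)


# Assumed wiring with the real SDK
# (needs `pip install azure-identity azure-keyvault-secrets`):
#
#   from azure.identity import DefaultAzureCredential
#   from azure.keyvault.secrets import SecretClient
#
#   client = SecretClient(
#       vault_url="https://<your-vault-name>.vault.azure.net/",  # placeholder
#       credential=DefaultAzureCredential(),
#   )
#   store_adx_secrets(client, "<client id>", "<client secret>")
```

The helper takes the client as a parameter so that the secret names stay in one place and the function can be tried with a stand-in object before pointing it at a real vault.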

Create Secret Store in Databricks

Next, set up the secret store (a secret scope backed by KeyVault) for Databricks.

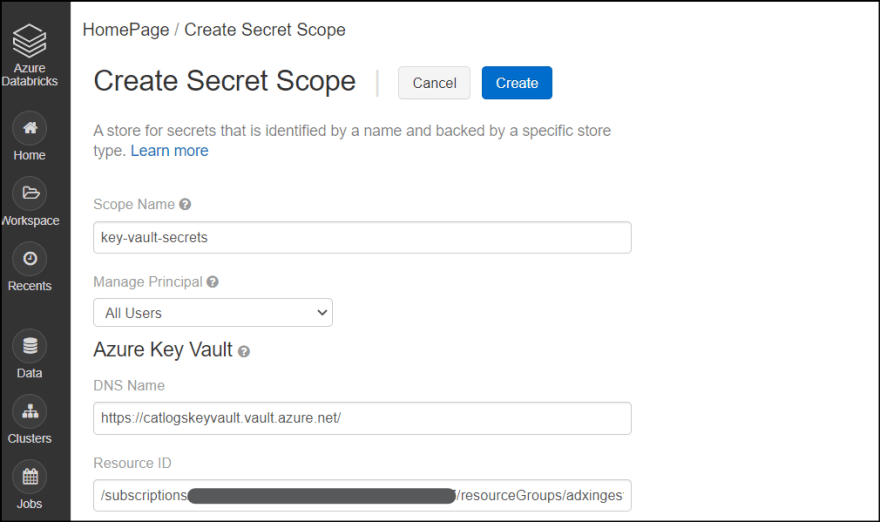

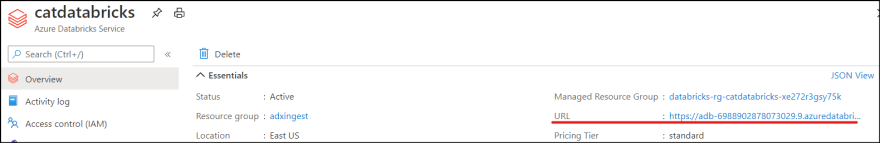

1. Go to https://your_databricks_cluster_address#secrets/createScope. You can find your URL in the Databricks overview pane in the Azure Portal.

2. Enter the following information.

- Name: This becomes the scope name

- Manage Principal: All Users

- DNS Name: Use the "Vault URI" shown on the Azure KeyVault resource in the Azure Portal

- Resource ID: You can get this from the URL in your browser when you open the KeyVault resource. Copy the part of the address that starts at /subscriptions and ends with the vault name

If you want to restrict Manage Principal to the creator only, you need to use the Premium tier.

3. Click "Create" to complete.
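Copying the Resource ID out of the browser address by hand is easy to get wrong, so here is a minimal sketch of how it could be extracted. It assumes the portal URL contains the usual `/subscriptions/.../resourceGroups/.../providers/Microsoft.KeyVault/vaults/...` path; the function name `key_vault_resource_id` and the sample values in the usage note are made up for illustration.

```python
import re

# Pattern for the resource path of a KeyVault inside a longer URL.
_RESOURCE_ID_PATTERN = re.compile(
    r"/subscriptions/[^/]+/resourceGroups/[^/]+"
    r"/providers/Microsoft\.KeyVault/vaults/[^/?#]+",
    flags=re.IGNORECASE,
)


def key_vault_resource_id(portal_url):
    """Return the KeyVault resource ID embedded in an Azure Portal URL."""
    match = _RESOURCE_ID_PATTERN.search(portal_url)
    if match is None:
        raise ValueError("No KeyVault resource ID found in the given URL")
    return match.group(0)
```

For example, given a hypothetical address ending in `/resource/subscriptions/<subscription id>/resourceGroups/my-rg/providers/Microsoft.KeyVault/vaults/my-vault/overview`, the function returns everything from `/subscriptions` up to and including `my-vault`, which is the value to paste into the Resource ID field.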

Once the secret store is created, use the Databricks CLI to confirm it.

1. Install Python 3.6+ or 2.7.9+ on your computer.

2. Install the CLI with pip.

pip install databricks-cli

3. Run the configuration command.

databricks configure --token

4. It asks for the URL of your Azure Databricks workspace. You can find it in the Azure Portal.

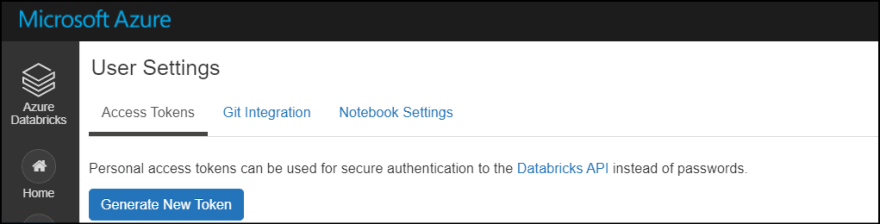

5. Then it asks for an access token. You can get one from the Azure Databricks portal. Select the user icon in the top-right corner.

6. Click "Generate New Token" to obtain a token.

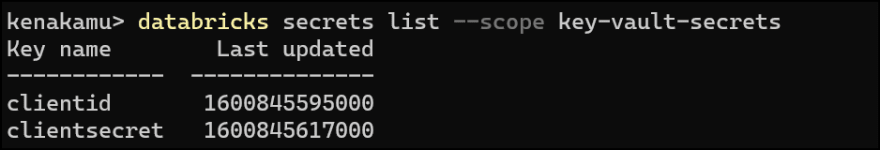

7. List the secrets in the scope you created (named key-vault-secrets here). You should see the two secrets.

databricks secrets list --scope key-vault-secrets
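The same check can also be made without the CLI by calling the Databricks Secrets REST API, which the CLI wraps. The following is a rough sketch using only the Python standard library; it assumes the `GET /api/2.0/secrets/list` endpoint with a `scope` query parameter and a bearer token, and the function names and the workspace URL in the usage note are placeholders of my own.

```python
import json
import urllib.parse
import urllib.request


def build_list_secrets_request(host, token, scope):
    """Build the HTTP request that lists the secret keys in a scope."""
    query = urllib.parse.urlencode({"scope": scope})
    url = f"{host.rstrip('/')}/api/2.0/secrets/list?{query}"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def list_secret_keys(host, token, scope):
    """Send the request and return only the secret key names."""
    request = build_list_secrets_request(host, token, scope)
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    # Only key names and metadata are expected here, not the secret values.
    return [item["key"] for item in payload.get("secrets", [])]
```

Calling `list_secret_keys("https://<your workspace URL>", "<access token>", "key-vault-secrets")` with the URL and token from the steps above should return the names of the two secrets if everything is set up correctly.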

Use secrets in notebook

Finally, update the existing notebook to use the secrets.

1. Go to your notebooks and open the one you created in the previous article.

2. Replace the code where you define client_id and client_secret. Use dbutils to get the values from the secret store.

client_id = dbutils.secrets.get(scope = "key-vault-secrets", key = "clientid")

client_secret = dbutils.secrets.get(scope = "key-vault-secrets", key = "clientsecret")

3. Run the notebook to confirm it works as expected.
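If several notebooks need the same credentials, the two lookups could be wrapped in a small helper. This is just one possible sketch, not part of the original notebook: `load_adx_credentials` is a hypothetical name, and it takes `dbutils.secrets` as an argument because `dbutils` is only defined inside Databricks.

```python
def load_adx_credentials(secrets, scope="key-vault-secrets"):
    """Read the client ID and client secret from a Databricks secret scope.

    `secrets` is expected to be `dbutils.secrets` when run in a notebook.
    """
    credentials = {
        "client_id": secrets.get(scope=scope, key="clientid"),
        "client_secret": secrets.get(scope=scope, key="clientsecret"),
    }
    # Fail early with a clear message instead of a confusing auth error later.
    missing = [name for name, value in credentials.items() if not value]
    if missing:
        raise ValueError(f"Empty secret value(s): {', '.join(missing)}")
    return credentials


# In a notebook:
#   creds = load_adx_credentials(dbutils.secrets)
#   client_id, client_secret = creds["client_id"], creds["client_secret"]
```

Passing the secrets object in, rather than reaching for the global `dbutils`, also makes it possible to try the helper outside Databricks with a stand-in object.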

Summary

Azure KeyVault is a great place to store secrets, and you no longer have to worry about hardcoding values in your code. It also works as a central repository for keys, so you only need to update KeyVault whenever you regenerate a secret!
