Previously, I introduced how to shift from a monolith to CQRS in order to solve some problems. As the architecture grows, so does the maintenance effort, so it becomes necessary to split the monolith into several execution units to reduce development difficulty.

However, CQRS can be used for much more than that. In this article, I will introduce another pattern where CQRS can be applied. It is the approach I use most often to solve performance bottlenecks.

Many-to-One Data Model

As introduced previously, CQRS can convert domain objects into several read models to support extended functional requirements. I call this pattern a one-to-many transformation: from a single data source, various presentation models are derived according to business logic.
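To make the one-to-many idea concrete, here is a minimal sketch in which one domain object is projected into two different read models. The `Order` type, its fields, and both projection functions are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


# Hypothetical domain object; the field names are illustrative only.
@dataclass
class Order:
    order_id: str
    user_id: str
    items: list[tuple[str, int, float]]  # (sku, quantity, unit_price)


def to_order_summary(order: Order) -> dict:
    """Read model for a hypothetical order-history page."""
    total = sum(qty * price for _, qty, price in order.items)
    return {"order_id": order.order_id, "item_count": len(order.items), "total": round(total, 2)}


def to_sales_rows(order: Order) -> list[dict]:
    """Read model for a hypothetical per-SKU sales report."""
    return [{"sku": sku, "quantity": qty} for sku, qty, _ in order.items]
```

One source, `Order`, feeds both presentation models, and each projection can evolve independently without touching the domain object.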

Sometimes, however, we need a many-to-one transformation, and this usually results in performance bottlenecks.

Let's take an e-commerce website as an example. Suppose that opening the site's homepage calls only one API, which returns all the data the page needs.

/api/eshop/home

The purpose is to reduce the complexity of frontend development by having a single API for a single page, so that frontend developers can focus on rich presentation rather than data collection. This is common in small organizations and startups, where the goal is to ship publishable screens quickly and keep communication with the backend as simple as possible.

Continuing with our example, this homepage API needs order information, user information, recommendation lists, inventory categories, and so on. Whether this data comes from microservices or from different data stores, each source is under a different load, and the more heavily loaded sources respond with higher latency.

According to the above diagram, the order data source is the most heavily loaded, so its response time is the longest, up to one second. This is the "long-tail effect": even if some data sources respond quickly, the response time of the entire home API depends on the most heavily loaded data source, which in this example means one second.
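The long-tail effect can be reproduced with a small simulation. This sketch assumes the homepage API queries all sources concurrently with `asyncio.gather`; the source names and latencies are made up for illustration. Even with full concurrency, the total time tracks the slowest source rather than the fast ones.

```python
import asyncio
import time

# Hypothetical per-source latencies in seconds; the order source is the slowest,
# mirroring the heavily loaded order data in the example.
LATENCIES = {"orders": 0.3, "user": 0.1, "recommendations": 0.15, "categories": 0.1}


async def fetch(source: str) -> tuple[str, str]:
    # Stand-in for a call to a microservice or data store.
    await asyncio.sleep(LATENCIES[source])
    return source, f"<{source} data>"


async def home_api() -> dict:
    # Query every source concurrently; gather() completes only when the slowest call does.
    results = await asyncio.gather(*(fetch(s) for s in LATENCIES))
    return dict(results)


def timed_home_api() -> tuple[dict, float]:
    start = time.perf_counter()
    data = asyncio.run(home_api())
    return data, time.perf_counter() - start
```

Calling `timed_home_api()` takes roughly 0.3 seconds, the latency of `orders`, no matter how fast the other sources are.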

Start System Evolution

To solve the long-tail effect, we can pre-build the homepage data in an independent data source. In other words, the homepage API no longer spends time collecting the data it needs from everywhere; it obtains everything from a single place. Ideally, the data is already assembled.

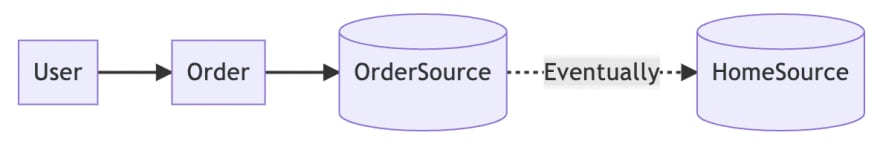

So the system will look like this.

This has additional benefits: it eliminates the time spent assembling and collating data, and reading from a single data source is efficient. Together, these can significantly reduce latency.
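On the read side, the homepage API then reduces to a single lookup. Below is a minimal sketch that assumes the pre-built store (called HomeSource later in this article) is modelled as an in-memory dict keyed by a hypothetical user ID; a real deployment would use an actual database.

```python
# Hypothetical pre-built read model ("HomeSource"), modelled as an in-memory dict
# keyed by user ID. Each entry is already assembled for the homepage.
home_source: dict[str, dict] = {
    "u-1": {
        "orders": [{"order_id": "o-1", "total": 21.5}],
        "user": {"name": "Alice"},
        "recommendations": ["sku-42", "sku-7"],
        "categories": ["books", "stationery"],
    }
}


def home_api(user_id: str) -> dict:
    # A single key lookup: no fan-out to domain services and no assembly step.
    return home_source.get(user_id, {})
```

The response time now depends on one data source only, so a slow order service no longer sets the latency of the homepage.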

However, one question remains: since this is a pre-built data source, who is responsible for updating it?

The most intuitive approach is to have whichever domain service the user is operating on update it. In the above example, when a user modifies order data, the order service modifies not only its original database but also the new one. The same applies to the other domain services.
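A minimal sketch of this synchronous dual write follows. The stores, the `slow_write` helper, and its 50 ms cost are hypothetical stand-ins; the point is that the order request now pays for two writes instead of one.

```python
import time

# Hypothetical stores: the order service's own database and the pre-built home data.
order_source: dict[str, dict] = {}
home_source: dict[str, dict] = {}


def slow_write(store: dict, key: str, value: dict, cost: float = 0.05) -> None:
    time.sleep(cost)  # stand-in for the cost of a database write on a busy system
    store[key] = value


def update_order(user_id: str, order: dict) -> None:
    # Synchronous dual write: the request finishes only after BOTH writes,
    # so the extra home-data write lands on the already busy order service.
    slow_write(order_source, order["order_id"], order)
    home = home_source.get(user_id, {"orders": []})
    slow_write(home_source, user_id, {**home, "orders": home["orders"] + [order]})
```

Each call to `update_order` takes at least two write costs, and every other domain service that feeds the homepage would need the same extra write.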

Remember the problem we set out to solve at the beginning? A busy system responds noticeably slowly.

Because the system is already busy, this change is like piling a new problem onto someone who is already overloaded. Database modifications are complex and time-consuming, so every extra write makes the busy service even slower.

Evolve into CQRS

Therefore, what we want is that, while users operate on the original domain service, something else synchronizes the new database in the background, with little extra effort from the domain service.

The final architecture looks similar to this. Essentially, it is the CQRS pattern.

As described in the previous article, there are three ways to achieve eventual consistency in CQRS, chosen according to how quickly the new database must be updated. They are listed below from fastest to slowest; the implementation details are beyond the scope of this article.

- Background thread

- Message queue plus workers

- ETL
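As an illustration of the second option, here is a minimal sketch of a message queue plus a worker. It assumes Python's in-process `queue.Queue` as a stand-in for a real message broker, and the store and function names are hypothetical. The order service writes only to its own store and publishes an event; a background worker projects events into the home data, so the read side is eventually consistent.

```python
import queue
import threading

# Hypothetical stores: the order service's own database and the pre-built home data.
order_source: dict[str, dict] = {}
home_source: dict[str, dict] = {}
# In-process queue standing in for a message broker; None is a stop sentinel.
events: "queue.Queue[tuple[str, dict] | None]" = queue.Queue()


def update_order(user_id: str, order: dict) -> None:
    # Command side: one write to the order store, then publish an event and return.
    order_source[order["order_id"]] = order
    events.put((user_id, order))


def home_projector() -> None:
    # Background worker: consume events and update the pre-built home data.
    while True:
        event = events.get()
        if event is None:  # sentinel used here to stop the worker
            events.task_done()
            break
        user_id, order = event
        home = home_source.setdefault(user_id, {"orders": []})
        home["orders"].append({"order_id": order["order_id"], "total": order["total"]})
        events.task_done()


worker = threading.Thread(target=home_projector, daemon=True)
worker.start()
```

`update_order` returns after a single write; `events.join()` blocks until the worker has processed everything published so far. Swapping the in-process queue for a real broker, and the thread for separate worker processes, keeps the same shape. A background thread in the same process, the first option, is essentially this sketch without the broker.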

With this, we have solved the original problem: the long-tail effect. We neither add much overhead to the original domain services nor introduce much coupling.

Conclusion

You may ask, "Why not just write all the domain objects to a new database, HomeSource, from the start, instead of having per-domain databases (e.g., OrderSource)?" A typical example of such a practice is Service-Oriented Architecture.

If we do this, the problem is no longer the long-tail effect but how to scale HomeSource horizontally. In the final architecture, most order functions are still served by the original data store, and only the data that needs to be presented on the homepage is transferred asynchronously to the new data store.

If the data storage of all domain services is unified, the new unified store will carry a heavier load than all the original domain stores combined. This demands more care and effort in database design and creates a lot of data-level coupling. This is the main reason why service-oriented architecture is becoming obsolete.

So far we have seen two scenarios of CQRS.

- Improving productivity for extended functional requirements.

- Solving performance bottlenecks.

In fact, CQRS only provides a methodology and does not limit the situations in which it can be applied. If you see a pattern around you that would be a good fit for a CQRS solution, please feel free to share it with me.
