Learn more about our open source project on Github

In today's data-driven world, organizations are constantly looking for ways to make their data analysis more efficient and streamlined. The recent surge in Large Language Model (LLM) technologies has changed analytics by enabling natural language interaction, which opens data analysis to people who don't write queries. DSensei is an open-source Slack bot that brings this technology to people by letting them request data analytics in plain English directly within their familiar instant messaging tool.

In this post, we'll walk through the steps to set up DSensei in Slack with BigQuery as its data source.

Prerequisites

- A Slack account with admin access to the workspace to install Dsensei

- An OpenAI API key (you can generate one from this link)

- A Google Cloud account with the `roles/iam.serviceAccountCreator` IAM role to create a service account for BigQuery (see this doc for details)

Setup

Now let's start the setup process.

Step 1. Create and Set Up the Slack App

First let's create a new Slack App and install it to the workspace:

- Sign in to your Slack account in a browser and navigate to https://api.slack.com/apps

- Click on the "Create New App" button to create a new app, and select "From an app manifest".

- Select the workspace you want to install the app into.

- In the "Enter app manifest below" dialog, set the format to YAML and paste the following manifest:

    display_information:
      name: sensei
    features:
      app_home:
        home_tab_enabled: false
        messages_tab_enabled: true
        messages_tab_read_only_enabled: false
      bot_user:
        display_name: sensei
        always_online: true
      slash_commands:
        - command: /info
          description: Get information about DB
          usage_hint: /info [dbs] | [tables db] | [schema db.table]
          should_escape: false
    oauth_config:
      scopes:
        bot:
          - app_mentions:read
          - chat:write
          - commands
          - im:history
          - files:write
          - files:read
    settings:
      event_subscriptions:
        bot_events:
          - app_mention
          - message.im
      interactivity:
        is_enabled: true
      org_deploy_enabled: false
      socket_mode_enabled: true
      token_rotation_enabled: false
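
The manifest pairs each subscribed bot event with the OAuth scope Slack requires for it: `app_mention` needs `app_mentions:read`, and `message.im` needs `im:history`. If you later edit the manifest, a quick consistency check can catch a missing scope. The Python sketch below is only an illustration: `REQUIRED_SCOPE` and `missing_scopes` are hypothetical names, not part of DSensei or any Slack SDK, and the table covers just the two events used here.

```python
# Illustrative check: every subscribed bot event has the scope it needs.
# REQUIRED_SCOPE and missing_scopes are hypothetical helpers for this post.

REQUIRED_SCOPE = {
    "app_mention": "app_mentions:read",  # the bot is @-mentioned in a channel
    "message.im": "im:history",          # a direct message is sent to the bot
}

# The relevant parts of the manifest above, written as a plain dict.
manifest = {
    "oauth_config": {
        "scopes": {
            "bot": [
                "app_mentions:read", "chat:write", "commands",
                "im:history", "files:write", "files:read",
            ]
        }
    },
    "settings": {
        "event_subscriptions": {"bot_events": ["app_mention", "message.im"]}
    },
}


def missing_scopes(manifest: dict) -> list[str]:
    """Return scopes required by subscribed events but not granted."""
    granted = set(manifest["oauth_config"]["scopes"]["bot"])
    events = manifest["settings"]["event_subscriptions"]["bot_events"]
    needed = [REQUIRED_SCOPE[e] for e in events if e in REQUIRED_SCOPE]
    return [scope for scope in needed if scope not in granted]


print(missing_scopes(manifest))  # prints [] for the manifest above
```

An empty list means every subscribed event has its scope; any scope printed would need to be added under `oauth_config.scopes.bot`.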

Step 2. Set Up BigQuery Credentials

Next, let's create a Google Cloud service account for DSensei to access BigQuery:

- Sign in to your Google Cloud console in a browser and navigate to https://console.cloud.google.com/iam-admin/serviceaccounts.

- Select the project you want DSensei to have access to; this should take you to the service account management page.

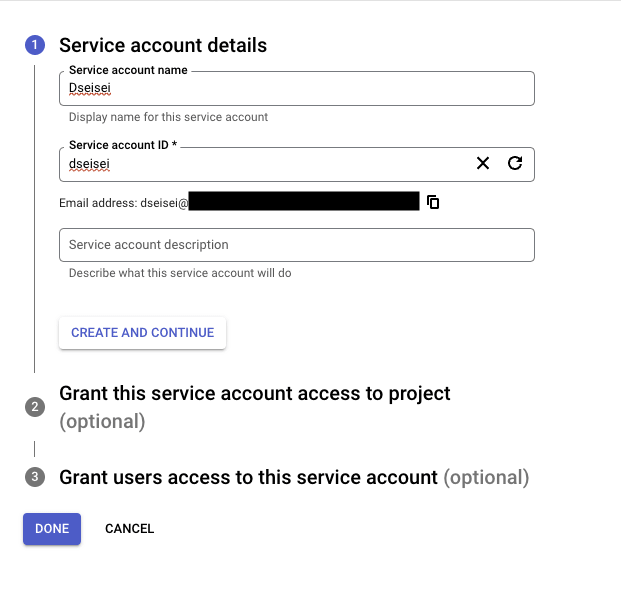

- Click "+Create service account".

- Enter "Dsensei" as the "Service account name" and "dsensei" as the "Service account ID". Optionally, add a description. Click "CREATE AND CONTINUE".

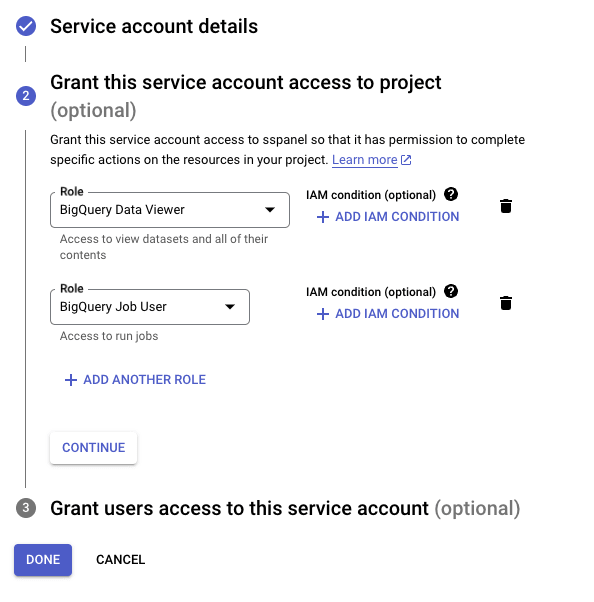

- Grant the "BigQuery Data Viewer" and "BigQuery Job User" roles to the service account and click "Done".

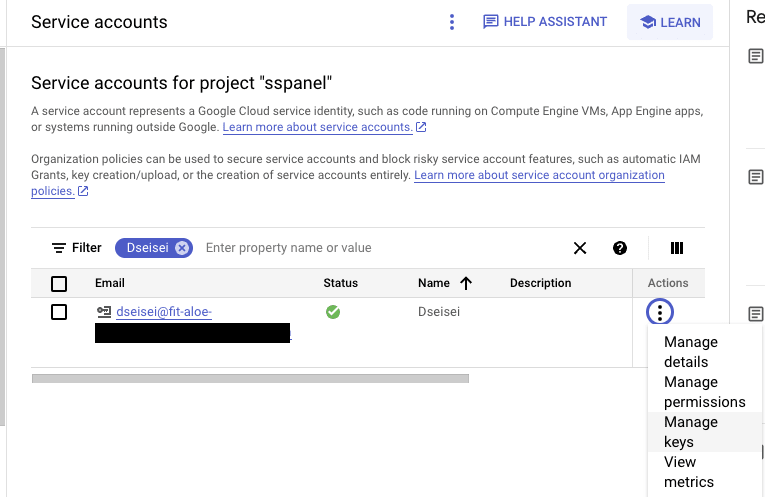

- Locate the newly created service account in the list, open the "Action" menu and click "Manage keys".

- In the "Keys" tab click "ADD KEY" -> "Create new key" to open the key creation dialog.

- Inside the key creation dialog, select the "JSON" key type and click "CREATE".

- Your browser should download the JSON key file. Save it to a safe location; we will use it later.
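
Before moving on, it can help to confirm that the downloaded file really is a service account key. The Python sketch below loads the JSON and checks a few fields that Google Cloud service account key files contain (`type`, `project_id`, `private_key`, `client_email`). The `check_key_file` helper is a hypothetical name used for illustration, it is not part of DSensei, and it does not verify that the key is valid or that the roles were granted.

```python
import json
from pathlib import Path

# Fields a Google Cloud service account JSON key file is expected to contain.
EXPECTED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_key_file(path: str) -> list[str]:
    """Return a list of problems found in the key file (empty if none)."""
    key_path = Path(path)
    if not key_path.is_file():
        return [f"key file not found: {path}"]
    try:
        data = json.loads(key_path.read_text())
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(data, dict):
        return ["key file does not contain a JSON object"]

    problems = [
        f"missing field: {name}" for name in EXPECTED_FIELDS if not data.get(name)
    ]
    # Service account keys declare their type explicitly.
    if data.get("type") and data["type"] != "service_account":
        problems.append(f"unexpected key type: {data['type']}")
    return problems


if __name__ == "__main__":
    # Example path only; replace it with wherever you saved the key.
    for problem in check_key_file("/Users/foo/gcp/dsensei.json"):
        print(problem)
```

No output means the file has the expected shape. Treat the key like a password and keep it out of version control.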

Step 3. Set Up the DSensei Service

Now that the Slack App and BigQuery access are set up, let's set up the DSensei service:

- Check out the DSensei repo: `git clone https://github.com/logunify/dsensei.git`

- Switch to Node 18. You can do that with `nvm use 18`; details about nvm installation and usage can be found in this doc.

- Inside the checked-out dsensei folder, run `npm install` to install all the dependencies.

- Configure credentials. We provide a config template; you can use it by renaming `.env.example` to `.env`:

  - Configure Slack credentials:

    - Go to https://api.slack.com/apps and select the app you just created.

    - On the sidebar, select "OAuth & Permissions" and find the OAuth token under the "OAuth Tokens for Your Workspace" section. It should start with `xoxb-`. Copy the token and put it under `SLACK_BOT_TOKEN` in the `.env` file.

    - Then select "Basic Information" on the sidebar and find the "Signing Secret" under the "App Credentials" section. Copy the secret and put it under `SLACK_SIGNING_SECRET` in the `.env` file.

    - Finally, locate the "App-Level Tokens" section under the "Basic Information" tab and click the "Generate Token and Scopes" button to generate an app token. Add the `connections:write` scope in the dialog and click "Generate". The Slack app token should start with `xapp-`. Copy the token and put it under `SLACK_APP_TOKEN` in the `.env` file.

  - Configure the OpenAI API key:

    - Find your OpenAI API key in this page, copy it, and put it under `OPENAI_API_KEY` in the `.env` file.

  - Configure the BigQuery key:

    - In the `.env` file, set `BQ_KEY` to the path of the key file we generated above for the service account, like `/Users/foo/gcp/dsensei.json`.

- [Optional] Whitelist datasets and tables.

  - You might want to limit the datasets / tables this tool can access. You can do so by listing the datasets as a comma-separated string in the `DATABASES` field, and / or listing tables as a comma-separated `dataset.tablename` list in the `TABLES` field.
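
With this many values to copy, a typo in `.env` is easy to make. The Python sketch below checks the settings described in the steps above: the required keys are set, the Slack tokens start with `xoxb-` and `xapp-`, and each `TABLES` entry has the `dataset.tablename` form. It is an illustration only: `parse_env` and `check_env` are hypothetical helpers, the parser handles simple `KEY=VALUE` lines rather than everything a real dotenv loader accepts, and the sample values are placeholders.

```python
REQUIRED_KEYS = (
    "SLACK_BOT_TOKEN", "SLACK_SIGNING_SECRET", "SLACK_APP_TOKEN",
    "OPENAI_API_KEY", "BQ_KEY",
)
REQUIRED_PREFIX = {"SLACK_BOT_TOKEN": "xoxb-", "SLACK_APP_TOKEN": "xapp-"}


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def check_env(values: dict[str, str]) -> list[str]:
    """Return a list of problems with the settings (empty if none)."""
    problems = [f"{key} is not set" for key in REQUIRED_KEYS if not values.get(key)]
    for key, prefix in REQUIRED_PREFIX.items():
        value = values.get(key, "")
        if value and not value.startswith(prefix):
            problems.append(f"{key} should start with {prefix}")
    # Optional whitelist: each TABLES entry should look like dataset.tablename.
    for entry in values.get("TABLES", "").split(","):
        entry = entry.strip()
        if entry and entry.count(".") != 1:
            problems.append(f"TABLES entry is not dataset.tablename: {entry}")
    return problems


# Placeholder values, shown only to illustrate the expected shape.
sample = """
SLACK_BOT_TOKEN=xoxb-placeholder
SLACK_SIGNING_SECRET=placeholder
SLACK_APP_TOKEN=xapp-placeholder
OPENAI_API_KEY=placeholder
BQ_KEY=/Users/foo/gcp/dsensei.json
DATABASES=ecommerce
TABLES=ecommerce.orders,ecommerce.products
"""
print(check_env(parse_env(sample)))  # prints [] when nothing is wrong
```

To check a real file, pass its contents instead of `sample`, for example `check_env(parse_env(open(".env").read()))`.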

Start DSensei and Verify in Slack!

That's it. We should now have everything set up, so let's start DSensei and verify it in Slack!

- [Optional] For demo purposes, we copy a public sample ecommerce dataset into our BigQuery project and name it `ecommerce`:

  - Go to this link and click "COPY"

  - Select "CREATE NEW DATASET"

  - Change the Project ID to the project in which we created the service account in Step 2

  - Click "CREATE DATASET"

- Run `npm run prod` to start the DSensei service. You should see DSensei initialize and load the schemas in the logs. In our example, it looks like this:

2023-04-26T23:10:00.526Z [SlackApp] info:

____ _____ _

/ __ \ / ___/ ___ ____ _____ ___ (_)

/ / / / \__ \ / _ \ / __ \ / ___/ / _ \ / /

/ /_/ / ___/ / / __/ / / / / (__ ) / __/ / /

/_____/ /____/ \___/ /_/ /_/ /____/ \___/ /_/

2023-04-26T23:10:00.529Z [DataSourceLoader] info: Use data source from BigQuery

[INFO] socket-mode:SocketModeClient:0 Going to establish a new connection to Slack ...

2023-04-26T23:10:00.782Z [SlackApp] info: Sensei is up running, listening on port 3000

2023-04-26T23:10:00.986Z [BigQuery] info: Loaded databases: ecommerce

[INFO] socket-mode:SocketModeClient:0 Now connected to Slack

2023-04-26T23:10:01.414Z [BigQuery] info: Loaded table: ecommerce.distribution_centers

2023-04-26T23:10:01.448Z [BigQuery] info: Loaded table: ecommerce.users

2023-04-26T23:10:01.452Z [BigQuery] info: Loaded table: ecommerce.events

2023-04-26T23:10:01.454Z [BigQuery] info: Loaded table: ecommerce.products

2023-04-26T23:10:01.456Z [BigQuery] info: Loaded table: ecommerce.inventory_items

2023-04-26T23:10:01.504Z [BigQuery] info: Loaded table: ecommerce.orders

2023-04-26T23:10:01.529Z [BigQuery] info: Loaded table: ecommerce.order_items

2023-04-26T23:10:01.530Z [BigQuery] info: All 1 databases are loaded.

2023-04-26T23:10:04.334Z [BigQuery] info: Enrichment finished.

-

Now is the fun part, let's play around with Dsensei in Slack:

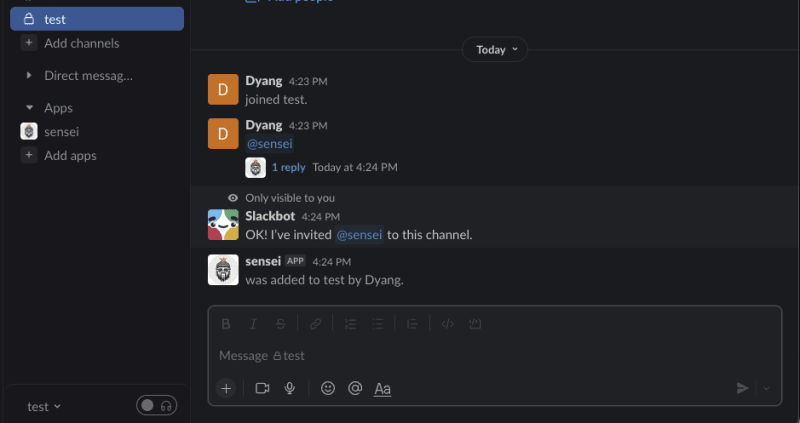

- Create a new channel and add

senseito the channel (you can also locate the "sensei" under the "Apps" section on the left and ping it directly):

  - We start with commands to verify that the schemas have been loaded. Send `/info dbs` to list all the databases; here's the response:

        Your databases:
        --------------------
        ecommerce

  - Send `/info tables ecommerce` to list all the tables under the `ecommerce` dataset; here's the response:

        Your tables in ecommerce:
        --------------------
        distribution_centers
        events
        inventory_items
        order_items
        orders
        products
        users

  - Send `/info schema ecommerce products` to get the detailed schema of the `products` table; here's the response:

        Table ecommerce.products schema:
        name                    type     description
        --------------------------------------------
        id                      INTEGER
        cost                    FLOAT
        category                STRING
        name                    STRING
        brand                   STRING
        retail_price            FLOAT
        department              STRING
        sku                     STRING
        distribution_center_id  INTEGER

  - After verifying that the schemas are loaded, we can test with some data questions. Say we want to know the number of orders this month. We can simply send "@sensei how many new orders do I have this month?" and DSensei will respond in a thread.

  - We can follow up with DSensei within the thread. For instance, now that we have the number of orders this month, we want to know how it compares with last month. DSensei remembers the context of the whole conversation within the thread, so we can ask the follow-up question directly by sending "@sensei how does it compare with last month?" in the thread and get the answer right there. Pretty cool, huh?

  - It can also answer more advanced questions that require more complex SQL queries. For instance, say we want to know which brands process their orders most efficiently, limited to brands with more than 100 orders to reduce bias. We can ask: "@sensei for those brands having more than 100 orders, give me the top 5 brands that fastest at shipping their orders?".
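
One way a bot can support follow-up questions like these is to store the message history under the thread's identifier and pass the whole history along with each new question. The Python sketch below shows the idea; it is not DSensei's actual implementation, and `ThreadMemory` and its methods are hypothetical names. It relies on one Slack detail: a reply inside a thread carries a `thread_ts` value equal to the parent message's `ts`, so the channel ID plus that timestamp identifies the thread.

```python
from collections import defaultdict


class ThreadMemory:
    """Keeps an ordered message history per Slack thread (hypothetical helper)."""

    def __init__(self) -> None:
        self._history = defaultdict(list)

    @staticmethod
    def thread_key(event: dict) -> tuple[str, str]:
        # A reply carries thread_ts (the parent's ts); a top-level message
        # starts a new thread identified by its own ts.
        return event["channel"], event.get("thread_ts") or event["ts"]

    def add(self, event: dict, role: str, text: str) -> None:
        """Append one message to the history of the event's thread."""
        self._history[self.thread_key(event)].append(
            {"role": role, "content": text}
        )

    def context(self, event: dict) -> list[dict]:
        """Return a copy of everything said so far in the event's thread."""
        return list(self._history.get(self.thread_key(event), []))


memory = ThreadMemory()

# First question: a top-level message, so it only has its own ts.
first = {"channel": "C123", "ts": "1000.1"}
memory.add(first, "user", "how many new orders do I have this month?")
memory.add(first, "assistant", "<answer from the bot>")

# Follow-up in the same thread: thread_ts points at the first message.
follow_up = {"channel": "C123", "ts": "1000.9", "thread_ts": "1000.1"}
memory.add(follow_up, "user", "how does it compare with last month?")

# The follow-up is answered with the full thread as context.
for message in memory.context(follow_up):
    print(message["role"], "->", message["content"])
```

Because the history is keyed by thread, a question asked in a different thread starts with an empty context, which matches the behavior described above.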

Now we have everything set up. Enjoy your analytics with DSensei!

Closing Thought

In conclusion, using a chatbot to access and analyze data can make data analysis in an organization faster and more accessible. DSensei is an open-source Slack bot that helps you get there by letting you query and analyze data through natural language. By following the steps outlined in this post, you can set up DSensei and link it to your BigQuery data source to start benefiting from its features.

We’re passionately developing this project and would love for you to be a part of our community on Discord, where you can receive the latest news, report bugs, and make feature requests. Please also feel free to submit any feedback directly on GitHub.

Try a live demo of DSensei in our Slack Channel
