Introduction

Recently, I've watch this video which a youtuber try to solve an interview step by step. But in this case, he didn't share here code.

In my case, I love to try to solve this kind of issues but I've never work with a backend solution as API. For this reason, I found intereseting tryng to repoduce his code (with some modification) to try to achive to get an API to get information about one table.

Here is my repository with the main code

Goal

Try to have a backend-structure (with FastAPI to trying get the information about one table from the database (in this case I'm going to use Postgrest).

Source data

For this purpouse it's going to be use this dataset. In there we could find two csv:

- 1st File: Travel Company Old Clients; Number of observations: 682

- 2nd File: Travel Company New Clients; Number of observations: 1303

We are going to use only Travel Company New Clients.

Instalation

Python Installation

All the libraries in the requirements.txt:

anyio==3.7.0

click==8.1.3

exceptiongroup==1.1.1

fastapi==0.97.0

greenlet==2.0.2

h11==0.14.0

httptools==0.5.0

idna==3.4

Jinja2==3.1.2

MarkupSafe==2.0.1

psycopg2==2.9.6

pydantic==1.10.9

python-dotenv==1.0.0

PyYAML==6.0

sniffio==1.3.0

SQLAlchemy==2.0.16

starlette==0.27.0

typing_extensions==4.6.3

uvicorn==0.22.0

uvloop==0.17.0

watchfiles==0.19.0

websockets==11.0.3

Create a virtual environment

We are going to use a virtual environment to try to keep the track of all the libraries we are going to need:

python -m venv venv

And also we are going to active the environment (Linux):

source venv/bin/activate

Finally to get the requirements.txt you could use this shell:

pip freeze >requirements.txt

Install FastAPI

We are going to need to install this library to deploy our python code as a server. They told us in there documentation how to install:

This library is to deploy our python code:

pip install fastapi

... and also we are going to need our system work as server for this reason they told us to use uvicorn.

pip install "uvicorn[standard]"

Example FastAPI

To try to check is it is working I've done this example, to try if everthing is working fine:

from fastapi import FastAPI

app = FastAPI()

@app.get("/")

async def root():

return {"message": "Hello World"}

This script return only a message of hello world. I've save this script as example_1.py. To run in our bash:

uvicorn example_1:app --reload

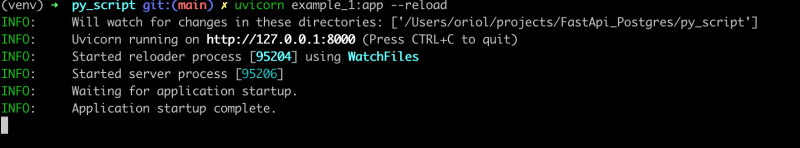

If it is working properly in bash you are going to have a message like this:

Then, you could go to your favorite browser and in the ip http://127.0.0.1:8000/ you are going to se a message like this:

Also there are a links I could find usefull like:

http://127.0.0.1:8000/docs - where you could see the documentation about your project an test the dependecies

Finally to close your bash server simple Ctrl-C and your server is going to be down.

Install Jinja2

FastAPI could be use with Jinja2 template and we are going to use it to get a template of a query

'''shell

pip install Jinja2

'''

Install SQLAlchemy

We are going to use it to connect to our database and make a querys:

pip install SQLAlchemy

Install Pydantic

To help us to translate of our results in the query to format json

pip install pydantic

Install markupsafe

Library to implements a text object that escapes characters so it is safe to use in HTML and XML

pip install MarkupSafe==2.0.1

Install psycopg2

Adaptar of PostgresSQL to python

pip install psycopg2

Postgres Installation w/docker

Env variables

We are going to use this env variables:

PS_VERSION=15

PS_USER=ps

PS_PASSWORD=ps_2023

PS_DB=RAW

Dockerfile

We are going to create a file with name Dockerfile.postgres like this:

FROM postgres

# make directory to Docker

RUN mkdir -p /home/src

# Copy source data

COPY ./src /home/src

COPY ./db_script/* /docker-entrypoint-initdb.d

# How to create a main table

RUN chmod a+r /docker-entrypoint-initdb.d/*

# Expose the port

EXPOSE 6666

Steps to create FastAPI:

Models.py

First script we are going to create is how our model is going to be connected to our database based on the table of Newclients:

import os

from sqlalchemy import Column, String, Integer

from sqlalchemy import create_engine, MetaData

from sqlalchemy.ext.declarative import declarative_base

# Use environment variable DB_CONNSTR

if os.environ["DB_CONNSTR"] is None:

DB_CONNSTR='postgresql://ps:ps_2023@localhost:5432/RAW'

else:

DB_CONNSTR = os.environ["DB_CONNSTR"]

# Create engine to connect our data base

engine = create_engine(DB_CONNSTR) if DB_CONNSTR else None

# Get all the metadata

meta = MetaData(DB_CONNSTR)

# declare metada has an object

Base = declarative_base(metadata=meta)

# Declare tables

TABLE_NAME='newclients'

class Newclients(Base):

__tablename__ = TABLE_NAME

num_age = Column(Integer, primary_key=True)

des_employetype = Column(String, primary_key=True)

is_graduate = Column(String, primary_key=True)

imp_annualincome = Column(Integer, primary_key=True)

num_familymembers = Column(Integer, primary_key=True)

is_frequentflyer = Column(String, primary_key=True)

is_evertravelledabroad= Column(String, primary_key=True)

Backend.py

In this script we are going to create a class, with the base of the paramenters:

- select

- where

- order_by

- group_by

This script is going to connect to our database and return a dictionary to get the result of our query.

To achive this point we are going to use Jinja2Templates.

Jinja2Templates

FastApi it could works native with Jinja2Templates. With this in main we could create an SQL script with the style of jinja. This is store in the folder of templates.

In this case, I've stored in github repo because dev.to doesn't let you to use double brackets

If someone knows how it could be copied in here without any problems it could be great.

Python Script

Use the Models.py and also the Jinja2Templates, we could use this to get the connection and the result of the query:

from fastapi.templating import Jinja2Templates

from sqlalchemy import text

from models import engine, TABLE_NAME

# Import templates of Jinja2

templates = Jinja2Templates(directory="templates")

class Sql_conn:

def _parse(self, query_dict: dict[str]):

# Use dict to pass variables throught jinja template

query_dict['table']= TABLE_NAME

render = templates.TemplateResponse(

"query_template.j2",{'request': None, 'data': query_dict}

)

return render.body.decode('ascii')

def _query(self, q):

# Use connection to make a query

print(q)

with engine.connect() as con:

rs = con.execute(text(q))

return rs

def _resultdict(self,result):

# Convert result to dict

resultdic = dict()

for idx, row in enumerate(result):

row_as_dict = row._mapping

resultdic.update({idx:row_as_dict})

return resultdic

def request_query(self, query_dict: dict[str]):

q = self._parse(query_dict)

data = self._query(q)

datadic = self._resultdict(data)

return datadic

Api.py

Finally in this script, we are going to use fastapi. In here based on the last script (backend.py), we are going to create our desired api:

from typing import Optional

from fastapi import FastAPI, HTTPException

from sqlalchemy.exc import ProgrammingError

from pydantic import BaseModel

from backend import Sql_conn

# Variables to the model

class Query(BaseModel):

select: str

where : Optional[str]=None

order_by : Optional[str]=None

group_by : Optional[str]=None

sort_by : Optional[str]=None

# Create our object of FastAPI

app = FastAPI()

# Based on the method post of analytics..

@app.post("/analytics")

async def query_data(query: Query):

# ... we create async function to get the result of a query

return Sql_conn().request_query(query.dict())

Dockerfile.python

We are going to create an instance to run our desired dockerfile to run our api.

FROM python:3.9

WORKDIR /app

# Install OS dependencies

RUN apt-get update && apt-get install -qq -y \

git gcc build-essential libpq-dev --fix-missing --no-install-recommends \

&& apt-get clean

# Make sure we are using latest pip

RUN pip install --upgrade pip

# Copy requirements.txt

COPY requirements.txt requirements.txt

# ... install of the requirements

RUN pip install -r requirements.txt

# ... copy all the script into the app

COPY ./py_script/ /app/

# ... select the variable of environment where is going to execute python

ENV PYTHONPATH "${PYTHONPATH}:/app/"

# ... and expose the desired port

EXPOSE 8000

CMD ["uvicorn","api:app","--host","0.0.0.0"]

Docker compose

Finally to get all the enviornment working together I've create this script:

version: '3.9'

services:

post_db:

build:

context: .

dockerfile: Dockerfile.postgres

user: postgres

image: postgres/test:v1

environment:

- POSTGRES_USER=${PS_USER}

- POSTGRES_PASSWORD=${PS_PASSWORD}

- PG_DATA:/var/lib/postgresql/data/pgdata

- POSTGRES_DB=${PS_DB}

healthcheck:

test: ["CMD-SHELL","pg_isready -U ${PS_USER} ${PS_PASSWORD}"]

interval: 10s

timeout: 5s

retries: 5

ports:

- "5432:5432"

volumes:

- db-data:/var/lib/postgresql/data

restart: unless-stopped

api:

build:

context: .

dockerfile: Dockerfile.python

environment:

- DB_CONNSTR=postgresql://${PS_USER}:${PS_PASSWORD}@post_db:5432/${PS_DB}

ports:

- 8000:8000

links:

- post_db

restart: unless-stopped

volumes:

db-data:

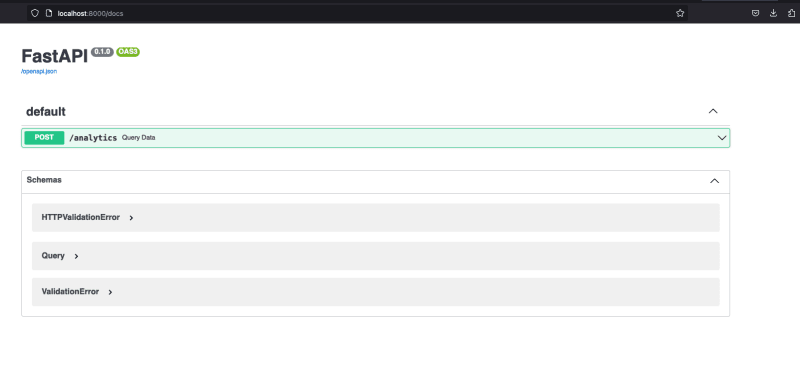

Now we could acces to our http://localhost:8000/docs and check if there is any in action:

Tests

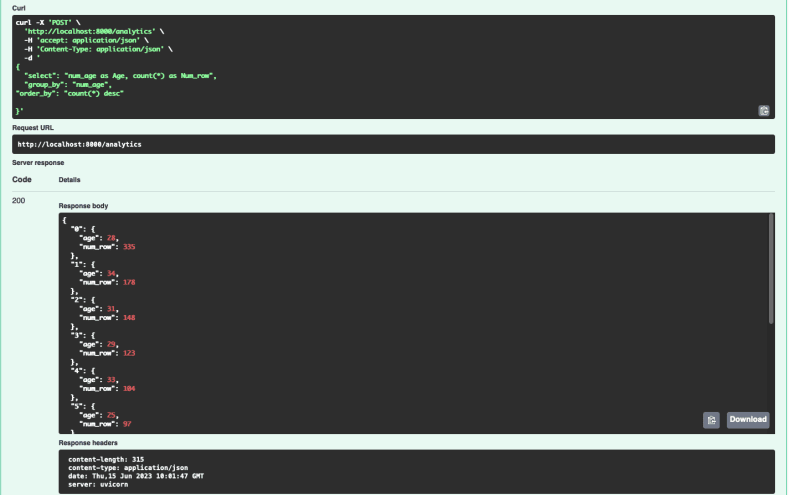

To check if everything is working properly I've made this test. You could access to make this test with tryout button:

{

"select": "num_age as Age, count(*) as Num_row",

"group_by": "num_age",

"order_by": "count(*) desc"

}

And also there is our desired result:

Conclusion

FastAPI is a really good packacge to get the information about your database and also there is a lot of features I've not test it yet, but it looks great to have it as a server to get access to your data.

Have a nice day:)

Top comments (0)