Authored by Haneesh Reddy Poddutoori

Live dashboards can help organizations make sense of their event data and understand what's happening in their businesses in real time. Marketing managers constantly want to know how many signups there were in the last hour, day, or week. Product managers are always looking to understand which product features are working well and most heavily utilized. In many situations, it is important to be able to take immediate action based on real-time event data, as in the case of limited-time sales in e-commerce or managing contact center service levels. With the realization of the value businesses can extract from real-time data, many organizations that have standardized on Tableau for BI are seeking to implement live Tableau dashboards on their event streams as well.

Getting Started

In this blog, I will step through implementing a live dashboard on event data using Tableau. The events we will track will be recent changes to various Wikimedia projects, including Wikipedia.

For this project, we will need:

- Tableau Desktop, with an active account

- An active Rockset account

- Rockset Java client library and JDBC driver

Ingesting Data from Wikimedia Event Stream

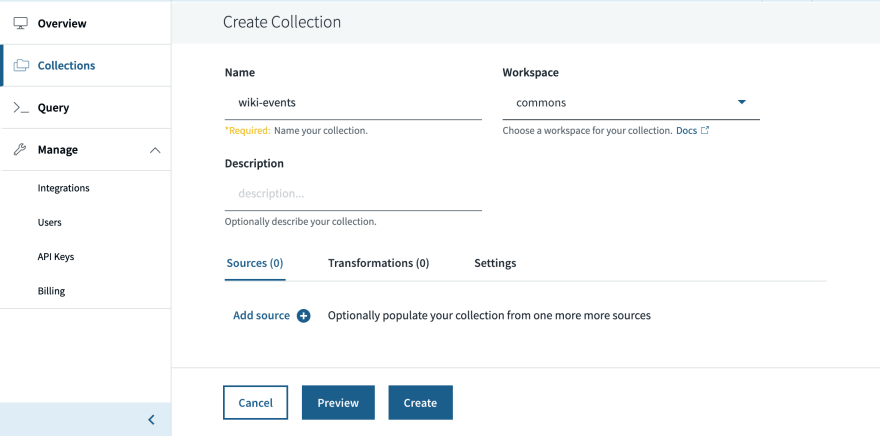

I will first create a collection, to which we will write the events originating from the Wikimedia stream.

Once we have the collection set up in Rockset, I can run a script that subscribes to events from the Wikimedia stream and writes them to the wiki-events collection in Rockset.
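
A subscriber of this kind can be sketched in Python as follows. This is a minimal sketch, not the script used for this post: it reads the public Wikimedia EventStreams endpoint as server-sent events, assumes each event arrives on a single `data:` line, and hands batches to a `write_batch` callback. The callback, the `run` helper, and the `User-Agent` string are hypothetical names introduced for illustration; the call that actually adds documents to the wiki-events collection is left to the callback.

```python
import json
import urllib.request
from typing import Callable, Iterable, Iterator, List

# Public Wikimedia EventStreams endpoint for recent changes (server-sent events).
STREAM_URL = "https://stream.wikimedia.org/v2/stream/recentchange"


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield the JSON payload of each 'data:' line, skipping everything else."""
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # event:/id: fields, comments, and blank separators
        try:
            event = json.loads(line[len("data:"):])
        except json.JSONDecodeError:
            continue  # ignore malformed or partial payloads
        if isinstance(event, dict):
            yield event


def batched(events: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Group events into lists of at most `size` items."""
    batch: List[dict] = []
    for event in events:
        batch.append(event)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # flush the final, possibly shorter, batch


def run(write_batch: Callable[[List[dict]], None], batch_size: int = 100) -> None:
    """Stream events until interrupted, handing each batch to `write_batch`.

    `write_batch` is a hypothetical callback (an assumption of this sketch);
    it would wrap whatever call writes documents to the collection.
    """
    request = urllib.request.Request(
        STREAM_URL,
        headers={
            "Accept": "text/event-stream",
            "User-Agent": "wiki-events-demo/0.1",  # placeholder value
        },
    )
    with urllib.request.urlopen(request) as response:
        lines = (raw.decode("utf-8", errors="replace") for raw in response)
        for batch in batched(parse_sse(lines), batch_size):
            write_batch(batch)
```

Calling `run(print)` would simply print each batch, which is a quick way to check the stream before wiring in a real writer.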

While we are using Rockset's Write API to ingest the Wikimedia event stream in this case, Rockset can also sync data from other sources, like Amazon DynamoDB, Amazon Kinesis, and Apache Kafka, to power live dashboards, if required.

Now that we are ingesting the event stream, the collection is growing steadily every second. Describing the collection shows us the shape of the data. The result is quite long, so I will just show an abbreviated version below to give you a sense of what the JSON data looks like, along with some of the fields we will be exploring in Tableau. The data is somewhat complex, containing sparse fields and nested objects and arrays.
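
To get a feel for nested data like this outside of Rockset, one option is to walk a single event and list its field paths and types. The sketch below is only an illustration of that idea, not the describe output itself. The `field_types` helper is a hypothetical name, and the sample event is hand-written for this example using field names from the public recentchange schema (`type`, `bot`, `timestamp`, `length`, `meta`); its values are made up.

```python
from typing import Any, Dict


def field_types(doc: Any, prefix: str = "") -> Dict[str, str]:
    """Map each dotted field path in a nested document to its Python type name."""
    if isinstance(doc, dict):
        out: Dict[str, str] = {}
        for key, value in doc.items():
            path = f"{prefix}.{key}" if prefix else key
            out.update(field_types(value, path))
        return out
    if isinstance(doc, list):
        out = {prefix: "array"}
        for item in doc:
            # All array elements share one "[*]" path segment.
            out.update(field_types(item, prefix + "[*]"))
        return out
    return {prefix: type(doc).__name__}


# Hand-written illustrative event; the values are invented for this example.
sample = {
    "type": "edit",
    "bot": False,
    "timestamp": 1700000000,
    "length": {"old": 1200, "new": 1350},
    "meta": {"domain": "en.wikipedia.org"},
}

for path, kind in field_types(sample).items():
    print(f"{path}: {kind}")
```

Nested objects show up as dotted paths such as `length.old`, which is also how we will refer to them when building charts.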

Connecting a Tableau Dashboard to Real-Time Event Data

Let us jump into building the dashboard. I'll first need to connect to Rockset, as a new data source, from my Tableau Desktop application. Follow the steps here to set this up.

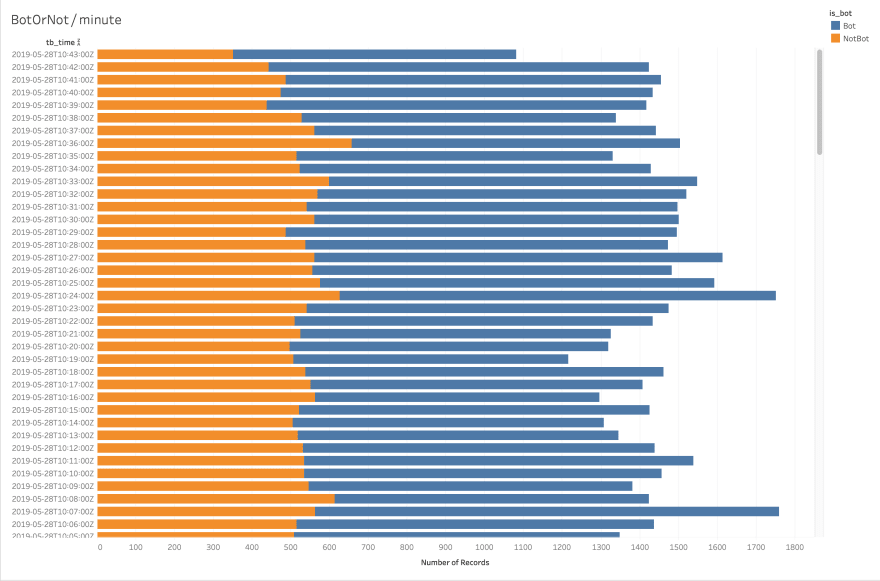

We can create a first chart showing the number of changes made by bots vs. non-bots per minute over the last hour. I can use a custom SQL query within Tableau to specify the query for this, which gives us the resulting chart.

That's about 1,400 events per minute, with bots responsible for the majority of them.
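
The grouping behind this chart can be sketched in Python to make the logic concrete. This is not the custom SQL used in Tableau. It is a minimal sketch that assumes each event carries a Unix `timestamp` in seconds and a boolean `bot` flag, as in the recentchange schema; the `changes_per_minute` function name and its parameters are introduced here for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def changes_per_minute(
    events: Iterable[dict], now: int, window_seconds: int = 3600
) -> Dict[Tuple[int, bool], int]:
    """Count events per (minute start, bot flag) over the trailing window.

    `now` and event timestamps are Unix seconds; events outside
    (now - window_seconds, now] are ignored.
    """
    counts: Counter = Counter()
    for event in events:
        ts = event.get("timestamp")
        if not isinstance(ts, int) or not (now - window_seconds < ts <= now):
            continue
        minute_start = ts - ts % 60  # floor to the start of the minute
        counts[(minute_start, bool(event.get("bot", False)))] += 1
    return dict(counts)
```

Each key in the result corresponds to one bar segment in the chart: a minute on the x-axis, split by whether the change came from a bot.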

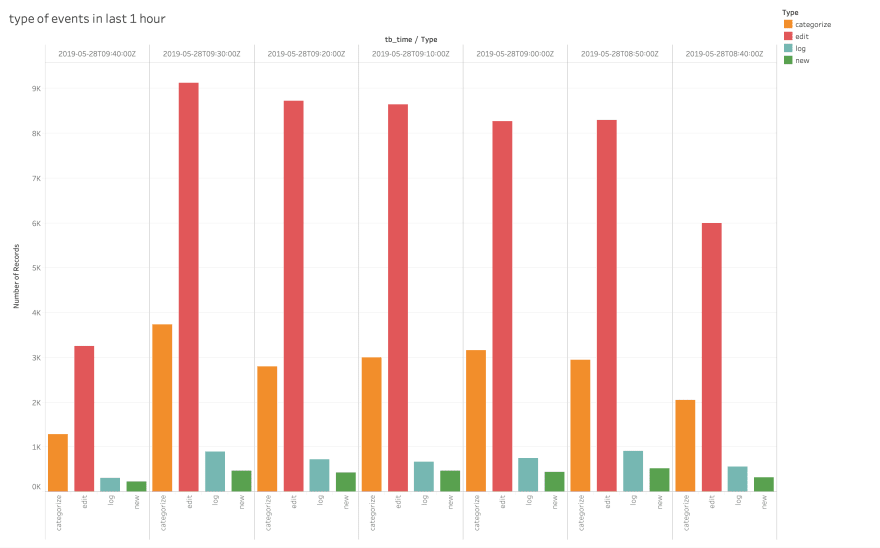

Wikimedia also tracks several types of change events: edit, new, log, and categorize. We can get an up-to-date view of the various types of changes made, at 10-minute intervals, for the last hour.
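
As a rough illustration of the 10-minute bucketing, the sketch below floors each event's timestamp to a 10-minute boundary and counts change types per bucket. It assumes a Unix `timestamp` in seconds and a `type` field on each event; the `changes_by_type` name and the `"unknown"` fallback label are assumptions made for this example, and the query used for the chart may differ.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Dict, Iterable

BUCKET_SECONDS = 600  # 10-minute intervals


def changes_by_type(
    events: Iterable[dict], now: int, window_seconds: int = 3600
) -> Dict[str, Dict[str, int]]:
    """Return {bucket start (ISO 8601, UTC): {change type: count}}."""
    table = defaultdict(lambda: defaultdict(int))
    for event in events:
        ts = event.get("timestamp")
        if ts is None or not (now - window_seconds < ts <= now):
            continue  # keep only the trailing window
        bucket_start = ts - ts % BUCKET_SECONDS  # floor to the 10-minute mark
        bucket = datetime.fromtimestamp(bucket_start, tz=timezone.utc)
        table[bucket.isoformat()][event.get("type", "unknown")] += 1
    # Sort buckets chronologically and convert to plain dicts.
    return {bucket: dict(types) for bucket, types in sorted(table.items())}
```

With a one-hour window this yields at most seven buckets, since the window rarely starts exactly on a 10-minute boundary.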

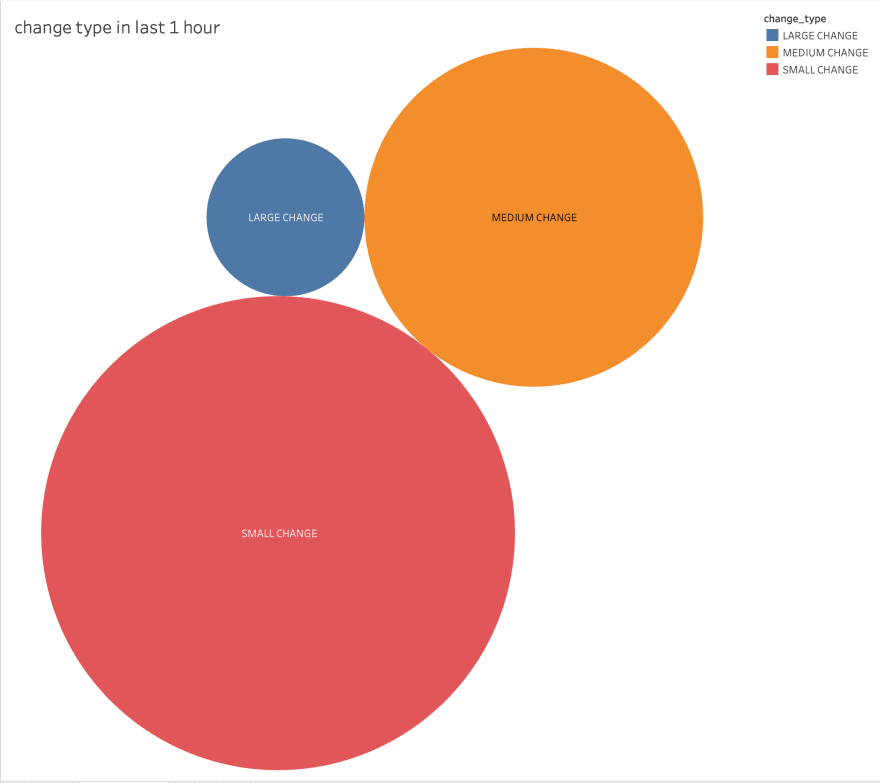

Finally, I plotted a chart to visualize the magnitude of the edits made within the last hour, whether they were small-, medium-, or large-scale edits.
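
One way to bucket edits by magnitude is to compare the page length before and after the change. The sketch below does this using the `length.old` and `length.new` fields from the recentchange schema. The thresholds (`SMALL_MAX`, `MEDIUM_MAX`) and function names are assumptions chosen for illustration and are not necessarily the cutoffs used for the chart.

```python
from collections import Counter
from typing import Iterable, Optional

# Assumed thresholds on the absolute change in page length, in bytes.
SMALL_MAX = 100
MEDIUM_MAX = 1000


def edit_magnitude(event: dict) -> Optional[str]:
    """Classify an edit as small, medium, or large; None if not classifiable."""
    if event.get("type") != "edit":
        return None
    length = event.get("length") or {}
    old, new = length.get("old"), length.get("new")
    if old is None or new is None:
        return None  # sparse field: some events lack one or both lengths
    delta = abs(new - old)
    if delta <= SMALL_MAX:
        return "small"
    if delta <= MEDIUM_MAX:
        return "medium"
    return "large"


def magnitude_counts(events: Iterable[dict]) -> Counter:
    """Count classifiable edits per magnitude label."""
    labels = (edit_magnitude(event) for event in events)
    return Counter(label for label in labels if label is not None)
```

Events without usable length information are skipped rather than counted as small, so sparse fields do not skew the distribution.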

Recap

In a few steps, we ingested a stream of complex JSON event data, connected Tableau to the data in Rockset, and added some charts to our live dashboard. While it may normally take tens of minutes, if not longer, to process raw event data for use with a dashboarding tool, using Tableau on real-time data in Rockset allows users to perform live analysis on their data within seconds of the events occurring.

If you wish to adapt what we've done here to your use case, the source code for this exercise is available here.
