A couple of weeks ago, as I was nearing the end of my studies for the AWS Certified Solutions Architect - Associate exam, I was thinking, "Okay, what do I do once I have my certificate?" Enter the Cloud Resume Challenge. I was scrolling through r/AWSCertifications when someone asked what projects they could take on to strengthen their resume, and another user simply replied with a link to Forrest Brazeal's challenge located here. To complete this challenge, there were 16 requirements that had to be met, including certification. I thought, "This is going to be a cakewalk. I have networking experience, I understand how DNS works and how to implement HTTPS, and I have an AWS Certified Solutions Architect certification." Boy, was I in for a surprise.

To start, the instructions were not very specific, and I feel that was on purpose. I went down plenty of late-night rabbit holes, using more browser tabs than I can count, and I learned a ton along the way.

The Front End

For the webpage, I was able to build a fairly basic page using HTML and CSS that displayed my resume. However, given that I had almost no design experience, the page left a lot to be desired. I therefore ended up finding a template on codepen.io and customizing it for my purposes. Next, I created an S3 bucket to host the page and CSS file, followed by a CloudFront distribution with a signed certificate. Couple that with a few DNS records and bam! My page is online! Not yet counting visitors, but serving nonetheless!

With the webpage up, it was time to implement the page counter functionality, which wasn't too bad either. I merely added a bit of JavaScript and that was pretty much it as far as the front end was concerned.

At this point, I was motivated, determined and full of momentum. I felt like nothing could stop me! I was like a freight train.

Until...

The Back End

This is where the challenge really started showing its difficulty, and it's where a lot of rabbit holes were dug.

The back end has quite a few moving parts, so I'll break it down into smaller chunks:

The Database

The first thing I had to do was implement a back end database to store the number of site visitors. The obvious solution was DynamoDB and it was very easy to set up.

The Lambda Function

Next, I knew I would need some way to communicate with the database that did not involve JavaScript on the webpage communicating directly with the database. So I wrote a Lambda function in Python that would update the visitor count and then retrieve the count. I had some experience with Python already in my current job as well as previous courses I had taken back in my college days, so this wasn't too bad either.
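
To give a rough idea of what such a function can look like, here is a minimal sketch and not my exact code. The table name `visitor-count`, the key `id`, the attribute `visits` and the helper names are all assumptions made up for illustration. It relies on DynamoDB's `ADD` update action, which increments the counter and returns the new value in a single call.

```python
import os


def increment_and_get(table, page_id="resume"):
    """Atomically add 1 to the counter item and return the new total.

    `table` is expected to behave like a boto3 DynamoDB Table resource.
    The key name "id" and attribute name "visits" are hypothetical.
    """
    response = table.update_item(
        Key={"id": page_id},
        # ADD creates the attribute on the first visit, then increments it.
        UpdateExpression="ADD visits :inc",
        ExpressionAttributeValues={":inc": 1},
        ReturnValues="UPDATED_NEW",
    )
    # boto3 returns numbers as Decimal, so convert to a plain int.
    return int(response["Attributes"]["visits"])


def get_table():
    """Build the Table resource for the (assumed) counter table.

    boto3 is imported here so the counting logic above can be
    exercised locally without AWS credentials or boto3 installed.
    """
    import boto3

    table_name = os.environ.get("TABLE_NAME", "visitor-count")
    return boto3.resource("dynamodb").Table(table_name)
```

Passing the table in as an argument, rather than creating it inside the function, is a design choice that makes the counting logic easy to test later with a mocked table.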

The API

With the Lambda function in place, I needed an API to expose it so that the JavaScript on the front end could invoke it. So I created an API using API Gateway. This part proved to be somewhat challenging due to CORS. After hours of googling, reading AWS documentation and experimenting, though, the fix itself turned out to be simple, and I was able to handle it in my already existing Lambda function. The next hurdle was that the Lambda function needed to return the visitor count to the API in the correct JSON format, which I had overlooked. I spent an entire day trying to figure out why my Lambda function tested just fine on its own but caused API Gateway to return a 502 error, until I re-read the documentation and realized my mistake.
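
For anyone hitting the same 502, here is a hedged sketch of the response shape, assuming the API uses Lambda proxy integration (I am describing the general pattern here, not quoting my code). In that mode API Gateway expects a dictionary with a `statusCode`, optional `headers` and a `body` that is a string, and the CORS header can be returned from the same place. The origin `https://example.com` and the helper name `build_response` are placeholders.

```python
import json

# Placeholder origin; in practice this would be the site's own domain.
ALLOWED_ORIGIN = "https://example.com"


def build_response(count, status_code=200):
    """Wrap the visitor count in the shape proxy integration expects."""
    return {
        "statusCode": status_code,
        "headers": {
            "Content-Type": "application/json",
            # Lets the browser accept the response from another origin.
            "Access-Control-Allow-Origin": ALLOWED_ORIGIN,
        },
        # The body must be a JSON string, not a dict.
        "body": json.dumps({"count": count}),
    }
```

Returning a bare number or a plain dictionary without these keys works fine in the Lambda console test, which is why the problem only shows up once API Gateway is in front of the function.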

Testing

One of the requirements for this challenge was to implement unit testing. To accomplish this, I used pytest and a very cool library I stumbled upon called moto. What made moto very cool (and useful, of course) was its ability to mock AWS resources without needing to actually create them. This allowed me to easily mock up a DynamoDB table to ensure my back end Python script worked without the need to create extra resources or risk changes to production. One tip I will give here is that it is much easier to test your code when it is broken up into smaller functions. Also, when writing the test script, do it in SMALL chunks and test repeatedly to make sure everything works. I found that approach to be much easier than putting together a bunch of spaghetti code and then crossing my fingers in the hopes it would work. Not only was it easier to debug and troubleshoot, but it also ended up making my code look cleaner.

Infrastructure as Code (IaC)

With testing out of the way, it was time to focus on IaC. For this requirement, I took advantage of AWS Serverless Application Model (SAM). It was not easy to get started with SAM as the documentation was very overwhelming, but thanks to this blog post, I was put at ease. After reading and following along with that post, I quickly became accustomed to the SAM template format and it was off to the races. Referring to AWS' SAM documentation no longer felt daunting.

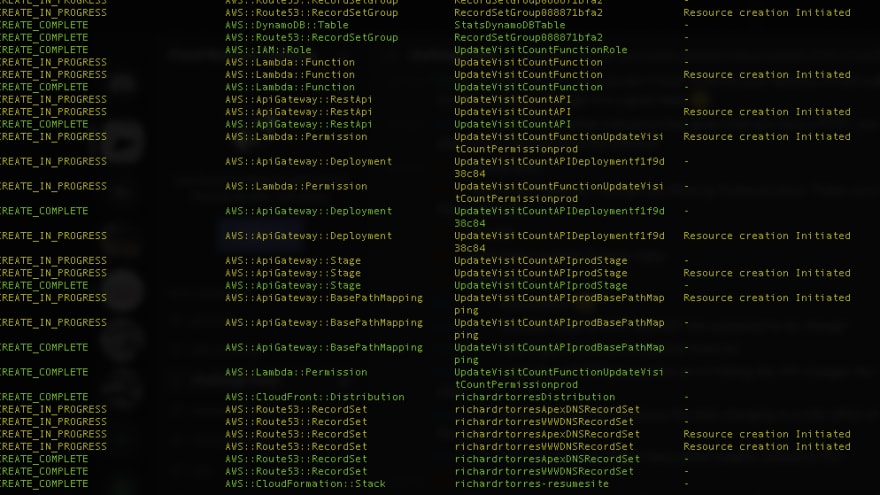

I was quickly able to create a template that automated the deployment and updating of my API, DynamoDB table and Lambda function. But I didn't want to stop there. With my newfound knowledge, coupled with many hours spent troubleshooting, experimenting and referring to AWS' documentation, I went as far as automating the creation of my CloudFront distribution, my Route 53 DNS records and even a custom domain name for my API. Once I was confident that everything worked, I tore down every single resource I had created by hand (Route 53 DNS records, API, DynamoDB table, etc.), essentially blowing away my workload, and then I deployed my SAM template. It was at this point that I realized just how powerful SAM is. The ability to easily deploy and delete my workload was so cool and it brought me back to being a kid at Toys“R”Us (RIP). I kept deleting and re-deploying my workload for a good 30 minutes just for the heck of it and was mind-blown each time. This was clearly the proudest moment for me in this challenge.

Needless to say, this part of the challenge was complete.

Source Control and CI/CD

By this point, I was pumped. I was so close to completing the project and was in the final stretch. For source control, I decided to use GitHub. I created two repositories, one for my back end and one for my front end. I coupled this with GitHub Actions and secrets to fulfill the CI/CD requirements. For the front end, I simply set up an action that would automatically synchronize the repository's contents with the S3 bucket hosting the web app. Boom! Easy. The back end, though, was not as straightforward. However, after plenty of trial and error and experimenting with the GitHub workflow template, I began to see all green check marks whenever my GitHub action was invoked.

Conclusion

Overall, this challenge was worth every single minute, every rabbit hole dug, every long night spent. In completing this challenge, I have learned way more than I bargained for and I do not regret it one bit. I now know more about full-stack software development, version control, IaC, automation, CI/CD, cloud services, serverless and application security. I'd like to thank Forrest Brazeal for making this challenge possible. Because of it, I have a newfound love for cloud computing and am very much looking forward to a career involving it.

You can view the finished product here: richardrtorres.com
