Gorush is an application written in Go that we plan to use to send push notifications to our mobile clients.

The project’s home page: https://github.com/appleboy/gorush

The service will run in our Kubernetes cluster in a dedicated namespace and must be accessible only from within the cluster’s VPC, so we will use an internal Application Load Balancer (ALB) from AWS.

Run Gorush service

Namespace

Clone the repository:

```console
$ git clone https://github.com/appleboy/gorush
$ cd gorush/k8s/
```

Create a namespace and a ConfigMap that will be used to configure access to a local Redis service:

```console
$ kubectl apply -f gorush-namespace.yaml
namespace/gorush created
$ kubectl apply -f gorush-configmap.yaml
configmap/gorush-config created
```

We can also use this ConfigMap later to add our own configuration file for the Gorush service.

Check resources:

```console
$ kubectl -n gorush get cm
NAME            DATA   AGE
gorush-config   2      20s
```
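The two keys of this ConfigMap reach the Gorush container as the environment variables GORUSH_STAT_ENGINE and GORUSH_STAT_REDIS_ADDR in the Deployment further down. As far as we can tell from the Gorush sources, it reads its configuration through Viper with the prefix GORUSH and with dots replaced by underscores, so the name of the environment variable, not the ConfigMap key, decides which setting is overridden (here stat.redis.host ends up in the stat.redis.addr setting). A minimal sketch of that naming rule, assuming this Viper convention:

```go
package main

import (
	"fmt"
	"strings"
)

// envName mirrors the assumed Viper convention used by Gorush:
// prefix "GORUSH_", dots replaced by underscores, everything upper-cased.
func envName(configKey string) string {
	return "GORUSH_" + strings.ToUpper(strings.ReplaceAll(configKey, ".", "_"))
}

func main() {
	// Gorush settings we override from the gorush-config ConfigMap.
	for _, key := range []string{"stat.engine", "stat.redis.addr"} {
		fmt.Printf("%-16s -> %s\n", key, envName(key))
	}
}
```

If an override does not seem to take effect, comparing the variable name against this rule is a quick first check.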

Redis

Spin up a Redis service:

```console
$ kubectl apply -f gorush-redis-deployment.yaml
deployment.extensions/redis created
$ kubectl apply -f gorush-redis-service.yaml
service/redis created
```

Gorush

I’d like to add an additional container with Debian to run tests once Gorush is deployed and make sure it’s working, so let’s update the deployment file gorush-deployment.yaml and add a second container named bastion:

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: gorush
  namespace: gorush
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: gorush
        tier: frontend
    spec:
      containers:
      - image: appleboy/gorush
        name: gorush
        imagePullPolicy: Always
        ports:
        - containerPort: 8088
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8088
          initialDelaySeconds: 3
          periodSeconds: 3
        env:
        - name: GORUSH_STAT_ENGINE
          valueFrom:
            configMapKeyRef:
              name: gorush-config
              key: stat.engine
        - name: GORUSH_STAT_REDIS_ADDR
          valueFrom:
            configMapKeyRef:
              name: gorush-config
              key: stat.redis.host
      - image: debian
        name: bastion
        command: ["sleep"]
        args: ["6000"]
```
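The livenessProbe above makes the kubelet send GET /healthz to port 8088 every 3 seconds, starting 3 seconds after the container starts, and Kubernetes treats any status code from 200 up to (but not including) 400 as a success. A minimal sketch of the same check, assuming it runs inside the pod so that Gorush answers on localhost:8088; the 1-second timeout matches the probe's default timeoutSeconds:

```go
package main

import (
	"fmt"
	"net/http"
	"os"
	"time"
)

// healthy applies the httpGet probe rule: 200 <= status < 400 is a success.
func healthy(status int) bool {
	return status >= 200 && status < 400
}

// probe sends a single GET and reports whether the response counts as healthy.
func probe(url string, timeout time.Duration) bool {
	client := &http.Client{
		Timeout: timeout,
		// This sketch does not follow redirects; a 3xx already counts as success.
		CheckRedirect: func(*http.Request, []*http.Request) error {
			return http.ErrUseLastResponse
		},
	}
	resp, err := client.Get(url)
	if err != nil {
		return false // connection errors and timeouts count as failures
	}
	defer resp.Body.Close()
	return healthy(resp.StatusCode)
}

func main() {
	// Assumption: run inside the gorush pod, where Gorush listens on port 8088.
	url := "http://localhost:8088/healthz"
	if !probe(url, time.Second) {
		fmt.Println("unhealthy:", url)
		os.Exit(1)
	}
	fmt.Println("healthy:", url)
}
```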

Now we can start Gorush itself:

```console
$ kubectl apply -f gorush-deployment.yaml
deployment.extensions/gorush created
```

Check pods:

```console
$ kubectl -n gorush get po
NAME                      READY   STATUS    RESTARTS   AGE
gorush-59bd9dd4fc-dzm47   2/2     Running   0          8s
gorush-59bd9dd4fc-fkrhw   2/2     Running   0          8s
gorush-59bd9dd4fc-klsbz   2/2     Running   0          8s
redis-7d5844c58d-7j5jp    1/1     Running   0          3m1s
```

AWS Internal Application Load Balancer

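The next things to create are a Service of type NodePort and an Ingress resource, which will trigger the alb-ingress-controller to create a new load balancer in our AWS account. The Service has to be a NodePort because in its default instance mode the controller registers the worker nodes as ALB targets and sends traffic to the Service’s node port.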

Edit gorush-service.yaml: uncomment the NodePort line and comment out the LoadBalancer one:

```yaml
...
#type: LoadBalancer
type: NodePort
...
```

Now the file looks like this:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: gorush
  namespace: gorush
  labels:
    app: gorush
    tier: frontend
spec:
  selector:
    app: gorush
    tier: frontend
  # if your cluster supports it, uncomment the following to automatically create
  # an external load-balanced IP for the frontend service.
  #type: LoadBalancer
  type: NodePort
  ports:
  - protocol: TCP
    port: 80
    targetPort: 8088
```

Create the service:

```console
$ kubectl apply -f gorush-service.yaml
service/gorush created
```

Now let’s configure the ALB. In gorush-aws-alb-ingress.yaml, add the kubernetes.io/ingress.class: alb annotation to trigger the alb-ingress-controller, set the scheme to internal, and set the subnets and security-groups values.

Also note that servicePort was changed from the original 8088 to 80: the Ingress backend now points at the NodePort Service, which listens on port 80 and forwards traffic to the container’s port 8088 (its targetPort):

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: gorush
  namespace: gorush
  annotations:
    # Kubernetes Ingress Controller for AWS ALB
    # https://github.com/coreos/alb-ingress-controller
    #alb.ingress.kubernetes.io/scheme: internet-facing
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/scheme: internal
    alb.ingress.kubernetes.io/subnets: subnet-010f9918532f52c6d, subnet-0f6dbde36b6669f48
    alb.ingress.kubernetes.io/security-groups: sg-0f1df776a767a2589
spec:
  rules:
  - http:
      paths:
      - path: /*
        backend:
          serviceName: gorush
          servicePort: 80
```

Create an ALB:

```console
$ kubectl apply -f gorush-aws-alb-ingress.yaml
ingress.extensions/gorush created
```

Check the alb-ingress-controller logs:

```console
$ kubectl logs -f -n kube-system $(kubectl get po -n kube-system | egrep -o 'alb-ingress[a-zA-Z0-9-]+')
...
E0206 11:08:52.664554 1 controller.go:217] kubebuilder/controller "msg"="Reconciler error" "error"="no object matching key "gorush/gorush" in local store" "controller"="alb-ingress-controller" "request"={"Namespace":"gorush","Name":"gorush"}
I0206 11:08:53.731420 1 loadbalancer.go:191] gorush/gorush: creating LoadBalancer 3407a88c-gorush-gorush-f66a
I0206 11:08:54.372743 1 loadbalancer.go:208] gorush/gorush: LoadBalancer 3407a88c-gorush-gorush-f66a created, ARN: arn:aws:elasticloadbalancing:us-east-2:534***385:loadbalancer/app/3407a88c-gorush-gorush-f66a/d31b461c65a278f0
I0206 11:08:54.516572 1 targetgroup.go:119] gorush/gorush: creating target group 3407a88c-e50bdf2f1d39b9db54c
...
I0206 11:08:57.215907 1 rules.go:98] gorush/gorush: rule 1 modified with conditions [{ Field: "path-pattern", Values: ["/*"] }]
```

Check the Ingress resource:

```console
$ kubectl -n gorush get ingress
NAME     HOSTS   ADDRESS                                                                PORTS   AGE
gorush   *       internal-3407a88c-gorush-gorush-f66a-***.us-east-2.elb.amazonaws.com   80      3m57s
```

Testing the API

Let’s connect to our bastion container and install the dnsutils (for dig), dnsdiag (for dnsping) and curl packages.

To get the name of one of the Gorush pods, we can use the following command:

```console
$ kubectl -n gorush get pod | grep gorush | cut -d" " -f 1 | tail -1
gorush-59bd9dd4fc-klsbz
```

And to open a shell in its bastion container:

```console
$ kubectl -n gorush exec -ti $(kubectl -n gorush get pod | grep gorush | cut -d" " -f 1 | tail -1) -c bastion -- bash
root@gorush-59bd9dd4fc-klsbz:/#
```

Install the necessary utilities and check that our push service is working:

```console
root@gorush-59bd9dd4fc-klsbz:/# apt update && apt -y install dnsutils curl dnsdiag
root@gorush-59bd9dd4fc-klsbz:/# curl dualstack.internal-3407a88c-gorush-gorush-f66a-***.us-east-2.elb.amazonaws.com
{"text":"Welcome to notification server."}
```

Looks good so far.

Route53

Let’s check that our internal ALB resolves to private IPs, as its scheme was set to internal:

```console
root@gorush-59bd9dd4fc-klsbz:/# dig +short dualstack.internal-3407a88c-gorush-gorush-f66a-***.us-east-2.elb.amazonaws.com
10.0.15.167
10.0.23.138
```

Good.
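This check can also be automated, for the ALB name as well as for a Route53 record pointing to it. A minimal sketch using the standard library’s `net.IP.IsPrivate` (Go 1.17 or newer), which covers the RFC 1918 IPv4 ranges and RFC 4193 IPv6 addresses. Note that `net.LookupHost` also returns AAAA records, so an ALB with IPv6 enabled would fail this check even though it is internal:

```go
package main

import (
	"fmt"
	"net"
	"os"
)

// allPrivate returns an error if the list is empty or if any address
// is unparsable or outside the private ranges.
func allPrivate(addrs []string) error {
	if len(addrs) == 0 {
		return fmt.Errorf("no addresses")
	}
	for _, a := range addrs {
		ip := net.ParseIP(a)
		if ip == nil {
			return fmt.Errorf("%q is not an IP address", a)
		}
		if !ip.IsPrivate() {
			return fmt.Errorf("%s is not a private address", a)
		}
	}
	return nil
}

func main() {
	// Usage: go run main.go <hostname>
	if len(os.Args) < 2 {
		fmt.Fprintln(os.Stderr, "usage: main <hostname>")
		os.Exit(2)
	}
	host := os.Args[1]
	addrs, err := net.LookupHost(host)
	if err != nil {
		fmt.Fprintln(os.Stderr, "lookup failed:", err)
		os.Exit(1)
	}
	if err := allPrivate(addrs); err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println(host, "resolves only to private addresses:", addrs)
}
```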

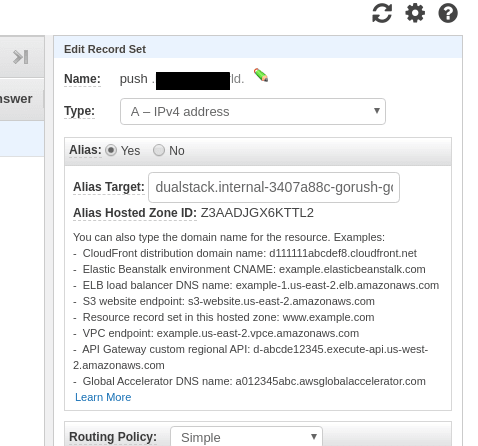

We’d like to use an own URL to access the ALB created above, so let’s go to the AWS Route53, and add a new record pointed to the ALB as usual – via an ALIAS:

Then query the new record from the bastion container. The lookup runs inside the VPC and uses the VPC’s DNS, so the domain resolves to the ALB’s private IPs:

```console
root@gorush-59bd9dd4fc-klsbz:/# dig push.example.com +short
10.0.23.138
10.0.15.167
```

And the service itself:

```console
root@gorush-59bd9dd4fc-klsbz:/# curl push.example.com
{"text":"Welcome to notification server."}
```
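With the name resolving inside the VPC, other services there can send notifications through Gorush’s POST /api/push endpoint. A minimal sketch, assuming the request format described in the Gorush README (a notifications array whose items carry tokens, platform with 1 for iOS and 2 for Android, and message) and using a hypothetical device token; it covers only a small subset of the available fields. Gorush delivers only for platforms that are enabled and configured with credentials in its config file, which the setup above does not do yet; the ConfigMap mentioned earlier is one place for that configuration:

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"os"
	"time"
)

// Platform values as described in the Gorush README (assumed): 1 = iOS, 2 = Android.
const (
	PlatformIOS     = 1
	PlatformAndroid = 2
)

// notification holds only a minimal subset of the fields Gorush accepts.
type notification struct {
	Tokens   []string `json:"tokens"`
	Platform int      `json:"platform"`
	Message  string   `json:"message"`
}

type pushRequest struct {
	Notifications []notification `json:"notifications"`
}

// buildPush encodes a request body with a single notification.
func buildPush(platform int, message string, tokens ...string) ([]byte, error) {
	return json.Marshal(pushRequest{Notifications: []notification{{
		Tokens:   tokens,
		Platform: platform,
		Message:  message,
	}}})
}

func main() {
	// Hypothetical device token: replace it with a token registered by your app.
	body, err := buildPush(PlatformAndroid, "Hello from Gorush", "DEVICE_TOKEN")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	client := &http.Client{Timeout: 10 * time.Second}
	resp, err := client.Post("http://push.example.com/api/push", "application/json", bytes.NewReader(body))
	if err != nil {
		fmt.Fprintln(os.Stderr, "request failed:", err)
		os.Exit(1)
	}
	defer resp.Body.Close()
	fmt.Println("status:", resp.Status)
}
```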

Done.
