I've written before about developing software in Docker containers. That has been a step forward for isolating development environments, but the real strength of Docker has always been running applications without having to worry about requirements or dependencies.

For managing clusters of applications we have plenty of options: Kubernetes, Docker Swarm, OpenStack and, of course, Docker Compose.

Options like Kubernetes are indispensable when operating at scale, with dozens, hundreds or even thousands of instances of multiple microservices all working together to provide a full application.

But for something like hosting a personal blog such as this one, that can be overkill. Something simpler like Docker Compose will do just fine.

```yaml
version: '3.1'

services:

  ghost:
    container_name: ghost
    image: ghost:alpine
    restart: always
    ports:
      - 127.0.0.1:2368:2368
    volumes:
      - ./content:/var/lib/ghost/content
      - ./config.production.json:/var/lib/ghost/config.production.json
```

This is a relatively simple example of a docker-compose.yml file, but it shows the important details. We specify our image, set it to restart automatically after a crash or failure, mount some files from the host file system into the container, and publish the container's port 2368 on the server itself, bound to 127.0.0.1 so it is only reachable locally.
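The port mapping is the part most worth a second look. As a rough sketch of how the short `ports` syntax reads (the commented-out alternative is not part of my file, it is only there for contrast):

```yaml
ports:
  # Format: HOST_IP:HOST_PORT:CONTAINER_PORT
  # Binding to 127.0.0.1 means only processes on the server itself
  # (such as a reverse proxy) can reach Ghost directly.
  - "127.0.0.1:2368:2368"
  # Without a host IP the port would be published on every interface:
  # - "2368:2368"
```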

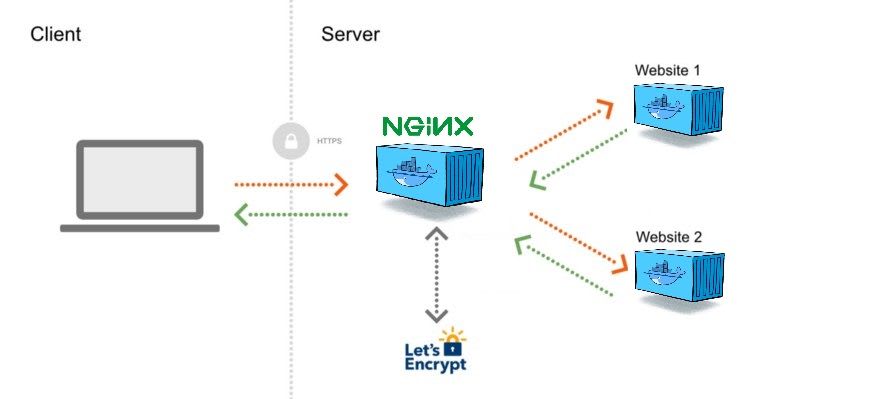

This is where we can set up a reverse proxy in nginx, but why install it when nginx is available as a docker image?

Particularly when we have the chance to use this amazing piece of work?

evertramos/nginx-proxy-automation: Automated docker nginx proxy integrated with letsencrypt.

It sits between one or more docker containers and the outside world, forwarding requests and also taking care of SSL certificates using Let's Encrypt.

To host our Ghost blog behind it, all we need to do is expand our docker-compose file and restart that container. The nginx proxy detects the container from the extra environment variables, handles the reverse proxying and requests the new SSL certificates.

```yaml
version: '3.1'

services:

  ghost:
    container_name: ghost
    image: ghost:alpine
    restart: always
    ports:
      - 127.0.0.1:2368:2368
    volumes:
      - ./content:/var/lib/ghost/content
      - ./config.production.json:/var/lib/ghost/config.production.json
    environment:
      # Note how VIRTUAL_HOST and LETSENCRYPT_HOST allow for multiple domains
      - VIRTUAL_HOST=simonreynolds.ie,www.simonreynolds.ie
      - LETSENCRYPT_HOST=simonreynolds.ie,www.simonreynolds.ie
      - LETSENCRYPT_EMAIL=REDACTED@example.com
      - MAIN_DOMAIN=simonreynolds.ie
      - VIRTUAL_PORT=2368

networks:
  default:
    external:
      name: webproxy
```

I took the extra step of creating the webproxy network myself so I can host multiple sites across different domains from just one server.
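Because the network is marked as external, Compose will not create it for you; it has to exist already, for example via `docker network create webproxy`. Also worth knowing: from Compose file format 3.5 onwards the nested `external: name:` form is deprecated in favour of a top-level `name`. A sketch of the equivalent block, assuming you bump the file version and keep the network name webproxy:

```yaml
# Equivalent declaration for Compose file format 3.5 and later
networks:
  default:
    name: webproxy
    external: true
```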

This makes spinning up small sites for testing ideas incredibly easy and fully automates SSL certificates so I never have to worry about expired certificates again!
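To give an idea of how little a second site needs, here is a minimal sketch of another docker-compose.yml joining the same webproxy network. The service name, the domain `demo.example.com`, the email address and the choice of the `nginx:alpine` image are all placeholders I've made up for illustration, so swap in your own.

```yaml
version: '3.1'

services:

  demo:
    # Hypothetical second site; any image that serves HTTP would do
    image: nginx:alpine
    restart: always
    environment:
      # Placeholder domain and email, replace with your own
      - VIRTUAL_HOST=demo.example.com
      - LETSENCRYPT_HOST=demo.example.com
      - LETSENCRYPT_EMAIL=admin@example.com
      # nginx listens on port 80 inside the container
      - VIRTUAL_PORT=80

networks:
  default:
    external:
      name: webproxy
```

There is no `ports` section here because the proxy reaches the container over the shared network, so nothing needs to be published on the host.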
