This post continues our journey of statically checked SQL schemas in F# land. We're still using SQLProvider, still on .NET Core 3.1, and still living in CLI world with our console app. All the code lives in a public GitLab repo here. This post starts from this commit, and all the work is contained in MR#3. My previous post (F# SQLProvider Pipeline on GitLab Kubernetes Runners) refined our CI pipeline to run on a private k8s cluster instead of GitLab's shared runners which will let us iterate faster in this post.

So, for today, we want to dockerize our application and build out our pipeline to run docker build on every commit. Once the pipeline succeeds, we should merge in and tag the freshly minted code to cut a full release.

Now, because we strictly enforce our schema in a docker container - with our glorious SQLProvider - we should be aware that we may have some bumps in the road. The command we'll be issuing that could cause such bumps is dotnet publish since that runs the compiler and subsequently our SQLProvider. Let's just try a simple Dockerfile and iterate - #agile.

# use 3.1 LTS dotnet SDK image

FROM mcr.microsoft.com/dotnet/core/sdk:3.1-alpine AS compiler

WORKDIR /app

# add everything; .dockerignore will filter files and folders

ADD . .

# run publish!

RUN dotnet publish -f netcoreapp3.1 -o dist

# switch to a runtime image w/out full sdk

FROM mcr.microsoft.com/dotnet/core/runtime:3.1-alpine AS runtime

WORKDIR /app

# copy only the compiled dlls

COPY --from=compiler /dist .

# setup entrypoint to run the app

ENTRYPOINT ["dotnet", "test-sql-provider.dll"]

If you run this, you'll get an error along the lines of Exception while connecting which basically means our dotnet publish command, in our local docker daemon, could not find a postgre db to connect to. Luckily the docker build docs ( docker build --help ) show us a neat little option: --network. We want to use host networking to expose our postgres db or service. So now, locally, we can run docker build --network host -t test . and we'll get a docker image built! Yay!

Now, in order to run our container locally, you'll also need that --network host option: docker run -it --rm --network host your-image-tag. Now your docker container will be able to connect to your local database!

CI - The Real Deal

So it works locally - will it work in GitLab's CI?

Well... almost - in order to get a docker image built in our GitLab CI, we'll need to choose how to do so. In this post I'm opting for the Kaniko route but there is also the Docker-In-Docker route, although that route requires privileged containers. If at all possible, you should avoid using privileged containers.

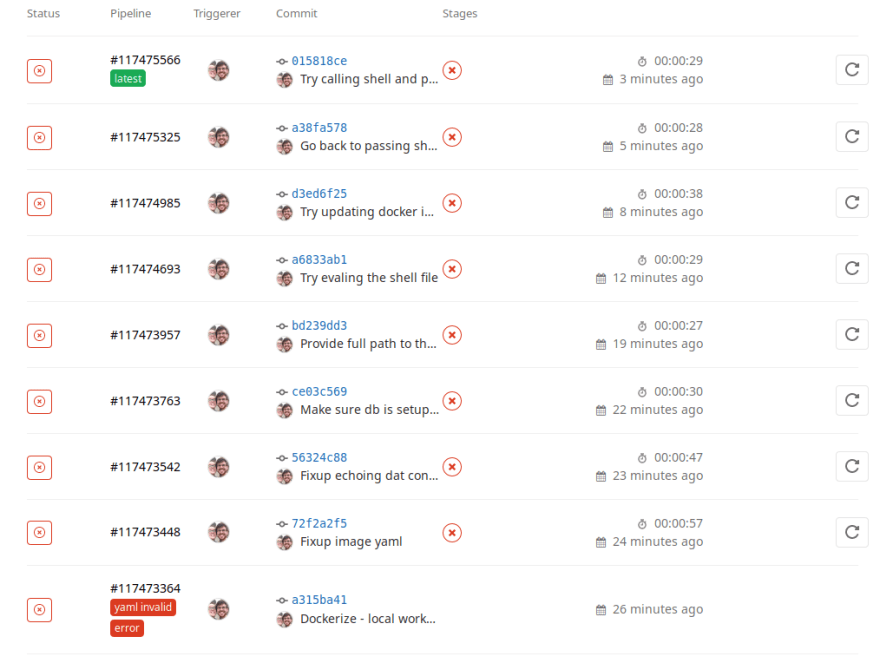

Now, even after choosing kaniko, going through the docs, and getting valid yaml - the road was uh, a little bumpy:

So I'll spare you my pain and jump to explaining Kaniko.

Kaniko is a google project for building OCI images with a plain binary instead of the docker daemon. It's an attempt at being a portable docker build. The reason this is useful is exactly our use case - we have a private CI fleet in k8s and would like to build docker images in a CI job without a privileged container.

That all sounds great - but the one gotcha I ran into is no matter what I did, _ I couldn't run _ .sh _ scripts in a kaniko container _. If you try, you'll likely get something like this:

docker run --entrypoint "" -it --rm gcr.io/kaniko-project/executor:debug sh

$ vi test.sh

$ chmod +x test.sh

$ cat test.sh

#!/bin/sh

echo 'hey'

# ./test.sh

sh: test.sh: not found

$ sh -c test.sh

sh: test.sh: not found

If you know anything about this... drop a comment or @ me on twitter - I would very much like to know why kaniko can't handle shell scripts. I know that the debug tag of the kaniko image has a shell and otherwise it just drops you into the kaniko executor, so it could have something to do with that - but I'm at a loss.

Why would we need to execute a shell script you ask? Well, to seed our database of course. But where there's a will there's a way! Instead of seeding our database in our CI pipeline with a script like we did , we could just create our own image that puts our schema into the containerized postgres db on startup. So that's what we'll do!

FROM postgres:12.1-alpine

RUN echo "CREATE SCHEMA IF NOT EXISTS test AUTHORIZATION test_app;" > /docker-entrypoint-initdb.d/setup.sql

RUN mkdir /sql

ADD schema.sql /sql/schema.sql

RUN cat /sql/schema.sql >> /docker-entrypoint-initdb.d/setup.sql

It's a pretty simple Dockerfile, basically just puts our schema in a special init folder. Postgres has documented environment variables to set up the test_app user and password, so make sure you set those if you try to pull this image down.

Now that we have a 'seeded' db of sorts (not seeded with data of course, just the schema) - we can use it in our pipelines!

GitOps Release

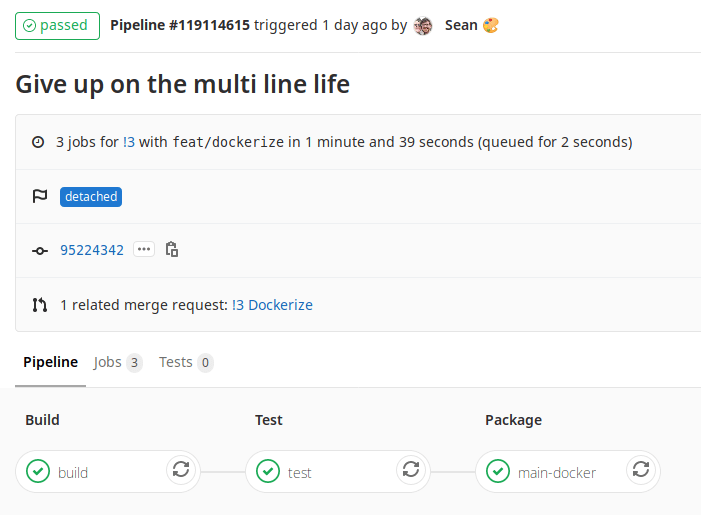

Eventually, we will want a release pipeline, and versioning, so we can go ahead and get that set up with tag builds for both the db image and our main app image. I also added some sweet tests so the build would be doing a little bit more than just compilation. I decided to squash the dotnet build and dotnet test steps into one build to shave some time. In this case, I think it makes sense to have them as steps in the same job as opposed to different jobs, but your case may vary.

- Tagged release of App's docker image

- Tagged release of db docker image

- We also have pre-releases, tagged with the commit SHAs

This was far trickier than I thought at first, and if you look at the whole pipeline history on my GitLab repo and my commit history, you'll see the experiments I was playing with (multi-lines, buildah, getting kaniko to work right, services, seeding the db...). But I sure did learn a lot! Before this adventure I didn't know about the network flag, kaniko, buildah, or how an F# SQLProvider project could be dockerized.

Conclusion

I think what I have now is quite stable. I feel comfortable with the tests I banged out in this merge request, and now that we are dockerized we can deploy our cat db app anywhere. Also, this MR landed a DB_CONN_STR environment variable, so as long as we add that --network host to docker run, we can pass this env var with the -e flag to connect our image to any database with the schema it was compiled against - and if we wanted to just pull that down too we have an image with the schema pre-baked. Nice!

In the next few posts we can now explore:

- Adding an API layer on top of our core logic

- Adding a front end maybe?

- Building out the pipeline to put the pre-release docker images through the paces (actually run integration tests on the docker image)

- Switch from rebuild to retag on a release build somehow

- Add more advanced db features to showcase the power of F#'s SQLProvider

- Add more useful tests (FsCheck tests are already very powerful for our helper layer)

- Migrate this kind of strategy to a 'real' project (like my trakr project)

See y'all soon!

Top comments (0)