In the previous article, we went over key terms that come up often when working with LLMs and are worth knowing firsthand.

We also covered Function Calling, which is central to modern LLMs, AI, and agents.

However, it seems that the concept of Function Calling (Tool Calling) isn't as widely understood as one might expect.

Therefore, I decided to summarize the concept of Function Calling clearly.

```javascript
import { OpenAI } from "openai";

const openai = new OpenAI({ apiKey: "YOUR_API_KEY" });

async function callFunction() {
  const response = await openai.chat.completions.create({
    model: "gpt-4o",
    messages: [
      { role: "user", content: "Tell me the current time." }
    ],
    // Functions the LLM is allowed to choose from
    functions: [
      {
        name: "get_current_time",
        description: "Returns the current time.",
        // No arguments: an object schema with no properties
        parameters: { type: "object", properties: {} },
      },
    ],
    function_call: "auto",
  });

  // function_call is only present when the LLM chose to call a function
  const functionCall = response.choices[0].message.function_call;
  if (functionCall) {
    console.log("Function called by LLM:", functionCall.name);
  }
}

callFunction();
```

If you've read the previous article, this code snippet might look familiar.

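When you send a message to an LLM along with a function list, its response contains a message and, optionally, the function it wants to call. The LLM does not run the function itself; it only names it, and your code does the actual calling.

If the `function_call` option is set to `auto`, the LLM may choose not to call a function. To force a call, either name a specific function with `function_call: { name: "get_current_time" }`, or use the newer `tools` parameter, where `tool_choice: "required"` means it must always call one.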
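For comparison, here is a rough sketch of how the same request could be expressed with the newer `tools` and `tool_choice` parameters. The `toTool` helper is a hypothetical name made up for this example, and the request body is only built, not sent, so it runs without an API key.

```javascript
// Hypothetical helper: wraps a legacy `functions` entry in the `tools` shape.
function toTool(fn) {
  return { type: "function", function: fn };
}

const legacyFunctions = [
  {
    name: "get_current_time",
    description: "Returns the current time.",
    parameters: { type: "object", properties: {} },
  },
];

// Request body for chat.completions.create, built here but not sent.
const requestBody = {
  model: "gpt-4o",
  messages: [{ role: "user", content: "Tell me the current time." }],
  tools: legacyFunctions.map(toTool),
  // "required" forces a call; "auto" would let the LLM answer in plain text.
  tool_choice: "required",
};

console.log(JSON.stringify(requestBody.tools[0], null, 2));
```

With this style of request, the chosen calls come back in `message.tool_calls` (an array) rather than `message.function_call`.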

So how do we get the function arguments in this case?

This is actually the most important part. It's pretty rare to call a function without providing any arguments at all.

So typically, we ask the LLM to fill in these argument values for us.

To guide the LLM on how to fill them, we provide it with a function list in which each function's parameters are defined with JSON Schema. (A JSON Schema is a JSON document that describes exactly how a JSON object should be structured.) The LLM then returns the arguments as a JSON string that is meant to follow that schema.

```javascript
import { OpenAI } from "openai";

const openai = new OpenAI({ apiKey: "YOUR_API_KEY" });

async function callFunction() {
  const response = await openai.chat.completions.create({
    model: "gpt-4o",
    messages: [
      { role: "user", content: "What's the weather like in Seoul?" }
    ],
    functions: [
      {
        name: "get_weather",
        description: "Returns the current weather information for a given city.",
        // JSON Schema describing the arguments the LLM should fill in
        parameters: {
          type: "object",
          properties: {
            city: {
              type: "string",
              description: "Name of the city to retrieve weather information for."
            }
          },
          required: ["city"]
        }
      }
    ],
    function_call: "auto",
  });

  const functionCall = response.choices[0].message.function_call;
  if (functionCall) {
    console.log("Function called by LLM:", functionCall.name);
    // The arguments arrive as a JSON string, so parse them before use
    const args = JSON.parse(functionCall.arguments);
    const result = get_weather(args.city);
    console.log(`City: ${args.city}, Weather: ${result}`);
  }
}

function get_weather(city) {
  // Dummy data returned instead of a real API call
  const weatherData = {
    "Seoul": "Sunny, 15°C",
    "New York": "Cloudy, 8°C",
    "Tokyo": "Rainy, 12°C"
  };
  return weatherData[city] || "Weather information not found.";
}

callFunction();
```
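Once there is more than one function, the `if (functionCall)` branch usually grows into a small dispatcher. The sketch below is one possible shape, not a fixed pattern: `handlers` and `dispatch` are names invented for this example, and the `function_call` object is faked so the code runs without calling the API. It also guards against the arguments string not being valid JSON, which can happen because the LLM generates it.

```javascript
// Local implementations, keyed by the name declared in the function list.
// The weather data is the same dummy data as above.
const handlers = {
  get_weather: ({ city }) => {
    const weatherData = { "Seoul": "Sunny, 15°C", "New York": "Cloudy, 8°C" };
    return weatherData[city] || "Weather information not found.";
  },
};

// Takes the `function_call` object from a response and runs the matching handler.
function dispatch(functionCall) {
  const known = Object.prototype.hasOwnProperty.call(handlers, functionCall.name);
  if (!known) {
    return { ok: false, error: `Unknown function: ${functionCall.name}` };
  }
  let args;
  try {
    args = JSON.parse(functionCall.arguments || "{}");
  } catch (err) {
    return { ok: false, error: "Arguments were not valid JSON" };
  }
  if (args === null || typeof args !== "object") {
    return { ok: false, error: "Arguments must be a JSON object" };
  }
  return { ok: true, result: handlers[functionCall.name](args) };
}

// Faked function_call, shaped like response.choices[0].message.function_call
console.log(dispatch({ name: "get_weather", arguments: '{"city":"Seoul"}' }));
```

Returning an `{ ok, error }` object instead of throwing keeps one bad call from crashing the whole loop.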

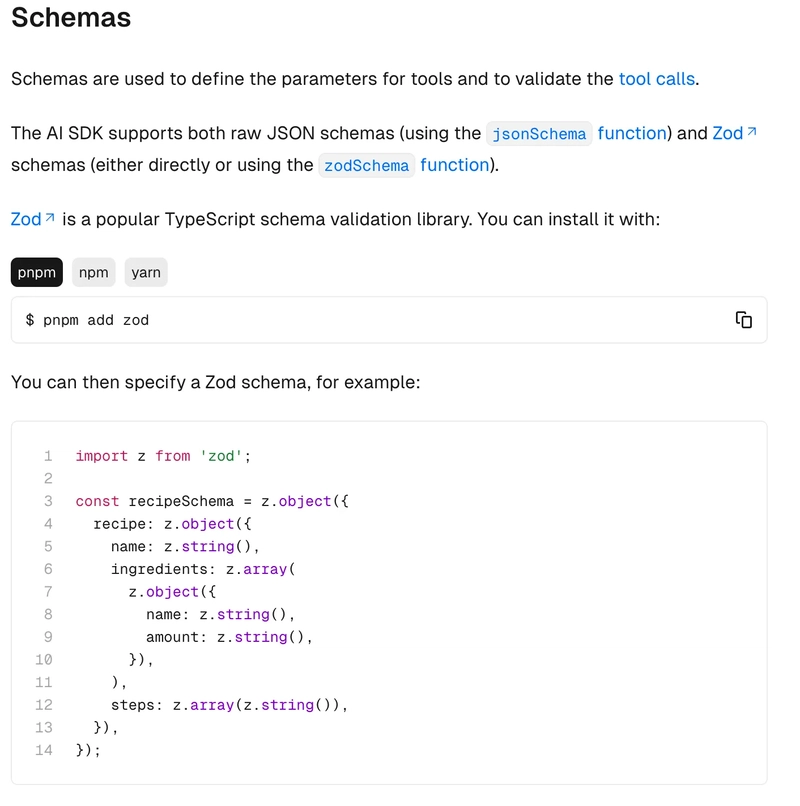

https://sdk.vercel.ai/docs/foundations/tools#schemas

And that's the story of modern Function Calling, up until today.

Then... what exactly can we do with this?

Well, you can build the kind of tools many of us use every day, such as Cline, Continue, Windsurf, or Cursor.

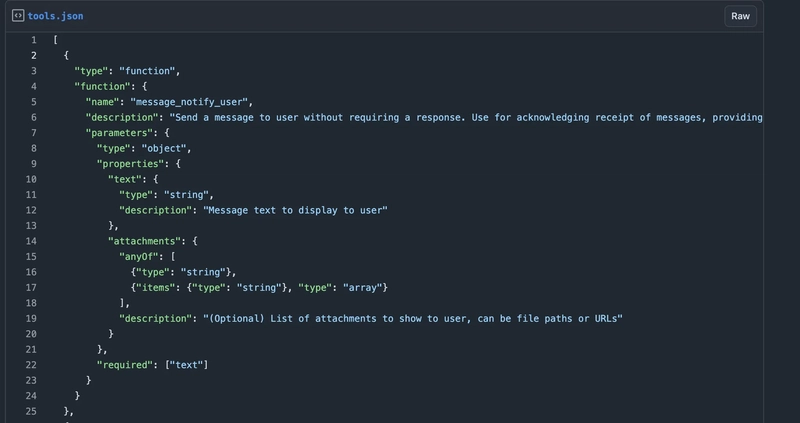

Here's a leaked prompt from a service called Manus:

https://gist.github.com/jlia0/db0a9695b3ca7609c9b1a08dcbf872c9

As you can see, they're enthusiastically providing a bunch of functions. All these details are fed into the LLM under the tools property.

In short, by using Function Calling, you can create all those amazing services mentioned above.

Now, you might think: "Okay, I get Function Calling, but how do we handle file modifications?"

This is also simple—just declare a function that the LLM can understand.

Instead of rewriting an entire file, you let the LLM rewrite only certain portions of the code—exactly like how we, humans, modify code bit by bit.

This is much cleaner, easier, and less error-prone.
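As a rough illustration, a partial-edit function could be declared and applied like this. Everything here is an assumption made for the example: the `replace_in_file` name, its three parameters, and the rule that the search text must match exactly once are not taken from any of the services mentioned above. The edit is applied to a string in memory so the sketch stays self-contained.

```javascript
// Hypothetical tool definition the LLM would see in the function list.
const replaceInFileTool = {
  name: "replace_in_file",
  description: "Replaces one exact occurrence of a text snippet in a file.",
  parameters: {
    type: "object",
    properties: {
      path: { type: "string", description: "Path of the file to edit." },
      search: { type: "string", description: "Exact text to find. Must occur exactly once." },
      replace: { type: "string", description: "Text to put in its place." },
    },
    required: ["path", "search", "replace"],
  },
};

// Applies the edit to file contents held in memory.
// slice() is used instead of String.replace so "$" in the new text stays literal.
function applyReplace(content, search, replace) {
  if (search === "") return { ok: false, error: "search must not be empty" };
  const first = content.indexOf(search);
  if (first === -1) return { ok: false, error: "search text not found" };
  if (content.indexOf(search, first + 1) !== -1) {
    return { ok: false, error: "search text is ambiguous" };
  }
  const updated = content.slice(0, first) + replace + content.slice(first + search.length);
  return { ok: true, content: updated };
}

const source = "const port = 3000;\nconsole.log(port);\n";
const edited = applyReplace(source, "3000", "8080");
console.log(edited.ok ? edited.content : edited.error);
```

Rejecting missing or ambiguous matches gives the LLM a clear error it can react to, instead of silently editing the wrong spot.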

But honestly, writing JSON Schema manually like this is just too much…

Writing each schema by hand is tedious, or as we'd casually put it, "annoying."

It's also easy to make mistakes when doing it by hand, and your schemas can quietly drift away from what the code actually accepts without you even noticing.

Think about Swagger—same issue there.

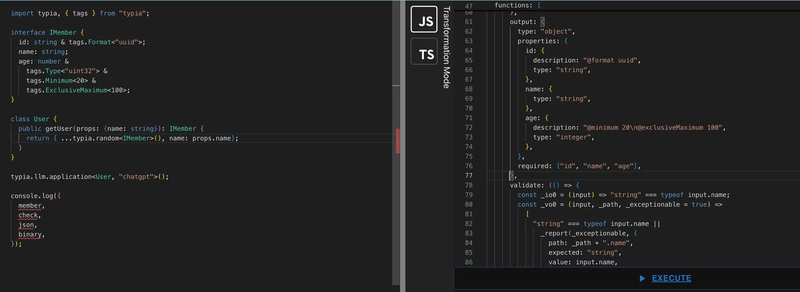

That's exactly why I prefer Typia over Zod.

Here's an example of how effortlessly Typia handles this:

Not only does it easily generate JSON Schemas like the example above, it even creates validation functions to verify your outputs.
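To make the validation idea concrete, here is a hand-written sketch of the kind of check such a validator performs on the arguments an LLM returns. This is not Typia's API: `validateArgs` is a name invented for this example, and it only understands flat object schemas whose properties are `string`, `number`, or `boolean`.

```javascript
// Same parameter schema as the get_weather example.
const weatherParameters = {
  type: "object",
  properties: { city: { type: "string" } },
  required: ["city"],
};

// Minimal checker for flat schemas with string/number/boolean properties.
function validateArgs(schema, args) {
  if (args === null || typeof args !== "object" || Array.isArray(args)) {
    return { success: false, errors: ["arguments must be an object"] };
  }
  const errors = [];
  // Every required key must be present.
  for (const key of schema.required || []) {
    if (!(key in args)) errors.push(`missing required property: ${key}`);
  }
  // Every present key must have the declared primitive type.
  for (const [key, rule] of Object.entries(schema.properties || {})) {
    if (key in args && typeof args[key] !== rule.type) {
      errors.push(`${key} should be ${rule.type}, got ${typeof args[key]}`);
    }
  }
  return { success: errors.length === 0, errors };
}

console.log(validateArgs(weatherParameters, { city: "Seoul" }));
console.log(validateArgs(weatherParameters, { city: 42 }));
```

The error list is useful beyond logging: it can be sent back to the LLM so it can correct its arguments and try again.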

But still, even with tools like this, building an agent from scratch can feel pretty tedious—unless you're someone who genuinely enjoys coding. Some people just want to quickly experiment or try out ideas without all the hassle.

That's exactly why our team is developing a library called Agentica:

https://github.com/wrtnlabs/agentica

Right now, we're thinking hard about one question: "How can we make building agents as simple and intuitive as possible?"

Our goal is to make it accessible, whether you're a backend developer, frontend developer, or even someone completely outside of LLM-specific roles—so anyone can easily dive in and create their own agents.
