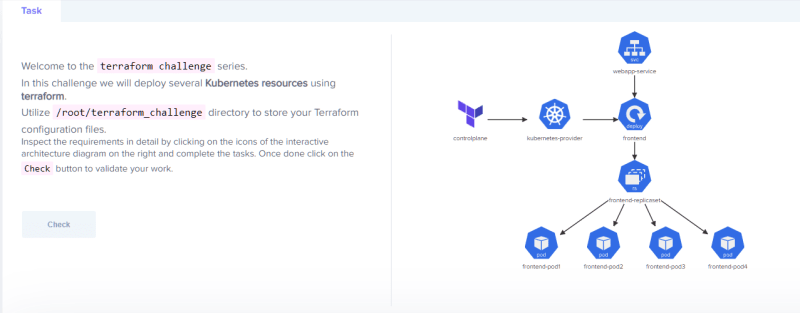

In this exercise, I deploy an application to a Kubernetes cluster using Terraform infrastructure-as-code, then move the Terraform state to an AWS S3 bucket used as a remote backend, with state locking in an AWS DynamoDB table.

This exercise is part of the Terraform challenge administered by KodeKloud. All credits to kodekloud.com for the design and structure of the exercise.

Architecture

* A Kubernetes application deployed with 4 replicas.

Task List

Infrastructure-As-Code (Terraform file)

```hcl
terraform {
  required_providers {
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = "2.11.0"
    }
  }
}

provider "kubernetes" {
  # config_path    = "~/.kube/config"
  # config_context = "docker-desktop"
  config_path    = "/root/.kube/config"
  config_context = "kubernetes@kubernetes_admin"
}

## Service
resource "kubernetes_service" "webapp-service" {
  metadata {
    name = "webapp-service"
  }

  spec {
    selector = {
      name = "webapp"
    }

    port {
      port      = 8080
      node_port = 30080
    }

    type = "NodePort"
  }
}

## Deployment
resource "kubernetes_deployment_v1" "frontend" {
  metadata {
    name = "frontend"
    labels = {
      name = "frontend"
    }
  }

  spec {
    replicas = 4

    selector {
      match_labels = {
        name = "webapp"
      }
    }

    template {
      metadata {
        labels = {
          name = "webapp"
        }
      }

      spec {
        container {
          image = "kodekloud/webapp-color:v1"
          name  = "simple-webapp"

          port {
            container_port = 8080
            protocol       = "TCP"
          }
        }
      }
    }
  }
}
```
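The service exposes the application on node port 30080, so after `terraform apply` it should be reachable in a browser on that port. As a rough sketch, an output like the one below could print the address. The output name `webapp_url` is hypothetical, and `localhost` is an assumption that only fits a local cluster such as docker-desktop; for a remote cluster, substitute the address of a node.

```hcl
# Hypothetical output, not part of the original configuration.
# Assumes the cluster node is reachable on localhost (e.g. docker-desktop).
output "webapp_url" {
  description = "Address of the webapp NodePort service (host is an assumption)"
  value       = "http://localhost:${kubernetes_service.webapp-service.spec[0].port[0].node_port}"
}
```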

Displaying in browser - both local and remote deployments

Create an AWS S3 bucket for a remote backend and a DynamoDB table for state locking

I created an S3 bucket in AWS to hold the remote state and enabled versioning on it, so that an earlier state file can be restored if a rollback is needed for disaster recovery. I also created a DynamoDB table for state locking. Its partition key must be named "LockID", of type String (the spelling is exact and case-sensitive).

Reconfigure the Terraform configuration to use the S3 bucket as a remote backend
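The backend configuration assumes that the bucket and the lock table already exist. If you would rather create them with Terraform than in the AWS console, a minimal sketch could look like the following. It is meant to live in a separate configuration with its own state, since a backend cannot create the bucket it stores its state in. The bucket and table names are the ones used in the backend block of this post; the resource labels (`remote_backend`, `state_locking`) and the on-demand billing mode are assumptions made for illustration.

```hcl
# Sketch only: the resource labels and billing mode are assumptions.
# Apply this from a separate configuration before switching the backend.

# Bucket that will hold terraform.tfstate
resource "aws_s3_bucket" "remote_backend" {
  bucket = "tenon-remote-backend"
}

# Keep earlier versions of the state file so a rollback is possible
resource "aws_s3_bucket_versioning" "remote_backend" {
  bucket = aws_s3_bucket.remote_backend.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Lock table: the partition (hash) key must be exactly "LockID", type String
resource "aws_dynamodb_table" "state_locking" {
  name         = "tenon_state_locking"
  billing_mode = "PAY_PER_REQUEST" # assumption: on-demand capacity
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```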

Updated Configuration file:

```hcl
terraform {
  required_providers {
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = "2.11.0"
    }
    aws = {
      source  = "hashicorp/aws"
      version = "4.49.0"
    }
  }

  backend "s3" {
    bucket         = "tenon-remote-backend"
    key            = "terraform.tfstate"
    region         = "us-east-1"
    dynamodb_table = "tenon_state_locking"
  }
}

provider "kubernetes" {
  config_path    = "~/.kube/config"
  config_context = "docker-desktop"
  # config_path    = "/root/.kube/config"
  # config_context = "kubernetes@kubernetes_admin"
}

provider "aws" {
  shared_config_files      = ["~/.aws/config"]
  shared_credentials_files = ["~/.aws/credentials"]
  profile                  = "iamadmin-general"
}

## Service
resource "kubernetes_service" "webapp-service" {
  metadata {
    name = "webapp-service"
  }

  spec {
    selector = {
      name = "webapp"
    }

    port {
      port      = 8080
      node_port = 30080
    }

    type = "NodePort"
  }
}

## Deployment
resource "kubernetes_deployment_v1" "frontend" {
  metadata {
    name = "frontend"
    labels = {
      name = "frontend"
    }
  }

  spec {
    replicas = 4

    selector {
      match_labels = {
        name = "webapp"
      }
    }

    template {
      metadata {
        labels = {
          name = "webapp"
        }
      }

      spec {
        container {
          image = "kodekloud/webapp-color:v1"
          name  = "simple-webapp"

          port {
            container_port = 8080
            protocol       = "TCP"
          }
        }
      }
    }
  }
}
```

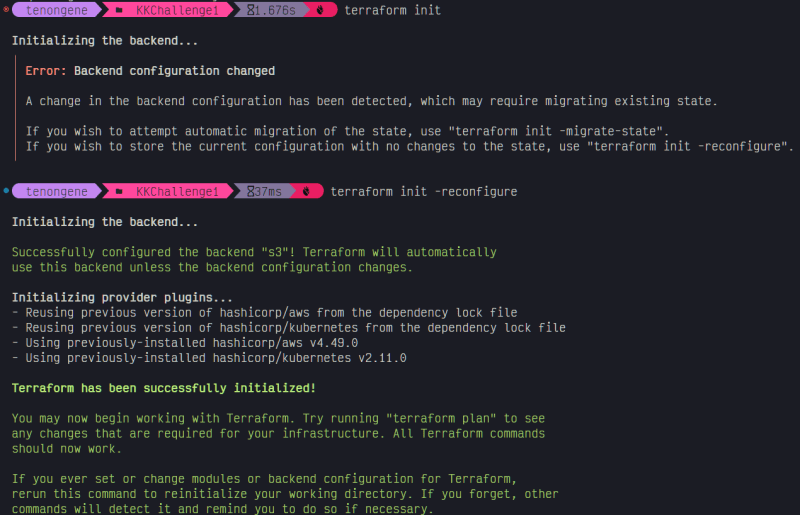

Because the backend has changed, Terraform has to be re-initialized with `terraform init -reconfigure`.

The state was refreshed successfully, and Terraform now uses the remote S3 bucket as its state backend, with state locking via the DynamoDB table.
