Manual Testing: The Essential First Step

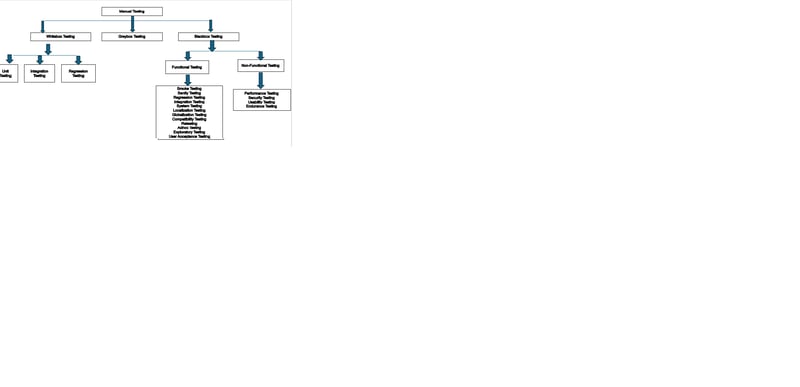

Manual testing, a fundamental quality assurance practice, relies on human testers to evaluate software. Following predefined test cases, testers assess the product from a user's perspective, identifying defects and providing detailed reports. It falls into three types: white-box testing, black-box testing, and grey-box testing.

White-box testing examines the software's internal structure. Developers analyze code, execution paths, and data flow to check accuracy and security. Unit testing is the most common white-box technique; integration and regression tests can also be designed with knowledge of the code. The advantage of white-box testing is early error detection, which reduces post-release issues. However, it requires programming knowledge and can introduce bias, since the people testing the code are often the ones who wrote it. Understanding these trade-offs is vital for effective manual testing.

Black-box testing focuses on application behavior without requiring knowledge of the internal implementation. Testers identify bugs by evaluating software from a user's perspective. Functional testing verifies that the software performs its intended tasks. Smoke testing checks basic functionality, while regression testing checks for unintended impacts from updates. Sanity testing validates minor changes, and system testing evaluates end-to-end compliance. User acceptance testing confirms that customer requirements are met. Globalization testing checks multi-regional compatibility, and localization testing checks compatibility with a selected region. Exploratory and ad-hoc testing uncover hidden issues. Non-functional testing covers performance, load, stress, volume, and endurance testing.

Grey-box testing combines black-box and white-box techniques, offering a balanced approach. By understanding both external behavior and some internal structure, testers enhance product quality and identify a wider range of defects. This hybrid method bridges the gap between user experience and code functionality.

Boundary Value Analysis: Pinpointing Errors at the Edge

Boundary Value Analysis (BVA) is a crucial black-box testing technique that focuses on identifying errors at the edges of input value ranges. By concentrating on these boundaries, testers can uncover potential defects early in the development cycle.

Consider a system that accepts exam marks between 0 and 100, inclusive. BVA would test values precisely at these boundaries (0 and 100), as well as values just inside and outside the range (-1, 1, 99, and 101). This targeted approach ensures that the system handles extreme inputs correctly.

Boundary Values:

Lower boundary: 0

Upper boundary: 100
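The exam-marks example can be sketched in a few lines of Python. This is only an illustration, not a prescribed implementation: `is_valid_mark` is a hypothetical stand-in for the system under test, and `boundary_values` and `EXPECTED` are names invented here to hold the six inputs and the results a tester would expect.

```python
def boundary_values(lower: int, upper: int) -> list:
    """Return the six classic BVA inputs for an inclusive integer range."""
    return [lower - 1, lower, lower + 1, upper - 1, upper, upper + 1]


def is_valid_mark(mark: int) -> bool:
    """Hypothetical system under test: accepts marks from 0 to 100 inclusive."""
    return 0 <= mark <= 100


# Expected results, written out by hand so they are independent of the code
# being tested (an assumption based on the 0-100 inclusive rule).
EXPECTED = {-1: False, 0: True, 1: True, 99: True, 100: True, 101: False}


def run_bva() -> list:
    """Return the boundary inputs whose actual result differs from EXPECTED."""
    return [
        value
        for value in boundary_values(0, 100)
        if is_valid_mark(value) != EXPECTED[value]
    ]


if __name__ == "__main__":
    failures = run_bva()
    print("failing boundary inputs:", failures)
```

If the validator had been written with `<` instead of `<=`, the inputs 0 or 100 would show up in the failure list, which is exactly the kind of off-by-one defect BVA is meant to catch.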

One of the key advantages of BVA is its effectiveness in identifying boundary-related issues. By focusing on these critical points, testers can provide comprehensive test coverage for values that are statistically more likely to cause errors. This targeted approach is also cost-effective, as it efficiently uncovers significant defects. Furthermore, BVA is accessible to both experienced and novice testers, making it a valuable tool for any testing team.

However, BVA has limitations. It primarily addresses boundary-related defects and may overlook issues occurring within the input domain. For systems with numerous inputs, creating test cases can become complex and time-consuming. Additionally, BVA may not cover all possible scenarios, highlighting the need for complementary testing techniques. Despite these limitations, BVA remains a vital component of a robust testing strategy, ensuring software reliability and quality.

Decision Table Testing: Mapping Complex Scenarios for Robust Software

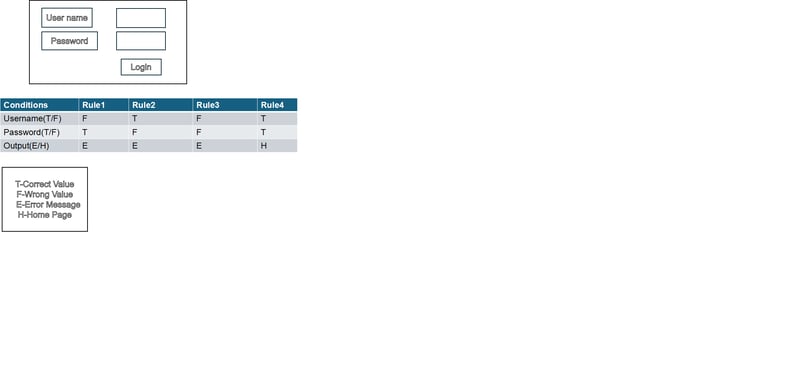

Decision Table Testing is a powerful black-box testing technique that excels at navigating complex business logic by representing it in tabular format. It systematically maps input conditions to their corresponding outcomes, ensuring comprehensive test coverage.

This method is particularly valuable when dealing with scenarios involving multiple dependencies and intricate rules. At the heart of Decision Table Testing lie three key components: inputs, outputs, and rules. Rules, which are sets of conditions leading to specific actions, form the core of the table.

Consider a login functionality check as an example of a decision table.
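A login decision table might be expressed in code roughly as follows. The two conditions (valid username, valid password), the outcome strings, and the `login_outcome` function are all assumptions made for illustration; a real table would come from the actual login requirements.

```python
from itertools import product

# Hypothetical decision table: each rule maps
# (username_valid, password_valid) to the expected action.
DECISION_TABLE = {
    (True, True): "grant access",
    (True, False): "show error",
    (False, True): "show error",
    (False, False): "show error",
}


def login_outcome(username_valid: bool, password_valid: bool) -> str:
    """Hypothetical system under test."""
    if username_valid and password_valid:
        return "grant access"
    return "show error"


def missing_rules(table: dict) -> list:
    """Return condition combinations that the table does not cover."""
    return [c for c in product([True, False], repeat=2) if c not in table]


def failing_rules(table: dict) -> list:
    """Return rules where the system's action differs from the table."""
    return [c for c, expected in table.items() if login_outcome(*c) != expected]


if __name__ == "__main__":
    print("uncovered combinations:", missing_rules(DECISION_TABLE))
    print("failing rules:", failing_rules(DECISION_TABLE))
```

Two conditions give four rules; each additional yes/no condition doubles the number of combinations, which is why large tables become hard to manage.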

The advantages of this approach are significant. It provides complete test coverage of all input combinations, minimizing the risk of overlooked scenarios. Moreover, it simplifies the identification of missing or conflicting business rules, enhancing the overall quality of the software specification.

However, Decision Table Testing is not without its challenges. For large systems with numerous inputs and rules, the complexity of the table can become overwhelming. Furthermore, the process of creating and executing these tables can be time-consuming. Nonetheless, when applied strategically, Decision Table Testing proves to be an invaluable tool for ensuring software reliability and accuracy in complex environments.

Human Touch in a Digital Age: The Future of Manual Testing

The world of software testing is rapidly changing, driven by the rise of AI and automation. But does this mean the end of manual testing? Absolutely not. Instead, it signals a transformation. The future isn't a battle between humans and machines, but a collaboration. Manual testers are adapting to the changes, combining their unique human insights with the power of AI.

Let's think about an AI-powered image recognition app. Automated tests can confirm whether it identifies objects, but they can't tell how the app feels to use. That is where manual testers come in. They evaluate the user experience: Is the interface intuitive? Are the labels accurate in complex situations? Can it handle unexpected inputs gracefully? For example, a tester might discover that the app confuses a "small dog" with a "cat" in dim lighting, a subtle error automated tests might miss.

Manual testers will specialize in areas where AI falls short: exploratory testing, usability, and those tricky edge cases. They'll validate AI-generated test cases, ensuring they reflect real-world user behavior. And they'll provide essential feedback on the "human" aspects of software: accessibility, cultural nuances, and overall user satisfaction.

To thrive in this new landscape, manual testers need to expand their skill sets. Understanding AI concepts and learning to work with AI-powered tools is crucial. They'll become experts in recognizing AI's limitations, using their judgment to bridge the gaps. By embracing this evolution, manual testers will remain indispensable in ensuring software quality, bringing the essential human touch to an increasingly automated world.

Let's consider Google Pay as an example. GPay uses AI for fraud detection and personalized offers. Automated tests verify transaction security and data accuracy. Manual testers evaluate user experience: Is the interface intuitive for first-time users? Are personalized offers relevant and timely? Testers check for accessibility, ensuring users with disabilities can navigate the app. They also test edge cases, like unusual transaction patterns, to find AI logic flaws. Manual testing ensures GPay is not only secure and functional, but also user-friendly and accessible, enhancing trust and satisfaction beyond what automated tests can achieve.
