Scheduled auto-scaling to zero with a Lambda Go client

A seamless integration of a Go Lambda function using the Kubernetes client, EventBridge, and EKS, all deployed with Terraform, for scheduled, cost-effective scaling in development environments.


Introduction

In this piece, we will look at a solution designed to improve DevOps efficiency and reduce costs in development environments. Using AWS services, we will explore how a Go-based Lambda function, triggered by an EventBridge scheduler, can scale the deployments in selected EKS Fargate namespaces down to zero. The strategy is not limited to Fargate: it also works with Cluster Autoscaler or Karpenter, because once the pods are gone the autoscaler can remove the idle nodes. Lowering resource usage outside working hours this way cuts costs with little operational effort. We will also walk through a streamlined way of deploying this Lambda function with Terraform, an infrastructure-as-code approach that keeps the setup consistent and easy to manage.

The Golang Lambda Function - A Deep Dive

The heart of this solution is a simple Go-based Lambda function that scales the deployments in specific namespaces of an EKS cluster down to zero and back up on a schedule. Here’s a closer look at how this function operates.

Our Lambda function is crafted in Go, a language known for its efficiency and suitability for concurrent tasks, making it a perfect choice for cloud-native applications.

```go
package main

import (
	"context"
	"encoding/json"
	"os"

	"github.com/aws/aws-lambda-go/lambda"
	eksauth "github.com/chankh/eksutil/pkg/auth"
	log "github.com/sirupsen/logrus"
	autoscalingv1 "k8s.io/api/autoscaling/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/client-go/kubernetes"
)

// Payload is the JSON input sent by the EventBridge schedules.
type Payload struct {
	ClusterName string   `json:"clusterName"`
	Namespaces  []string `json:"namespaces"`
	Replicas    int32    `json:"replicas"`
}

func main() {
	// Set ENV=DEBUG on the function to enable debug logging.
	if os.Getenv("ENV") == "DEBUG" {
		log.SetLevel(log.DebugLevel)
	}
	lambda.Start(handler)
}

func handler(ctx context.Context, payload Payload) (string, error) {
	// Build a Kubernetes clientset authenticated with the function's IAM role.
	cfg := &eksauth.ClusterConfig{
		ClusterName: payload.ClusterName,
	}
	clientset, err := eksauth.NewAuthClient(cfg)
	if err != nil {
		log.WithError(err).Error("Failed to create EKS client")
		return "", err
	}

	// Record every successfully scaled deployment as "namespace/name".
	scaled := make(map[string]int32)
	for _, ns := range payload.Namespaces {
		deployments, err := clientset.AppsV1().Deployments(ns).List(ctx, metav1.ListOptions{})
		if err != nil {
			log.WithError(err).Errorf("Failed to list deployments in namespace %s", ns)
			continue // skip this namespace and keep processing the others
		}
		for _, deploy := range deployments.Items {
			if err := scaleDeploy(ctx, clientset, ns, deploy.Name, payload.Replicas); err == nil {
				scaled[ns+"/"+deploy.Name] = payload.Replicas
			}
		}
	}

	scaledJSON, err := json.Marshal(scaled)
	if err != nil {
		log.WithError(err).Error("Failed to marshal scaled deployments to JSON")
		return "", err
	}
	log.Info("Scaled Deployments: ", string(scaledJSON))
	return "Scaled Deployments: " + string(scaledJSON), nil
}

// scaleDeploy sets the replica count through the deployment's scale subresource.
func scaleDeploy(ctx context.Context, client *kubernetes.Clientset, namespace, name string, replicas int32) error {
	scale := &autoscalingv1.Scale{
		ObjectMeta: metav1.ObjectMeta{
			Name:      name,
			Namespace: namespace,
		},
		Spec: autoscalingv1.ScaleSpec{
			Replicas: replicas,
		},
	}
	_, err := client.AppsV1().Deployments(namespace).UpdateScale(ctx, name, scale, metav1.UpdateOptions{})
	if err != nil {
		log.WithError(err).Errorf("Failed to scale deployment %s in namespace %s", name, namespace)
	} else {
		log.Infof("Successfully scaled deployment %s in namespace %s to %d replicas", name, namespace, replicas)
	}
	return err
}
```

Key Components

- Dependencies and Libraries: The function uses the eksauth package (github.com/chankh/eksutil) to authenticate with EKS and the Kubernetes client-go library to interact with the cluster. The aws-lambda-go library provides the Lambda runtime integration, while logrus provides structured logging.

- Payload Structure: We define a Payload struct to encapsulate the input, including the target cluster’s name, the namespaces to scale, and the desired number of replicas.

- The Main Function: It initializes the log level based on an environment variable and launches the Lambda handler, demonstrating a simple yet effective setup.

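- Lambda Handler: This function takes the Payload, authenticates with the EKS cluster, and iterates over the namespaces, scaling every deployment in them to the requested replica count. A namespace whose deployments cannot be listed is logged and skipped, and only successfully scaled deployments appear in the returned summary. It shows how straightforward it is to orchestrate Kubernetes interactions from a serverless environment.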

- Scaling Logic with the scaleDeploy function: Here, we actually adjust the scale of each deployment by calling UpdateScale on its scale subresource. This function shows the Kubernetes API being used directly to manage cluster resources.
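To see what the handler receives and returns without touching a cluster, here is a small standard-library-only sketch. It assumes the JSON shape that the Terraform schedules send as their input; decodePayload and summarize are hypothetical helpers, the deployment names are made up, and every scale call is assumed to succeed (the real handler records only the successful ones).

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Payload mirrors the struct used by the Lambda function.
type Payload struct {
	ClusterName string   `json:"clusterName"`
	Namespaces  []string `json:"namespaces"`
	Replicas    int32    `json:"replicas"`
}

// decodePayload (hypothetical) turns the scheduler's JSON input into a Payload,
// which is what the Lambda runtime does before it calls handler.
func decodePayload(raw []byte) (Payload, error) {
	var p Payload
	err := json.Unmarshal(raw, &p)
	return p, err
}

// summarize (hypothetical) builds the same "namespace/name" -> replicas map the
// handler returns, from a made-up list of deployment names per namespace.
// It assumes every scale call succeeds.
func summarize(p Payload, deployments map[string][]string) (string, error) {
	scaled := make(map[string]int32)
	for _, ns := range p.Namespaces {
		for _, name := range deployments[ns] {
			scaled[ns+"/"+name] = p.Replicas
		}
	}
	out, err := json.Marshal(scaled) // encoding/json writes map keys in sorted order
	if err != nil {
		return "", err
	}
	return "Scaled Deployments: " + string(out), nil
}

func main() {
	raw := []byte(`{"clusterName":"my-eks-cluster","namespaces":["default","development"],"replicas":0}`)
	p, err := decodePayload(raw)
	if err != nil {
		panic(err)
	}
	msg, err := summarize(p, map[string][]string{
		"default":     {"web"},
		"development": {"api", "worker"},
	})
	if err != nil {
		panic(err)
	}
	fmt.Println(msg)
}
```

Because encoding/json sorts map keys, the summary string is deterministic, which makes the Lambda’s return value easy to compare across runs.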

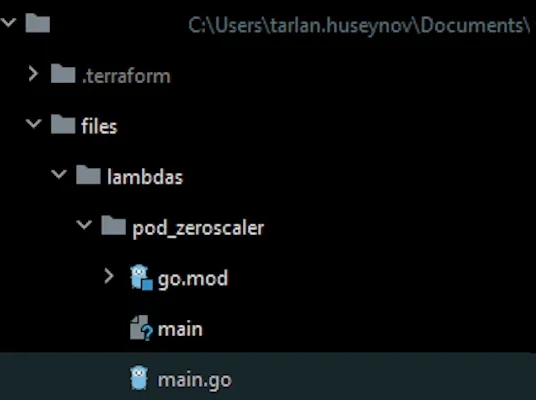

For seamless integration and management, we’ll be placing our Lambda function within a specific directory structure in our Terraform project. This approach not only keeps our project organized but also simplifies the deployment process.

We’ll store the Lambda function in files/lambdas/pod_zeroscaler (some fancy naming won’t hurt 😉). This dedicated directory within our Terraform project keeps the Lambda code logically separated and in one place, where we can edit it, build it, and deploy it as a Lambda function by running terraform apply (we will look at how to do that later). Let’s start by setting up the Lambda function’s codebase.

Initialize the Go Module

Navigate to the pod_zeroscaler directory and run go mod init pod_zeroscaler followed by go mod tidy. These commands create the module’s go.mod and go.sum files and download the dependencies the code imports, pinning their versions.

Build the Lambda Function

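After initializing the module, we compile our Lambda function with GOOS=linux GOARCH=amd64 CGO_ENABLED=0 go build -o main. Setting GOARCH=amd64 matters when building on an ARM machine such as an Apple Silicon Mac, because the go1.x runtime used in the Terraform code runs on x86_64, and CGO_ENABLED=0 produces a statically linked binary that does not depend on the runtime’s C libraries. Note that AWS has since deprecated the go1.x runtime; the OS-only runtimes (provided.al2023 or provided.al2) are the supported replacement and expect the executable to be named bootstrap.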

Note: JetBrains GoLand IDE - For projects that combine Go code and Terraform modules, I highly recommend JetBrains GoLand. Its integrated environment covers the whole project, from coding to deployment, and its debugger and version-control integration make it a valuable tool for any DevOps engineer working in a cloud-native ecosystem. Terraform support is available through JetBrains’ Terraform and HCL plugin.

Deploying and Orchestrating the EKS Auto-Scaling Solution with Terraform

In this section, we delve into the infrastructure setup for our auto-scaling solution, using Terraform to deploy and orchestrate the components. This setup is a crucial part of our strategy to manage resources efficiently in a cloud-native DevOps environment.

Prerequisites and Setup

Before we dive into the specifics, let’s establish our prerequisites:

- Existing Terraform Project: Our setup assumes that there’s an existing Terraform project with either a remote or local state setup.

- Pre-configured EKS Cluster: An EKS cluster is already in place (the code references it as aws_eks_cluster.eks_cluster), and our solution integrates with it.

- Modular Code Format: The Terraform code is written in a flat, scratch format, ready to be split into modules as your project requires. It also assumes a var.env variable, used for tagging.

```hcl
locals {
  cluster_name = "my-eks-cluster"

  pod_zeroscaler = {
    enabled            = true
    scale_out_schedule = "cron(00 09 ? * MON-FRI *)" # At 09:00:00 (UTC), Monday through Friday
    scale_in_schedule  = "cron(00 18 ? * MON-FRI *)" # At 18:00:00 (UTC), Monday through Friday
    namespaces         = ["default", "development"]
  }

  default_tags = {
    Environment = var.env
    Owner       = "Devops"
    Managed-by  = "Terraform"
  }
}

data "aws_iam_policy_document" "lambda_logging_policy" {
  statement {
    actions = [
      "logs:CreateLogGroup",
      "logs:CreateLogStream",
      "logs:PutLogEvents",
    ]
    effect    = "Allow"
    resources = ["*"]
  }
}

resource "aws_iam_policy" "lambda_logging_policy" {
  name   = "${local.cluster_name}-lambda-logging"
  policy = data.aws_iam_policy_document.lambda_logging_policy.json
  tags   = local.default_tags
}

data "aws_iam_policy_document" "describe_cluster_lambda_policy" {
  statement {
    actions   = ["eks:DescribeCluster"]
    resources = [aws_eks_cluster.eks_cluster.arn]
  }
}

resource "aws_iam_policy" "describe_cluster_lambda_policy" {
  name   = "describe-cluster-policy"
  policy = data.aws_iam_policy_document.describe_cluster_lambda_policy.json
  tags   = local.default_tags
}

resource "aws_iam_role" "eks_lambda_role" {
  name = "${local.cluster_name}-lambda-role"
  tags = local.default_tags

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = "sts:AssumeRole"
        Effect = "Allow"
        Principal = {
          Service = ["lambda.amazonaws.com"]
        }
      },
    ]
  })
}

resource "aws_iam_role_policy_attachment" "describe_cluster_policy" {
  role       = aws_iam_role.eks_lambda_role.name
  policy_arn = aws_iam_policy.describe_cluster_lambda_policy.arn
}

resource "aws_iam_role_policy_attachment" "lambda_logging_policy" {
  count      = local.pod_zeroscaler["enabled"] ? 1 : 0
  role       = aws_iam_role.eks_lambda_role.name
  policy_arn = aws_iam_policy.lambda_logging_policy.arn
}

data "archive_file" "pod_zeroscaler_lambda_zip" {
  count       = local.pod_zeroscaler["enabled"] ? 1 : 0
  type        = "zip"
  source_file = "${path.root}/files/lambdas/pod_zeroscaler/main"
  output_path = "/tmp/main.zip"
}

resource "aws_lambda_function" "pod_zeroscaler_lambda" {
  count            = local.pod_zeroscaler["enabled"] ? 1 : 0
  filename         = data.archive_file.pod_zeroscaler_lambda_zip[0].output_path
  source_code_hash = data.archive_file.pod_zeroscaler_lambda_zip[0].output_base64sha256
  function_name    = "${local.cluster_name}-zeroscaler"
  handler          = "main"
  runtime          = "go1.x"
  role             = aws_iam_role.eks_lambda_role.arn # the role argument expects an ARN, not a name

  environment {
    variables = { CLUSTER_NAME = local.cluster_name }
  }
}

// AWS EventBridge Scheduler

data "aws_iam_policy_document" "pod_zeroscaler_invoke_policy" {
  count = local.pod_zeroscaler["enabled"] ? 1 : 0

  statement {
    effect    = "Allow"
    actions   = ["lambda:InvokeFunction"]
    resources = [aws_lambda_function.pod_zeroscaler_lambda[0].arn]
  }
}

resource "aws_iam_policy" "pod_zeroscaler_invoke_policy" {
  count       = local.pod_zeroscaler["enabled"] ? 1 : 0
  name        = "${local.cluster_name}-zeroscaler-scheduler-policy"
  description = "Allow function execution for scheduler role"
  policy      = data.aws_iam_policy_document.pod_zeroscaler_invoke_policy[0].json
}

data "aws_iam_policy_document" "pod_zeroscaler_invoke_role" {
  count = local.pod_zeroscaler["enabled"] ? 1 : 0

  statement {
    actions = ["sts:AssumeRole"]

    principals {
      type        = "Service"
      identifiers = ["scheduler.amazonaws.com"]
    }
  }
}

resource "aws_iam_role" "pod_zeroscaler_invoke_role" {
  count              = local.pod_zeroscaler["enabled"] ? 1 : 0
  name               = "${local.cluster_name}-zeroscaler-scheduler-role"
  assume_role_policy = data.aws_iam_policy_document.pod_zeroscaler_invoke_role[0].json
}

resource "aws_iam_role_policy_attachment" "pod_zeroscaler_invoke_role" {
  count      = local.pod_zeroscaler["enabled"] ? 1 : 0
  policy_arn = aws_iam_policy.pod_zeroscaler_invoke_policy[0].arn
  role       = aws_iam_role.pod_zeroscaler_invoke_role[0].name
}

resource "aws_scheduler_schedule" "pod_zeroscaler_scale_in" {
  count      = local.pod_zeroscaler["enabled"] ? 1 : 0
  name       = "${local.cluster_name}-zeroscaler-scale-in"
  group_name = "default"

  flexible_time_window {
    mode = "OFF"
  }

  schedule_expression = local.pod_zeroscaler["scale_in_schedule"]

  target {
    arn      = aws_lambda_function.pod_zeroscaler_lambda[0].arn
    role_arn = aws_iam_role.pod_zeroscaler_invoke_role[0].arn

    # Scale every deployment in the listed namespaces down to zero.
    input = jsonencode({
      "clusterName" = local.cluster_name
      "namespaces"  = local.pod_zeroscaler["namespaces"]
      "replicas"    = 0
    })
  }
}

resource "aws_scheduler_schedule" "pod_zeroscaler_scale_out" {
  count      = local.pod_zeroscaler["enabled"] ? 1 : 0
  name       = "${local.cluster_name}-zeroscaler-scale-out"
  group_name = "default"

  flexible_time_window {
    mode = "OFF"
  }

  schedule_expression = local.pod_zeroscaler["scale_out_schedule"]

  target {
    arn      = aws_lambda_function.pod_zeroscaler_lambda[0].arn
    role_arn = aws_iam_role.pod_zeroscaler_invoke_role[0].arn

    # Bring every deployment in the listed namespaces back to one replica.
    input = jsonencode({
      "clusterName" = local.cluster_name
      "namespaces"  = local.pod_zeroscaler["namespaces"]
      "replicas"    = 1
    })
  }
}
```

Terraform Infrastructure Overview

Local Variables and Configuration

Cluster Name and Lambda Settings: We define key local variables, including the name of the EKS cluster and the Lambda settings: an enabled flag, the two schedules, and the target namespaces.

Default Tags: These tags are applied to the created resources for easy tracking and management.

IAM Policies and Roles

Lambda Logging and EKS Access: Policies allow the Lambda function to write logs to CloudWatch Logs and to call eks:DescribeCluster on our cluster. Access inside the cluster is granted separately through the aws-auth ConfigMap, which we configure in the next section to complete the Lambda’s access.

Policy Attachments: We attach these policies to the Lambda function’s IAM role, ensuring it has the necessary permissions.

Lambda Function Deployment

Function Packaging: The Lambda function, developed in Go and placed in files/lambdas/pod_zeroscaler, is packaged into a zip file.

Deployment: This package is then deployed as a Lambda function in AWS, complete with its environment variables and IAM role.

EventBridge Scheduler

Invoke Policy and Role: We create an IAM policy and role that allow EventBridge Scheduler to invoke our Lambda function.

Scheduling Resources: Two EventBridge schedules are set up, each targeting our Lambda function with its own payload. The scale-in schedule sets every deployment in the listed namespaces to 0 replicas at 18:00 UTC on weekdays, and the scale-out schedule sets them back to 1 replica at 09:00 UTC. Note that scale-out always restores exactly one replica, regardless of how many a deployment ran before.

This Terraform setup not only deploys our Lambda function but also orchestrates its operation, aligning with our goal of automating and optimizing resource utilization in a Kubernetes environment. By utilizing Terraform, we ensure a reproducible and efficient deployment process, encapsulating complex cloud operations in a manageable codebase.

Access Configuration for Lambda in EKS

The final step in our setup involves configuring the AWS EKS cluster to grant the necessary permissions to our Lambda function. This is done by editing the aws-auth ConfigMap in the kube-system namespace and setting up appropriate Kubernetes roles and bindings using Terraform. This section ensures our Lambda function can interact effectively with the EKS cluster by using the lambda role attached to it.

Reference: Enabling IAM principal access to your cluster

We need to update the aws-auth ConfigMap, which controls which IAM principals can access your EKS cluster and which Kubernetes groups they belong to. Here’s a sample snippet where the Lambda role ARN is added to an RBAC group named lambda-group (we will bind this group to a cluster role next).

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  mapRoles: |
    - rolearn: arn:aws:iam::<ACCOUNT_ID>:role/my-eks-cluster-development-lambda-role
      groups:
        - lambda-group
    - groups:
        - system:bootstrappers
        - system:nodes
        - system:node-proxier
      rolearn: arn:aws:iam::<ACCOUNT_ID>:role/my-eks-cluster-development-fargate-pod-execution-role
      username: system:node:{{SessionName}}
    - groups:
        - system:bootstrappers
        - system:nodes
      rolearn: arn:aws:iam::<ACCOUNT_ID>:role/my-eks-cluster-development-node-pod-execution-role
      username: system:node:{{EC2PrivateDNSName}}
```

In this ConfigMap, we add a new role entry for our Lambda function’s IAM role. This entry assigns the Lambda role to a group, lambda-group, which we will reference in our Kubernetes role and binding.

Terraform codebase

```hcl
# Cluster Role and Cluster Role Binding for Lambda
resource "kubernetes_cluster_role" "lambda_cluster_role" {
  count = local.pod_zeroscaler["enabled"] ? 1 : 0

  metadata {
    name = "lambda-clusterrole"
  }

  rule {
    api_groups = ["*"]
    resources  = ["deployments", "deployments/scale"]
    verbs      = ["get", "list", "watch", "create", "update", "patch", "delete"]
  }

  rule {
    api_groups = [""]
    resources  = ["pods"]
    verbs      = ["get", "list", "watch", "create", "update"]
  }
}

resource "kubernetes_cluster_role_binding" "lambda_cluster_role_binding" {
  count = local.pod_zeroscaler["enabled"] ? 1 : 0

  metadata {
    name = "lambda-clusterrolebinding"
  }

  role_ref {
    api_group = "rbac.authorization.k8s.io"
    kind      = "ClusterRole"
    name      = kubernetes_cluster_role.lambda_cluster_role[0].metadata[0].name
  }

  subject {
    kind      = "Group"
    name      = "lambda-group"
    api_group = "rbac.authorization.k8s.io"
  }
}
```

We define a Kubernetes Cluster Role and a Cluster Role Binding in Terraform to grant our Lambda function the necessary permissions within the cluster.

The kubernetes_cluster_role resource defines the permissions our Lambda function needs. We grant it a wide range of verbs on deployments, the deployments/scale subresource, and pods. Strictly speaking, the handler only lists deployments and updates their scale subresource, so list on deployments and update on deployments/scale in the apps API group would be enough if you prefer least privilege.

The kubernetes_cluster_role_binding resource binds the above role to lambda-group. Any IAM role that aws-auth maps into lambda-group (which includes our Lambda function’s IAM role) therefore gets the permissions defined in the cluster role.
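To reason about which of these permissions actually matter, here is a deliberately simplified model of RBAC rule matching. It is a sketch, not the real authorizer: rule, contains, and allowed are hypothetical names, and Kubernetes additionally handles resourceNames, non-resource URLs, and "*/subresource" patterns. It illustrates two points: the wildcard api_groups value matches the apps group, and a rule on deployments alone does not grant the deployments/scale subresource that UpdateScale writes to.

```go
package main

import "fmt"

// rule is a hypothetical, simplified stand-in for rbacv1.PolicyRule.
type rule struct {
	APIGroups []string
	Resources []string
	Verbs     []string
}

// contains reports whether want is in list, treating "*" as a wildcard.
func contains(list []string, want string) bool {
	for _, v := range list {
		if v == "*" || v == want {
			return true
		}
	}
	return false
}

// allowed reports whether any rule grants verb on resource in group.
// RBAC is additive: a request is allowed if at least one rule matches.
func allowed(rules []rule, group, resource, verb string) bool {
	for _, r := range rules {
		if contains(r.APIGroups, group) && contains(r.Resources, resource) && contains(r.Verbs, verb) {
			return true
		}
	}
	return false
}

func main() {
	// The deployment rule from lambda_cluster_role.
	article := []rule{{
		APIGroups: []string{"*"},
		Resources: []string{"deployments", "deployments/scale"},
		Verbs:     []string{"get", "list", "watch", "create", "update", "patch", "delete"},
	}}
	// A narrower rule set covering only the two calls the handler makes.
	minimal := []rule{
		{APIGroups: []string{"apps"}, Resources: []string{"deployments"}, Verbs: []string{"list"}},
		{APIGroups: []string{"apps"}, Resources: []string{"deployments/scale"}, Verbs: []string{"update"}},
	}

	// Deployments(ns).List -> list deployments; UpdateScale -> update deployments/scale.
	requests := [][3]string{
		{"apps", "deployments", "list"},
		{"apps", "deployments/scale", "update"},
		{"apps", "deployments", "delete"},
	}
	for _, req := range requests {
		fmt.Printf("%-30s article=%-5v minimal=%v\n",
			req[2]+" "+req[0]+"/"+req[1],
			allowed(article, req[0], req[1], req[2]),
			allowed(minimal, req[0], req[1], req[2]))
	}
}
```

Both rule sets allow the two calls the handler makes; only the broader one also allows destructive verbs such as delete.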

Automating the ConfigMap Update with Terraform and a Bash Script

To streamline the process of updating the aws-auth ConfigMap in Kubernetes, we can automate this step using Terraform coupled with a local provisioner and a Bash script. This automation not only saves time but also reduces the potential for manual errors.

We’ll use a Bash script, aws_auth_patch.sh, located in files/scripts, to modify the aws-auth ConfigMap. This script checks if the necessary permissions are already in place and, if not, adds them.

```bash
#!/bin/bash
set -euo pipefail

# Script to update the aws-auth ConfigMap.
# Expects ROLE_ARN and GROUP in the environment (set by the Terraform provisioner).
AWS_AUTH=$(kubectl get -n kube-system configmap/aws-auth -o yaml)

# A here-string avoids a pipe, so pipefail cannot misreport an early grep exit.
if grep -qF "${ROLE_ARN}" <<< "${AWS_AUTH}"; then
  echo "Permission is already added for ${ROLE_ARN}"
else
  # Indentation matches how mapRoles entries appear in `kubectl get -o yaml` output.
  NEW_ROLE="    - rolearn: ${ROLE_ARN}\n      groups:\n        - ${GROUP}"
  PATCH=$(awk "/mapRoles: \|/{printf \"%s\n%s\n\", \$0, \"${NEW_ROLE}\";next}1" <<< "${AWS_AUTH}")
  kubectl patch configmap/aws-auth -n kube-system --patch "${PATCH}"
fi
```

This script retrieves the current aws-auth ConfigMap, checks if the required role is already added, and if not, it patches the ConfigMap with the new role.
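The core of the script is a check-then-insert step, which is what makes it safe to re-run on every terraform apply. Here is the same idea as a hypothetical Go helper, addRoleMapping, operating on the text stored under data.mapRoles and using the role ARN as the idempotency key. It only illustrates the logic and does not talk to the cluster; the account ID and role names are placeholders.

```go
package main

import (
	"fmt"
	"strings"
)

// addRoleMapping is a hypothetical helper: given the text stored under
// data.mapRoles, it returns the text unchanged if roleARN is already mapped,
// otherwise it prepends a new entry mapping roleARN to group.
// The bool result reports whether a change was made.
func addRoleMapping(mapRoles, roleARN, group string) (string, bool) {
	if strings.Contains(mapRoles, roleARN) {
		return mapRoles, false // idempotent: re-running is a no-op
	}
	entry := fmt.Sprintf("- rolearn: %s\n  groups:\n    - %s\n", roleARN, group)
	return entry + mapRoles, true
}

func main() {
	mapRoles := "- rolearn: arn:aws:iam::111122223333:role/node-role\n" +
		"  groups:\n" +
		"    - system:bootstrappers\n" +
		"    - system:nodes\n" +
		"  username: system:node:{{EC2PrivateDNSName}}\n"
	lambdaRole := "arn:aws:iam::111122223333:role/my-eks-cluster-lambda-role"

	updated, changed := addRoleMapping(mapRoles, lambdaRole, "lambda-group")
	fmt.Println("changed on first run:", changed)
	fmt.Print(updated)

	_, changed = addRoleMapping(updated, lambdaRole, "lambda-group")
	fmt.Println("changed on second run:", changed)
}
```

Like grep, a plain substring check would also match a longer ARN that merely starts with the same text (for example a role named my-eks-cluster-lambda-role-old); a stricter version would parse the YAML and compare rolearn values exactly.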

In our Terraform setup, we’ll use a null_resource with a local-exec provisioner to execute this script automatically.

```hcl
resource "null_resource" "patch_aws_auth" {
  count = local.pod_zeroscaler["enabled"] ? 1 : 0

  triggers = {
    api_endpoint_up     = aws_eks_cluster.eks_cluster.endpoint
    lambda_bootstrapped = aws_iam_role.eks_lambda_role.id
    script_hash         = filemd5("${path.cwd}/files/scripts/aws_auth_patch.sh")
  }

  provisioner "local-exec" {
    working_dir = "${path.cwd}/files/scripts"
    command     = "./aws_auth_patch.sh"
    interpreter = ["bash"]

    environment = {
      ROLE_ARN = aws_iam_role.eks_lambda_role.arn
      # The binding uses count, so it must be indexed.
      GROUP = kubernetes_cluster_role_binding.lambda_cluster_role_binding[0].subject[0].name
    }
  }
}
```

This Terraform resource triggers the script execution whenever there are changes to the EKS cluster endpoint, the Lambda IAM role, or the script itself. The script is executed in the appropriate directory, and necessary environment variables are passed to it.

Alternatively, we could use eksctl with a local-exec provisioner instead of the Bash script and kubectl.

```hcl
resource "null_resource" "patch_aws_auth_lambda_role" {
  count = local.pod_zeroscaler["enabled"] ? 1 : 0

  depends_on = [
    aws_eks_fargate_profile.eks_cluster_fargate_kubesystem,
    data.utils_aws_eks_update_kubeconfig.bootstrap_kubeconfig,
  ]

  # Skip the mapping if the Lambda role ARN is already present; otherwise create it.
  # --username is an arbitrary Kubernetes user name for the Lambda identity
  # (system:node:{{EC2PrivateDNSName}} only makes sense for EC2 worker nodes).
  provisioner "local-exec" {
    working_dir = "../files/scripts"
    command     = <<-EOT
      eksctl get iamidentitymapping \
        --cluster ${local.cluster_name} \
        --region ${local.region} \
        --profile ${var.kubeconfig_profile} | grep -q "${aws_iam_role.eks_lambda_role.arn}" || \
      eksctl create iamidentitymapping \
        --cluster ${local.cluster_name} \
        --region ${local.region} \
        --arn ${aws_iam_role.eks_lambda_role.arn} \
        --group ${kubernetes_cluster_role_binding.lambda_cluster_role_binding[0].subject[0].name} \
        --username pod-zeroscaler \
        --profile ${var.kubeconfig_profile}
    EOT
  }
}
```

Farewell 😊

Thank you for taking the time to read this article. I trust it has offered you valuable insights and practical knowledge to implement in your own DevOps endeavors. As we continue to navigate the ever-evolving landscape of cloud infrastructure and serverless architectures, remember the power of automation and efficient resource management. Keep pushing the boundaries, keep learning, and always strive for excellence!
