Open-Sora: Discover the Best OpenAI Sora Alternative in 2024

Have you ever dreamed of creating stunning AI-generated videos but felt limited by expensive, proprietary tools like OpenAI's Sora? You're not alone. The recent release of Open-Sora, an open-source AI video generation model developed by HPC-AI Tech (the Colossal-AI team), has sent waves of excitement through the creative and tech communities. Offering powerful capabilities comparable to commercial alternatives, Open-Sora is quickly becoming the go-to solution for accessible, high-quality AI video creation.

In this article, we'll dive deep into what makes Open-Sora such a groundbreaking tool, explore its evolution, technical features, performance benchmarks, and how it stacks up against OpenAI's Sora. Whether you're a content creator, developer, or simply an AI enthusiast, you'll find plenty of reasons to get excited about Open-Sora.

Ready to explore more groundbreaking AI video tools? Check out Anakin AI's powerful video generation models like Minimax Video, Tencent Hunyuan, and Runway ML—all available in one streamlined platform. Elevate your creative projects today: Explore Anakin AI Video Generator

The Evolution of Open-Sora: From Promising Start to Industry Challenger

Open-Sora didn't become a sensation overnight. It has evolved significantly since its initial release, steadily improving its capabilities and performance:

Version History at a Glance:

- Open-Sora 1.0: Initial release, fully open-sourced training process and model architecture.

- Open-Sora 1.1: Introduced multi-resolution, multi-length, and multi-aspect-ratio video generation, along with image/video conditioning and editing.

- Open-Sora 1.2: Added rectified flow, 3D-VAE, and improved evaluation metrics.

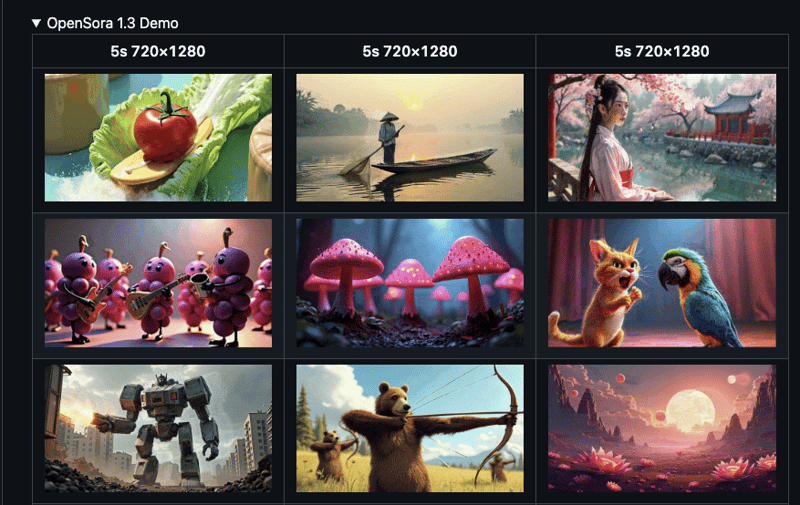

- Open-Sora 1.3: Implemented shift-window attention and unified spatial-temporal VAE, scaling up to 1.1 billion parameters.

- Open-Sora 2.0: The latest and most advanced version, boasting 11 billion parameters and nearly matching proprietary models like OpenAI's Sora.

Each iteration has brought Open-Sora closer to parity with industry-leading commercial models, democratizing access to powerful AI video generation technology.

Under the Hood: Technical Architecture and Core Features

What exactly makes Open-Sora 2.0 such a compelling alternative to OpenAI's Sora? Let's break down its innovative architecture and powerful capabilities:

Innovative Model Architecture:

- Multimodal Diffusion Transformer (MMDiT): Uses 3D full attention, in which every token attends to every other token across both space and time, improving spatiotemporal feature modeling.

- Spatio-Temporal Diffusion Transformer (ST-DiT-2): Supports diverse video durations, resolutions, aspect ratios, and frame rates, making it highly versatile.

- High-Compression Video Autoencoder (Video DC-AE): Compresses videos into a much smaller latent space, so the diffusion model processes far fewer tokens and generation runs faster.
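A back-of-the-envelope token count shows why stronger compression shortens generation. This sketch is illustrative only: the 97-frame 768×768 clip, the 4x temporal factor, and the 8x versus 32x spatial factors are assumptions chosen for the arithmetic, not specifications quoted in this article. Because full 3D attention compares every latent token with every other, cutting the token count by a factor k cuts attention work by roughly k².

```python
import math


def latent_tokens(frames: int, height: int, width: int,
                  t_factor: int, s_factor: int) -> int:
    """Count latent positions after compressing a video.

    Assumes a causal-style temporal layout (first frame kept, remaining
    frames grouped by t_factor); this layout is an illustrative choice.
    """
    latent_t = (frames - 1) // t_factor + 1
    latent_h = math.ceil(height / s_factor)
    latent_w = math.ceil(width / s_factor)
    return latent_t * latent_h * latent_w


# Hypothetical 97-frame, 768x768 clip under two assumed compression settings.
moderate = latent_tokens(97, 768, 768, t_factor=4, s_factor=8)
aggressive = latent_tokens(97, 768, 768, t_factor=4, s_factor=32)

print(moderate, aggressive)           # 230400 14400
print(moderate // aggressive)         # 16 (times fewer tokens)
print((moderate // aggressive) ** 2)  # 256 (times fewer token pairs in full attention)
```

Under these assumed factors, the higher-compression setting leaves 16x fewer latent tokens, and full attention has 256x fewer token pairs to process.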

Impressive Generation Capabilities:

Open-Sora 2.0 offers diverse and intuitive video generation methods:

- Text-to-Video: Create engaging videos directly from textual descriptions.

- Image-to-Video: Bring static images to life with dynamic motion.

- Video-to-Video: Seamlessly modify existing video content.

- Motion Intensity Control: Adjust the intensity of motion with a simple "Motion Score" parameter (ranging from 1 to 7).

These features empower creators to produce highly customized, visually compelling content with ease.
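To make these options concrete, here is a minimal sketch of how a wrapper script might validate a request before handing it to a model. The `GenerationRequest` class, its field names, and the mode strings are hypothetical and are not Open-Sora's actual interface; only the 1 to 7 Motion Score range comes from the description above.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mode names for the three generation methods described above.
VALID_MODES = {"text-to-video", "image-to-video", "video-to-video"}


@dataclass
class GenerationRequest:
    """Hypothetical request wrapper; not Open-Sora's real API."""
    mode: str
    prompt: str
    motion_score: int                     # 1 (subtle) to 7 (intense)
    reference_path: Optional[str] = None  # input image or video for conditioned modes

    def __post_init__(self) -> None:
        if self.mode not in VALID_MODES:
            raise ValueError(f"unknown mode: {self.mode!r}")
        if not 1 <= self.motion_score <= 7:
            raise ValueError("motion_score must be between 1 and 7")
        if self.mode != "text-to-video" and self.reference_path is None:
            raise ValueError(f"{self.mode} needs a reference image or video")


# Example: an image-to-video request with fairly strong motion.
request = GenerationRequest(
    mode="image-to-video",
    prompt="a paper boat drifting downstream",
    motion_score=6,
    reference_path="boat.png",  # hypothetical input file
)
print(request.motion_score)  # 6
```

Validating up front means an out-of-range Motion Score or a missing input image fails immediately rather than partway through a long GPU job.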

Efficient Training Process: High Performance at a Fraction of the Cost

One of Open-Sora's standout achievements is its cost-effective training methodology. By leveraging innovative strategies, the Open-Sora team has significantly reduced training expenses compared to industry standards:

Smart Training Methodology:

- Multi-Stage Training: Trains first on low-resolution videos, then fine-tunes the model for high-resolution output.

- Low-Resolution Priority Strategy: Learns motion features first at low resolution and refines visual quality afterward, cutting compute requirements by up to 40x.

- Rigorous Data Filtering: Ensures high-quality training data, improving overall efficiency.

- Parallel Processing: Utilizes ColossalAI for optimized GPU utilization in distributed training environments.
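A rough calculation shows why starting at low resolution pays off. For a clip of the same length, a 768px square frame holds 9 times as many pixels as a 256px one, so under a fixed compression ratio the token count also grows about 9x, and full attention, which scales with the square of the token count, grows about 81x. This is an idealized scaling argument, not a derivation of the up-to-40x saving cited above, which depends on the actual training recipe.

```python
from typing import Tuple


def relative_cost(low_px: int, high_px: int) -> Tuple[float, float]:
    """Return (token ratio, attention ratio) between two square resolutions.

    Idealized: assumes token count scales with pixel area and full attention
    scales with tokens squared; ignores MLP layers, data loading, and so on.
    """
    token_ratio = (high_px / low_px) ** 2
    attention_ratio = token_ratio ** 2
    return token_ratio, attention_ratio


tokens, attention = relative_cost(256, 768)
print(f"{tokens:.0f}x tokens, {attention:.0f}x attention cost")
# 9x tokens, 81x attention cost
```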

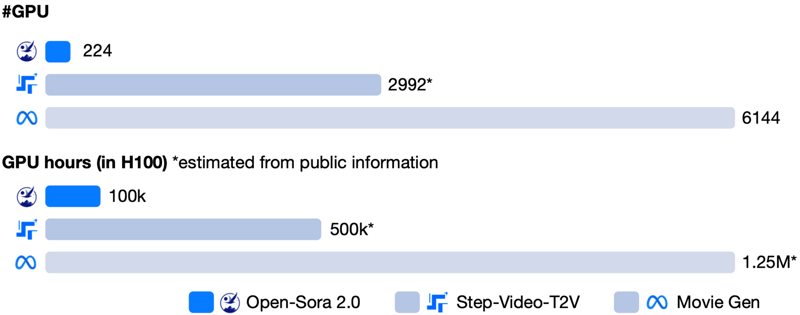

Remarkable Cost Efficiency:

- Open-Sora 2.0: Trained for approximately $200,000, using the equivalent of 224 GPUs.

- Step-Video-T2V: Estimated at 2992 GPUs (500k GPU hours).

- Movie Gen: Requires approximately 6144 GPUs (1.25M GPU hours).

This represents a 5-10x cost reduction compared to other leading video generation models, making Open-Sora accessible to a broader range of users and developers.

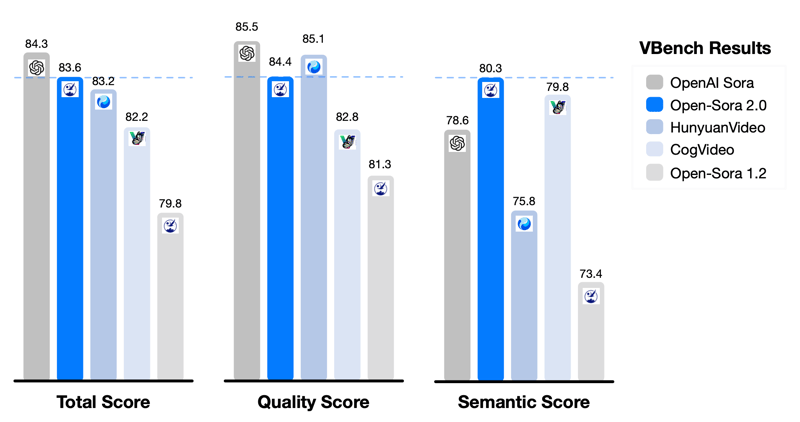

Performance Benchmarks: How Does Open-Sora Stack Up?

When evaluating AI models, performance benchmarks are crucial. Open-Sora 2.0 has shown impressive results, nearly matching OpenAI's Sora in key metrics:

VBench Evaluation Results:

- Total Score: Open-Sora 2.0 scored 83.6, compared to OpenAI Sora's 84.3.

- Quality Score: 84.4 (Open-Sora) vs. 85.5 (OpenAI Sora).

- Semantic Score: 80.3 (Open-Sora) vs. 78.6 (OpenAI Sora).

The performance gap between Open-Sora and OpenAI's Sora has narrowed dramatically, from 4.52% in earlier versions to just 0.69%.
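The headline figures can be sanity-checked with a few lines of arithmetic. This sketch assumes that VBench's Total Score is a weighted average counting the Quality Score four times as heavily as the Semantic Score; that weighting is an assumption stated here, not something this article reports. With Open-Sora 2.0's listed sub-scores it reproduces the 83.6 total, and the difference between the two rounded totals, about 0.7 points, is in line with the 0.69 gap quoted above.

```python
def vbench_total(quality: float, semantic: float,
                 quality_weight: float = 4.0, semantic_weight: float = 1.0) -> float:
    """Weighted average of VBench sub-scores (4:1 weighting assumed)."""
    return (quality_weight * quality + semantic_weight * semantic) / (
        quality_weight + semantic_weight
    )


open_sora_total = vbench_total(quality=84.4, semantic=80.3)
print(round(open_sora_total, 1))  # 83.6

# Gap between the reported (rounded) totals of OpenAI Sora and Open-Sora 2.0.
gap = 84.3 - 83.6
print(round(gap, 2))  # 0.7
```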

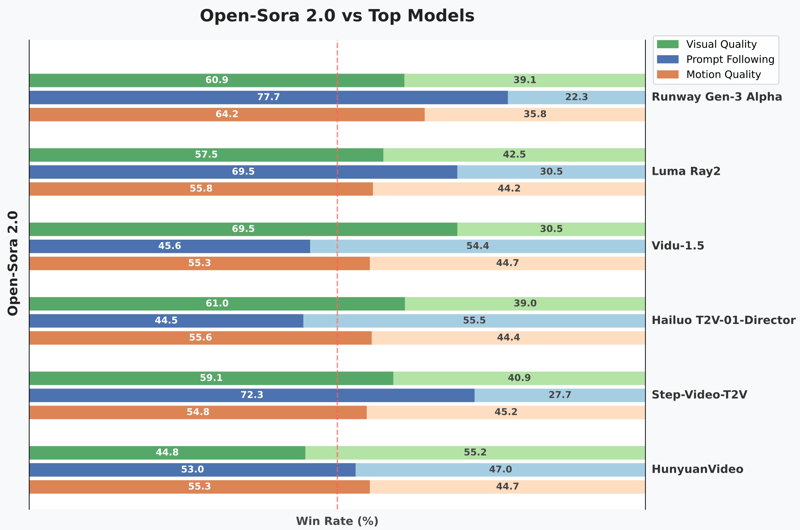

User Preference Win Rates:

In head-to-head comparisons, Open-Sora 2.0 consistently outperforms other leading models:

- Visual Quality: 69.5% win rate against Vidu-1.5, 61.0% against Hailuo T2V-01-Director.

- Prompt Following: 77.7% win rate against Runway Gen-3 Alpha, 72.3% against Step-Video-T2V.

- Motion Quality: 64.2% win rate against Runway Gen-3 Alpha, 55.8% against Luma Ray2.

These results suggest that Open-Sora is competitive with leading models, making it a viable alternative to expensive proprietary solutions.

Video Generation Specifications: What Can You Expect?

Open-Sora 2.0 offers robust video generation capabilities suitable for various creative needs:

Resolution and Length:

- Supports multiple resolutions (256px, 768px) and aspect ratios (16:9, 9:16, 1:1, 2.39:1).

- Generates videos up to 16 seconds at high quality (720p).

Frame Rate and Processing Time:

- Consistent 24 FPS output for smooth, cinematic quality.

- Processing times vary:

  - 256×256 resolution: ~60 seconds on a single high-end GPU.

  - 768×768 resolution: ~4.5 minutes with 8 GPUs in parallel.

  - RTX 3090 GPU: 30 seconds for a 2-second 240p video, 60 seconds for a 4-second video.
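The RTX 3090 figures above can be turned into frame counts and a compute-to-video ratio, which helps when budgeting longer jobs. This small helper assumes 24 FPS throughout and that generation time grows roughly linearly with clip length; that holds for the two listed data points but is not guaranteed in general, since attention cost can grow faster than linearly.

```python
FPS = 24  # output frame rate listed above


def frame_count(seconds: float, fps: int = FPS) -> int:
    """Number of frames in a clip of the given length."""
    return round(seconds * fps)


def compute_per_video_second(compute_seconds: float, video_seconds: float) -> float:
    """Seconds of generation time spent per second of output video."""
    return compute_seconds / video_seconds


# RTX 3090 timings for 240p clips, as listed above: (generation time, clip length).
for compute_s, video_s in [(30, 2), (60, 4)]:
    ratio = compute_per_video_second(compute_s, video_s)
    print(f"{video_s}s clip: {frame_count(video_s)} frames, {ratio:.0f}s of compute per second")
# 2s clip: 48 frames, 15s of compute per second
# 4s clip: 96 frames, 15s of compute per second
```

By the same arithmetic, a 16-second clip at 24 FPS contains 384 frames.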

Hardware Requirements and Installation: Getting Started

To start using Open-Sora, you'll need to meet specific hardware and software requirements:

System Requirements:

- Python: Version 3.8 or higher.

- PyTorch: Version 2.1.0 or higher.

- CUDA: Version 11.7 or higher.
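A quick script can confirm these minimums before installing. This is a sketch that is not part of the Open-Sora repository, and it checks only the three versions listed above. It reads the CUDA version PyTorch was built against (`torch.version.cuda`), which can differ from the driver version that `nvidia-smi` reports. A CPU-only PyTorch build is flagged because these requirements assume a CUDA GPU.

```python
import re
import sys
from typing import List, Tuple

# Minimum versions listed in the system requirements above.
MIN_PYTHON = (3, 8)
MIN_TORCH = (2, 1, 0)
MIN_CUDA = (11, 7)


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn strings such as '2.1.0+cu118' or '11.8' into comparable tuples."""
    numbers = []
    for piece in text.split("+")[0].split("."):  # drop local tags like +cu118
        match = re.match(r"\d+", piece)            # keep leading digits ('0rc1' -> 0)
        if match is None:
            break
        numbers.append(int(match.group()))
    return tuple(numbers)


def check_environment() -> List[str]:
    """Return a list of problems; an empty list means all minimums are met."""
    problems = []
    if sys.version_info[:2] < MIN_PYTHON:
        problems.append(f"Python {sys.version.split()[0]} is older than 3.8")
    try:
        import torch
    except ImportError:
        problems.append("PyTorch is not installed")
        return problems
    if parse_version(torch.__version__) < MIN_TORCH:
        problems.append(f"PyTorch {torch.__version__} is older than 2.1.0")
    cuda = torch.version.cuda  # None for CPU-only PyTorch builds
    if cuda is None or not torch.cuda.is_available():
        problems.append("No CUDA-enabled PyTorch build or visible GPU")
    elif parse_version(cuda) < MIN_CUDA:
        problems.append(f"CUDA {cuda} is older than 11.7")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("\n".join(issues) if issues else "All listed minimums are met")
```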

GPU Memory Requirements:

- Consumer GPUs (e.g., RTX 3090 with 24GB VRAM): Suitable for short, lower-resolution videos.

- Professional GPUs (e.g., RTX 6000 Ada with 48GB VRAM): Recommended for higher resolutions and longer videos.

- H100/H800 GPUs: Ideal for maximum resolution and longer sequences.
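The tiers above can be mapped to a simple lookup on total GPU memory. The thresholds in this sketch are assumptions: they sit a little below the marketed 24/48/80 GB capacities because the total reported by the driver can come in slightly lower. The workload labels paraphrase the list above and are rough guidance, not limits enforced by Open-Sora.

```python
# Assumed thresholds in GiB, set slightly below the marketed 24/48/80 GB
# capacities because driver-reported totals can be a little lower.
TIERS = [
    (75.0, "maximum resolution and longer sequences (H100/H800 class)"),
    (44.0, "higher resolutions and longer videos (RTX 6000 Ada class)"),
    (22.0, "short, lower-resolution videos (RTX 3090 class)"),
]


def suggest_workload(vram_gib: float) -> str:
    """Map total GPU memory to the rough tiers described above."""
    for threshold, workload in TIERS:
        if vram_gib >= threshold:
            return workload
    return "below the tiers described here; even short clips may not fit"


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        torch = None
    if torch is not None and torch.cuda.is_available():
        total = torch.cuda.get_device_properties(0).total_memory / 1024**3
        print(f"GPU 0: {total:.1f} GiB -> {suggest_workload(total)}")
    else:
        print("No CUDA GPU detected")
```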

Installation Steps:

- Clone the repository and move into it:

git clone https://github.com/hpcaitech/Open-Sora
cd Open-Sora

- Create and activate a Python environment:

conda create -n opensora python=3.8 -y
conda activate opensora

- Install the package and its dependencies from the repository root:

pip install -e .

- Download the model weights from the Hugging Face repositories.

- To reduce memory usage during inference, add the --save_memory flag.

Limitations and Future Developments: What's Next for Open-Sora?

Despite its impressive capabilities, Open-Sora 2.0 still faces some limitations:

- Video Length: Currently capped at 16 seconds for high-quality outputs.

- Resolution Limits: Higher resolutions require multiple high-end GPUs.

- Memory Constraints: Consumer GPUs such as the 24GB RTX 3090 are limited to short, lower-resolution videos.

However, the Open-Sora team is actively working on enhancements like multi-frame interpolation and improved temporal coherence, promising even smoother, longer AI-generated videos in the future.

Final Thoughts: Democratizing AI Video Generation

Open-Sora 2.0 represents a significant leap forward in democratizing AI video generation technology. With performance nearly matching proprietary models like OpenAI's Sora—but at a fraction of the cost—Open-Sora empowers creators, developers, and businesses to harness the power of AI video generation without prohibitive expenses.

As Open-Sora continues to evolve, it stands poised to revolutionize creative industries, offering accessible, high-quality video generation tools to everyone.

Ready to explore even more powerful AI video generation tools? Discover Minimax Video, Tencent Hunyuan, Runway ML, and more—all available on Anakin AI. Unleash your creativity today: Explore Anakin AI Video Generator
