Let's face it - we've all been there. You're trying to find that perfect meme to sum up your existential dread over Monday morning coffee, but no matter what keywords you try, you just get unrelated cat photos. Enter semantic search: the technology that finally understands your chaotic search queries. In this post, I'll show you how I built a meme search engine that actually gets the joke using some cool tech magic (and Upstash's awesome tools).

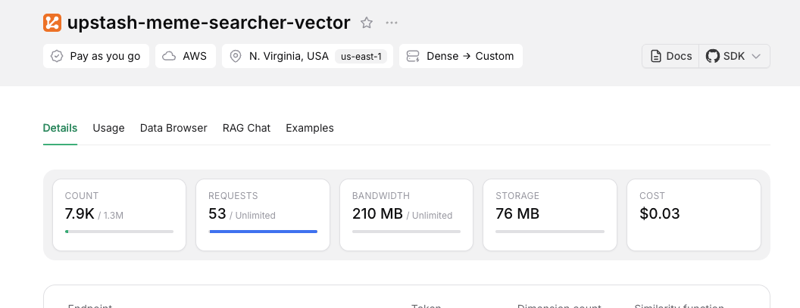

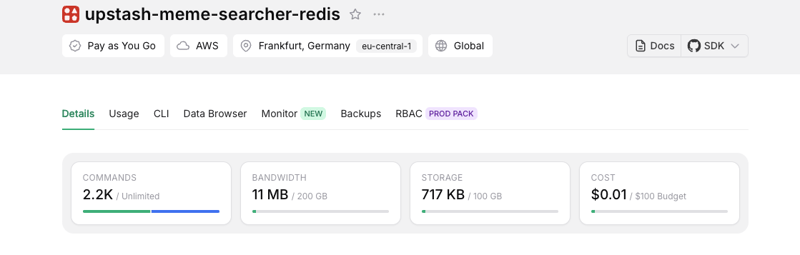

Upstash offers a vector database that enables scalable similarity searches across vectors, complete with features such as metadata filtering and built-in embedding models. Additionally, Upstash Redis provides powerful caching and rate limiting capabilities, making it an ideal foundation for modern AI-powered applications.

In this post, I'll walk you through how we built a semantic meme search engine with Upstash Vector and Redis, and show how these tools combine into an efficient, user-friendly search experience.

You can explore the project on GitHub or deploy it directly to your own Vercel account.

When Keywords Fail: The Meme Search Dilemma

Traditional search works great when you know exactly what you're looking for. "Grumpy Cat" → Grumpy Cat memes. Easy. But memes live in the weird space between literal meaning and cultural context. Try searching for "that feeling when your WiFi dies during a Zoom call" and you'll quickly hit the limits of keyword matching.

That's where semantic search shines. It's like having a friend who's deep into meme culture. Tell them "I need something for when adulting is too hard" and they'll hand you 10 variations of "This is Fine" dog memes without blinking. That's exactly what we're building here.

Tech Stack/Toolkit: Why These Choices Matter

Next.js 15: Because who wants to mess with complicated setups? Instant API routes + React goodness = happy developer.

Upstash Vector: Like a brain that remembers relationships between concepts (but way cheaper than a real brain).

Upstash Redis: The Swiss Army knife of databases - handles caching and keeps users from spamming our API.

LLM API: For generating metadata for the meme images. Many different LLMs would work here.

OpenAI Embeddings: Not a must; other embedding models (such as those on Hugging Face) work too.

Vercel Blob: For storing all those spicy memes without drowning in S3 configs.

Generating Metadata for Meme Images

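```typescript
import { generateObject } from "ai";

// Excerpt from the labeling script. The original snippet starts mid-call, so the
// opening is reconstructed: `model` (a vision-capable LLM) and `memeSchema` (whose
// `image` field holds the metadata) are placeholders, as is the surrounding loop
// that supplies each image URL as `file` and collects results in `images`.
const result = await generateObject({
  model,
  schema: memeSchema,
  messages: [
    {
      role: "user",
      content: [
        { type: "text", text: "Describe the image in detail." },
        { type: "image", image: file },
      ],
    },
  ],
});

images.push({ path: file, metadata: result.object.image });
```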

Before generating embedding vectors for the meme images in our dataset, we needed comprehensive metadata for each image. To get it, I used OpenAI's LLM API to label every image automatically with nine metadata fields: title, description, tags, memeContext, humor, format, textContent, person, and objects. Each record also stores the image's path (its URL on Vercel Blob). The challenge was to capture both the visual content of the image and the text embedded in the meme. Below is an example of the resulting metadata:

```json
{
  "path": "https://kqznk1deju1f3qri.public.blob.vercel-storage.com/another_%20Bugs%20Bunny_s%20_No_-D6ht580Nj0I8mri3hYLVIHnRx4DNIB.jpg",
  "metadata": {
    "title": "Bugs Bunny 'No' Meme with Defiant Expression",
    "description": "This meme features Bugs Bunny, a classic cartoon character from the Looney Tunes series, with a defiant expression. The image is a close-up of Bugs Bunny's face, showing him with half-closed eyes and a slightly open mouth, conveying disinterest or refusal. The word 'no' is displayed in bold white text, emphasizing his dismissive attitude. This meme is often used to humorously express firm rejection. The mid-20th-century animation style adds nostalgia.",
    "tags": [
      "Bugs Bunny",
      "Looney Tunes",
      "cartoon",
      "meme",
      "no",
      "refusal",
      "defiance",
      "humor",
      "animation",
      "classic",
      "nostalgia",
      "expression",
      "dismissive",
      "rejection",
      "bold text"
    ],
    "memeContext": "A frame from Looney Tunes repurposed to convey humorous refusal in online conversations, leveraging Bugs Bunny's witty demeanor.",
    "humor": "Exaggerated refusal using a beloved character, with simplicity enhancing comedic effect.",
    "format": "Bugs Bunny 'No' Meme",
    "textContent": "no",
    "person": "Bugs Bunny, animated character",
    "objects": "Bugs Bunny, text 'no'"
  }
}
```

The LLM analyzed both the visual elements (e.g., character expressions, text placement) and cultural context (e.g., Bugs Bunny's nostalgic appeal) to generate rich, search-friendly metadata.
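Because an LLM's output can drift from the shape you asked for, it can help to check each record before indexing it. The sketch below is an assumption on my part, not code from the project: the `MemeMetadata` interface mirrors the field names in the example above, with types inferred from that single example, and `isMemeMetadata` is a hypothetical runtime guard.

```typescript
// Field names follow the example metadata above; the types are inferred from
// that one example and are an assumption, not the project's actual schema.
interface MemeMetadata {
  title: string;
  description: string;
  tags: string[];
  memeContext: string;
  humor: string;
  format: string;
  textContent: string;
  person: string;
  objects: string;
}

const STRING_FIELDS = [
  "title",
  "description",
  "memeContext",
  "humor",
  "format",
  "textContent",
  "person",
  "objects",
] as const;

// Hypothetical runtime guard: LLM output is untrusted, so verify its shape
// (every text field is a string, tags is an array of strings) before indexing.
function isMemeMetadata(value: unknown): value is MemeMetadata {
  if (typeof value !== "object" || value === null) return false;
  const record = value as Record<string, unknown>;
  const stringsOk = STRING_FIELDS.every((key) => typeof record[key] === "string");
  const tagsOk =
    Array.isArray(record.tags) && record.tags.every((tag) => typeof tag === "string");
  return stringsOk && tagsOk;
}
```

A labeling script could then skip, or re-label, any image whose `result.object.image` fails this check before embedding it.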

Preparing the Embeddings

For our meme search engine, we generated the embeddings ourselves using OpenAI's text-embedding-3-small model, but I encourage you to try other embedding models and compare the results. Each meme is represented by an embedding of its title and description:

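```typescript
import { embed } from "ai";
import { openai } from "@ai-sdk/openai";

// The embedding model named above; swap in another model to compare results
const embeddingModel = openai.embedding("text-embedding-3-small");

export const generateEmbedding = async (value: string): Promise<number[]> => {
  // Replace newlines with spaces before embedding
  const input = value.replaceAll("\n", " ");
  const { embedding } = await embed({
    model: embeddingModel,
    value: input,
  });
  return embedding;
};
```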
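The post embeds each meme's title and description but doesn't show how the two strings are combined. One possible approach, sketched under that assumption, is a small helper (the name `buildEmbeddingInput` and the `. ` separator are both hypothetical) that normalizes whitespace in the same spirit as `generateEmbedding` normalizes newlines:

```typescript
// Hypothetical helper: combine a meme's title and description into a single
// string for embedding. The separator is an assumption, not the project's choice.
interface MemeText {
  title: string;
  description: string;
}

function buildEmbeddingInput({ title, description }: MemeText): string {
  // Like generateEmbedding, turn newlines into spaces; additionally collapse
  // runs of whitespace and trim, so formatting noise doesn't change the input.
  const clean = (s: string) => s.replace(/\s+/g, " ").trim();
  return `${clean(title)}. ${clean(description)}`;
}
```

Calling `await generateEmbedding(buildEmbeddingInput(meme.metadata))` would then produce the vector for one meme.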

Indexing the Vectors

For our meme search engine, we created a simple index structure where each meme is represented by:

- A vector embedding of its title and description

- Metadata including the title, description, and image path

- A unique ID for retrieval

Indexing goes through Upstash Vector's API, starting with a client for the memes index:

```typescript
import { Index } from "@upstash/vector";

// Initialize Upstash Vector client with the memes index
export const memesIndex = new Index({
  url: process.env.UPSTASH_VECTOR_URL!,
  token: process.env.UPSTASH_VECTOR_TOKEN!,
});
```

Unlike larger projects that might require batch processing, our meme database is small enough to be indexed efficiently without special optimizations. The simplicity of Upstash Vector's API made this process straightforward.
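The indexing code itself isn't shown beyond the client setup. As a minimal sketch, assuming the record layout listed above (a vector plus title, description, and path metadata), a hypothetical `toVectorRecord` helper could assemble the `{ id, vector, metadata }` object that Upstash Vector's `upsert` accepts when you supply your own embeddings. Using the image path as the ID and checking the vector's dimension are my choices, not the project's:

```typescript
// Record shape for Upstash Vector's `upsert` when vectors are generated client-side.
interface VectorRecord {
  id: string;
  vector: number[];
  metadata: { title: string; description: string; path: string };
}

// Hypothetical input type: one labeled meme as produced by the metadata step.
interface LabeledMeme {
  path: string;
  metadata: { title: string; description: string };
}

// Hypothetical helper: the image path doubles as a stable ID, so re-running the
// indexing script overwrites existing entries instead of duplicating them.
function toVectorRecord(meme: LabeledMeme, vector: number[], dimension: number): VectorRecord {
  // The index rejects vectors whose size differs from its configured dimension.
  if (vector.length !== dimension) {
    throw new Error(`expected ${dimension} dimensions, got ${vector.length}`);
  }
  return {
    id: meme.path,
    vector,
    metadata: {
      title: meme.metadata.title,
      description: meme.metadata.description,
      path: meme.path,
    },
  };
}
```

For example, `await memesIndex.upsert(toVectorRecord(meme, vector, 1536))`, where 1536 is the default output size of `text-embedding-3-small`; the index has to be created with the same dimension as the embedding model.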

Building the Meme Search Engine

The search function combines vector similarity search with direct text matching, then drops any duplicates:

```typescript
// For longer queries, perform both direct and semantic search
const directMatches = await findMemesByQuery(query);
const semanticMatches = await findSimilarMemes(query);

// Combine results, removing duplicates
const allMatches = uniqueItemsByTitle([...directMatches, ...semanticMatches]);
```
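`uniqueItemsByTitle` is named in the snippet above but not shown. A plausible implementation, offered as a hypothetical sketch, keeps the first item seen for each title, so direct matches (spread first) take precedence over semantic ones:

```typescript
// Hypothetical implementation of uniqueItemsByTitle: keep the first occurrence
// of each title and preserve the original order of the surviving items.
function uniqueItemsByTitle<T extends { title: string }>(items: T[]): T[] {
  const seen = new Set<string>();
  const result: T[] = [];
  for (const item of items) {
    // Normalize case and surrounding whitespace so near-identical titles collapse.
    const key = item.title.trim().toLowerCase();
    if (seen.has(key)) continue;
    seen.add(key);
    result.push(item);
  }
  return result;
}
```

Deduplicating by title is what the post describes; if two different memes could share a title, keying on `id` or `path` instead would avoid dropping one of them.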

Vector search finds semantically similar content:

```typescript
export const findSimilarMemes = async (description: string): Promise<Meme[]> => {
  const embedding = await generateEmbedding(description);

  const results = await memesIndex.query({
    vector: embedding,
    includeMetadata: true,
    topK: 60,
    includeVectors: false,
  });

  return results.map((result: any) => ({
    id: String(result.id),
    title: result.metadata?.title || '',
    description: result.metadata?.description || '',
    path: result.metadata?.path || '',
    embedding: [],
    similarity: result.score,
  }));
};
```

For the search results, we include both metadata (title and description) and the image path, allowing us to display rich results to the user.
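To make concrete what `memesIndex.query` returns with `topK: 60`, here is an illustrative exhaustive search over an in-memory list: score every stored vector by cosine similarity to the query and keep the `k` best. This is not how Upstash Vector works internally (a vector database uses an index designed to avoid scanning every vector), and the raw cosine values here are not necessarily on the same scale as the `score` field Upstash returns:

```typescript
// Illustration only: brute-force top-K cosine search over in-memory vectors.
interface StoredVector {
  id: string;
  vector: number[];
}

function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("dimension mismatch");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  // Treat zero vectors as dissimilar to everything instead of dividing by zero.
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Score every item, sort by descending similarity, and keep the k best.
function topK(query: number[], items: StoredVector[], k: number): { id: string; score: number }[] {
  return items
    .map((item) => ({ id: item.id, score: cosineSimilarity(query, item.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}
```

Because a query returns up to `topK` results regardless of how good they are, weak matches come back too; filtering on a minimum score is one option, though any cutoff would need tuning against real queries.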

Implementing Caching and Rate Limiting

To optimize performance and control API usage, we implemented caching and rate limiting using Upstash Redis.

Once we have the REST URL and token for our Redis database from the Upstash console, we can integrate it into our code:

Redis Caching

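```typescript
// Fragment from the search handler: `redis` is an @upstash/redis client, and
// `query`, `Meme`, and REDIS_CACHE_TTL (in seconds) are defined elsewhere.

// Create cache key
const cacheKey = query
  ? `q:${query.toLowerCase().replaceAll(" ", "_")}`
  : "all_memes";

// Check cache first
const cached = await redis.get<Meme[] | string>(cacheKey);
if (cached) {
  // @upstash/redis deserializes JSON automatically by default, so `cached` is
  // usually already an array; only parse when a raw string comes back.
  const memes = typeof cached === "string" ? (JSON.parse(cached) as Meme[]) : cached;
  return { memes };
}

// ... perform search ...

// Cache the results with an expiry
await redis.set(cacheKey, JSON.stringify(allMatches), { ex: REDIS_CACHE_TTL });
```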
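The caching logic above follows the cache-aside pattern: read from the cache, and only on a miss run the search and store its result with a TTL. The sketch below reproduces that flow with a hypothetical in-memory `TtlCache` standing in for Redis, so the hit, miss, and expiry behavior can be tested without a server. It is synchronous for simplicity, whereas the real code awaits both Redis and the search:

```typescript
// Hypothetical in-memory stand-in for Redis, used only to illustrate cache-aside.
class TtlCache<V> {
  private entries = new Map<string, { value: V; expiresAt: number }>();
  private now: () => number;

  // `now` returns milliseconds and is injectable so expiry can be tested.
  constructor(now: () => number = () => Date.now()) {
    this.now = now;
  }

  get(key: string): V | undefined {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // evict expired entries lazily on read
      return undefined;
    }
    return entry.value;
  }

  set(key: string, value: V, ttlSeconds: number): void {
    this.entries.set(key, { value, expiresAt: this.now() + ttlSeconds * 1000 });
  }
}

// Cache-aside: return the cached value on a hit; on a miss, compute, store, return.
function getOrCompute<V>(cache: TtlCache<V>, key: string, ttlSeconds: number, compute: () => V): V {
  const cached = cache.get(key);
  if (cached !== undefined) return cached;
  const fresh = compute();
  cache.set(key, fresh, ttlSeconds);
  return fresh;
}
```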

Rate Limiting with Redis Sorted Sets

Next, let's add rate limiting to prevent API abuse:

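```typescript
// Uses the same @upstash/redis client (`redis`) as the caching code.
const RATE_LIMIT = {
  window: 60, // window length in seconds (1 minute)
  requests: 10, // max requests per IP per window
};

async function checkRateLimit(ip: string): Promise<boolean> {
  const key = `ratelimit:${ip}`;
  const now = Math.floor(Date.now() / 1000);
  const windowStart = now - RATE_LIMIT.window;

  // Remove entries that fell out of the window
  await redis.zremrangebyscore(key, 0, windowStart);

  // Count requests still inside the window
  // (these calls are not atomic, so concurrent requests can slightly exceed the limit)
  const requestCount = await redis.zcount(key, windowStart, "+inf");

  // If under the limit, record this request and allow it
  if (requestCount < RATE_LIMIT.requests) {
    // Sorted-set members are unique: a bare timestamp would merge two requests
    // made in the same second into one entry, so append a random suffix.
    const member = `${now}-${Math.random().toString(36).slice(2)}`;
    await redis.zadd(key, { score: now, member });
    // Let keys for idle IPs expire instead of lingering forever
    await redis.expire(key, RATE_LIMIT.window);
    return true;
  }

  return false;
}
```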

Redis sorted sets provide an elegant and efficient way to implement a sliding window rate limiter. This approach offers several advantages:

- Accurate time-based expiration of old requests

- Efficient counting of requests within the current window

- Low memory usage even with high traffic
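The sorted-set logic can be modeled without Redis to see how the sliding window behaves. In this hypothetical in-memory `SlidingWindowLimiter`, an array of accepted-request timestamps per key plays the role of the sorted set: trimming old timestamps corresponds to `zremrangebyscore`, and the length check plus push correspond to `zcount` and `zadd`. It only works within a single process; the Redis version is what lets multiple server instances share one limit:

```typescript
// Hypothetical in-memory model of the sorted-set sliding-window limiter.
interface RateLimitConfig {
  windowSeconds: number;
  maxRequests: number;
}

class SlidingWindowLimiter {
  private log = new Map<string, number[]>();
  private config: RateLimitConfig;
  private now: () => number;

  // `now` returns seconds and is injectable for testing.
  constructor(config: RateLimitConfig, now: () => number = () => Date.now() / 1000) {
    this.config = config;
    this.now = now;
  }

  allow(key: string): boolean {
    const now = this.now();
    const windowStart = now - this.config.windowSeconds;
    // Like ZREMRANGEBYSCORE 0..windowStart: drop timestamps at or before the window start.
    const recent = (this.log.get(key) ?? []).filter((t) => t > windowStart);
    // Like ZCOUNT: reject once the window already holds maxRequests entries.
    if (recent.length >= this.config.maxRequests) {
      this.log.set(key, recent);
      return false;
    }
    // Like ZADD: record the accepted request.
    recent.push(now);
    this.log.set(key, recent);
    return true;
  }
}
```

One difference worth noting: an array can hold two identical timestamps, but a sorted set keeps only one copy of each member, so the Redis version needs a distinct member for every request or same-second requests will be undercounted.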

An Interesting Storage Choice: Vercel Blob

Vercel Blob streamlines file storage with effortless integration. Unlike a self-managed object store, setup requires just three steps:

- Create a project

- Connect Vercel Blob storage

- Start uploading files—no complex configurations.

Its intuitive dashboard simplifies storage management, offering real-time asset organization, usage analytics, and seamless scalability—all directly within Vercel’s ecosystem. Perfect for developers prioritizing speed and simplicity.

User Interface

Initially, I designed a visually dense UI with complex components. But then I realized, ‘Akif, this project needs a design that highlights simplicity!’ So I scaled back and created a cleaner, more minimalist interface built around three elements:

- A prominent search box at the top of the page

- A responsive grid layout for displaying meme results

- Suggested searches for new users who haven't entered a query

Closing Words

Building a semantic meme search engine with Upstash Vector and Redis was remarkably straightforward. The combination of these services provided all the tools we needed to create a fast, efficient, and user-friendly search experience.

Upstash Vector's features, such as metadata storage, efficient similarity search, and optional built-in embedding models (we used our own OpenAI embeddings here), made the process quite easy. Similarly, Upstash Redis provided powerful tools for caching and rate limiting with minimal effort.

We had a lot of fun with this project and believe that Upstash's services are well-suited for building AI-powered applications of all kinds. If you have any feedback about the project, feel free to reach out on GitHub. Let's work together to make it even better!
