👉🏻 A step-by-step guide to generating and refining product images from prompts in Amazon Bedrock.

Creating high-quality, realistic product images is crucial for industries like e-commerce, advertising, and digital content creation. With the generative AI models available in Amazon Bedrock, businesses can automate and enhance their image creation workflows, generating customized visuals quickly and efficiently.

Amazon Bedrock provides a user-friendly interface and access to multiple foundation models optimized for different generative AI tasks. Amazon Titan Image Generator G1 is designed to produce high-resolution, photorealistic images, with control over attributes such as texture, lighting, and product variations.

In this use case, we will explore how to generate an advertising image for a skincare product and then remove the product itself so that it could be replaced with another product. We will also create a variation of an existing image and use it as a marketing campaign poster.

Specifically, we will explore:

- The capabilities of the Amazon Titan Image Generator G1, including how it handles product image generation.

- How to invoke the model using the Amazon Bedrock runtime within a Jupyter Notebook.

1. Amazon Bedrock Image Generative Model

Titan Image Generator G1 is a versatile tool that allows users to create, edit, and customize images based on simple text descriptions. Whether you're designing marketing materials, product visuals, or social media content, this model makes the process faster and more efficient. [1]

Here are some key features and real-world examples of how you can use them:

- Generate Images from Text: For example, if a skincare brand needs a “luxury serum bottle on a marble countertop with soft lighting,” they can enter this prompt, and Titan will generate a high-quality image in seconds.

- Edit Existing Images: Upload an image and make changes without manual work. A business could upload a lipstick product photo and change its color from red to pink just by typing “make the lipstick soft pink.”

- Inpainting & Outpainting: This helps fill in missing parts of an image or expand it beyond its original borders. For instance, a beauty brand might want to extend the background of a product photo to fit different ad formats without reshooting the image.

- Generate Variations: Need multiple product shots with different styles? Titan can create several versions of an image with minor changes. A cosmetic company testing different packaging colors could quickly generate variations to see which looks best.

- Style Transfer: Users can apply styles from reference images. For example, a skincare brand inspired by vintage apothecary designs could upload an old-fashioned product label and transform a modern image into something with a classic, retro feel.

With Amazon Titan Image Generator G1, businesses and content creators can save time, reduce costs, and produce high-quality visuals without needing advanced design skills or expensive photo shoots.

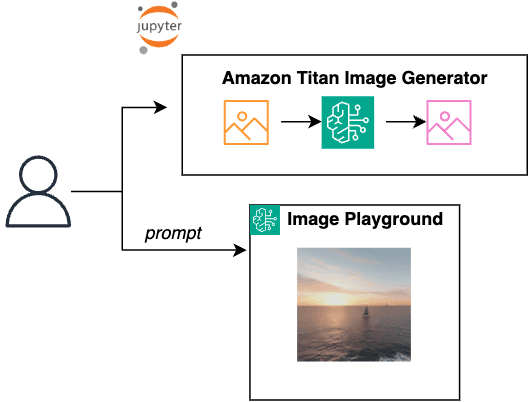

2. AWS tools and control flow

AWS Tools Used:

- Amazon Titan Image Generator: A generative AI model available in Amazon Bedrock that creates images based on text prompts.

- Jupyter Notebook: An interactive computing environment where users can write Python code to interact with AWS services, including Amazon Bedrock.

- Image Playground: A visualization tool where users can see the generated images and experiment with different styles, variations, and refinements.

Control Flow:

- User input (prompt entry):
  - The user provides a text prompt (e.g., “A skincare bottle with floral background”) to describe the desired image.

- Processing in Jupyter Notebook:
  - The user writes a script in Jupyter Notebook to call the Amazon Titan Image Generator API through the Amazon Bedrock runtime.
  - The Titan model processes the request and generates an image based on the text prompt.

- Amazon Titan image generation pipeline:
  - The model interprets the prompt and creates an image.
  - Depending on the requested task, it can apply other techniques (e.g., style transfer, variations, inpainting).
  - The final generated image is returned.

- Image Playground visualization:
  - The user can view and further refine the generated image in the Image Playground.
  - Additional modifications, such as adjusting styles or regenerating variations, can be applied here.

3. Step-by-Step Guide on Image Generation & Variation

3.1 Generation & Variation in Bedrock Playground

3.1.1 Generate image with prompts

In the AWS Management Console, open the Amazon Bedrock Image playground:

- Select Amazon as the model provider

- Select Titan Image Generator G1

- Click Apply

A configuration panel is also available in the image playground to configure parameters before sending the request to the model.

The following configurations are available for the Generate image action:

- Negative prompt: A prompt that describes what you do not want in the image.

- Orientation: Landscape or portrait orientation.

- Size: The resolution of the image.

- Number of images: The number of images to generate.

- Prompt strength: How closely the model should follow the prompt.

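- Seed: The initial noise value for generation. Reusing a seed with the same settings produces a similar image, while changing it produces a different image that still follows the prompt.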
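The playground settings above correspond to fields in the JSON request body accepted by Titan Image Generator G1. The sketch below builds a `TEXT_IMAGE` request in Python. The helper name `build_text_to_image_body` is hypothetical, and the mapping of playground labels to request fields (for example, Prompt strength to `cfgScale`) is our assumption based on the Titan request format, not something the playground itself documents.

```python
import json
from typing import Optional


def build_text_to_image_body(
    prompt: str,
    negative_prompt: Optional[str] = None,
    width: int = 512,
    height: int = 512,
    number_of_images: int = 1,
    cfg_scale: float = 8.0,
    seed: Optional[int] = None,
) -> str:
    """Build a TEXT_IMAGE request body for Titan Image Generator G1 (hypothetical helper).

    Assumed mapping of playground settings to request fields:
      Negative prompt  -> textToImageParams.negativeText
      Orientation/Size -> imageGenerationConfig.width / height
      Number of images -> imageGenerationConfig.numberOfImages
      Prompt strength  -> imageGenerationConfig.cfgScale
      Seed             -> imageGenerationConfig.seed
    """
    text_params = {"text": prompt}
    if negative_prompt:
        text_params["negativeText"] = negative_prompt

    config = {
        "numberOfImages": number_of_images,
        "width": width,
        "height": height,
        "cfgScale": cfg_scale,
    }
    # Only send a seed when one is given, so the model picks its own otherwise
    if seed is not None:
        config["seed"] = seed

    return json.dumps({
        "taskType": "TEXT_IMAGE",
        "textToImageParams": text_params,
        "imageGenerationConfig": config,
    })


# A 512 x 512, single-image request, matching the low-cost settings used in this guide
body = build_text_to_image_body(
    prompt="A sleek, modern skincare serum bottle on a marble countertop "
           "with soft lighting and a blurred background",
    negative_prompt="low resolution, cartoon",
    seed=42,
)
print(body)
```

Keeping the seed optional means you can pin it while iterating on a prompt and drop it once you want fresh results.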

For illustration and to keep costs low, we will start with a lower resolution of 512 x 512 and generate only one image.

In the prompt input box, provide the prompt for the product image that you want to generate.

In this scenario, we aim to create a picture of a skincare product against a soft, bright background.

💡 How to create a good prompt for image generation:

- Basic Product Display: "A sleek, modern skincare serum bottle on a marble countertop with soft lighting and a blurred background."

- Lifestyle Context: "A hand applying a moisturizing cream, with a natural, bright bathroom setting in the background."

- Minimalist Branding Shot: "A luxury perfume bottle placed in the center with a clean, white background and soft shadows."

- Multiple Product Variants: "Three lipsticks in different shades arranged diagonally on a glossy black surface, reflecting light."

- Seasonal & Thematic: "A festive holiday-themed packaging for a skincare set, surrounded by pinecones and warm golden lights."

- E-commerce Ready Images: "A neatly arranged flat lay of a beauty product lineup on a pastel pink background with even lighting."

- Texture & Close-up Shots: "A macro shot of a hydrating gel with water droplets, showing a fresh and dewy texture."
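The example prompts above share a structure: a subject, a setting, lighting, and a few style cues. A minimal sketch of composing prompts from those parts is shown below. The helper `compose_prompt` is hypothetical, and the 512-character limit is our understanding of the Titan Image Generator G1 prompt limit, so verify it against the model documentation.

```python
from typing import Iterable

# Assumed Titan Image Generator G1 prompt length limit; verify against the model docs.
MAX_PROMPT_CHARS = 512


def compose_prompt(subject: str, setting: str = "", lighting: str = "",
                   extras: Iterable[str] = ()) -> str:
    """Join non-empty prompt parts with commas and enforce the assumed length limit."""
    parts = [subject, setting, lighting, *extras]
    prompt = ", ".join(p.strip() for p in parts if p and p.strip())
    if len(prompt) > MAX_PROMPT_CHARS:
        raise ValueError(f"Prompt is {len(prompt)} characters; limit is {MAX_PROMPT_CHARS}")
    return prompt


print(compose_prompt(
    subject="A sleek, modern skincare serum bottle",
    setting="on a marble countertop",
    lighting="soft lighting",
    extras=["blurred background", "photo-realistic"],
))
# A sleek, modern skincare serum bottle, on a marble countertop, soft lighting, blurred background, photo-realistic
```

Building prompts from named parts makes it easy to swap one element (for example, the setting) while keeping the rest of a product shot consistent.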

3.1.2 Create image variations

Select the generated image, then open the Actions dropdown below the configuration panel. The following actions are available:

- Generate variations: Generate variations of the current image.

- Remove object: Remove an object from the image. Also referred to as inpainting.

- Replace background: Replace the background of the image.

- Replace object: Replace an object in the image with another object.
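Each playground action corresponds to a task type in the Titan Image Generator G1 API. The table-like sketch below records that correspondence in code. The mapping, especially Replace background to `OUTPAINTING`, is our understanding of how these actions relate to the API rather than something the playground states, and the helper `task_for_action` is hypothetical.

```python
# Assumed mapping from Image playground actions to Titan Image Generator G1
# (taskType, parameter object key). Not documented by the playground itself.
PLAYGROUND_ACTION_TO_TASK = {
    "Generate variations": ("IMAGE_VARIATION", "imageVariationParams"),
    "Remove object": ("INPAINTING", "inPaintingParams"),          # no replacement text
    "Replace object": ("INPAINTING", "inPaintingParams"),         # text describes the new object
    "Replace background": ("OUTPAINTING", "outPaintingParams"),   # mask marks what to keep
}


def task_for_action(action: str) -> tuple:
    """Return (taskType, params key) for a playground action name."""
    try:
        return PLAYGROUND_ACTION_TO_TASK[action]
    except KeyError:
        raise ValueError(f"Unknown action: {action!r}") from None


print(task_for_action("Remove object"))  # ('INPAINTING', 'inPaintingParams')
```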

Inpainting is a powerful technique for removing or replacing objects and backgrounds in an image. It works by intelligently filling in missing or unwanted areas, ensuring the new content blends naturally with its surroundings. The model analyzes the surrounding details and generates a seamless replacement, making it ideal for tasks like erasing distractions, swapping backgrounds, or restoring damaged images.

In our scenario, we will try to remove the skincare product. When removing an object from an image in the playground editor, you need to specify the area using either a mask selector or a text prompt.

By default, the mask selector covers the entire image, but you can adjust it by dragging any of the four corners to focus on the specific area you want to edit.

Alternatively, you can use a mask prompt by describing the object you want to remove, allowing the model to intelligently identify and erase it.
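Outside the playground, the same mask-prompt removal can be expressed as an `INPAINTING` request. The sketch below assumes the Titan G1 `inPaintingParams` fields `image`, `maskPrompt`, and an optional `text`; to our understanding, omitting `text` asks the model to fill the masked area with background, which is how an object is removed. The helper name and the placeholder image bytes are hypothetical.

```python
import base64
import json
from typing import Optional


def build_inpainting_body(image_bytes: bytes, mask_prompt: str,
                          text: Optional[str] = None) -> str:
    """Build an INPAINTING request that edits the region described by mask_prompt.

    Without `text`, the masked object should be replaced by background (removal);
    with `text`, the masked region should be replaced by the described content.
    """
    params = {
        "image": base64.b64encode(image_bytes).decode("utf8"),
        "maskPrompt": mask_prompt,
    }
    if text:
        params["text"] = text
    return json.dumps({
        "taskType": "INPAINTING",
        "inPaintingParams": params,
        "imageGenerationConfig": {"numberOfImages": 1},
    })


# Placeholder bytes; in practice, read the generated skincare image from disk.
body = build_inpainting_body(b"<image bytes>", mask_prompt="skincare bottle")
```

Passing `text="a glass jar of face cream"` to the same helper would turn the removal into an object replacement.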

After removal, we can review the image. Most of the skincare bottle has been removed, and the model has filled the masked area with flowers to match the background. The result is not perfect, though: remnants of the bottle are still visible.

3.2 Generation & Variation with the Bedrock Runtime in a Jupyter Notebook

3.2.1 Obtain AWS credentials

This notebook requires AWS credentials with access to the Amazon Bedrock runtime. The code in this section expects them in the variables ACCESS_KEY_ID, SECRET_ACCESS_KEY, and REGION.
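Rather than pasting keys into the notebook, you can read them from environment variables. The sketch below is one way to populate ACCESS_KEY_ID, SECRET_ACCESS_KEY, and REGION; the helper `load_aws_settings` is hypothetical, and the `us-east-1` fallback region is our assumption, so change it to a region where you have Titan model access. Note that boto3 can also pick up credentials from these environment variables on its own.

```python
import os
from typing import Mapping


def load_aws_settings(env: Mapping[str, str] = os.environ) -> tuple:
    """Return (access key ID, secret access key, region) read from environment variables."""
    missing = [k for k in ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY") if not env.get(k)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    # Fallback region is an assumption; pick one where the Titan model is enabled
    region = env.get("AWS_REGION") or env.get("AWS_DEFAULT_REGION") or "us-east-1"
    return env["AWS_ACCESS_KEY_ID"], env["AWS_SECRET_ACCESS_KEY"], region


# In the notebook:
# ACCESS_KEY_ID, SECRET_ACCESS_KEY, REGION = load_aws_settings()
```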

3.2.2 Import image and create Bedrock runtime

```python
from IPython.display import display
from PIL import Image

# Preview the reference image used for the variation
display(Image.open("people.jpg"))
```

The people.jpg image has been pre-uploaded for this use case.

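```python
import boto3

# Create a Bedrock runtime client with the credentials and region from section 3.2.1
bedrock = boto3.client(
    'bedrock-runtime',
    region_name=REGION,
    aws_access_key_id=ACCESS_KEY_ID,
    aws_secret_access_key=SECRET_ACCESS_KEY,
)
```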

The Amazon Bedrock runtime client is used to interact with the Amazon Titan Image Generator model. Its invoke_model method takes a JSON request body containing the inference parameters that specify how the image should be generated.

3.2.3 Invoke model

```python
import base64
import io
import json

from botocore.exceptions import ClientError


def generate_image(model_id, body):
    """Invoke a Titan image model on Bedrock and return the first image as bytes."""
    # Invoke the model
    response = bedrock.invoke_model(
        body=body,
        modelId=model_id,
        accept="application/json",
        contentType="application/json",
    )
    response_body = json.loads(response.get("body").read())

    # Check for a model-reported error before reading the image
    error = response_body.get("error")
    if error is not None:
        raise RuntimeError(f"Image generation error: {error}")

    # The image is returned as a base64-encoded string; decode it to raw bytes
    base64_image = response_body.get("images")[0]
    return base64.b64decode(base64_image.encode("ascii"))
```

The "people.jpg" image file is first read and encoded into base64 format, then decoded into a UTF-8 string. This resulting input_image string is used as part of the model inference parameters in the request body.

The model ID "amazon.titan-image-generator-v1" is defined, and the body JSON object contains the relevant inference parameters.

The taskType is set to IMAGE_VARIATION, with the imageVariationParams object containing the input_image string. The text parameter serves as the model's prompt, while the negativeText parameter indicates what the model should avoid generating, similar to its use in the Image Playground. The images parameter can accept between 1 and 5 reference images.

The similarityStrength parameter controls how closely the generated image should resemble the reference image; it accepts values from 0.2 to 1.0, and a lower value increases randomness in the output. In this example, similarityStrength is set to 1.0, the maximum, so the generated image stays as close as possible to the reference image.
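Because an out-of-range value only surfaces as an error from the service, it can help to check the variation parameters locally first. The sketch below checks the constraints described above (1 to 5 reference images, similarityStrength between 0.2 and 1.0). The helper name is hypothetical, and the 512-character text limit and the 0.7 default are our assumptions about Titan G1, so confirm them in the model documentation.

```python
def validate_variation_params(params: dict) -> None:
    """Raise ValueError if imageVariationParams fall outside the expected ranges."""
    images = params.get("images", [])
    if not 1 <= len(images) <= 5:
        raise ValueError("images must contain between 1 and 5 reference images")
    strength = params.get("similarityStrength", 0.7)  # assumed service default
    if not 0.2 <= strength <= 1.0:
        raise ValueError("similarityStrength must be between 0.2 and 1.0")
    if len(params.get("text", "")) > 512:  # assumed prompt length limit
        raise ValueError("text must be at most 512 characters")


# The values used in this example pass the checks
validate_variation_params({
    "text": "Generate a variation of the image with three different people",
    "images": ["<base64 image>"],
    "similarityStrength": 1.0,
})
```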

```python
# Read the reference image and encode it as a base64 string
with open("people.jpg", "rb") as image_file:
    input_image = base64.b64encode(image_file.read()).decode('utf8')

model_id = 'amazon.titan-image-generator-v1'

body = json.dumps({
    "taskType": "IMAGE_VARIATION",
    "imageVariationParams": {
        "text": "Generate a variation of the image with three different people, photo-realistic, 8k, hdr",
        "negativeText": "bad quality, low resolution, cartoon",
        "images": [input_image],
        "similarityStrength": 1.0,
    },
    "imageGenerationConfig": {
        "numberOfImages": 1,
        "height": 512,
        "width": 512,
        "cfgScale": 8.0,
    },
})
```

This code performs the following steps:

- Reading and encoding the image:
  - The code opens the file "people.jpg" in binary read mode (`"rb"`).
  - The contents of the image file are read and encoded into base64 format using the `base64.b64encode()` function.
  - The result is then decoded into a UTF-8 string with `.decode('utf8')` and stored in the variable `input_image`.

- Setting the model ID:
  - The variable `model_id` is assigned the string `'amazon.titan-image-generator-v1'`, which identifies the model used to generate the image variation.

- Building the request body:
  - The code serializes the request parameters into a JSON string (`body`) using `json.dumps()`, ready to send in the request to the model API.

```python
try:
    image_bytes = generate_image(model_id=model_id, body=body)
    display(Image.open(io.BytesIO(image_bytes)))
except ClientError as err:
    message = err.response["Error"]["Message"]
    print(f"A client error occurred: {message}")
```

Trying to generate and display the image:

- The `try` block attempts the following:
  - `generate_image()`: This function is called with the `model_id` and `body` parameters, which hold the model ID and the request body with the image generation details, respectively. It sends the request to Amazon Bedrock and returns the decoded image as bytes (`image_bytes`).
  - `Image.open(io.BytesIO(image_bytes))`: The returned `image_bytes` are passed to `io.BytesIO()`, which wraps the binary data in a file-like byte stream. The `Image.open()` function from the `PIL` library (Python Imaging Library) then opens the image from this stream, and `display()` renders it in the notebook.

- If the Bedrock call fails, the `except` block catches the botocore `ClientError` and prints the error message returned by the service.

In this use case, we can create many variations of the image to showcase products with consistent styles and tones, without a separate photo shoot for each product.
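To produce several variations in one call, you can raise `numberOfImages` in `imageGenerationConfig` (up to 5) and decode every entry of the `images` list instead of only the first. A minimal sketch, assuming the same response body format that `generate_image` reads; the helpers `decode_images` and `save_images`, the file naming, and the `.png` extension are our own assumptions.

```python
import base64
from pathlib import Path
from typing import List


def decode_images(response_body: dict) -> List[bytes]:
    """Decode every base64 image in a Titan response body; raise if it reports an error."""
    error = response_body.get("error")
    if error:
        raise RuntimeError(f"Image generation failed: {error}")
    return [base64.b64decode(img) for img in response_body.get("images", [])]


def save_images(images: List[bytes], prefix: str = "variation") -> List[Path]:
    """Write each image to <prefix>-<n>.png and return the written paths."""
    paths = []
    for n, data in enumerate(images, start=1):
        path = Path(f"{prefix}-{n}.png")
        path.write_bytes(data)
        paths.append(path)
    return paths


# Usage with the client above, after setting "numberOfImages": 3 in the body:
# response = bedrock.invoke_model(body=body, modelId=model_id,
#                                 accept="application/json", contentType="application/json")
# paths = save_images(decode_images(json.loads(response["body"].read())))
```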

4. Conclusion

In this use case, we have:

- Utilized Amazon Bedrock to access the Titan Image Generator G1 model for generating and editing images.

- Leveraged the Image Playground to experiment with and refine the image generation process through customizable prompts and parameters.

- Employed the Amazon Bedrock runtime to invoke the model from a Jupyter Notebook, streamlining the image generation workflow.

- Customized image outputs using image variation parameters such as the text prompt, negative text, and similarity strength, so that the generated images meet specific requirements.

References and further reading:

[1] Amazon Titan Image Generator G1 models overview. https://docs.aws.amazon.com/bedrock/latest/userguide/titan-image-models.html
