Once you have adequately separated deployment and release, the next step is to choose mechanisms for controlling the progressive release of features. It is essential to select a release strategy that lets you reduce risk in production, because no matter how much you test a new version of your API before release, the real test happens when you finally put it in front of customers.

We can reduce this risk by running a test or experiment on a small fraction of traffic and verifying the result. When the result is successful, the release is rolled out to all traffic. Some strategies suit certain scenarios better than others, and they require varying degrees of additional services and infrastructure. In this post, we will explore three popular API release strategies that rely on an API Gateway.

Why use an API gateway in API deployment

One benefit of moving to an API-based architecture is that we can iterate quickly and deploy new changes to our services. An API Gateway also establishes the concepts of traffic and routing for the modernized part of the architecture. It can provide stages, which let you run multiple deployed APIs behind the same gateway, and it supports in-place updates with no downtime. Using an API Gateway lets you leverage its many API management features, such as authentication, rate limiting (throttling), observability (important metrics for APIs), multiple API versions, and stage deployment management (deploying an API to multiple stages such as dev, test, stage, and prod).

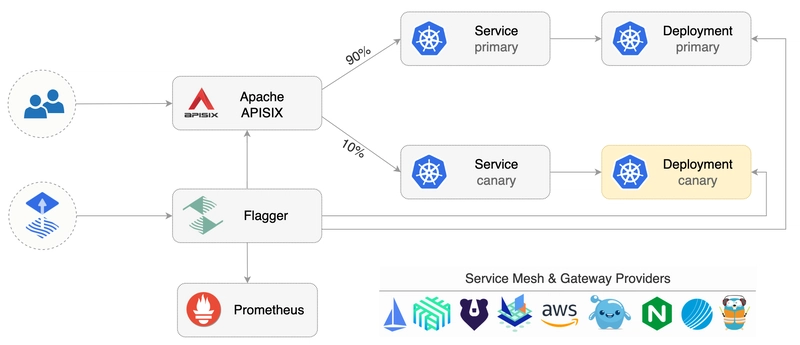

Open source API gateways (such as Apache APISIX and Traefik) and service meshes (such as Istio and Linkerd) can split traffic and implement functionality like canary releases and blue-green deployments. With canary testing, you can critically examine a new release of an API by exposing it to only a small portion of your user base. We will cover canary releases in the next section.

Canary release

A canary release introduces a new version of the API and routes a small percentage of the traffic to the canary. In API gateways, traffic splitting makes it possible to gradually shift or migrate traffic from one version of a target service to another. For example, a new version, v1.1, of a service can be deployed alongside the original, v1.0. Traffic shifting enables you to canary test or release your new service by initially routing only a small percentage of user traffic, say 1%, to v1.1, and then shifting all of your traffic to the new service over time.
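To make the percentage-based split concrete, here is a minimal Python sketch of how a gateway might pick an upstream for each request from per-version weights. The version names `v1.0` and `v1.1` come from the example above; the `pick_upstream` helper is hypothetical and is not an APISIX API, since real gateways do this inside their own load balancer.

```python
import random
from collections import Counter


def pick_upstream(weights: dict[str, int], rng: random.Random) -> str:
    """Pick an upstream with probability proportional to its weight.

    Hypothetical helper for illustration only; a real gateway performs
    this choice inside its balancer.
    """
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Canary step: send roughly 1% of requests to v1.1 and the rest to v1.0.
canary_weights = {"v1.0": 99, "v1.1": 1}
rng = random.Random(42)  # seeded so the simulation is reproducible
counts = Counter(pick_upstream(canary_weights, rng) for _ in range(100_000))
print(counts["v1.1"] / 100_000)  # close to 0.01
```

Shifting traffic over time then amounts to updating the weights, for example to 90/10, then 50/50, and finally 0/100.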

Shifting traffic gradually allows you to monitor the new service, look for technical problems such as increased latency or error rates, and look for the desired business impact, such as an increase in key performance indicators like customer conversion ratio or average shopping checkout value. Traffic splitting also enables you to run A/B or multivariate tests by dividing traffic destined for a target service between multiple versions of the service. For example, you can split traffic 50/50 across v1.0 and v1.1 of the target service and see which performs better over a specific period of time. Learn more about the traffic split feature in Apache APISIX Ingress Controller.
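For an A/B test, you usually want each user to see the same version on every request; otherwise the metrics for the two versions get mixed. One common approach, assumed here rather than taken from APISIX, is to hash a stable user identifier into 100 buckets and route by bucket. The `assign_variant` helper and the `user-N` identifiers are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, split_percent: int = 50) -> str:
    """Deterministically map a user to "v1.0" or "v1.1" (hypothetical helper).

    Users whose hash bucket (0-99) is below split_percent get v1.0;
    everyone else gets v1.1. A given user always lands in the same bucket.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "v1.0" if bucket < split_percent else "v1.1"


assignments = [assign_variant(f"user-{i}") for i in range(10_000)]
share_v11 = assignments.count("v1.1") / len(assignments)
print(share_v11)  # close to 0.5 for a uniform hash
```

Because the bucket depends only on the user ID, changing `split_percent` moves only the users near the boundary, which keeps most users on a consistent version while you adjust the split.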

Where appropriate, canary releases are an excellent option, as the percentage of traffic exposed to the canary is highly controlled. The trade-off is that the system must have good monitoring in place to be able to quickly identify an issue and roll back if necessary (which can be automated). This guide shows you how to use Apache APISIX and Flagger to quickly implement a canary release solution.
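The monitor-and-roll-back loop that tools like Flagger automate can be sketched as follows. This is a simplified illustration, not Flagger's actual algorithm: `set_canary_weight` and `canary_error_rate` are hypothetical callbacks standing in for the gateway's traffic-split configuration and your metrics system, and the step list and 1% error threshold are arbitrary example values.

```python
from typing import Callable, Sequence


def progressive_rollout(
    set_canary_weight: Callable[[int], None],   # hypothetical: updates the split
    canary_error_rate: Callable[[], float],     # hypothetical: reads metrics
    steps: Sequence[int] = (1, 5, 25, 50, 100),
    max_error_rate: float = 0.01,
) -> bool:
    """Raise the canary's traffic share step by step.

    After each step, check the canary's error rate. If it exceeds the
    threshold, send all traffic back to the stable version (weight 0)
    and return False. Return True if the canary reaches the last step.
    """
    for weight in steps:
        set_canary_weight(weight)
        # In practice you would wait for enough traffic before measuring.
        if canary_error_rate() > max_error_rate:
            set_canary_weight(0)  # automated rollback
            return False
    return True


# Simulated run: the canary starts failing once it receives 25% of traffic.
applied: list[int] = []
current = {"weight": 0}


def fake_set_weight(w: int) -> None:
    current["weight"] = w
    applied.append(w)


def fake_error_rate() -> float:
    return 0.05 if current["weight"] >= 25 else 0.001


ok = progressive_rollout(fake_set_weight, fake_error_rate)
print(ok, applied)  # False [1, 5, 25, 0]
```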

Traffic mirroring

In addition to using traffic splitting to run experiments, you can also use traffic mirroring to copy or duplicate traffic and send it to an additional location or series of locations. Frequently with traffic mirroring, the results of the duplicated requests are not returned to the calling service or end user. Instead, the responses are evaluated out-of-band, either for correctness (for example, comparing the results generated by a refactored service with those of the existing one) or for operational properties observed as the new service version handles the request, such as response latency or required CPU.
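The mirroring pattern described above, where the caller only ever sees the primary response and the duplicated request is evaluated out-of-band, can be sketched in Python with a background thread pool. `existing_service` and `refactored_service` are hypothetical stand-ins for the two services, and the `Mirror` class does not reflect how APISIX's proxy-mirror plugin is implemented.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


class Mirror:
    """Serve from the primary service; replay each request to a shadow copy.

    Shadow responses never reach the caller. They are compared with the
    primary response in the background, and mismatches or shadow errors
    are recorded for later analysis. (Hypothetical class for illustration.)
    """

    def __init__(self, primary: Callable[[str], str], shadow: Callable[[str], str]):
        self.primary = primary
        self.shadow = shadow
        self.mismatches: list[tuple[str, str, str]] = []
        self._lock = threading.Lock()
        self._pool = ThreadPoolExecutor(max_workers=4)

    def handle(self, request: str) -> str:
        response = self.primary(request)
        # Fire-and-forget: the caller does not wait for the shadow.
        self._pool.submit(self._compare, request, response)
        return response

    def _compare(self, request: str, expected: str) -> None:
        try:
            actual = self.shadow(request)
        except Exception as exc:  # shadow failures must not affect users
            actual = f"error: {exc}"
        if actual != expected:
            with self._lock:
                self.mismatches.append((request, expected, actual))

    def close(self) -> None:
        self._pool.shutdown(wait=True)  # wait for pending comparisons


def existing_service(req: str) -> str:
    return req.upper()


def refactored_service(req: str) -> str:
    if req == "boom":
        raise RuntimeError("unhandled input")
    return req.upper()


mirror = Mirror(existing_service, refactored_service)
responses = [mirror.handle(r) for r in ["a", "boom", "c"]]
mirror.close()
print(responses)          # ['A', 'BOOM', 'C']
print(mirror.mismatches)  # [('boom', 'BOOM', 'error: unhandled input')]
```

The user receives `BOOM` from the existing service even though the refactored one crashed; the crash only shows up in `mismatches`, which is the "dark release" property described next.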

Using traffic mirroring enables you to “dark release” services, where a user is kept in the dark about the new release but you can observe internally for the required effect.

Implementing traffic mirroring at the edge of systems has become increasingly popular over the years. APISIX offers the proxy-mirror plugin to mirror client requests. It copies real online traffic to a mirror service, which enables detailed analysis of that traffic or of the request content without interrupting the online service.

Blue-Green

Blue-green is usually implemented at a point in the architecture that uses a router, gateway, or load balancer, behind which sit a complete blue environment and a complete green environment. The blue environment represents the current live environment, and the green environment represents the next version of the stack. The green environment is checked before it receives live traffic, and at go-live the traffic is flipped over from blue to green. The blue environment is now “off”, but if a problem is spotted, rolling back is quick. The next change would go from green to blue, and the environments keep alternating from the first release onward.
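The blue-green switch can be modeled as a router that holds a pointer to the live environment: go-live and rollback are both just a flip of that pointer. The `BlueGreenRouter` class and the lambda handlers below are hypothetical illustrations; in a real setup, the flip would be a change to the gateway's or load balancer's upstream configuration.

```python
import threading
from typing import Callable

Handler = Callable[[str], str]


class BlueGreenRouter:
    """Route all traffic to whichever environment is live (hypothetical)."""

    def __init__(self, blue: Handler, green: Handler):
        self._envs = {"blue": blue, "green": green}
        self.live = "blue"
        self._lock = threading.Lock()

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def switch(self) -> str:
        """Flip live traffic to the idle environment (go-live or rollback)."""
        with self._lock:
            self.live = self.idle
            return self.live

    def handle(self, request: str) -> str:
        with self._lock:
            handler = self._envs[self.live]
        return handler(request)


router = BlueGreenRouter(blue=lambda r: f"v1:{r}", green=lambda r: f"v2:{r}")
print(router.handle("ping"))  # v1:ping (blue is live)
router.switch()               # go live with green
print(router.handle("ping"))  # v2:ping
router.switch()               # problem spotted: roll back to blue
print(router.handle("ping"))  # v1:ping
```

Because both environments stay deployed, rollback costs no more than go-live, which is also why blue-green needs double the resources.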

Blue-green works well because of its simplicity, and it is one of the better deployment options for coupled services. It also makes persistent services easier to manage, though you still need to be careful in the event of a rollback. However, it requires double the resources, because the idle environment must run in parallel with the currently active one.

Traffic management with Argo Rollouts

The strategies discussed add a lot of value, but the rollout itself is a task you would not want to manage manually. This is where a tool such as Argo Rollouts is valuable, and it demonstrates some of the concerns discussed in practice.

Using Argo, it is possible to define a Rollout custom resource, backed by a CRD (Custom Resource Definition), that represents the strategy for rolling out a new canary of your API. A CRD allows Argo to extend the Kubernetes API to support rollout behavior. CRDs are a popular pattern in Kubernetes, and they let users interact with a single API that is extended to support different features.

You can use Apache APISIX and the Apache APISIX Ingress Controller for traffic management with Argo Rollouts. This guide shows you how to integrate ApisixRoute with Argo Rollouts, using it as a weighted round-robin load balancer.
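A weighted round-robin balancer spreads requests across upstreams in proportion to their weights, but deterministically rather than randomly. Below is a sketch of one well-known variant, the smooth weighted round-robin algorithm used by Nginx. APISIX's own balancer may be implemented differently, and the `stable`/`canary` upstream names and the 3:1 weights (a canary step that sends 25% of traffic to the new version) are assumptions for illustration.

```python
class SmoothWeightedRoundRobin:
    """Smooth weighted round-robin, as used by Nginx.

    On each pick, every upstream's current weight grows by its configured
    weight; the largest current weight wins and is reduced by the total.
    This interleaves picks instead of sending bursts to one upstream.
    (Illustrative sketch; APISIX's balancer may differ.)
    """

    def __init__(self, weights: dict[str, int]):
        self.weights = dict(weights)
        self.current = {name: 0 for name in weights}
        self.total = sum(weights.values())

    def pick(self) -> str:
        for name, weight in self.weights.items():
            self.current[name] += weight
        # Ties go to the first upstream in insertion order.
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


# Hypothetical canary step: 25% to the canary means a 3:1 weight ratio.
balancer = SmoothWeightedRoundRobin({"stable": 3, "canary": 1})
sequence = [balancer.pick() for _ in range(8)]
print(sequence)
# ['stable', 'stable', 'canary', 'stable', 'stable', 'stable', 'canary', 'stable']
print(sequence.count("canary") / len(sequence))  # 0.25
```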

Summary

With the rise of Progressive Delivery, and the advanced requirements of Continuous Delivery before it, the ability to separate the deployment and release of a service (and its corresponding API) is a powerful technique. Canary releases, combined with API Gateway traffic splitting and mirroring features, can give your business a competitive advantage, both by mitigating the risk of a bad release and by helping you understand your customers’ requirements more effectively.

About the author

Visit my personal blog: www.iambobur.com
