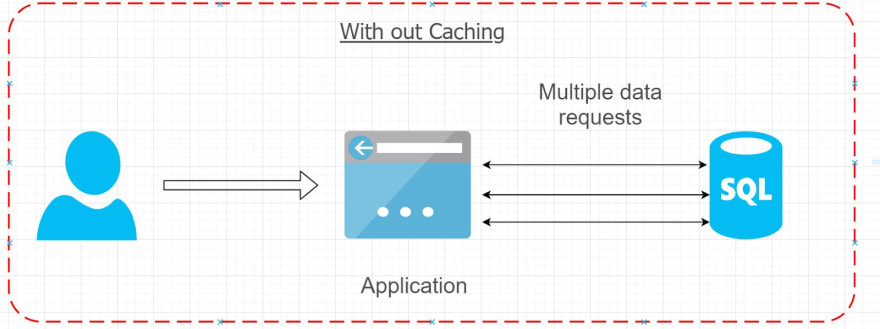

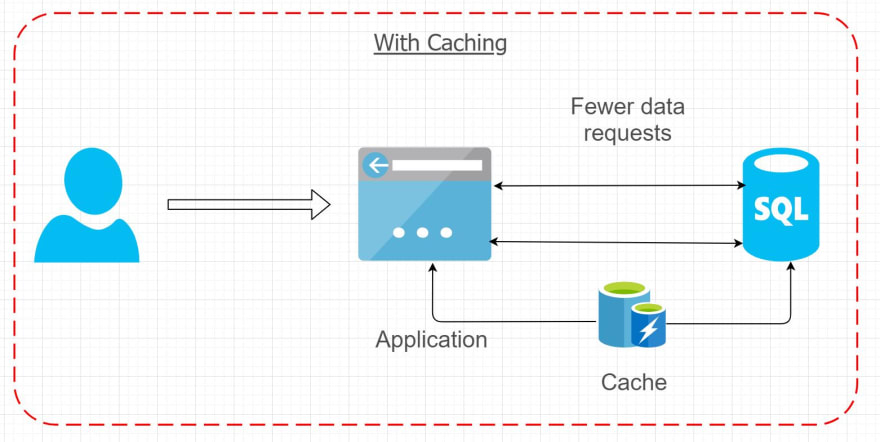

What is caching?: A cache is a subset of data that has been requested from a data source and stored, using one of various mechanisms, so that subsequent requests for that data are served faster than they would be by accessing the data source directly. The primary purpose of caching is to improve performance. The process of storing and accessing cached data is called caching.
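As a minimal, language-neutral sketch of this idea (written in Python for brevity), the snippet below keeps fetched values in a dictionary so that a repeated request never reaches the source. `DataSource`, `SimpleCache`, and the key `user:42` are hypothetical names used only for illustration.

```python
class DataSource:
    """Hypothetical slow data source (stands in for a database or remote API)."""

    def __init__(self):
        self.calls = 0  # how many times the source was actually queried

    def fetch(self, key):
        self.calls += 1
        return f"value-for-{key}"


class SimpleCache:
    """Keeps a subset of the source's data in a dictionary."""

    def __init__(self, source):
        self._source = source
        self._store = {}

    def get(self, key):
        if key in self._store:            # cache hit: the source is not contacted
            return self._store[key]
        value = self._source.fetch(key)   # cache miss: go to the source once
        self._store[key] = value
        return value


source = DataSource()
cache = SimpleCache(source)
first = cache.get("user:42")   # miss, fetched from the source
second = cache.get("user:42")  # hit, served from the cache
```

After both calls, `source.calls` is 1: the second request was answered entirely from the cache.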

Why caching?: The primary purpose of caching in mobile and web applications is to eliminate redundant requests to external data sources for data that rarely changes yet is requested often, that is, data with a high hit rate. More and more web and mobile applications implement caching, primarily for three reasons:

- Improved performance

- Better user experience

- Reduced load on the database and less network congestion

Caching in web applications: In web applications, the most basic level of caching is client-side web caching using the HTTP cache built into the browser. On the server side, the simplest form of cache is the In-Memory cache, which uses the memory of the web server. The other popular form of caching uses key-value stores such as Redis or Memcached. A key-value store can be configured or programmed within the application to cache content based on the use case. Caching is implemented so that cached data is kept for a defined duration and then invalidated. The next request then goes back to the data source to get refreshed data, which ensures that new data is brought in periodically in case the data changes.
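The time-based invalidation described above can be sketched as a small key-value cache with a time-to-live (TTL). This is an illustrative Python sketch, not how Redis or Memcached are implemented; `TtlCache`, `FakeClock`, and `load_price` are hypothetical names, and the manual clock exists only so that expiry can be shown without waiting.

```python
import time


class TtlCache:
    """Key-value cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock   # injectable so expiry can be shown without sleeping
        self._store = {}      # key -> (value, expires_at)

    def get_or_load(self, key, loader):
        entry = self._store.get(key)
        now = self._clock()
        if entry is not None and now < entry[1]:
            return entry[0]   # still fresh: serve the cached copy
        value = loader(key)   # missing or expired: request refreshed data
        self._store[key] = (value, now + self._ttl)
        return value


class FakeClock:
    """Hypothetical manual clock used only for this demonstration."""

    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now


clock = FakeClock()
loads = []  # records every trip to the (simulated) data source


def load_price(key):
    loads.append(key)
    return f"price-v{len(loads)}"


cache = TtlCache(ttl_seconds=60, clock=clock)
a = cache.get_or_load("sku-1", load_price)  # loaded from the source
clock.now = 30
b = cache.get_or_load("sku-1", load_price)  # within 60 s: cached copy
clock.now = 61
c = cache.get_or_load("sku-1", load_price)  # expired: reloaded from the source
```

Here `a` and `b` are the same cached value, while `c` is a refreshed one, so the source is contacted twice for three requests.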

What to Cache?: What to cache depends entirely on the business use case, but in general:

- Costly data retrieval operations are always a consideration when deciding the caching strategy.

- Data that does not change frequently, for example historical data sets for reports.

- Data that is unique to a user’s session.

Since caching is only a performance optimization technique, it is important to measure and compare data operations before implementing it. Caching may turn out to be unnecessary, and optimizing the code and the data retrieval process may be enough.
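One way to quantify an operation before committing to a cache is to time a representative workload with and without one. The Python sketch below assumes a hypothetical `slow_report` function (the `sleep` stands in for a slow query) and uses the standard library's `functools.lru_cache` as the cache under test; real measurements should use the application's actual data access code and traffic pattern.

```python
import time
from functools import lru_cache


def slow_report(year):
    """Hypothetical costly retrieval; the sleep stands in for a slow query."""
    time.sleep(0.01)
    return {"year": year, "total": year * 10}


# Same function wrapped in a cache, so both variants can be compared.
cached_report = lru_cache(maxsize=128)(slow_report)


def time_calls(func, args_list):
    """Return the total wall-clock seconds spent calling func for each argument."""
    start = time.perf_counter()
    for arg in args_list:
        func(arg)
    return time.perf_counter() - start


# A workload with repeated keys: 20 requests over only 2 distinct years.
workload = [2020, 2021] * 10

uncached_seconds = time_calls(slow_report, workload)
cached_seconds = time_calls(cached_report, workload)
hits = cached_report.cache_info().hits
misses = cached_report.cache_info().misses
print(f"uncached: {uncached_seconds:.3f}s, cached: {cached_seconds:.3f}s, "
      f"hits: {hits}, misses: {misses}")
```

If the hit count is low or the uncached time is already acceptable, the numbers suggest that a cache adds complexity without much benefit.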

In-Memory & Distributed caching:

In-Memory Caching: With In-Memory Caching, all cache objects are stored in the same instance as the application and share its memory. This type of cache is local to the application and is typically fast. It works well when the application has only one instance or is deployed to only one server, which is usually the case for applications that do not expect much traffic. In such scenarios In-Memory caching helps speed up data access.

For applications hosted on a web farm, where a group of servers works collectively in a load-balanced fashion to serve the application, In-Memory caching leads to cache inconsistency and defeats the purpose of caching. Every time a user logs into the application, the request hits one node and a copy of the cache specific to that user is created on that node. When the same user comes back, the request might hit a different node; the application sees that the cache is not present there and creates another copy on the current node. There are mechanisms that keep the cache consistent across all nodes and invalidate it on all nodes at the same time, but building such a mechanism is an overhead for the application in most cases.

In-Memory caching is often not the preferred choice in enterprise-grade applications with high traffic for performance reasons: the application and the cache share the same memory, so application owners must provision enough memory for the application to perform at an optimum level.
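The web farm problem can be illustrated with a small simulation. In this Python sketch, `Node`, the round-robin `load_balancer`, and the `database` dictionary are all hypothetical stand-ins; each node keeps its own private cache, as it would with In-Memory caching.

```python
import itertools


class Node:
    """One application server holding its own private in-memory cache."""

    def __init__(self, name, database):
        self.name = name
        self._database = database
        self.local_cache = {}

    def get_profile(self, user_id):
        if user_id not in self.local_cache:
            # Miss on this node, even if another node already cached the user.
            self.local_cache[user_id] = self._database[user_id]
        return self.local_cache[user_id]


database = {"alice": "plan=free"}        # hypothetical source of truth
nodes = [Node("node-a", database), Node("node-b", database)]
load_balancer = itertools.cycle(nodes)   # simple round-robin routing

first = next(load_balancer).get_profile("alice")   # node-a caches a copy
database["alice"] = "plan=pro"                      # the data changes at the source
second = next(load_balancer).get_profile("alice")  # node-b caches a newer copy
third = next(load_balancer).get_profile("alice")   # node-a still serves its old copy
```

The same user receives `plan=free`, then `plan=pro`, then `plan=free` again, depending only on which node handled the request. Both nodes also paid for their own cache miss.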

In-Memory Caching in .NET: In-Memory Caching can be implemented using the Microsoft.Extensions.Caching.Memory namespace by installing the corresponding NuGet package. Although System.Runtime.Caching is also available, Microsoft recommends the Microsoft.Extensions.Caching.Memory package because it works natively with ASP.NET Core dependency injection.

I have described in my other article how In-Memory Cache can be implemented in .NET Core using IMemoryCache, along with a sample application.

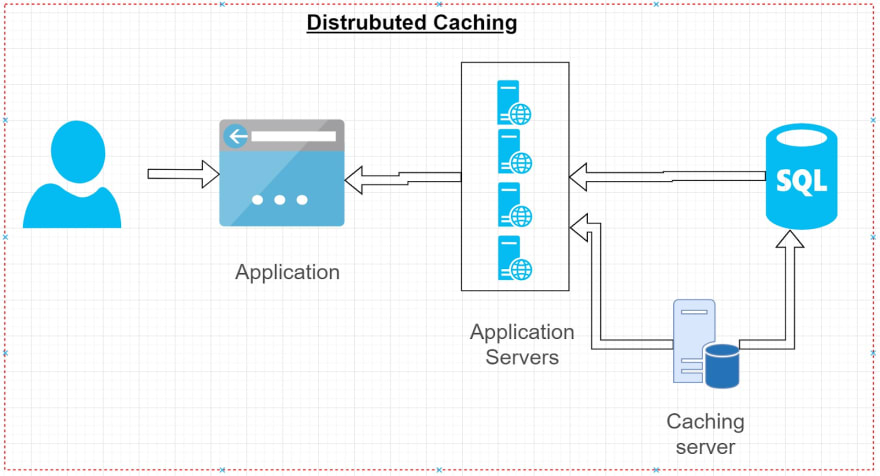

Distributed caching: A distributed cache is built on key-value pairs and is stored separately from where the application is hosted, with its own memory. Unlike In-Memory caching, cache inconsistency and shared memory are not concerns in this scenario. However, since the cached key-value pairs live on a different server outside the application, network latency and serialization are performance bottlenecks one might encounter. Cache server failure is also a consideration, but in mid- to large-scale enterprise-grade applications the caching servers are typically set up in a master-slave configuration to support failover. Distributed caching is often the preferred choice in enterprise-grade applications with high traffic, owing to performance and data consistency.
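A rough sketch of the distributed arrangement, again in Python: two application nodes share one cache that lives outside both of them. `SharedCacheServer` is a hypothetical in-process stand-in for a real cache server (no network is involved here), and it accepts only bytes to make the serialization step visible.

```python
import json


class SharedCacheServer:
    """In-process stand-in for an external cache server; stores only bytes."""

    def __init__(self):
        self._data = {}

    def set(self, key, payload):
        if not isinstance(payload, bytes):
            raise TypeError("values must be serialized to bytes before sending")
        self._data[key] = payload

    def get(self, key):
        return self._data.get(key)


class AppNode:
    """Application server that delegates caching to the shared server."""

    def __init__(self, cache_server, database):
        self._cache = cache_server
        self._database = database

    def get_profile(self, user_id):
        payload = self._cache.get(user_id)
        if payload is not None:
            # Every hit pays a deserialization cost.
            return json.loads(payload.decode("utf-8"))
        profile = self._database[user_id]
        # Every write pays a serialization cost.
        self._cache.set(user_id, json.dumps(profile).encode("utf-8"))
        return profile


database = {"alice": {"plan": "free"}}   # hypothetical source of truth
server = SharedCacheServer()
node_a = AppNode(server, database)
node_b = AppNode(server, database)

from_a = node_a.get_profile("alice")     # miss: loaded and stored centrally
database["alice"] = {"plan": "pro"}      # the source changes afterwards
from_b = node_b.get_profile("alice")     # hit on the shared entry written via node_a
```

Both nodes return the same value because they read the same entry. That entry still has to be expired or invalidated to pick up the change at the source, but it only has to happen in one place.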

Distributed Caching in .NET: Distributed cache configuration is implementation specific, as there are multiple solutions available. Some of the commonly used distributed caching solutions are:

- SQL Server distributed cache

- Redis

- Memcached

- NCache

In upcoming articles I will explain in more detail how they can be implemented.
