The race was already on, and I was late. Over a thousand people were ahead of me, with almost 50 teams having submitted completed projects. Yet this is a hackathon, and no one knows what might stand out till the end!

I stumbled onto the Postman API Hackathon a week after it had opened. Back in November 2020, Postman had released a new service called Public Workspaces, aimed at helping people collaborate on APIs. With Postman Galaxy, their annual conference, coming up on February 2nd-4th, they launched a hackathon whose results would be announced there.

The gauntlet was thrown down in the form of a challenge to “not just create a Postman public workspace with a Collection of APIs, but build something that is creative, has compelling value to developers, addresses a problem, or has community interest.” Alright, I thought, this is doable - I’ve spent late nights banging my head against the table trying to understand why something isn’t acting right.

Inspiration struck with a memory of Boss-Man Paul calling late at night, asking if a service was down. Nope, turned out he just had horrific internet. Yet this did bring me back to wondering if there was a better way to tell. Sure, the API is up for me and maybe for isitdownrightnow.com - but maybe the Dublin data center is experiencing issues that I’m not seeing!

Flexing my knuckles, imagining the crack, I dove into some code - created a GitHub repo, initialized a fresh AWS CDK project, and the hardest part… bought a snazzy domain name. And so, on January 15th, api-network.info was acquired!

Instantly I started running into issues, as my domain was not resolving no matter how much I refreshed my browser - so the first network tool was decided upon: time for nslookup to give me insights! This was familiar ground, thankfully: hustle some Node.js code into a Lambda and deploy it.

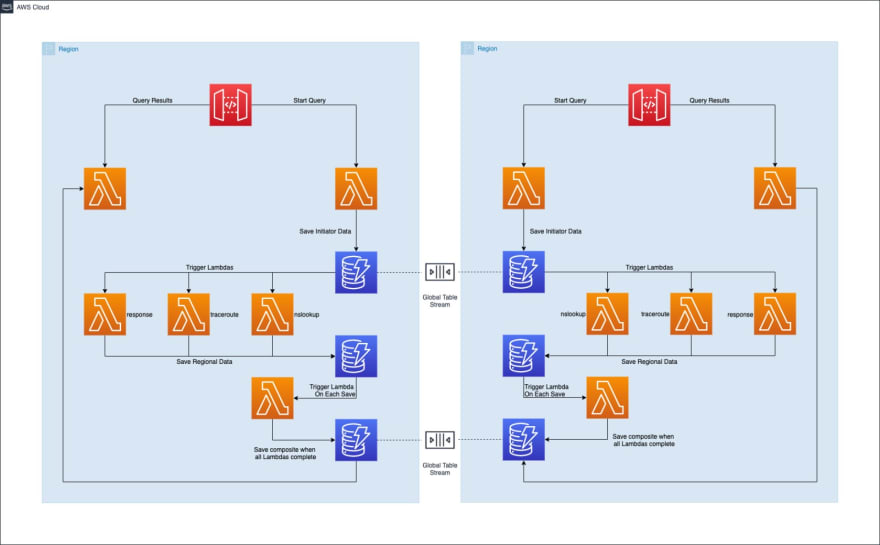
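
A minimal sketch of that kind of lookup Lambda might look like the following. It is written in Python rather than the Node.js used for the project, and the `lookup` helper, the injectable `resolver` parameter, and the `{"hostname": ...}` event shape are all assumptions made for illustration, not the project's actual code.

```python
import socket


def lookup(hostname, resolver=socket.getaddrinfo):
    """Resolve a hostname and return its unique IP addresses, sorted."""
    try:
        results = resolver(hostname, None)
    except socket.gaierror as err:
        # Resolution failed: report the error instead of crashing the Lambda.
        return {
            "hostname": hostname,
            "resolved": False,
            "error": str(err),
            "addresses": [],
        }
    # Each result is (family, type, proto, canonname, sockaddr);
    # the IP address is the first element of sockaddr.
    addresses = sorted({info[4][0] for info in results})
    return {"hostname": hostname, "resolved": True, "addresses": addresses}


def handler(event, context):
    """Lambda entry point; assumes an event like {"hostname": "example.com"}."""
    return lookup(event["hostname"])
```

Passing the resolver in as a parameter is only there so the function can be exercised without real DNS; in a deployed function the default `socket.getaddrinfo` would do the work.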

Yet having a single region tell me its status wasn’t very enlightening - so I needed to think broader, multi-region broad. I went down several paths trying to figure out a good way to coordinate my requests, both within a region and across them. The final solution I landed on was DynamoDB Global Tables, which automatically replicate data across regions and allowed me to set up triggers within each region that fire on any change to that data!
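
To make the trigger idea concrete, here is a rough sketch of what a stream-triggered function in each region could do with the event it receives. It is in Python rather than the project's Node.js, and the `target` attribute name and the `new_requests` helper are hypothetical. It also assumes the stream is configured to include new item images.

```python
def new_requests(event):
    """Return the targets from newly inserted items in a DynamoDB stream event."""
    targets = []
    for record in event.get("Records", []):
        # Only brand-new items start a check; MODIFY and REMOVE events are skipped.
        if record.get("eventName") != "INSERT":
            continue
        image = record["dynamodb"].get("NewImage", {})
        # Stream images use DynamoDB's typed JSON,
        # e.g. {"target": {"S": "example.com"}}.
        target = image.get("target", {}).get("S")
        if target:
            targets.append(target)
    return targets
```

Because the table is replicated, one write in any region ends up as a stream record in every region, so each regional function would see the same new item and could run its own checks against it.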

This sounded perfect - I could add more regions as I needed them! Back to the true work so, adding more network context than I would have a clue what to do with. Utilizing the newly released Lambda Docker containers, I was even able to run some OS-level commands, like traceroute and dig. I assembled my core group of 5 commands and was ready to go global!
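
Running an OS-level tool from inside a function can be sketched roughly like this. The example is Python rather than the project's Node.js, only covers the two tools named above, and the `ALLOWED` table, `build_command`, and `run_tool` are hypothetical names. It assumes the `dig` and `traceroute` binaries have been installed in the container image.

```python
import re
import subprocess

# Fixed argument lists per tool, so callers can only pick a tool by name.
ALLOWED = {
    "dig": ["dig", "+short"],
    "traceroute": ["traceroute", "-n"],
}

# Conservative hostname check: letters, digits, dots and hyphens only,
# and never starting with "-" (which would be read as an option).
HOSTNAME = re.compile(r"[A-Za-z0-9]([A-Za-z0-9.-]{0,251}[A-Za-z0-9])?")


def build_command(tool, hostname):
    """Build the argument list for an allowed tool and a validated hostname."""
    if tool not in ALLOWED:
        raise ValueError(f"unsupported tool: {tool}")
    if not HOSTNAME.fullmatch(hostname):
        raise ValueError(f"invalid hostname: {hostname}")
    return ALLOWED[tool] + [hostname]


def run_tool(tool, hostname, timeout=20):
    """Run the tool without a shell and return its exit code and output."""
    result = subprocess.run(
        build_command(tool, hostname),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=False,
    )
    return {
        "tool": tool,
        "exit_code": result.returncode,
        "stdout": result.stdout,
        "stderr": result.stderr,
    }
```

Passing an argument list instead of a shell string, plus the hostname check, is a deliberate choice here: the hostname comes from an API caller, so it should never be interpreted by a shell.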

At this point, I ran straight into a wall. The perfect solution utilizing Global Tables and Triggers came back to bite me with a vengeance. It turns out that Global Tables are built in a single region, and that region then builds the replicas in the other regions. My original code, which simply duplicated my whole stack in each region, was thrown a curveball - now I needed to build my global tables first, and then loop over all the regions to add my app stack.

Not too big of a change… until I realized that the DynamoDB streams that the Lambdas trigger from are systematically named. With a timestamp. The primary region that built out the Global Tables had no way of telling me what the Stream ARN would be in Hong Kong; the only way to find it was to describe the table IN Hong Kong. Dr. Frankenstein would be disgusted (at least the CDK team would be) at my abomination as I started calling the AWS SDK within the CDK construct.
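
The lookup itself is simple once you accept making it: describe the table in each region and read back the stream ARN. Below is a hedged sketch in Python (the project did this with the AWS SDK inside a CDK construct, so this is not that code). The `latest_stream_arns` helper and the `client_factory` parameter are hypothetical; the factory is assumed to behave like `boto3.client`.

```python
def latest_stream_arns(table_name, regions, client_factory):
    """Map each region to the current stream ARN of its table replica."""
    arns = {}
    for region in regions:
        # The ARN ends in a timestamp label, so it cannot be predicted from
        # another region; it has to be read from the replica itself.
        client = client_factory("dynamodb", region_name=region)
        table = client.describe_table(TableName=table_name)["Table"]
        arns[region] = table["LatestStreamArn"]
    return arns
```

With boto3 installed and credentials configured, a call such as `latest_stream_arns("my-table", ["eu-west-1", "ap-east-1"], boto3.client)` would return one ARN per region, where `my-table` is a placeholder table name.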

This is a hackathon though - please leave your good practices at the door.

Now that I had a multi-region API, I could finally start tackling the actual hackathon challenge - building out a Public Workspace. Postman had provided links to several example collections to inspire folks, which led me to a big facepalm. Despite having used the application for years, I had never known you could visualize responses. From basic HTML to full-on Bootstrap, to D3.js charts. The quantity of data I was returning was too much to parse manually, so this was the perfect solution: building out charts that highlighted different aspects!

Over the next few days, I added data, massaged it, and worked it into several charts that started to give me hope that the project made some sense. From simple bar charts, I stepped up to plotting out the traceroutes on a map, and then to designing a region reconciliation report on DNS or Requests.

The final hours were drawing to a close. It had been multiple days of late nights, tearing the infrastructure down and rebuilding it, and making decisions that I would go on to completely regret, with no time to go back and change them. It was the last day.

Five hours to go, no worries. Code is basically locked in now. Just one last part to do - a video demo. How hard can this be? Open up Photo Booth to record me, and start recording my screen.

Three hours later I just don’t care anymore - stumble over the script, sipping a can of Guinness that is slowly warming. Don’t know when I opened it. Edit the videos together and stare at the upload bar on YouTube. The hackathon site was about to start the 60-minute countdown. If there was an issue at this stage, it might require something stronger than Guinness to power me through to the end.

Yet it succeeded.

The video was uploaded, linked, and submitted with the project.

Time for some much-needed sleep, and fruitlessly thinking that for the next hackathon I’ll be better prepared. Time will tell.

Resources

- Postman Public Workspace

- API-Network.info

- API Key Management

- Multi Region Code

- Demo API to test against Code

- API Key Management Code

Follow-up

I'll post some technical blogs later, diving into some of the problems I encountered, from deploying custom Lambda Docker containers to managing multi-region API Keys.
