“The more I learn, the more I realize how much I don't know.” ― Albert Einstein

Did you know that you can use the DeepSeek R1 Distilled variants without setting up any infrastructure or servers? Amazon Bedrock makes this possible! Not only can you invoke a DeepSeek R1 Distilled model from the Bedrock Playground, but you can also integrate it with other serverless services such as Lambda and API Gateway, and build the whole solution with AWS SAM.

Let's bring it all together in this article!

In this article, I will explain how to use Amazon Bedrock to import a DeepSeek R1 Distilled model and then invoke it using a REST API and a Lambda function.

I will use the DeepSeek-R1-Distill-Llama-8B model for this solution. It is one of the six distilled models provided for DeepSeek R1.

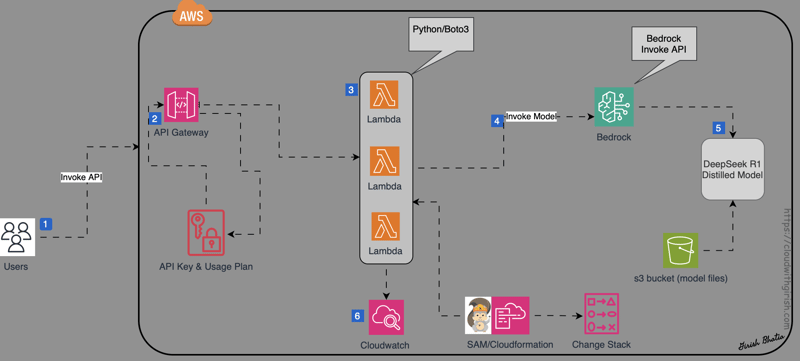

Let's look at the architecture diagram!

Introduction to Amazon Bedrock

Amazon Bedrock is a fully managed service that provides access to a variety of foundation models, including Anthropic Claude, AI21 Jurassic-2, Stability AI, Amazon Titan, and others.

As a serverless offering from Amazon, Bedrock enables seamless integration with popular foundation models (FMs). It also allows you to privately customize these models with your own data using AWS tools, eliminating the need to manage any infrastructure.

Additionally, Bedrock supports the import of custom models.

In this article, I will demonstrate how to use this feature by importing the DeepSeek R1 Distilled model into Amazon Bedrock.

Overview of DeepSeek R1 Distilled Models

DeepSeek R1 offers six distilled models ranging from 1.5B to 70B parameters. Their key benefit is lower computational requirements while still delivering high performance, which makes them well suited for local hosting and serverless use cases.

For this exercise, I will use one of these six distilled models: DeepSeek-R1-Distill-Llama-8B.

Download the DeepSeek R1 Distilled Model

I will download the DeepSeek R1 Distilled model from Hugging Face. If you are not familiar with Hugging Face, it is an open source community that hosts repositories for ML/LLM models.

Although it is distilled, the model is still large, so I will first install Git LFS.

Command: brew install git-lfs

Initialize/verify once installed:

Command: git lfs install

Next, I will clone the repository for the distilled model I chose from the six that DeepSeek R1 provides.

Command: git clone https://huggingface.co/deepseek-ai/DeepSeek-R1-Distill-Llama-8B
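If Git LFS was not initialized before cloning, the weight files can end up as small text stubs instead of the real weights. The sketch below is one way to check for that before uploading anything. It assumes the weights are stored as `*.safetensors` files, and `find_lfs_pointers` is a hypothetical helper name, not part of any tool used in this article.

```python
from pathlib import Path

# Git LFS pointer files are small text stubs that begin with this line.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def find_lfs_pointers(model_dir: str) -> list[str]:
    """Return weight files under model_dir that are still LFS pointer stubs."""
    pointers = []
    # Assumption: the model weights are stored as *.safetensors files.
    for path in sorted(Path(model_dir).rglob("*.safetensors")):
        with path.open("rb") as handle:
            head = handle.read(len(LFS_POINTER_PREFIX))
        if head == LFS_POINTER_PREFIX:
            pointers.append(path.relative_to(model_dir).as_posix())
    return pointers
```

Calling `find_lfs_pointers("./DeepSeek-R1-Distill-Llama-8B")` should return an empty list once the clone has pulled the real weight files.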

Create an S3 Bucket and Upload the DeepSeek R1 Distilled Model

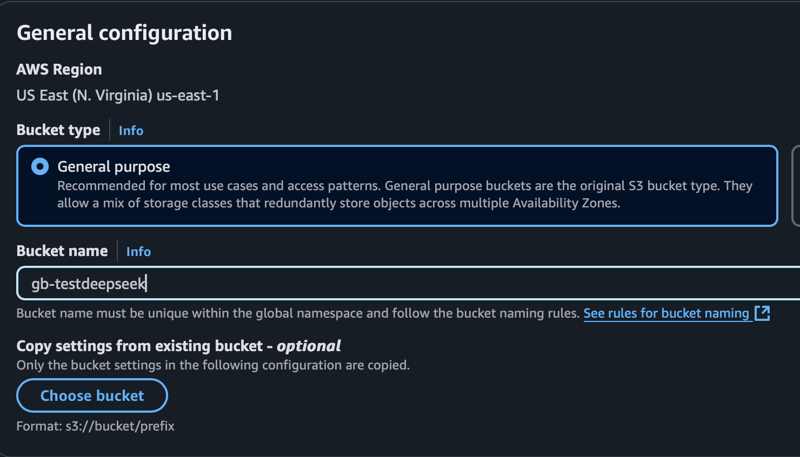

I will create an S3 bucket using the AWS Console.

Log in to the AWS Management Console and navigate to the S3 service.

Create an S3 bucket as below:

Bucket Name: gb-testdeepseek, or another name of your choice. S3 bucket names must be globally unique.

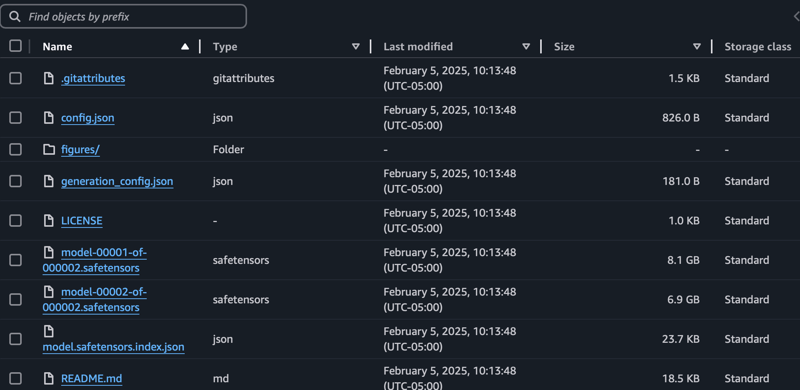

Once the S3 bucket is created, I will upload the DeepSeek R1 distilled model to this bucket.

There are multiple ways to upload files into an S3 bucket, such as the AWS Console, an SDK, the AWS CLI, or AWS DataSync. I will use an AWS CLI command to upload the model into the S3 bucket.

Command: aws s3 cp ./DeepSeek-R1-Distill-Llama-8B s3://gb-testdeepseek --recursive --region us-east-1 --exclude ".git/*" --exclude ".DS_Store"

It may take up to 30 minutes to upload the model files into the S3 bucket, depending on your computer and internet speed.
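The same upload can also be done through the SDK. The following is a minimal sketch using boto3, assuming AWS credentials are already configured; `files_to_upload` and `upload_model` are hypothetical helper names, and the exclude patterns simply mirror the ones in the CLI command.

```python
import fnmatch
from pathlib import Path

# Same patterns as the --exclude flags in the CLI command.
EXCLUDES = (".git/*", ".DS_Store")


def files_to_upload(model_dir, excludes=EXCLUDES):
    """Yield (local_path, s3_key) pairs, skipping excluded patterns."""
    root = Path(model_dir)
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        key = path.relative_to(root).as_posix()
        if any(fnmatch.fnmatch(key, pattern) for pattern in excludes):
            continue
        yield path, key


def upload_model(model_dir, bucket, region="us-east-1"):
    """Upload every selected file; needs boto3 and AWS credentials."""
    import boto3  # imported lazily so files_to_upload works without it

    s3 = boto3.client("s3", region_name=region)
    for path, key in files_to_upload(model_dir):
        s3.upload_file(str(path), bucket, key)
```

For example, `upload_model("./DeepSeek-R1-Distill-Llama-8B", "gb-testdeepseek")` would place the model files at the root of the bucket, as the CLI command does.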

Using the AWS Console, navigate to your S3 bucket and confirm that the model has been uploaded to the bucket.

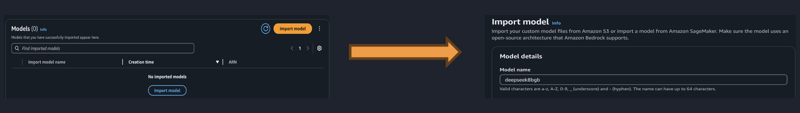

Import the Model to Amazon Bedrock

The model files are now in the S3 bucket. However, the model needs to be imported into Bedrock before it is ready for inference.

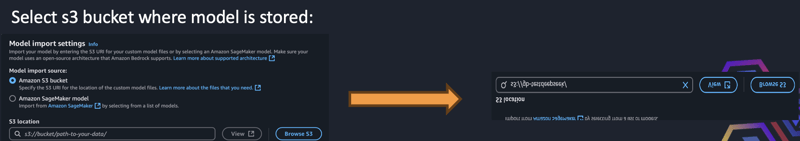

To import the model using the AWS Console, navigate to the Amazon Bedrock Console and select Import Model.

Provide a name for the imported model.

Select the S3 bucket where the model is stored.

Click on Import Model.

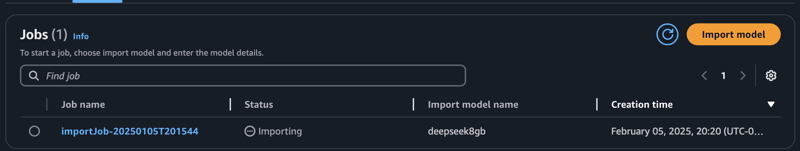

While the model is being imported, you will see the 'importing' message.

The import job may take 5-20 minutes to complete.

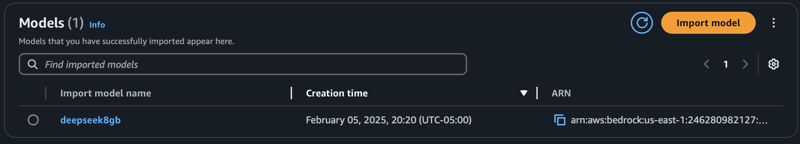

Once the import job is completed, you will see the model in the imported models list.

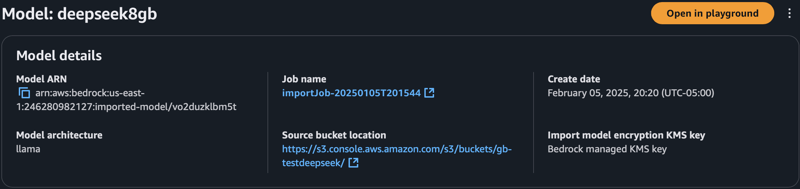

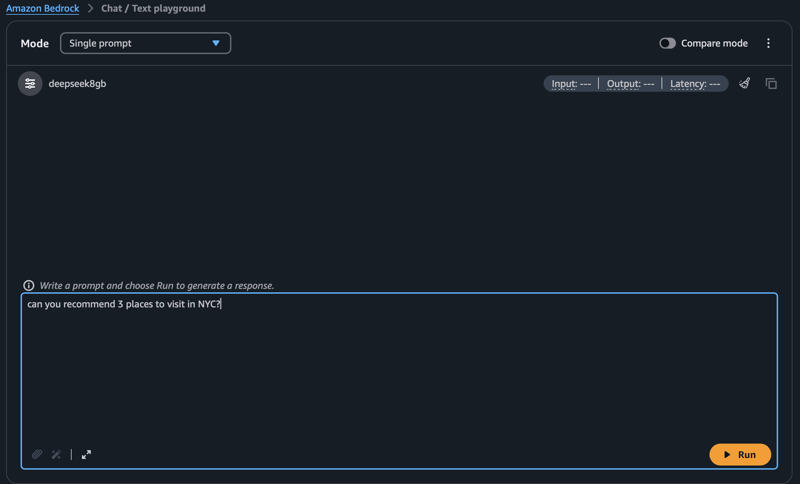

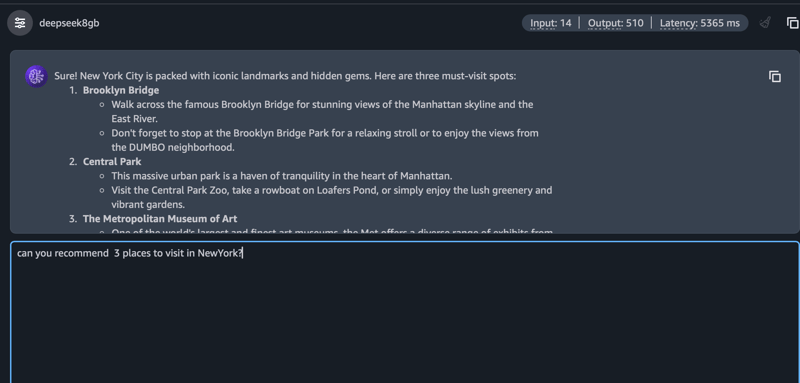
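The console steps above can also be scripted. The sketch below uses the boto3 `bedrock` client's `create_model_import_job` and `get_model_import_job` calls. It is only an illustration: the job name, model name, and IAM role ARN are placeholders you would supply yourself (the role must allow Bedrock to read the bucket), and `build_import_job_request` and `import_model` are hypothetical helper names.

```python
import time


def build_import_job_request(job_name, model_name, role_arn, s3_uri):
    """Build keyword arguments for bedrock.create_model_import_job."""
    return {
        "jobName": job_name,
        "importedModelName": model_name,
        "roleArn": role_arn,  # placeholder: a role Bedrock can assume
        "modelDataSource": {"s3DataSource": {"s3Uri": s3_uri}},
    }


def import_model(bedrock, request, poll_seconds=60):
    """Start the import job and poll until it leaves the InProgress state."""
    job_arn = bedrock.create_model_import_job(**request)["jobArn"]
    while True:
        job = bedrock.get_model_import_job(jobIdentifier=job_arn)
        if job["status"] != "InProgress":
            return job  # status is now Completed or Failed
        time.sleep(poll_seconds)
```

Here `bedrock` would be `boto3.client("bedrock", region_name="us-east-1")`, and the S3 URI would point at the bucket created earlier, for example `s3://gb-testdeepseek/`.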

Validate the Model Using Amazon Bedrock Playground

The model has been successfully imported, and now I can open it in the Bedrock Playground.

I can use the playground to issue a prompt. The model will act on this prompt and provide a response.

Let's review the response provided by the model.

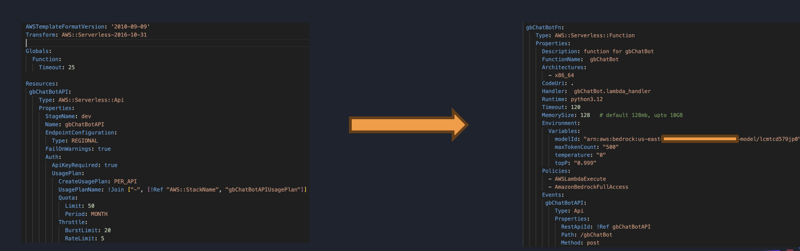

Build the Lambda Function/API Using AWS SAM

Let's build a Lambda function and a REST API using AWS SAM.

The API will invoke the Lambda function. The Lambda function contains the code to invoke Bedrock, and Bedrock will use the imported model to respond to the prompt.
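To make this flow concrete, here is a minimal sketch of what such a Lambda handler could look like. It is not the exact function used in this solution. It assumes the API passes a JSON body with a `prompt` field, that the imported model's ARN is supplied through a hypothetical `MODEL_ARN` environment variable, and that the model accepts a `{"prompt": ...}` request body. The optional `client` parameter exists only so the handler can be exercised without AWS access.

```python
import json
import os

# Hypothetical environment variable holding the imported model's ARN.
MODEL_ID = os.environ.get("MODEL_ARN", "")


def lambda_handler(event, context, client=None):
    """Read the prompt from the API event and forward it to Bedrock."""
    if client is None:
        import boto3  # available by default in the Lambda Python runtime

        client = boto3.client("bedrock-runtime")

    payload = json.loads(event.get("body") or "{}")
    prompt = payload.get("prompt", "")
    if not prompt:
        return {"statusCode": 400, "body": json.dumps({"error": "prompt is required"})}

    response = client.invoke_model(
        modelId=MODEL_ID,
        body=json.dumps({"prompt": prompt}),  # assumed request shape
        contentType="application/json",
        accept="application/json",
    )
    # Return the model's JSON response unchanged.
    result = json.loads(response["body"].read())
    return {"statusCode": 200, "body": json.dumps(result)}
```

Returning the model response as-is keeps the sketch independent of the exact response fields, which can differ by model architecture.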

When resources are built with an IaC approach via AWS SAM, all resources are defined in the template.yaml file. Here is how template.yaml looks for this solution:

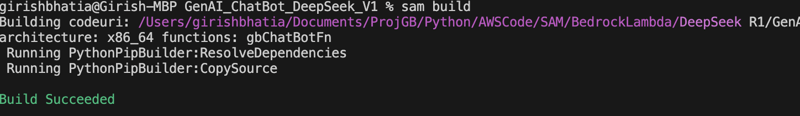

Build, Invoke and Deploy the Code Using AWS SAM

The AWS SAM command to build the code is sam build. Run it from the command prompt.

Command: sam build

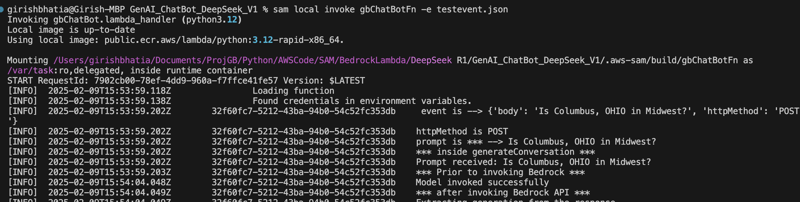

Once the code is built, the function can be invoked locally using the sam local invoke command. At this point, the code is not yet deployed in the AWS Cloud; AWS SAM provides the ability to run the function code locally.

Command: sam local invoke gbChatBotFn -e testevent.json

All logs from the function are printed, along with the output response from the model.
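The contents of testevent.json are not shown here, so the following is only a sketch of how such a file could be generated. It assumes the function reads a JSON `body` with a `prompt` field, in the style of an API Gateway proxy event; `make_test_event` and `write_test_event` are hypothetical helper names.

```python
import json


def make_test_event(prompt):
    """Build a minimal API Gateway style event carrying the prompt."""
    return {
        "httpMethod": "POST",
        "headers": {"Content-Type": "application/json"},
        # API Gateway proxy events deliver the request body as a string.
        "body": json.dumps({"prompt": prompt}),
    }


def write_test_event(path, prompt):
    """Write the event to a file that sam local invoke -e can read."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(make_test_event(prompt), handle, indent=2)
```

For example, `write_test_event("testevent.json", "Is Columbus, OHIO in Midwest?")` produces a file that can be passed to the sam local invoke command above.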

The function is working without errors and providing responses as designed, so let's deploy it in the AWS Cloud.

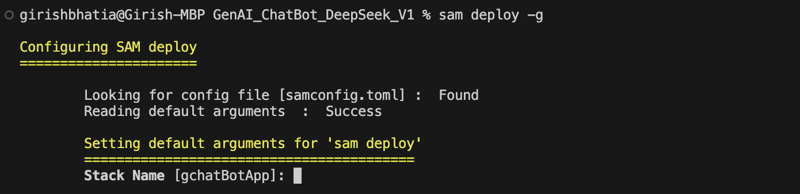

I will run the sam deploy command to deploy the code.

Command: sam deploy -g

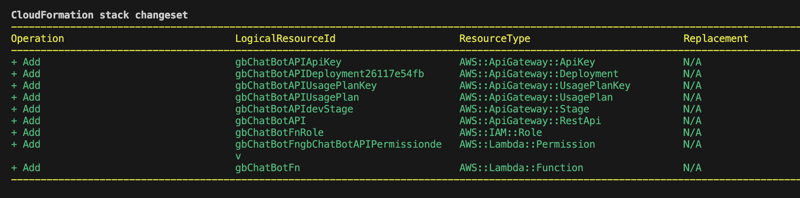

You will be shown the list of components in the change set and asked to confirm the deployment.

Once deployment is completed, you can use the deployed API endpoint to start invoking the function!

Invoke the Model with Amazon Bedrock via API/Lambda

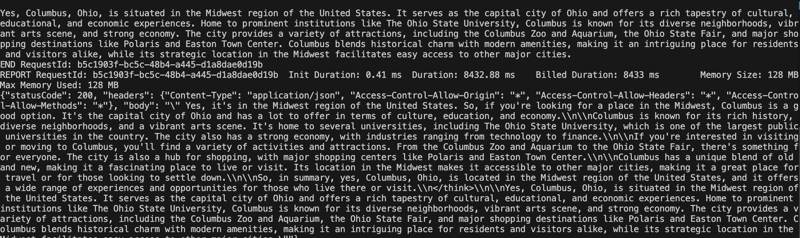

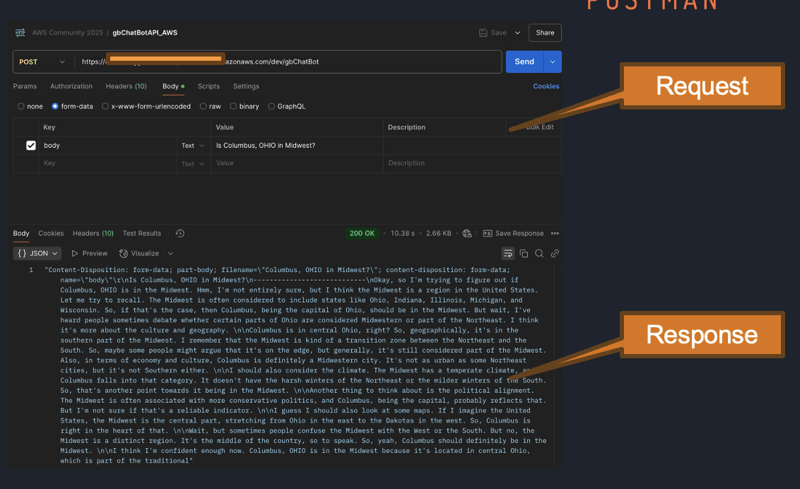

Now that I have the API endpoint, I will use Postman to invoke the API and pass the prompt for the model.

Prompt: Is Columbus, OHIO in Midwest?

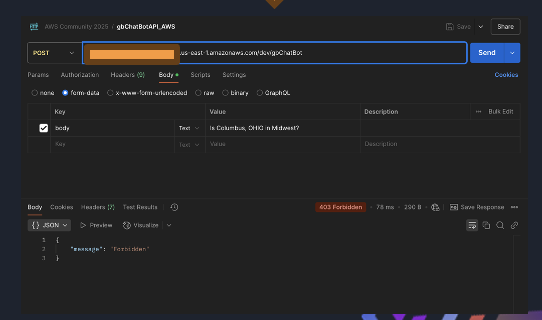

Remember, the API is secured by an API key, so if the key is not provided, a 403 Forbidden message will be displayed:

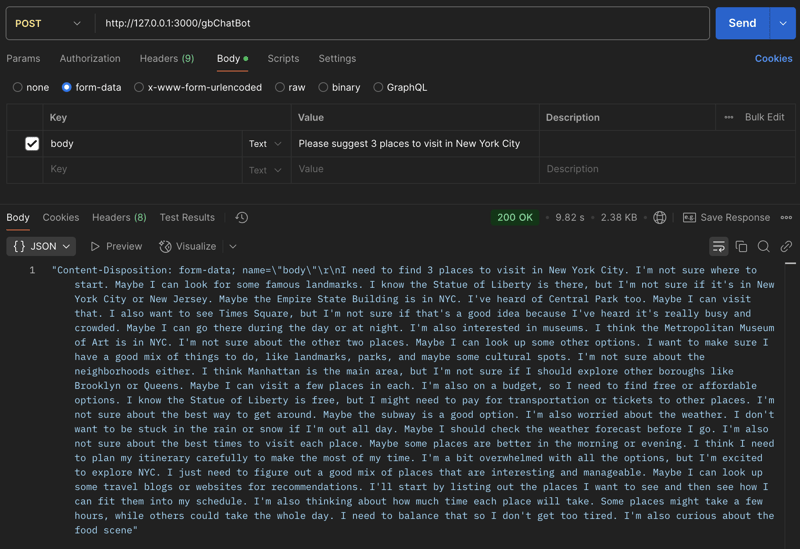

Prompt: Please suggest 3 places to visit in New York City
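The Postman calls above can be reproduced from code as well. This is a minimal sketch using only the Python standard library. It assumes the endpoint accepts a POST request with a JSON body containing a `prompt` field, and that the key is sent in the `x-api-key` header, which is the header API Gateway uses for API keys. The URL and key are placeholders, and `build_request` and `ask` are hypothetical helper names.

```python
import json
import urllib.request


def build_request(api_url, api_key, prompt):
    """Build the POST request, including the API key header."""
    data = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        api_url,
        data=data,
        method="POST",
        headers={"Content-Type": "application/json", "x-api-key": api_key},
    )


def ask(api_url, api_key, prompt, timeout=60):
    """Send the prompt to the deployed API and return the parsed JSON reply."""
    request = build_request(api_url, api_key, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())
```

If the key is missing or wrong, `urlopen` raises `urllib.error.HTTPError` with status 403, which matches the Forbidden message shown in Postman.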

Cleaning Up Resources

Once you are done with this exercise, make sure to delete all the resources you created so that they do not incur further charges.

There are multiple resources to delete for this exercise. Let's look at them one by one in the list below:

- Delete local model files to free up space

- Delete the model files from the S3 bucket

- Delete the S3 bucket

- Delete the imported model in Bedrock

- Delete the Lambda function and API
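The AWS-side steps in this list can be sketched with boto3 as follows. Treat it as an illustration under assumptions rather than a ready-made script: `cleanup` is a hypothetical helper, the model and stack names are placeholders, and it assumes the Lambda function and API were deployed as a single SAM (CloudFormation) stack. Deleting that stack is roughly what the `sam delete` command does. Local model files still need to be removed separately.

```python
def cleanup(s3, bedrock, cloudformation, bucket, model_name, stack_name):
    """Delete the bucket contents, the bucket, the model, and the SAM stack."""
    bucket_ref = s3.Bucket(bucket)
    bucket_ref.objects.all().delete()  # a bucket must be empty before deletion
    bucket_ref.delete()

    # Remove the custom model imported into Bedrock.
    bedrock.delete_imported_model(modelIdentifier=model_name)

    # Remove the Lambda function and API by deleting their stack.
    cloudformation.delete_stack(StackName=stack_name)
```

Here `s3` would be `boto3.resource("s3")`, `bedrock` would be `boto3.client("bedrock")`, and `cloudformation` would be `boto3.client("cloudformation")`, all created in the region used for this exercise.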

Conclusion

In this article, I demonstrated how to use a DeepSeek distilled model with Amazon Bedrock. I imported the custom model into Bedrock and invoked it using a REST API and a Lambda function integrated with the Amazon Bedrock service. Along the way, I used the AWS CLI, the AWS Management Console, and AWS SAM to build the solution, and Postman to validate the API.

I hope you found this article both helpful and informative!

Thank you for reading!


𝒢𝒾𝓇𝒾𝓈𝒽 ℬ𝒽𝒶𝓉𝒾𝒶

𝘈𝘞𝘚 𝘊𝘦𝘳𝘵𝘪𝘧𝘪𝘦𝘥 𝘚𝘰𝘭𝘶𝘵𝘪𝘰𝘯 𝘈𝘳𝘤𝘩𝘪𝘵𝘦𝘤𝘵 & 𝘋𝘦𝘷𝘦𝘭𝘰𝘱𝘦𝘳 𝘈𝘴𝘴𝘰𝘤𝘪𝘢𝘵𝘦

𝘊𝘭𝘰𝘶𝘥 𝘛𝘦𝘤𝘩𝘯𝘰𝘭𝘰𝘨𝘺 𝘌𝘯𝘵𝘩𝘶𝘴𝘪𝘢𝘴𝘵
