It is no secret that Codacy's underlying infrastructure relies heavily on AWS and, one of the services that we use most, is AWS's Relational Database Service (RDS) to host our Postgres databases.

In this blog post we'll describe our experience migrating a critical database with 18Tb of data from RDS to Aurora, with minimal downtime. Hopefully, this can be useful to you if you're dealing with a similar task. 😄

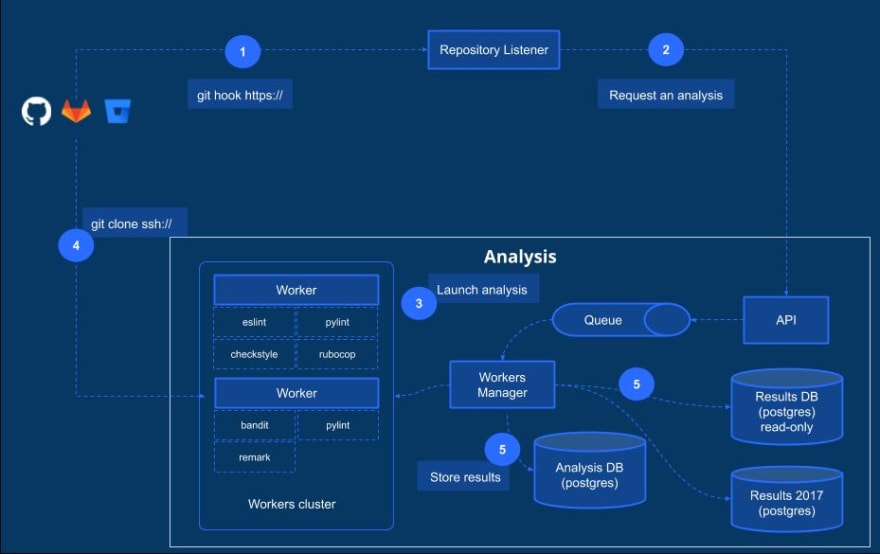

Analysis workflow, behind the scenes

Let's start with a brief description of the analysis process on Codacy, from the git providers (GitHub, Gitlab or Bitbucket) to the storage of results on the databases.

Whenever a commit or Pull/Merge request is done over a repository, it is detected on our side through the use of hooks [1]. Our internal component named "Repository Listener" is then responsible to request an analysis [2] which will be queued and picked by our "Workers Manager" that will launch the analysis over the repository [3]. The analysis will be done by the short-living "Workers" that clone the repository [4], execute multiple linters (eslint, pylint, checkstyle, etc.) and the data of the final results are then saved mostly in two different databases [5]: the "Results DB", which stores data related to the issues detected on the repositories, and the "Analysis DB", which stores data related to commits, pull requests, etc.

Data overload - Results DB

Codacy was born in 2012 and, by January 2017, the "Results DB" had approximately 6Tb of data, being the limit on postgres RDS at the time of 8Tb. Since we were having more and more analysis being done on Codacy, we were running out of time.

To solve this issue, by July 2017, a new database named "Results DB 2017" was created and new results started to be saved on this new database.

And here's a "funny" story: a few months later, AWS announced support for up to 16Tb of data on RDS, but that was already too late. 😥

Data overload - Analysis DB

Time goes by and, by September 2018, we have the same problem on the other database, the "Analysis DB". It had almost 14Tb of data, growing approximately 500Gb per month and the limit was 16Tb so, the math here is simple, we had 4 months to do something. In this case, because of the schema on this database, a simple solution similar to the one taken previously was almost impossible. 😨

Aurora

While researching for solutions to this problem, Aurora started to show up as a good candidate, since it automatically grows storage as needed, up to 64Tb. Among other benefits announced on Aurora's product page, the ease of migration from RDS and the performance benefits, caught our attention.

Regarding performance benefits, Aurora's official docs claimed up to a 3x increase in throughput performance over stock PostgreSQL 9.6 on similar hardware, but there were also testimonials that the performance increased 12x, just by migrating to Aurora.

Migration process

After deciding to go for Aurora, we started to investigate on how to do the migration with minimal downtime. We had 3 different options for it:

- Use a database snapshot from the original database and migrate it to Aurora. This option would require a huge downtime to complete (according to AWS support, 12 hours per Tb). 👎

- Use AWS Database Migration Service (DMS). This option, on the positive side, would not require any downtime during the migration and it would automatically upgrade our database from Postgres 9.4 to 9.6, which was a requirment since 9.6 is the oldest version compatible with Aurora. However, on the negative side, despite having no downtime, the whole process would still be very slow, since it would take around 12 hours per Tb and indexes would need to be recreated manually because DMS doesn't propagate them. This would be problematic since it could take a large amount of time to recreate the indexes for our biggest tables. 👎

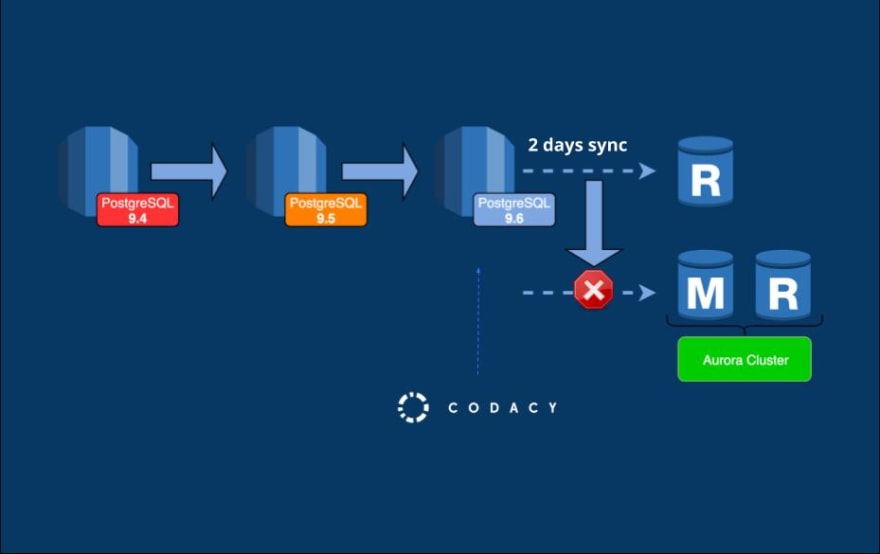

- Use an Aurora Read Replica. This looked the best solution to do the migration because it would require minimal downtime and, during dry runs, we verified that it was taking between 2 and 3 hours per TB, which was acceptable since we could leave Codacy running during that period on the old database, without having any impact. We would also need to upgrade manually the database to 9.6, but the process seemed to be easy, with minimal downtime. 👍

1st round

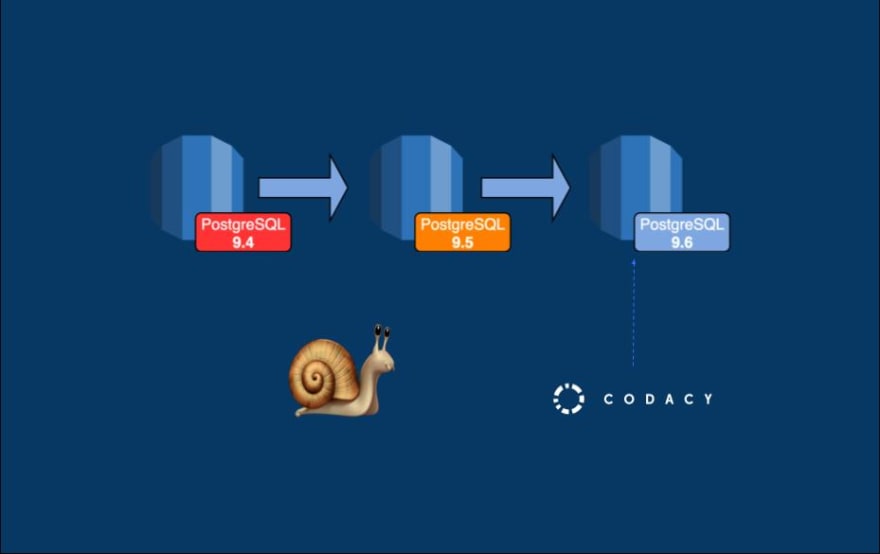

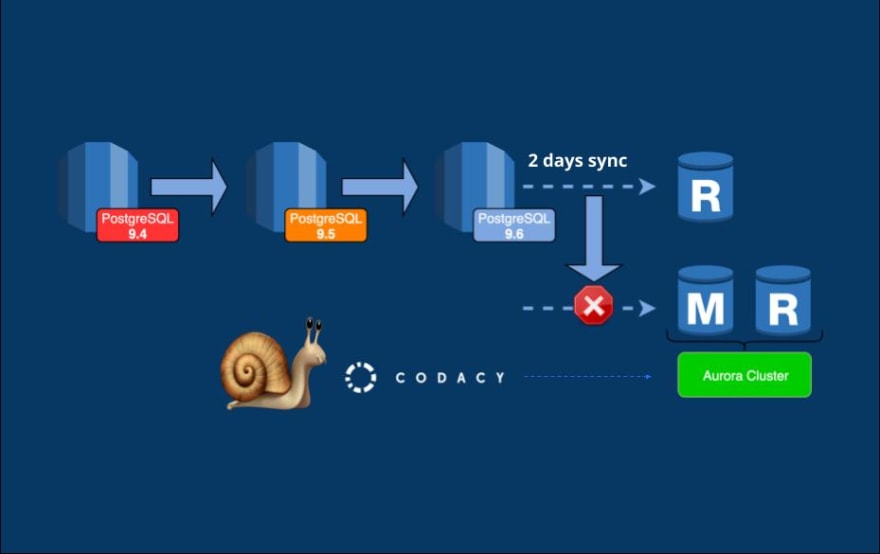

As previously referred, we had to upgrade our database from Postgres 9.4 to 9.6. This basically consisted in running two commands using the AWS CLI, as described here, being the first the upgrade to 9.5 and then to 9.6. Since the whole process for the upgrade could take around 1 hour of downtime, we planned to do it on a Saturday morning when we had less people running analysis, warned our users some days before, deployed Codacy's maintenance page and started the upgrade.

Oops! 😱 After the upgrade, Codacy was looking really slow, taking several minutes to load data from the database. After some minutes, completely clueless, we decided to revert everything and investigate the problem later. 😞

After taking a better look to the aws official docs, we noticed a useful recommendation about running the ANALYZE operation to refresh the pg_statistic table. This internal table is important for query performance since it stores statistics that are used by the query planner (you can read more about it here).

Before trying this again, we tested the ANALYZE operation on a test database and noticed that it could take several hours to finish. Therefore, we started to research ways to run it faster and we ended up using the vacuumdb utility. Here's the command that we ran:

vacuumdb -Ze -h analysisdb --analyze-in-stages -j 20

These are the important parts:

-

analyze-in-stages: some statistics are created as fast as possible, to make the database usable and then, the full statistics are be produced in the subsequent stages. There are 3 stages in total and after the first one, we verified that the database had already an acceptable performance. And it took only some seconds to finish this first stage! -

-j 20: it will start 20 jobs to run the analyze command in parallel, reducing the time of the processing.

2nd round

After successfully upgrading the database, the second, and final, step was to create an Amazon Aurora PostgreSQL Read Replica using the previous upgraded database as a source. It took around two entire days to the lag between the RDS instance and the Aurora Read Replica got close to 0.

When it happened, we promoted the read replica to master, breaking the link with the source database and therefore, stopping the replication. In this last step we had to stop every write operation on the source database before doing the promotion, to guarantee the full replication of the two instances. Again, we had to do this last step in another Saturday morning, since it required some minutes of downtime.

After removing the maintenance page, we started testing Codacy, now running on Aurora, and we faced performance issues, again. Our first thought was that we would have to run the ANALYZE operation again, and so we did. But it was still slow. 😨

We decided to execute an EXPLAIN to show the execution plan of a select statement over one of the most used tables and we noticed that the query planner was mistakenly preferring sequential scans over index scans. After some more investigation in place we found the root cause of this issue: with the migration we "lost" the previous parameter group that we were using for RDS and started using the default. In that parameter group, we had the random_page_cost set to 1, provided that the default PostgreSQL value of 4 is tuned for HDD, where random access to disk is more expensive than sequential access. Since Aurora is using solid state drives behind the scenes we had to reduce this parameter to 1 again to start using the indexes instead of doing sequential scans. 🎊

Here's another "funny" story: while we were struggling with the migration and almost running out of time, AWS announced another storage limit increase on RDS for up to 32Tb. However, this didn't came out of the box and the AWS support team had to upgrade the underlying filesystem of the database from 32 to 64 bits to be able to use more than 16Tb. We had to stop any transactions on the database for this upgrade to happen on their side and the whole operation took around 2 hours of downtime. Despite this limit increase, we were already commited with the migration to Aurora and decided to go for it. This also gave us a bit more time to prepare it and we ended up doing it only on May 2019, with a bit more than 18Tb on the database.

Wins

Besides having automatic storage provisioning as needed, up to 64Tb, what are the other positive outcomes of this migration?

Performance Insights

Previously, we were using pgbadger to generate HTML reports from the logs and get useful statistics about query performance on the database. With Aurora we got Performance Insights out of the box and we are now able to get useful, always updated, statistics straight from the AWS Management Console.

Write latency

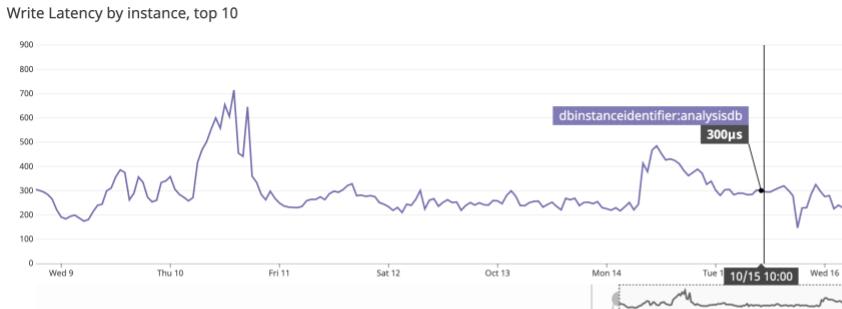

We also had some performance improvements, especially regarding to the latency of the write operations.

As it is visible, the latency was slowly increasing on RDS, reaching an average of 23ms on November 2018.

On Aurora, the write latency is now in the order of microseconds. Not bad! 😄

Future

And that's it! What should we be doing next?

Aurora Serverless

Databases on AWS can get expensive, especially with the large amounts of data that we have on some databases. Using Aurora Serverless, since it is able to seamlessly scale compute and memory capacity as needed, we could reduce the resources for our database automatically when we have less load on it, for instance, during weekends.

Consider different types of databases, other than Postgres

Postgres is great, but we are using it for almost everything and, in some situations, maybe we shouldn't. To be able to properly scale horizontally, we should start considering other types of databases, like NoSQL databases such as MongoDB, DocumentDB or CouchDB.

Top comments (1)

Nice work. Learned new things!